Documentation

Welcome to the documentation of the Computer Vision Annotation Tool (CVAT).

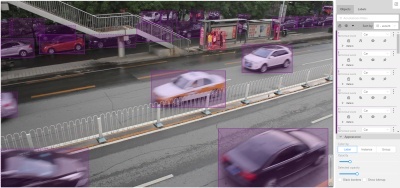

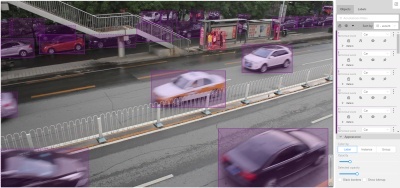

CVAT is a free, online, interactive video and image annotation tool for computer vision.

It is being developed and used by Intel to annotate millions of objects with different properties.

Many UI and UX decisions are based on feedback from a professional data annotation team.

Try it online at app.cvat.ai.

Our documentation provides information for AI researchers, system administrators, developers, and both basic and advanced users.

The documentation is divided into three sections, and each section is divided into basic and advanced subsections.

Basic information and sections needed for a quick start.

Answers to frequently asked questions.

Computer Vision Annotation Tool GitHub repository.

This section contains documents for CVAT simple and advanced users.

This section contains documents for system administrators.

This section contains documents for developers.

1 - Getting started

This section contains basic information and links to sections necessary for a quick start.

Installation

The first step is to install CVAT on your system:

To learn how to create a superuser and log in to CVAT, go to the authorization section.

Getting started in CVAT

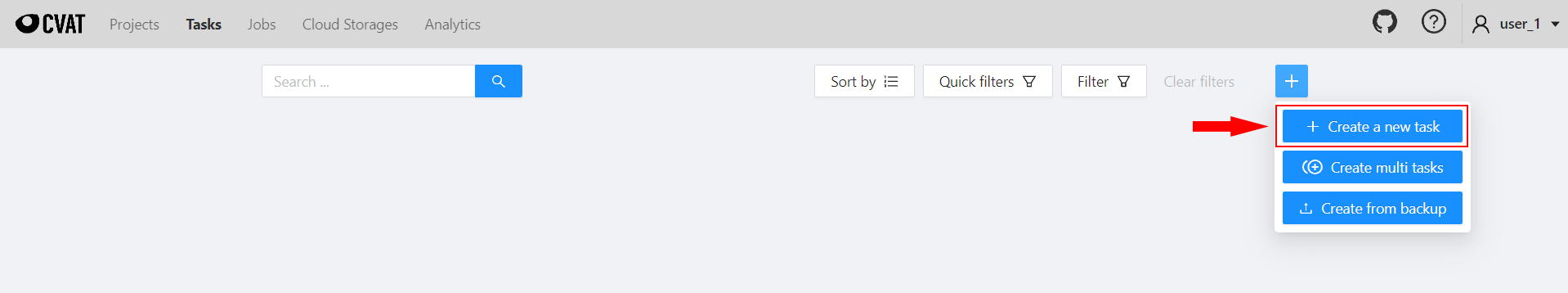

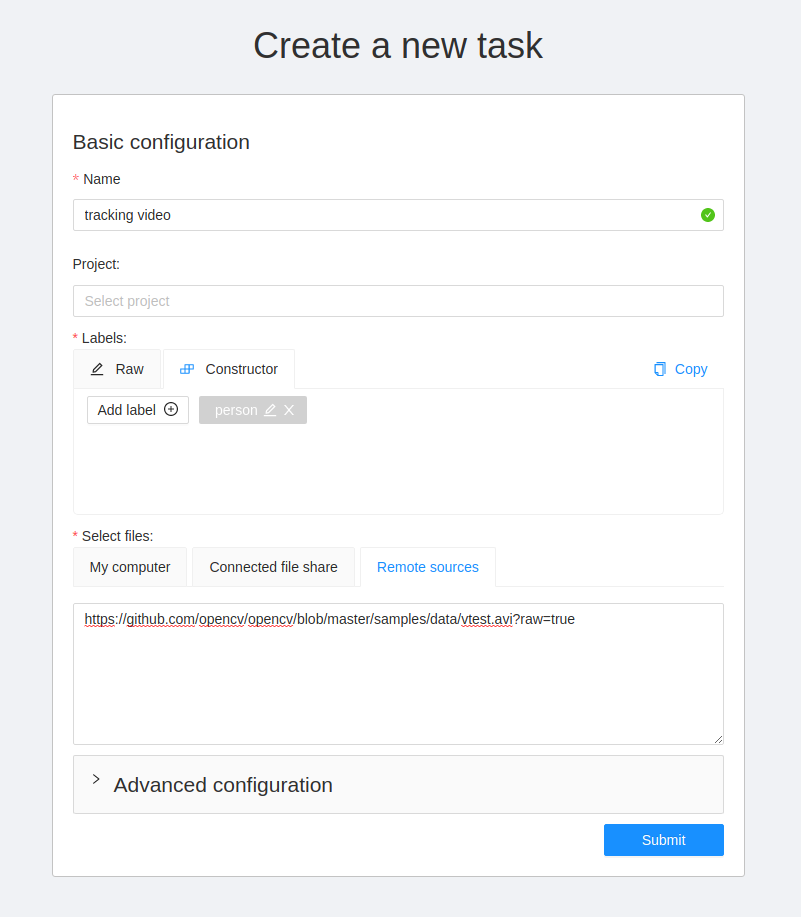

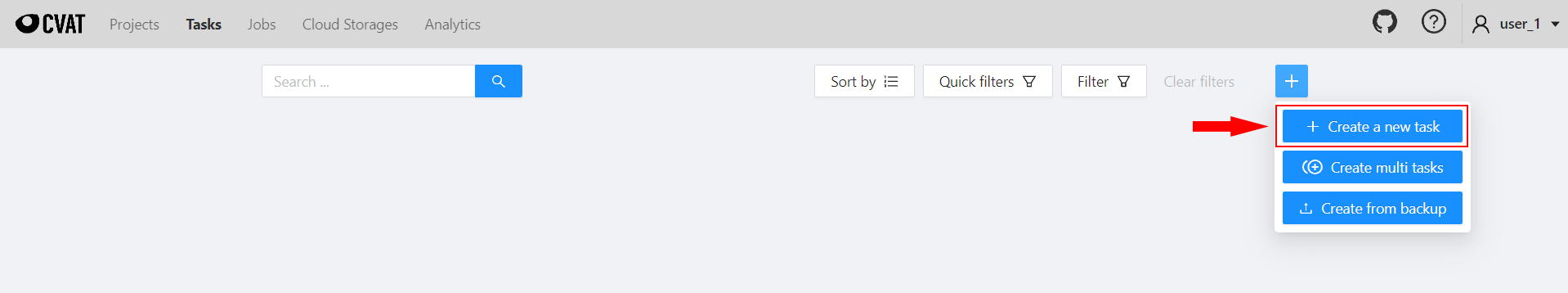

To create a task, go to the Tasks section. Click Create new task to go to the task creation page.

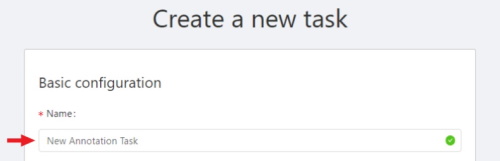

Set the name of the new task.

Set the label using the constructor: first click Add label, then enter the name of the label and choose the color.

Upload the images or videos you want to annotate. To do so, drag and drop the files.

To learn more, go to creating an annotation task.

Annotation

Basic

When the task is created, you will see a corresponding message in the top right corner.

Click the Open task button to go to the task page.

Once on the task page, open a link to the job in the jobs list.

Choose the section that matches your type of task and start annotating.

Advanced

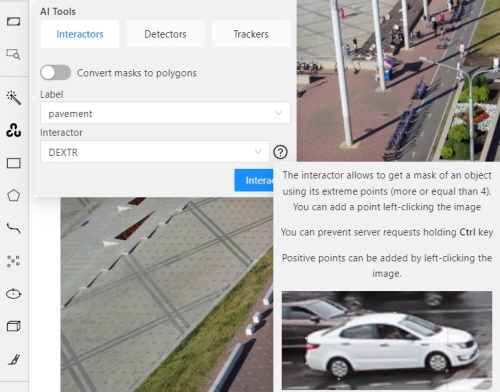

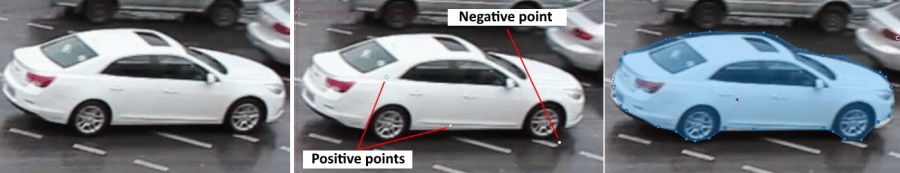

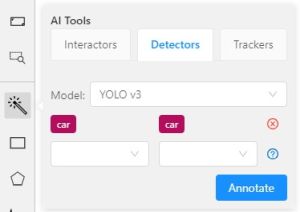

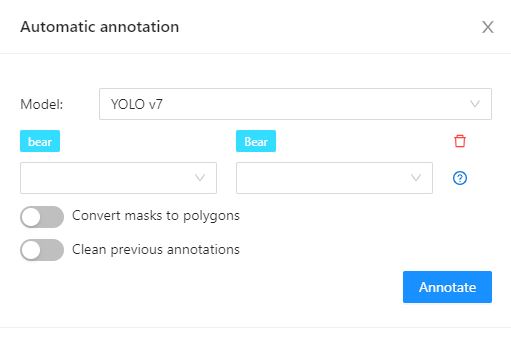

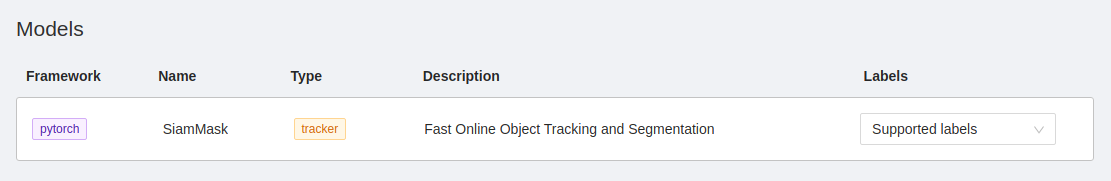

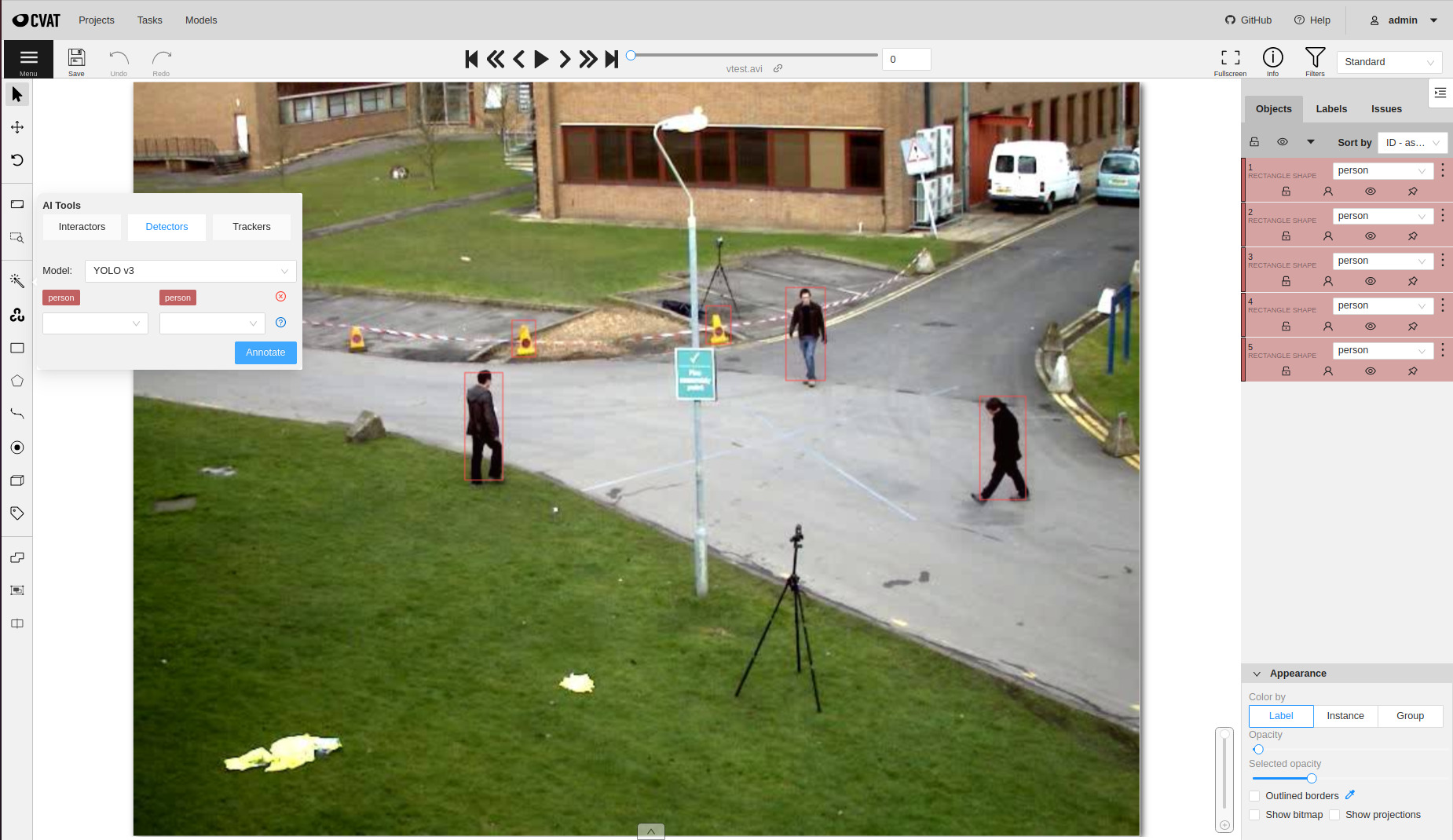

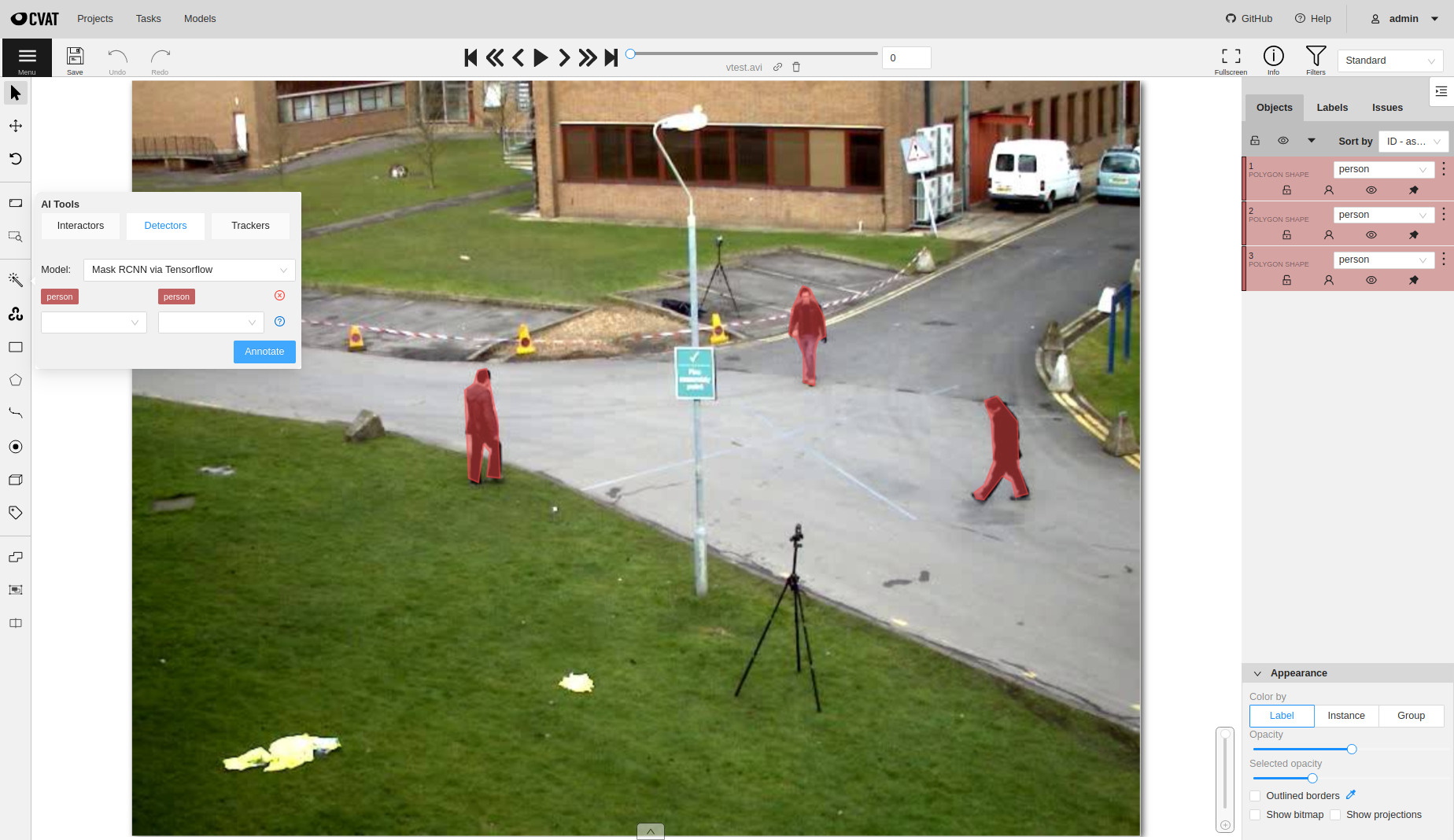

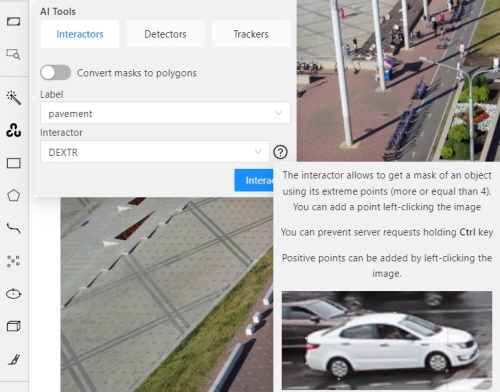

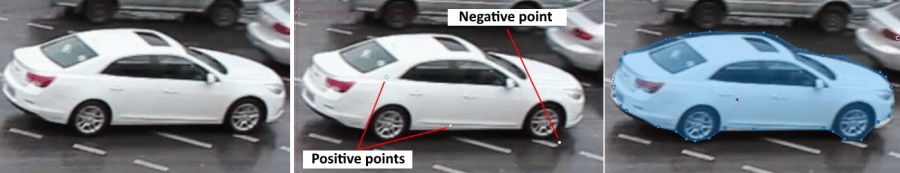

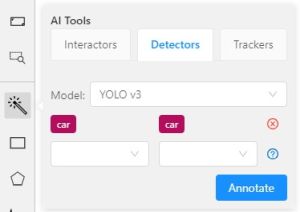

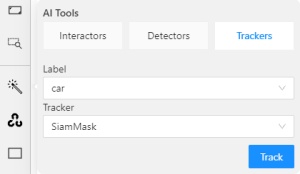

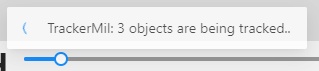

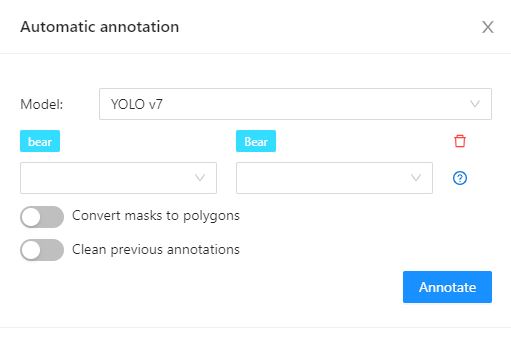

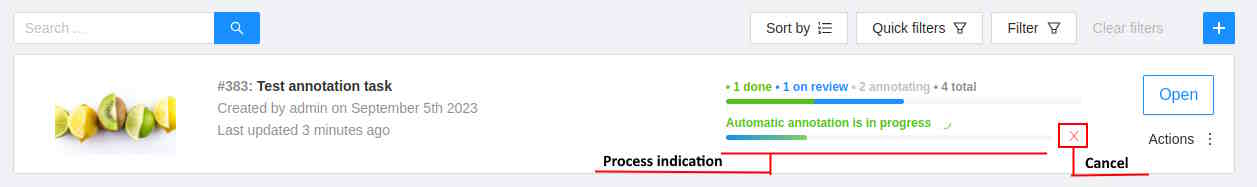

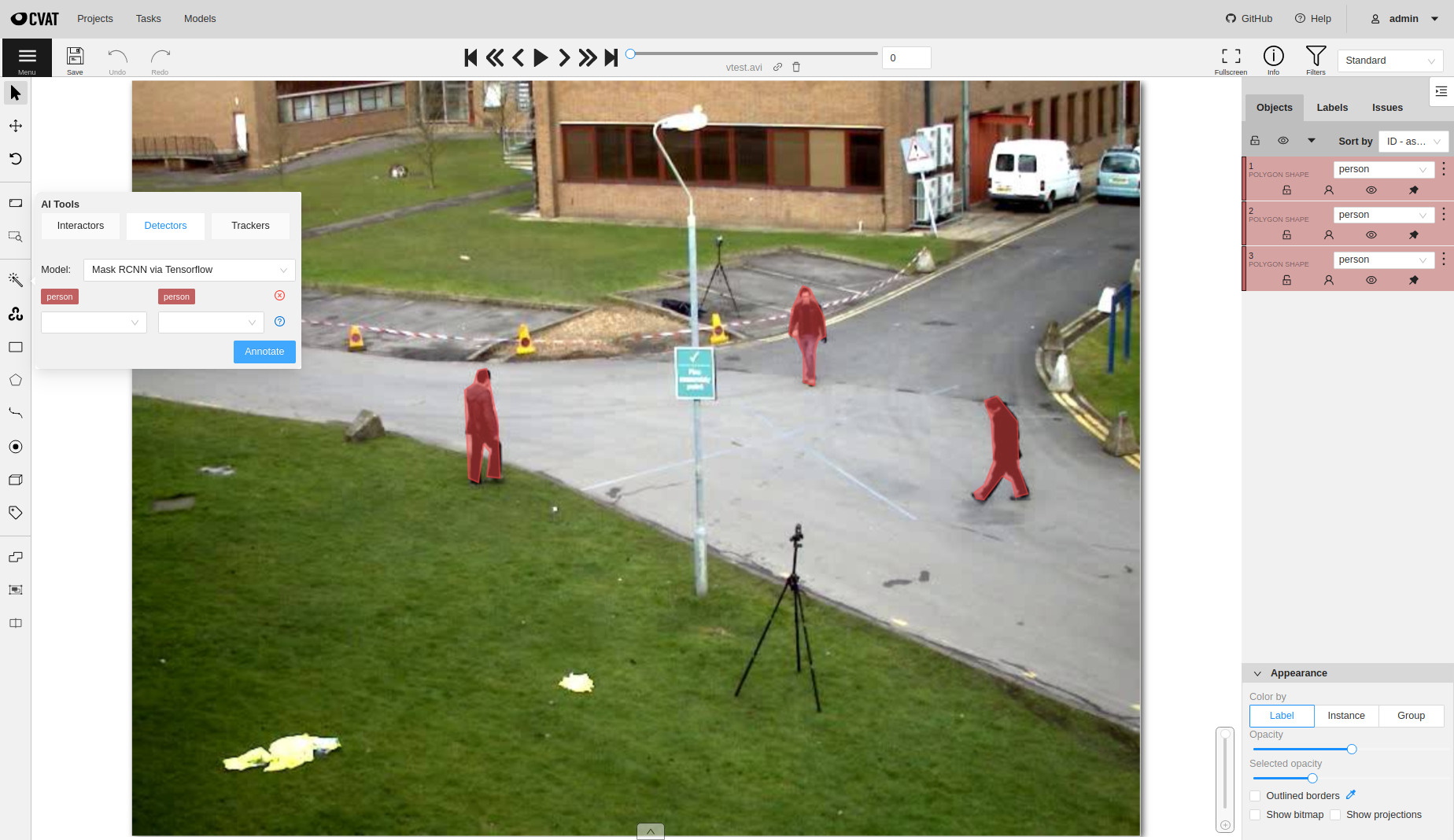

CVAT supports automatic and semi-automatic annotation, which can speed up the annotation process:

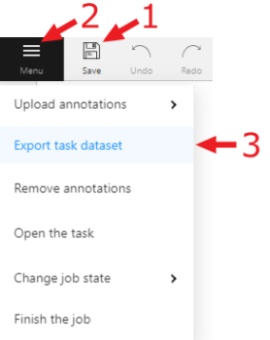

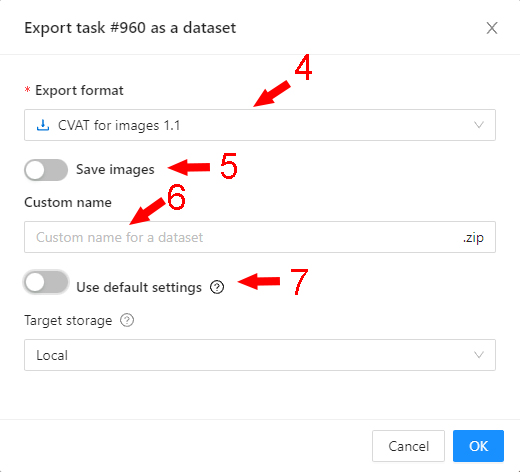

Export dataset


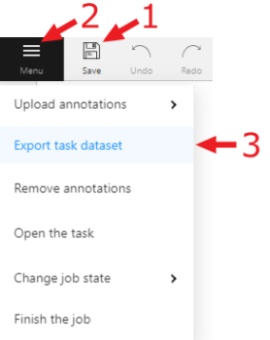

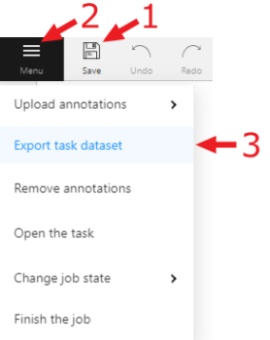

To download the annotations, first save all changes.

Click the Save button or press Ctrl+S to save annotations quickly.

After you have saved the changes, click the Menu button.

Then click the Export dataset button.

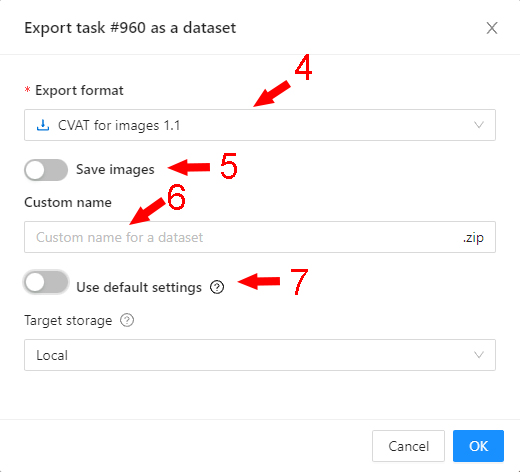

Finally, choose the dataset format.

Export is available in any format from the list of supported formats.

To learn more, go to the export/import datasets section.

2 - Manual

This section contains documents for both basic and advanced CVAT users.

2.1 - Basics

This section contains basic documents for CVAT users.

2.1.1 - Authorization


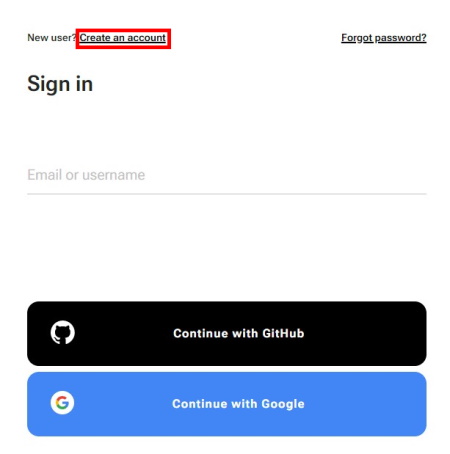

First, log in to CVAT.

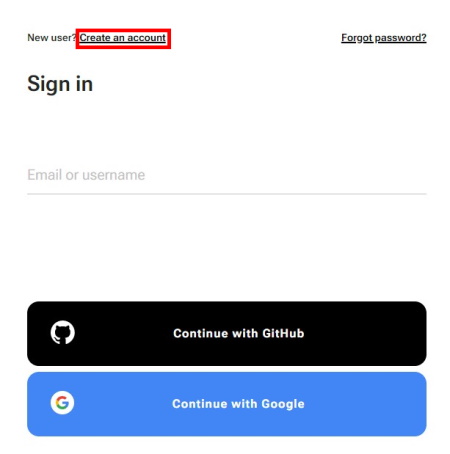


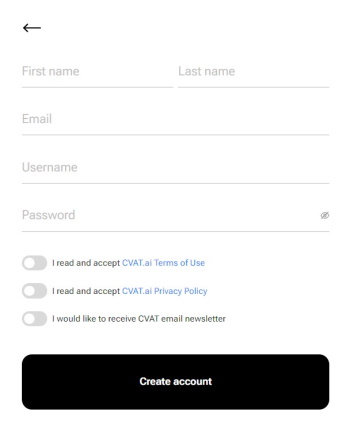

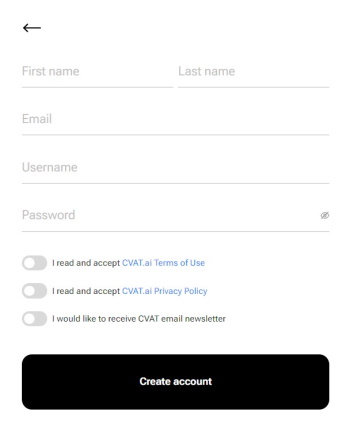

To register a new user, click Create an account.

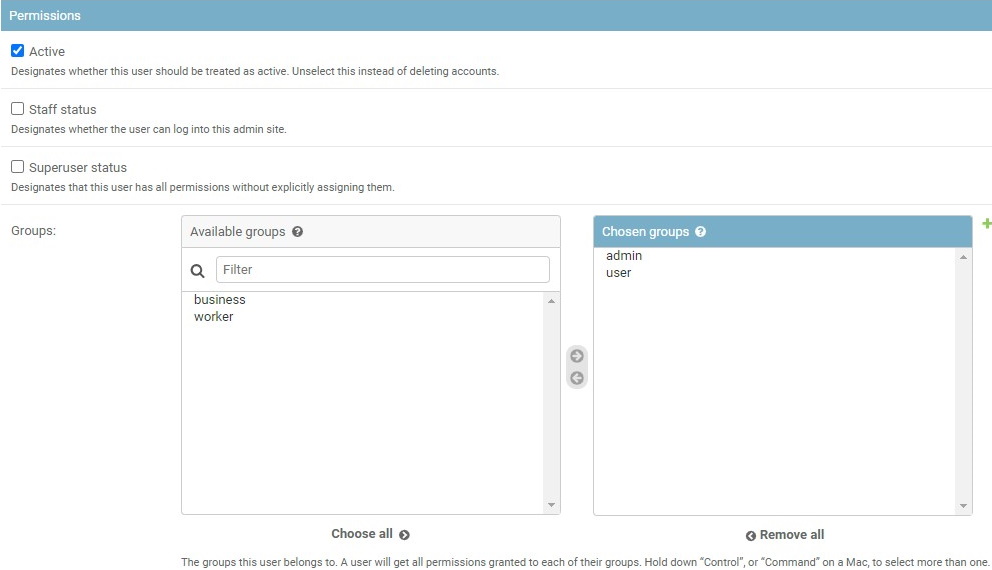

You can register a user, but by default the user will not have rights even to view the list of tasks. Therefore, you should create a superuser. The superuser can use the Django administration panel to assign the correct groups to the user. Use the command below to create an admin account:

```shell
docker exec -it cvat_server bash -ic 'python3 ~/manage.py createsuperuser'
```

If you want to create a non-admin account, you can do so using the Create an account link on the login page. Don’t forget to modify the permissions for the new user in the administration panel. There are several groups (also known as roles): admin, user, annotator, and observer.

Administration panel

Go to the Django administration panel. There you can:

2.1.2 - Creating an annotation task

Instructions on how to create and configure an annotation task.

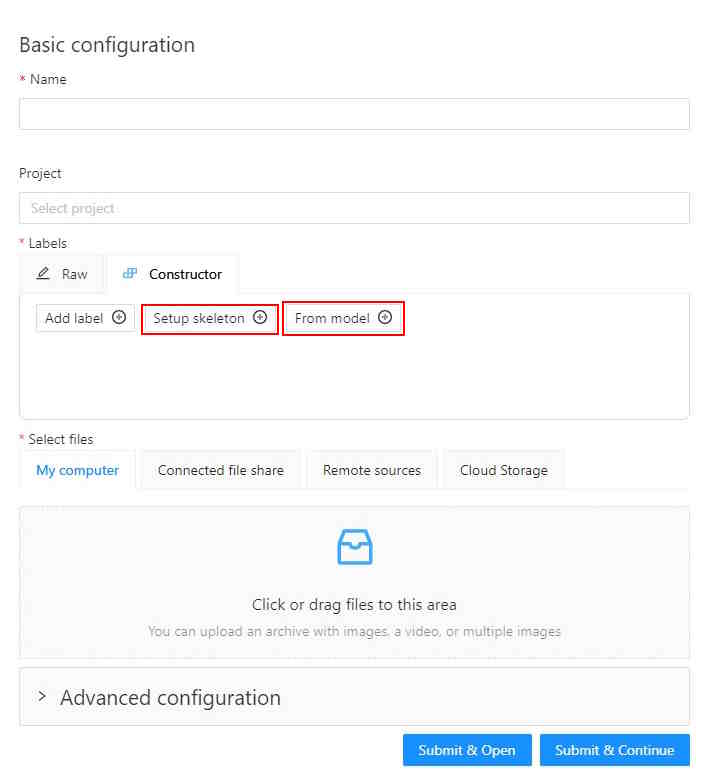

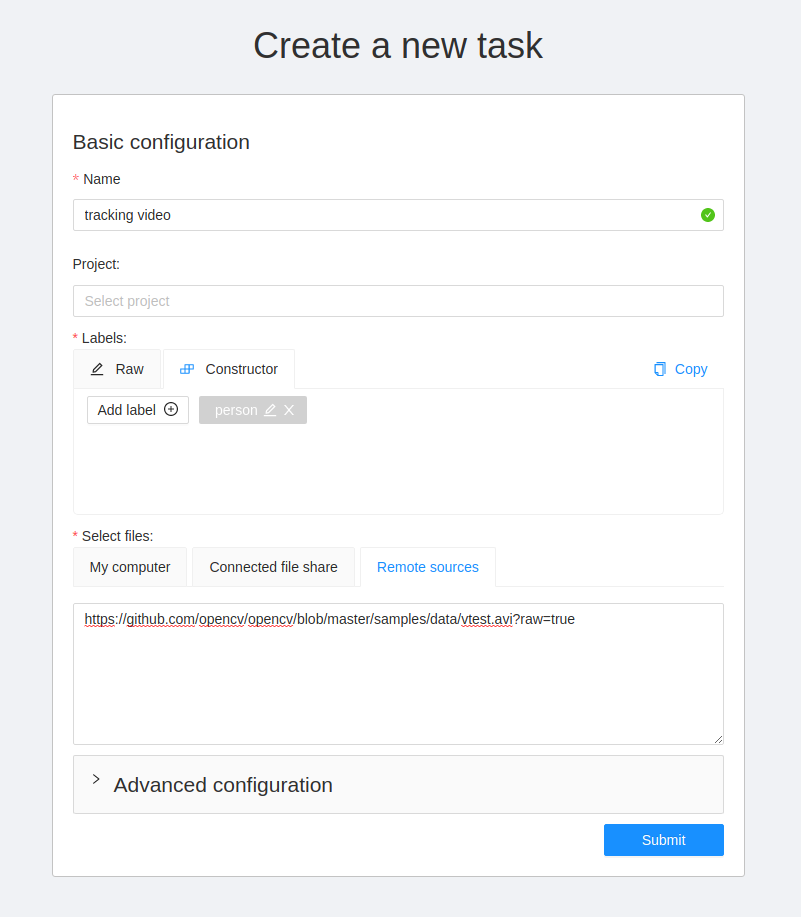

To create an annotation task, click the + button and select Create new task on the tasks page or on the project page.

Note that the task will be created in the organization that you selected at the time of creation.

Read more about organizations.

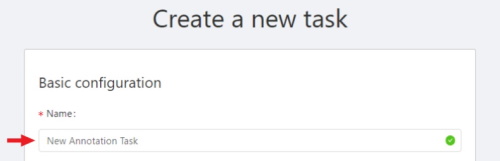

Specify parameters of the task:

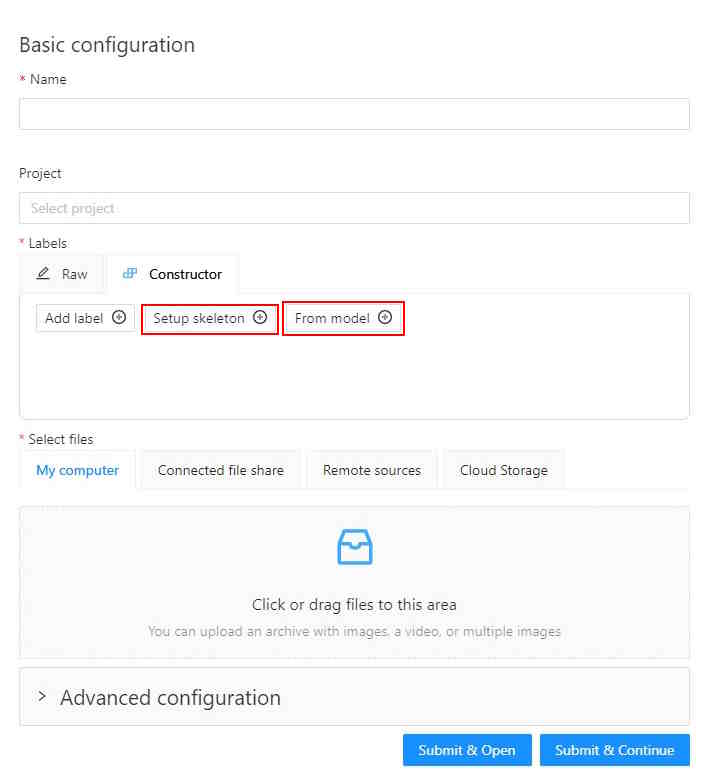

Basic configuration

Name

The name of the task to be created.

Projects

The project that this task will be related to.

Labels

There are two ways of working with labels (available only if the task is not related to a project):

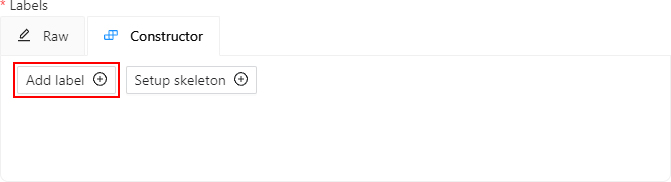

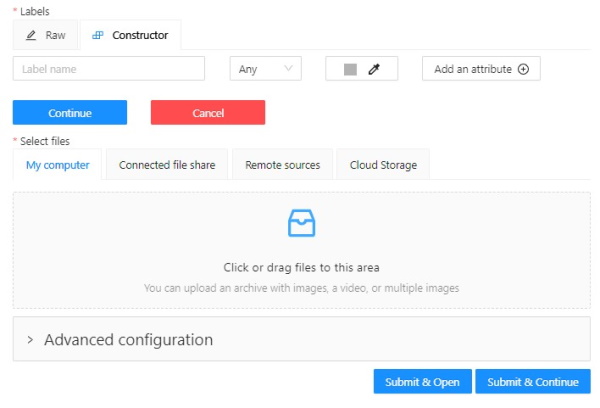

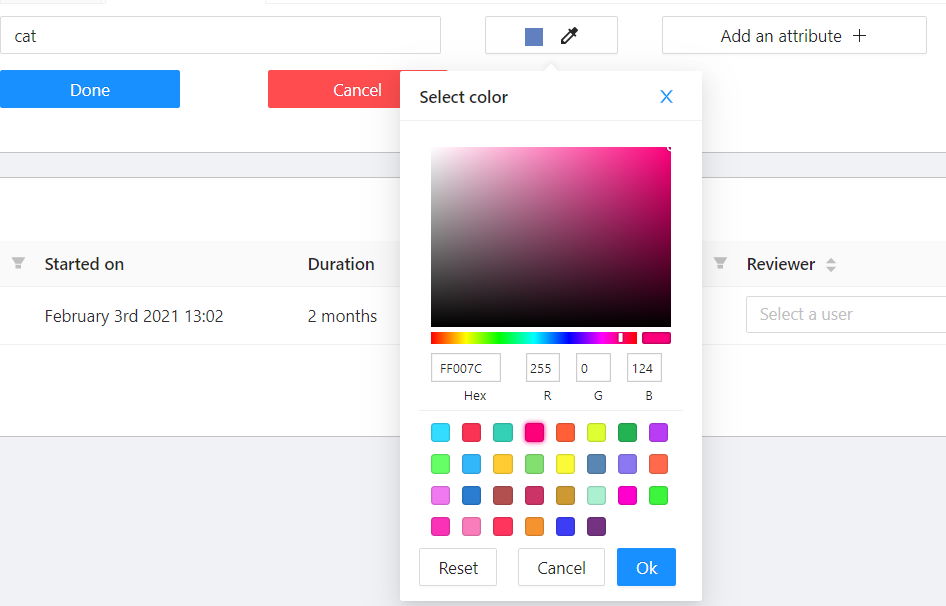

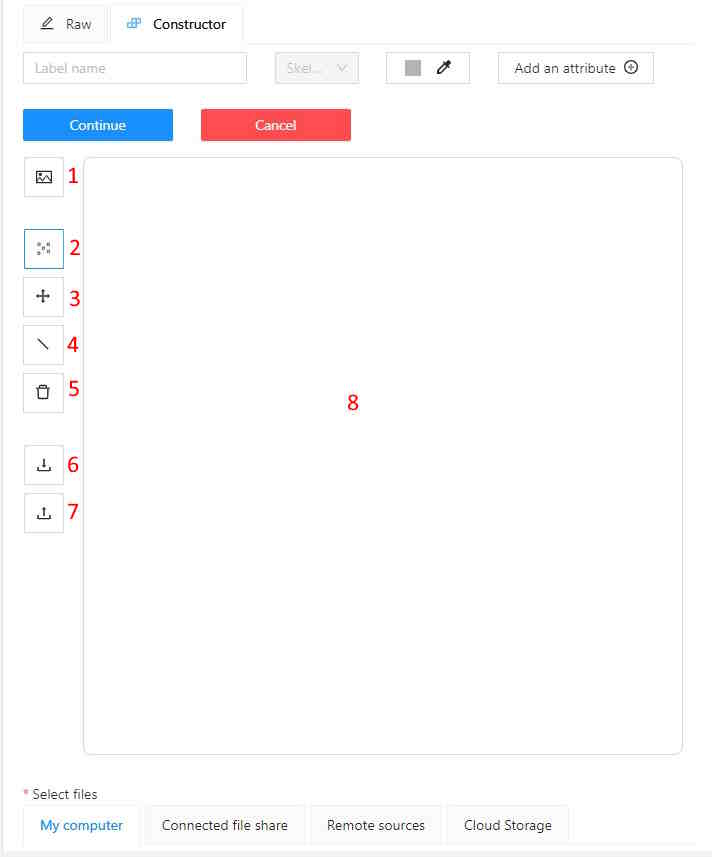

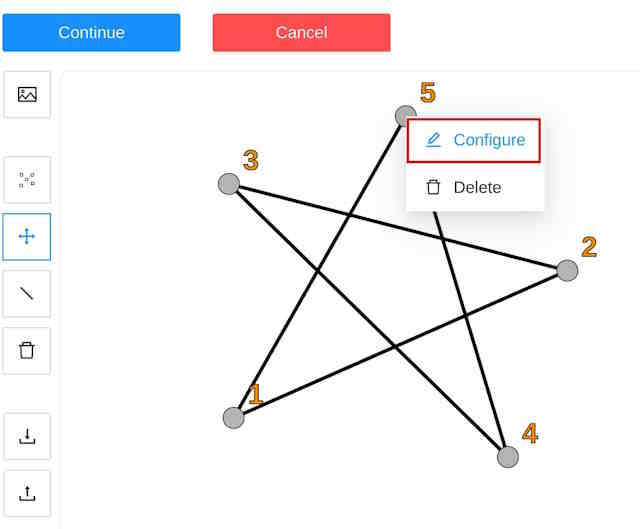

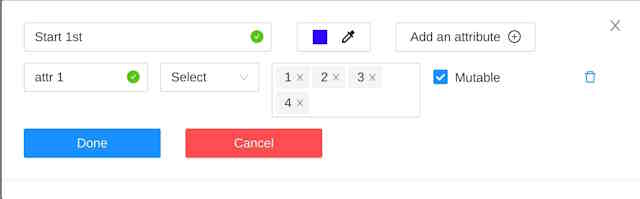

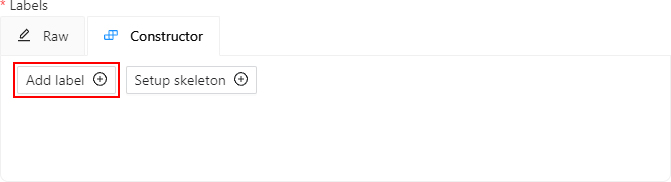

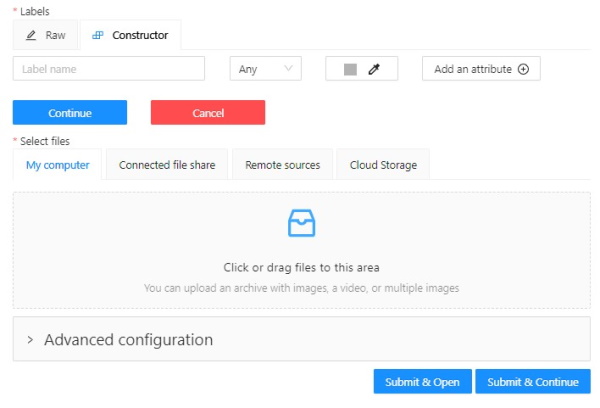

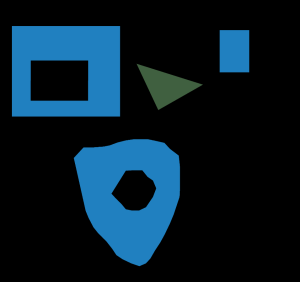

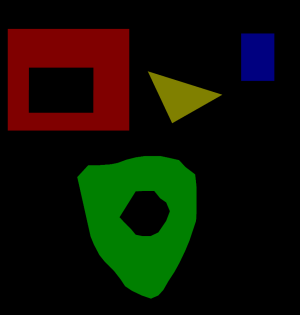

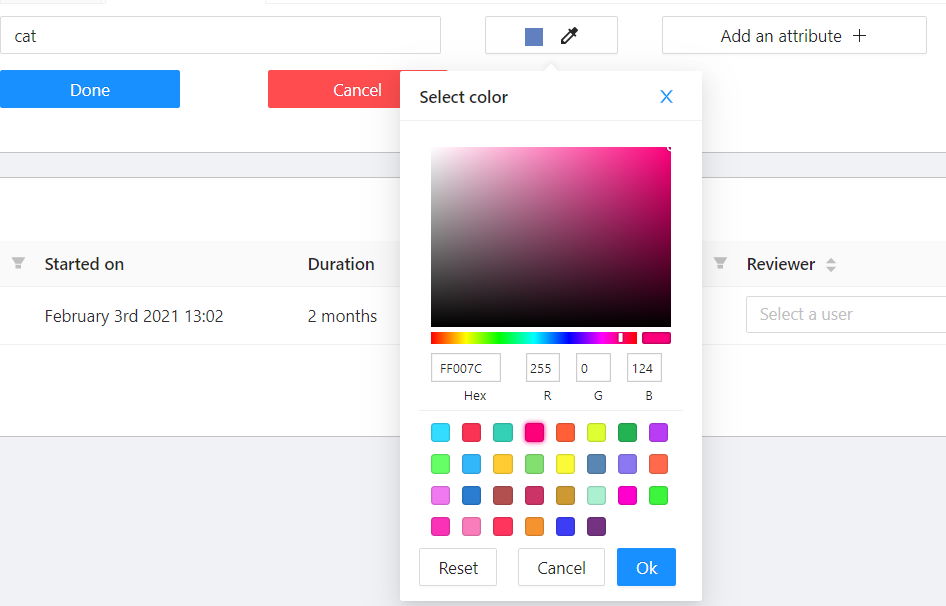

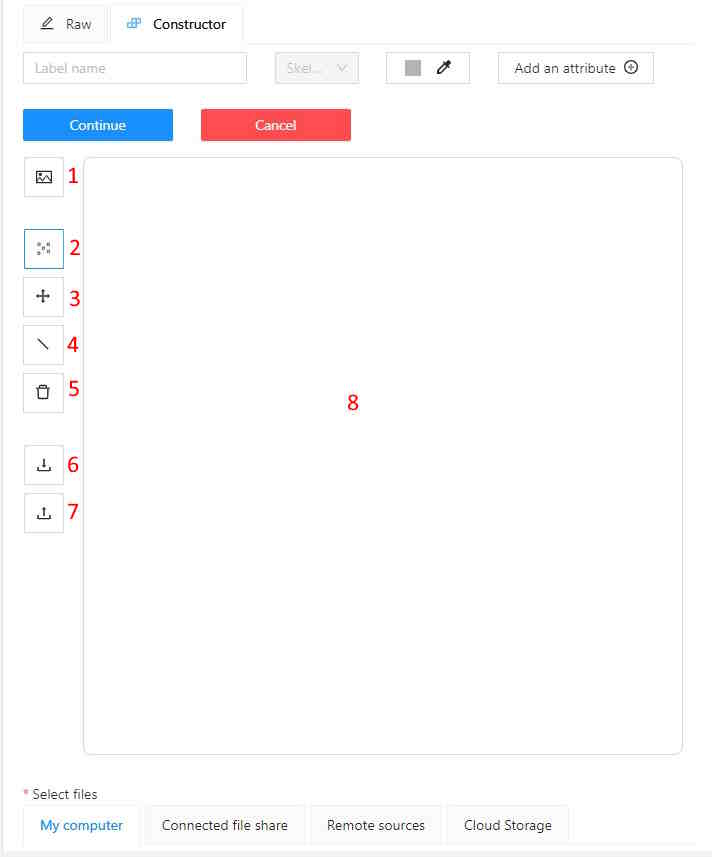

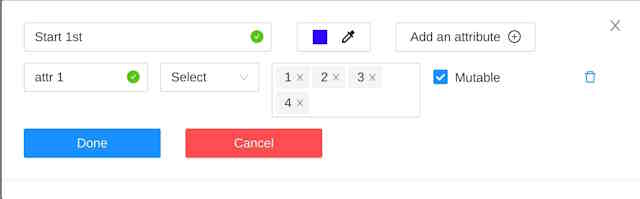

The Constructor is a simple way to add and adjust labels. To add a new label, click the Add label button.

You can set the name of the label in the Label name field and choose a color for each label.

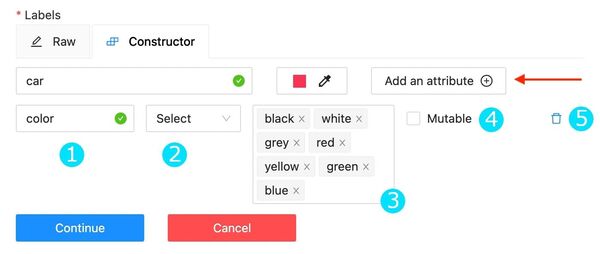

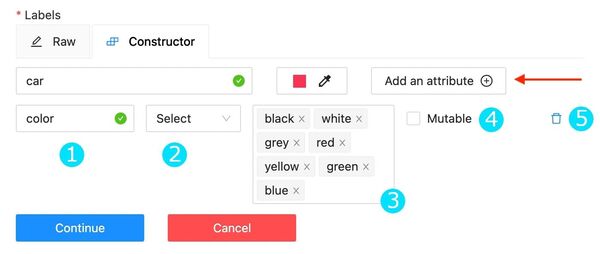

If necessary, you can add an attribute and set its properties by clicking Add an attribute:

The following actions are available here:

- Set the attribute’s name.
- Choose how the attribute is displayed:
  - Select: a drop-down list of values.
  - Radio: used when you need to choose exactly one option out of several suggested ones.
  - Checkbox: used when you need to choose any number of the suggested options.
  - Text: used when the attribute is entered as text.
  - Number: used when the attribute is entered as a number.
- Set values for the attribute. Separate the values by pressing Enter. Each entered value is displayed as a separate element, which can be deleted by pressing Backspace or clicking the close button (x). If the attribute is displayed as Text or Number, the entered value is shown as text by default (for example, you can use it to specify the text format).
- Select the Mutable checkbox if the attribute can change from frame to frame.
- Delete the attribute by clicking the close button (x).

Click the Continue button to add more labels.

To cancel adding a label, click the Cancel button.

After all the necessary labels are added, click the Done button.

After you click Done, the added labels are displayed as separate elements of different colors.

You can edit or delete labels by clicking Update attributes or Delete label.

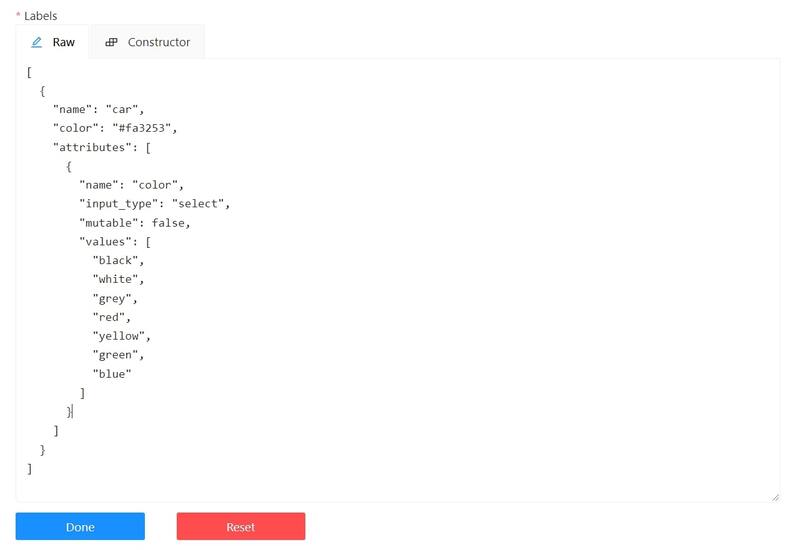

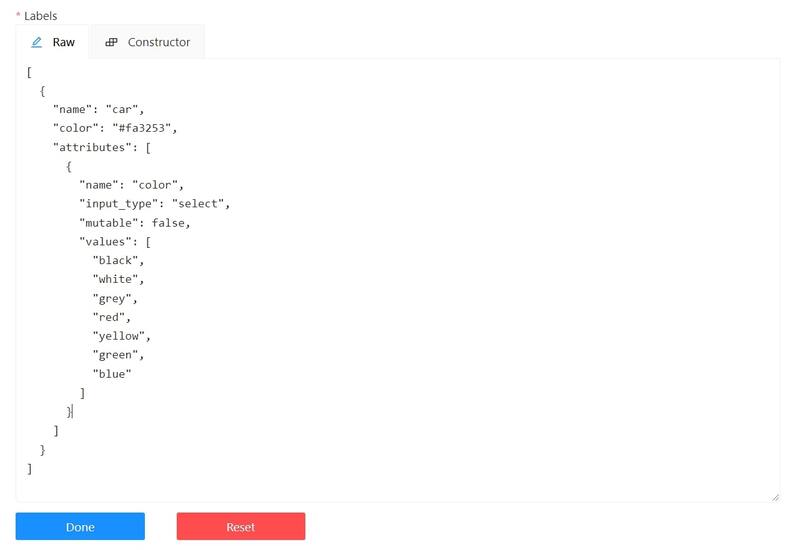

Raw mode is a way of working with labels for advanced users.

Raw mode presents label data in JSON format, so you can edit and copy labels as text.

The Done button applies the changes and the Reset button cancels the changes.
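For illustration, the sketch below builds a label list in Python and serializes it to JSON of the kind the Raw editor shows. The field names (`name`, `color`, `attributes`, `input_type`, `mutable`, `values`) are assumptions based on typical Raw-mode output; compare them with the JSON your own CVAT instance displays before pasting anything back. The labels and colors are hypothetical.

```python
import json

# Hypothetical labels; field names are assumed from typical Raw-mode output.
labels = [
    {
        "name": "car",
        "color": "#ff6037",
        "attributes": [
            {
                "name": "model",
                "input_type": "select",    # drop-down list of values
                "mutable": False,          # does not change from frame to frame
                "values": ["sedan", "truck", "bus"],
            },
            {
                "name": "parked",
                "input_type": "checkbox",
                "mutable": True,           # may change from frame to frame
                "values": ["false"],
            },
        ],
    },
    {"name": "person", "color": "#3d3df5", "attributes": []},
]

text = json.dumps(labels, indent=2)  # text to paste into the Raw editor
parsed = json.loads(text)            # text copied from the editor parses back
print(parsed[0]["attributes"][0]["values"])  # ['sedan', 'truck', 'bus']
```

Building the JSON programmatically is mainly useful when many labels share the same attributes; the Constructor remains the simpler option for a handful of labels.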

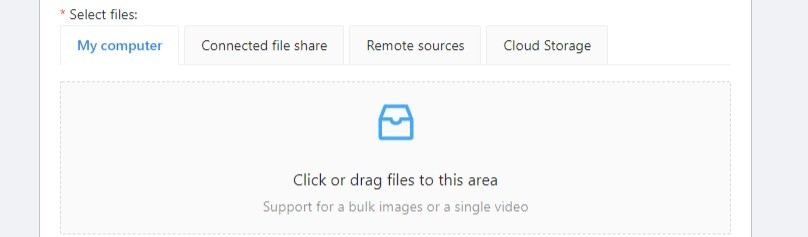

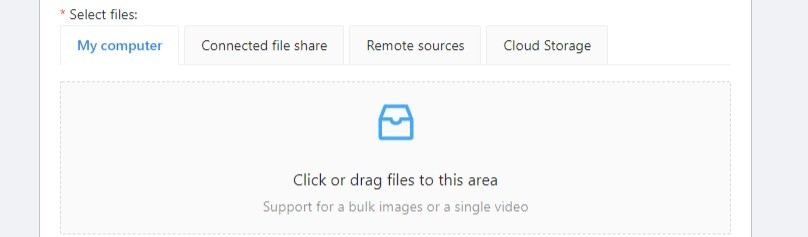

Select files

Select the My computer tab to choose files for annotation from your PC.

If you select the Connected file share tab, you can choose files for annotation from your network.

If you select Remote source, you’ll see a field where you can enter a list of URLs (one URL per line).
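A minimal sketch of how the Remote source field can be read, assuming each non-empty line holds one http(s) URL. `parse_remote_sources` is a hypothetical helper, not part of CVAT; it can be used to check a URL list before pasting it into the field.

```python
from typing import List
from urllib.parse import urlparse


def parse_remote_sources(text: str) -> List[str]:
    """Split the Remote source field into URLs, one per non-empty line."""
    urls = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        url = line.strip()
        if not url:
            continue  # tolerate blank lines
        parsed = urlparse(url)
        # Assumption: only http(s) URLs with a host are accepted.
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            raise ValueError(f"line {line_no}: not an http(s) URL: {url!r}")
        urls.append(url)
    return urls


field = "https://example.com/a.jpg\n\n  http://example.com/b.png  \n"
print(parse_remote_sources(field))  # ['https://example.com/a.jpg', 'http://example.com/b.png']
```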

If you upload a video or a dataset with images and select the Use cache option, you can attach a manifest.jsonl file.

You can find how to prepare it here.

If you select the Cloud Storage tab, you can select a cloud storage (to do so, type the cloud storage name), then choose the manifest file and select the required files.

For more information on how to attach a cloud storage, see the attach cloud storage section.

To create a 3D task, you must prepare an archive with one of the following directory structures:

VELODYNE FORMAT

Structure:

```
velodyne_points/
    data/
        image_01.bin
IMAGE_00 # unknown dirname,
         # generally image_01.png can be under IMAGE_00, IMAGE_01, IMAGE_02, IMAGE_03, etc
    data/
        image_01.png
```

3D POINTCLOUD DATA FORMAT

Structure:

```
pointcloud/
    00001.pcd
related_images/
    00001_pcd/
        image_01.png # or any other image
```

3D, DEFAULT DATAFORMAT Option 1

Structure:

```
data/
    image.pcd
    image.png
```

3D, DEFAULT DATAFORMAT Option 2

Structure:

```
data/
    image_1/
        image_1.pcd
        context_1.png # or any other name
        context_2.jpg
```

You can’t mix 2D and 3D data in the same task.

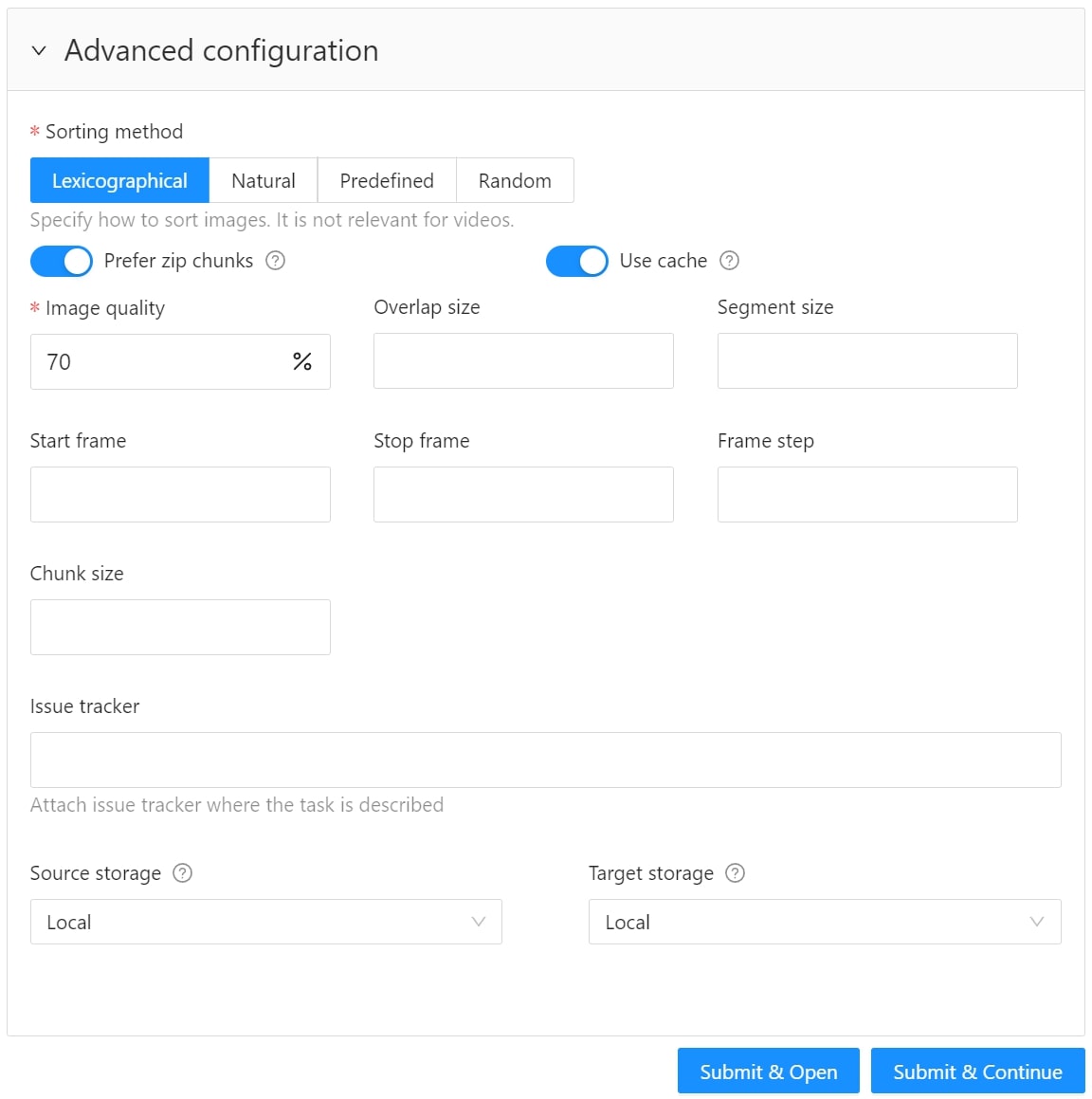

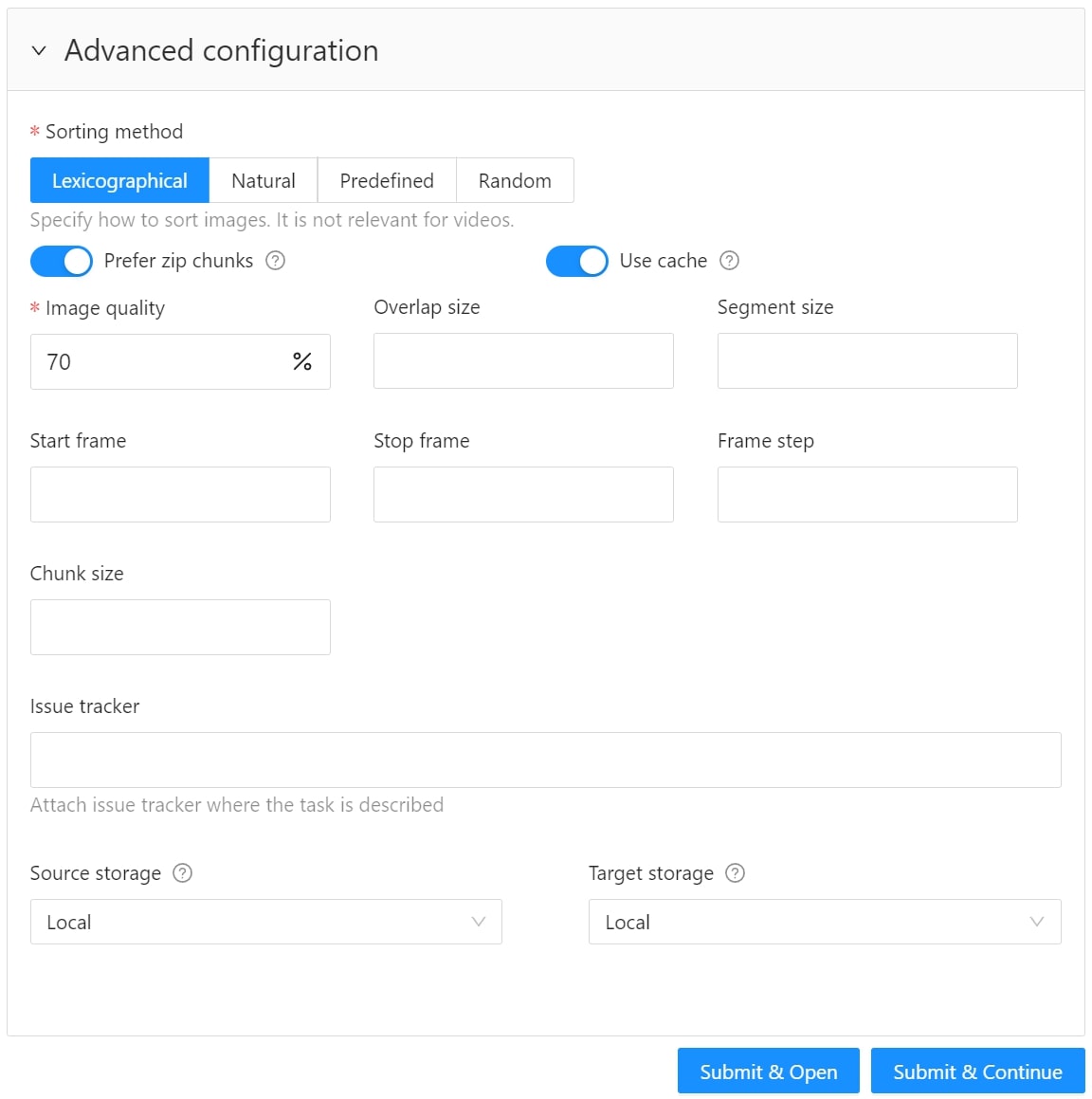

Advanced configuration

Sorting method

Option to sort the data. It is not relevant for videos.

For example, the sequence 2.jpeg, 10.jpeg, 1.jpeg after sorting will be:

- lexicographical: 1.jpeg, 10.jpeg, 2.jpeg
- natural: 1.jpeg, 2.jpeg, 10.jpeg
- predefined: 2.jpeg, 10.jpeg, 1.jpeg
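The three orderings can be reproduced in Python. `natural_key` is a hypothetical helper that compares digit runs numerically; it illustrates the idea and is not CVAT's own sorting code.

```python
import re


def natural_key(name: str):
    """Split a file name into text and number runs so numbers compare by value."""
    return [int(part) if part.isdecimal() else part.lower()
            for part in re.split(r"(\d+)", name)]


files = ["2.jpeg", "10.jpeg", "1.jpeg"]

lexicographical = sorted(files)           # plain string comparison
natural = sorted(files, key=natural_key)  # numbers compared as numbers
predefined = list(files)                  # order in which the files were given

print(lexicographical)  # ['1.jpeg', '10.jpeg', '2.jpeg']
print(natural)          # ['1.jpeg', '2.jpeg', '10.jpeg']
print(predefined)       # ['2.jpeg', '10.jpeg', '1.jpeg']
```

Because `re.split` with a capturing group always alternates text and number runs, the keys of two names compare position by position without mixing types.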

Use zip/video chunks

Forces the use of zip chunks as compressed data. Relevant for videos only.

Use cache

Defines how to work with data. Select the checkbox to switch to on-the-fly data processing, which reduces the task creation time (chunks are prepared when requests are received) and stores data in a cache of limited size with a policy of evicting less popular items.

See more here.
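The on-the-fly behavior can be pictured as a lazily filled, size-limited cache. The sketch below is an illustration under stated assumptions, not CVAT's implementation: `ChunkCache` and `build_chunk` are hypothetical names, and least-recently-used eviction stands in for the "less popular items" policy.

```python
from collections import OrderedDict
from typing import Callable


class ChunkCache:
    """Size-limited cache that builds each chunk only when it is first requested.

    Eviction is least-recently-used here, a stand-in for the
    "less popular items" policy described above.
    """

    def __init__(self, build_chunk: Callable[[int], bytes], max_items: int):
        self._build = build_chunk
        self._max = max_items
        self._items = OrderedDict()  # chunk_id -> bytes, oldest use first

    def get(self, chunk_id: int) -> bytes:
        if chunk_id in self._items:
            self._items.move_to_end(chunk_id)  # mark as recently used
            return self._items[chunk_id]
        chunk = self._build(chunk_id)          # prepared only on request
        self._items[chunk_id] = chunk
        if len(self._items) > self._max:
            self._items.popitem(last=False)    # evict the least recently used
        return chunk


built = []


def build_chunk(chunk_id: int) -> bytes:
    built.append(chunk_id)  # record every (expensive) build
    return bytes([chunk_id])


cache = ChunkCache(build_chunk, max_items=2)
for chunk_id in (0, 1, 0, 2, 1):
    cache.get(chunk_id)
print(built)  # [0, 1, 2, 1]
```

Task creation stays fast because no chunk is built up front; the cost moves to the first request for each chunk.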

Image Quality

Use this option to specify the quality of uploaded images.

The option helps to load high-resolution datasets faster.

Use a value from 5 (almost completely compressed images) to 100 (uncompressed images).

Overlap Size

Use this option to create overlapping segments. The option makes tracks continuous from one segment to the next. Use it for interpolation mode. The parameter works as follows:

- For an interpolation task (video sequence): if you annotate a bounding box on two adjacent segments, they will be merged into one bounding box. If the overlap equals zero or the annotation on adjacent segments is poor, the dumped annotation file will contain several tracks for the object, one for each segment.
- For an annotation task (independent images): if an object exists on overlapped segments, the overlap is greater than zero, and the annotation on adjacent segments is good enough, the shapes will be automatically merged into one object. If the overlap equals zero or the annotation on adjacent segments is poor, the dumped annotation file will contain several bounding boxes for the same object.

For example, you annotate an object on the first segment and then annotate the same object on the second segment. If you do it correctly, the annotations will contain one track. If the annotations on different segments (on overlapped frames) are very different, you will have two shapes for the same object.

This functionality works only for bounding boxes. Polygons, polylines, and points don’t support automatic merging on overlapped segments, even if the overlap parameter isn’t zero and the corresponding shapes on adjacent segments match perfectly.
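The merge described above depends on how closely the boxes on the overlapping frames match. A common way to quantify this is intersection over union (IoU). The sketch below uses IoU with a hypothetical 0.5 threshold to decide whether two boxes on the same overlapped frame describe one object; it illustrates the idea only and is not CVAT's merging code.

```python
from typing import NamedTuple


class Box(NamedTuple):
    xtl: float  # top-left x
    ytl: float  # top-left y
    xbr: float  # bottom-right x
    ybr: float  # bottom-right y


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a.xbr, b.xbr) - max(a.xtl, b.xtl))
    iy = max(0.0, min(a.ybr, b.ybr) - max(a.ytl, b.ytl))
    inter = ix * iy
    area_a = (a.xbr - a.xtl) * (a.ybr - a.ytl)
    area_b = (b.xbr - b.xtl) * (b.ybr - b.ytl)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical threshold; CVAT's actual matching criterion may differ.
MERGE_THRESHOLD = 0.5


def should_merge(a: Box, b: Box, threshold: float = MERGE_THRESHOLD) -> bool:
    return iou(a, b) >= threshold


same_object = should_merge(Box(10, 10, 50, 50), Box(12, 11, 52, 49))
different = should_merge(Box(10, 10, 50, 50), Box(60, 60, 90, 90))
print(same_object, different)  # True False
```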

Segment size

Use this option to divide a huge dataset into a few smaller segments. One job cannot be annotated by several labelers (this isn’t supported), so by setting the segment size you can create several jobs for the same annotation task. This helps you parallelize the data annotation process.
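A sketch of how segment size and overlap together determine the frame ranges of jobs. It assumes frames are numbered from 0 and that each segment starts `segment_size - overlap` frames after the previous one; `split_into_segments` is a hypothetical helper, and CVAT's exact rule may differ.

```python
from typing import List, Tuple


def split_into_segments(frame_count: int, segment_size: int,
                        overlap: int = 0) -> List[Tuple[int, int]]:
    """Return inclusive (start, stop) frame ranges, one per job."""
    if segment_size <= 0 or not 0 <= overlap < segment_size:
        raise ValueError("need segment_size > 0 and 0 <= overlap < segment_size")
    step = segment_size - overlap  # assumed distance between segment starts
    segments = []
    start = 0
    while start < frame_count:
        stop = min(start + segment_size, frame_count) - 1
        segments.append((start, stop))
        if stop == frame_count - 1:
            break  # the last segment reaches the final frame
        start += step
    return segments


print(split_into_segments(100, 40))     # [(0, 39), (40, 79), (80, 99)]
print(split_into_segments(100, 40, 5))  # [(0, 39), (35, 74), (70, 99)]
```

With an overlap of 5, frames 35 to 39 and 70 to 74 belong to two jobs each, which is where the merging of tracks described above takes place.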

Start frame

The frame from which the video in the task begins.

Stop frame

The frame on which the video in the task ends.

Frame Step

Use this option to filter video frames.

For example, enter 25 to keep every twenty-fifth frame of the video or every twenty-fifth image.
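The combined effect of Start frame, Stop frame, and Frame Step can be sketched with Python's `range`, assuming the stop frame is inclusive. `selected_frames` is a hypothetical helper used only for illustration.

```python
from typing import List


def selected_frames(start_frame: int, stop_frame: int, frame_step: int = 1) -> List[int]:
    """Frame numbers kept in a task; stop_frame is assumed to be inclusive."""
    if frame_step < 1:
        raise ValueError("frame_step must be >= 1")
    return list(range(start_frame, stop_frame + 1, frame_step))


print(selected_frames(0, 100, 25))  # [0, 25, 50, 75, 100]
```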

Chunk size

Defines the number of frames to be packed into a chunk when sent from client to server.

If the field is empty, the server defines the value automatically.

Recommended values:

- 1080p or less: 36

- 2k or less: 8 - 16

- 4k or less: 4 - 8

- More: 1 - 4
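The recommendations above can be encoded as a lookup. The sketch assumes resolutions are compared by total pixel count and that "2k" means up to 2560x1440; both are illustrative assumptions, and `recommended_chunk_size` is a hypothetical helper.

```python
from typing import Tuple


def recommended_chunk_size(width: int, height: int) -> Tuple[int, int]:
    """Return the (min, max) recommended chunk size for a frame resolution."""
    pixels = width * height
    if pixels <= 1920 * 1080:  # 1080p or less
        return (36, 36)
    if pixels <= 2560 * 1440:  # "2k or less", taken here as up to 2560x1440
        return (8, 16)
    if pixels <= 3840 * 2160:  # 4k or less
        return (4, 8)
    return (1, 4)              # larger than 4k


print(recommended_chunk_size(1280, 720))   # (36, 36)
print(recommended_chunk_size(3840, 2160))  # (4, 8)
```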

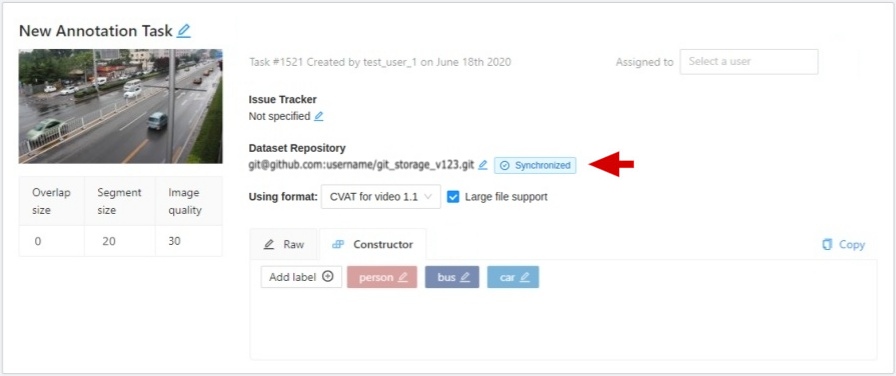

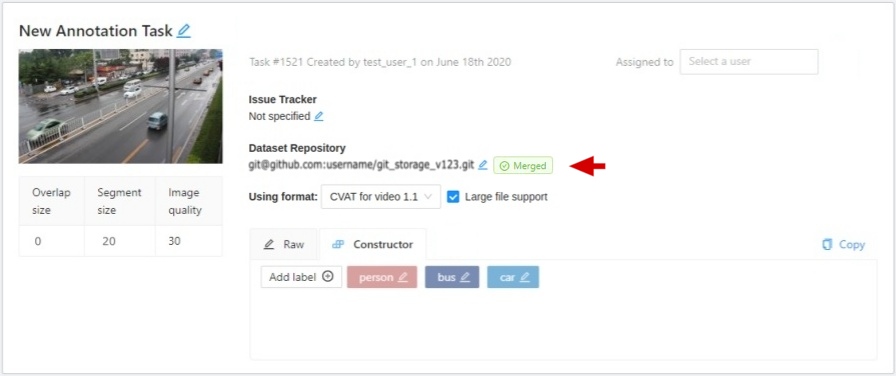

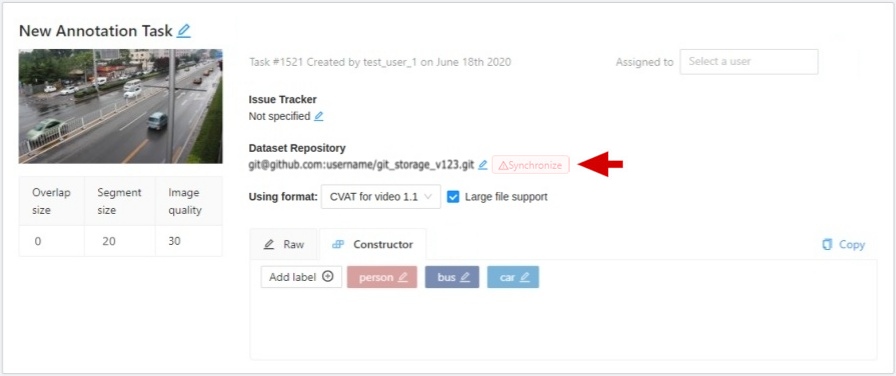

Dataset Repository

The URL of the repository. Optionally, you can also specify the path in the repository where annotations are stored (default: annotation/<dump_file_name>.zip).

The .zip and .xml annotation file extensions are supported.

Field format: URL [PATH]. Example: https://github.com/project/repos.git [1/2/3/4/annotation.xml]

Supported URL formats:

- https://github.com/project/repos[.git]
- github.com/project/repos[.git]
- git@github.com:project/repos[.git]
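To check a value before entering it, the field format and the URL shapes listed above can be expressed as regular expressions. `parse_repository_field` is a hypothetical validator that follows only the rules stated in this section; it is not CVAT's parser.

```python
import re
from typing import Tuple

# A repository URL, optionally followed by a path in square brackets.
FIELD_RE = re.compile(r"^\s*(?P<url>\S+)(?:\s+\[(?P<path>[^\]]+)\])?\s*$")

# The three URL shapes listed above; the ".git" suffix is optional in each.
URL_PATTERNS = [
    re.compile(r"^https://github\.com/[\w.-]+/[\w.-]+?(\.git)?$"),
    re.compile(r"^github\.com/[\w.-]+/[\w.-]+?(\.git)?$"),
    re.compile(r"^git@github\.com:[\w.-]+/[\w.-]+?(\.git)?$"),
]

DEFAULT_PATH = "annotation/<dump_file_name>.zip"


def parse_repository_field(value: str) -> Tuple[str, str]:
    """Return (url, path) or raise ValueError if the value breaks the rules."""
    match = FIELD_RE.match(value)
    if not match:
        raise ValueError(f"cannot parse {value!r}")
    url, path = match["url"], match["path"] or DEFAULT_PATH
    if not any(pattern.match(url) for pattern in URL_PATTERNS):
        raise ValueError(f"unsupported URL format: {url}")
    if not path.endswith((".zip", ".xml")):
        raise ValueError("annotation path must end with .zip or .xml")
    return url, path


print(parse_repository_field("https://github.com/project/repos.git [1/2/3/4/annotation.xml]"))
# ('https://github.com/project/repos.git', '1/2/3/4/annotation.xml')
```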

After the task is created, the synchronization status is displayed on the task page.

If you specify a dataset repository when you create a task, you will see a message about the need to grant access with an SSH key. This is the key you need to add to your GitHub account. For other Git systems, see their documentation to learn how to add an SSH key.

Use LFS

If the annotation file is large, you can create a repository with LFS support.

Issue tracker

Specify the full URL of the issue tracker, if necessary.

To save and open the task, click the Submit & Open button. You can also click the Submit & Continue button to create several tasks in sequence.

The created tasks will then be displayed on the tasks page.

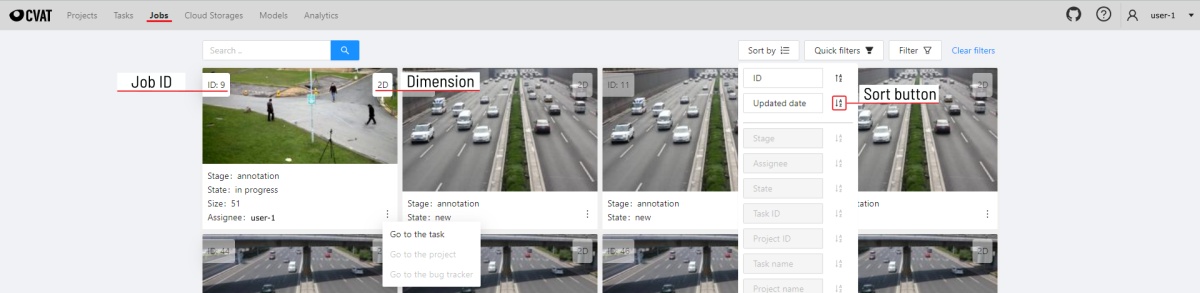

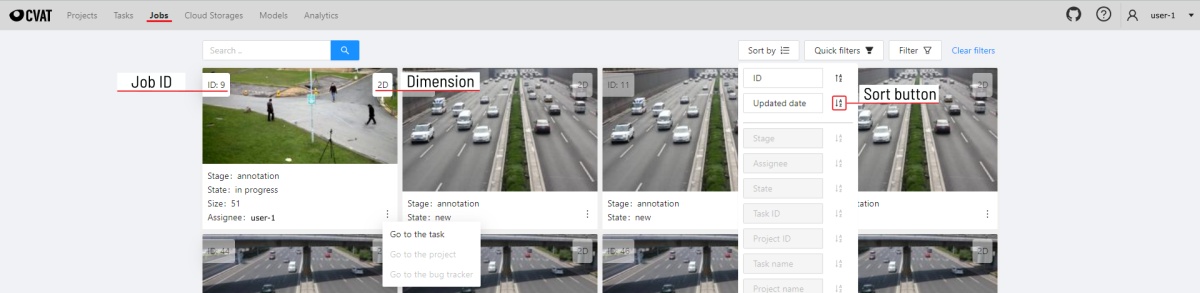

2.1.3 - Jobs page

On the jobs page, users (for example, with the worker role) can see the jobs that are assigned to them without having access to the task page. They can also track progress, sort the job list, and apply filters to it.

On the jobs page, jobs are presented as tiles, where each tile is one job.

Each element contains:

- job ID
- dimension (2D or 3D)
- preview
- stage and state
- when hovering over an element, you can see:
  - a menu to navigate to a task, project, or bug tracker.

To open a job in a new tab, click the job while holding Ctrl.

In the upper left corner, there is a search bar that you can use to find jobs by assignee, stage, state, etc.

In the upper right corner, there are sorting, quick filters, and filter controls.

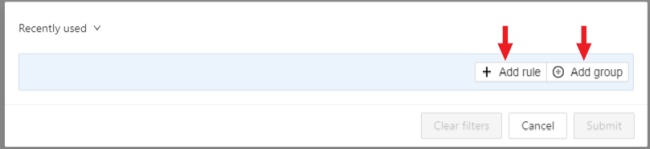

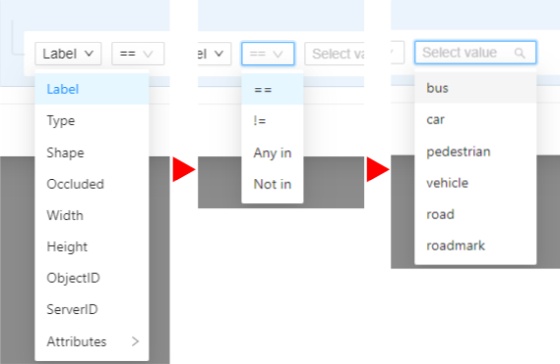

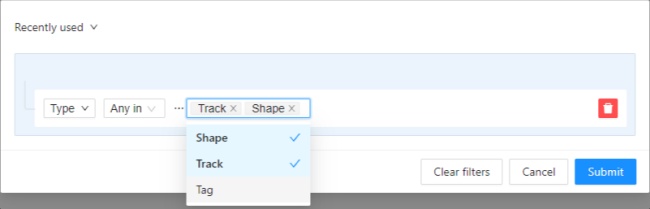

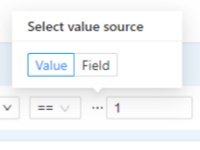

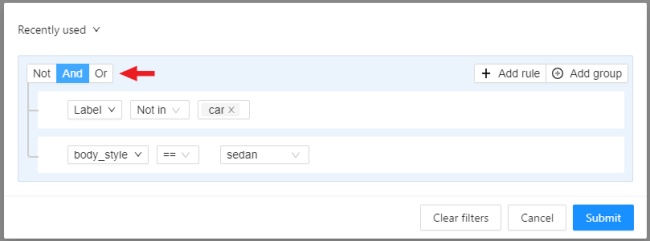

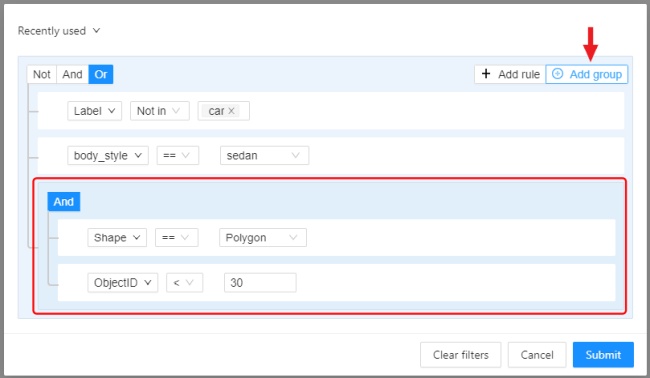

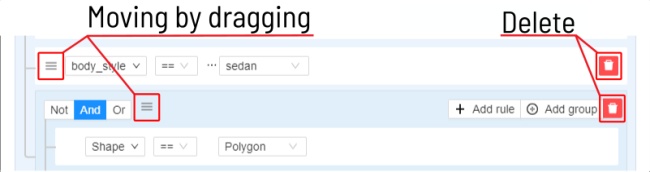

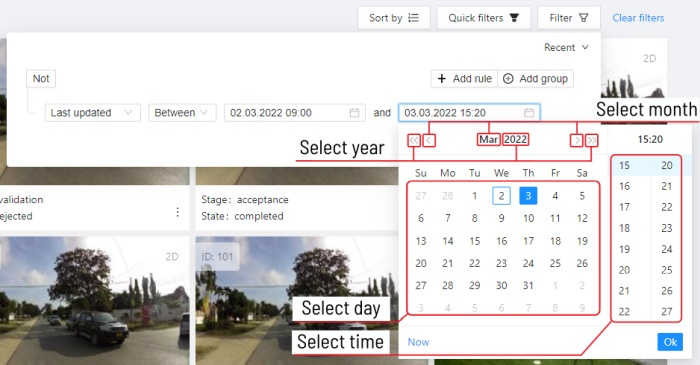

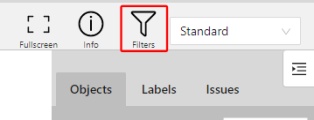

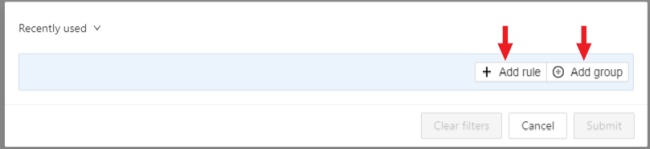

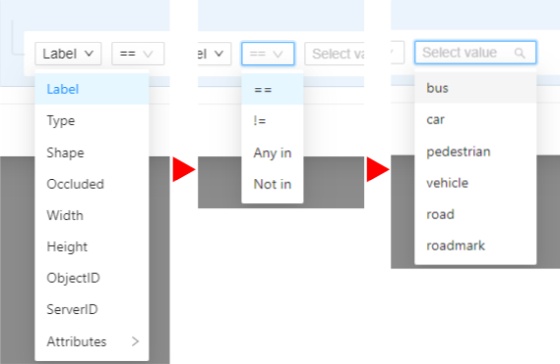

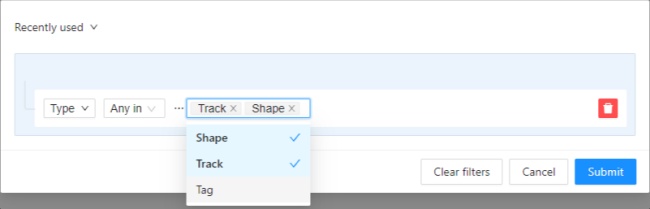

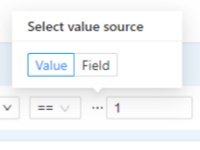

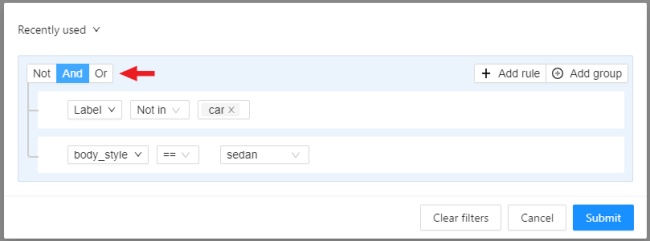

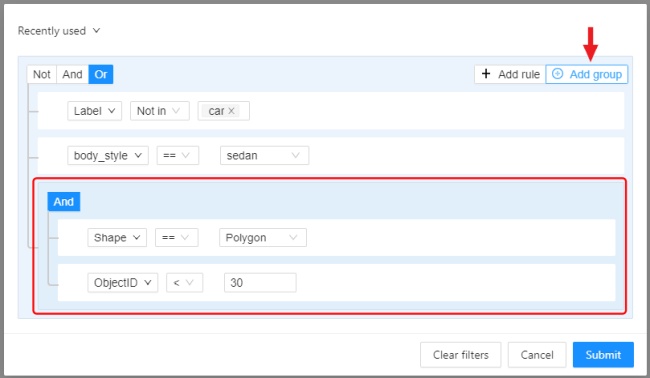

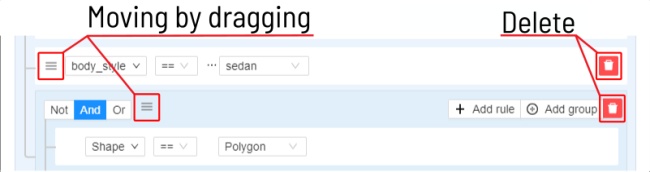

Filter

Applying filter disables the quick filter.

The filter works similarly to the filters for annotation,

you can create rules from properties, operators

and values and group rules into groups. For more details, see the filter section.

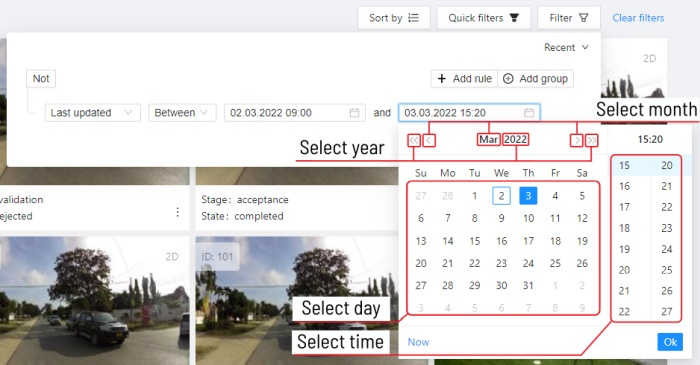

Learn more about date and time selection.

For clear all filters press Clear filters.
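Rules and groups form a tree of conditions. The sketch below evaluates such a tree, written in a JsonLogic-style notation, against in-memory job records. The operator subset, the property keys (`stage`, `assignee`, `task_id`), and the jobs are hypothetical; the sketch only illustrates how rules combine into groups and does not reproduce CVAT's filter engine.

```python
import operator
from typing import Any

COMPARISONS = {"==": operator.eq, "!=": operator.ne, "<": operator.lt,
               "<=": operator.le, ">": operator.gt, ">=": operator.ge}


def evaluate(rule: Any, job: dict) -> Any:
    """Evaluate a small JsonLogic-style rule tree against one job record."""
    if not isinstance(rule, dict):
        return rule  # a literal value such as "annotation" or 8
    [(op, args)] = rule.items()
    if op == "var":
        return job.get(args)  # property lookup
    args = args if isinstance(args, list) else [args]
    values = [evaluate(arg, job) for arg in args]
    if op == "and":
        return all(values)
    if op == "or":
        return any(values)
    if op == "!":
        return not values[0]
    if op in COMPARISONS:
        return COMPARISONS[op](values[0], values[1])
    raise ValueError(f"unsupported operator: {op}")


jobs = [
    {"id": 1, "stage": "annotation", "assignee": "alice", "task_id": 7},
    {"id": 2, "stage": "annotation", "assignee": "bob", "task_id": 7},
    {"id": 3, "stage": "acceptance", "assignee": "alice", "task_id": 9},
]

# Rule AND group: stage is "annotation" AND (assignee is alice OR task ID >= 8)
rule = {"and": [
    {"==": [{"var": "stage"}, "annotation"]},
    {"or": [
        {"==": [{"var": "assignee"}, "alice"]},
        {">=": [{"var": "task_id"}, 8]},
    ]},
]}

print([job["id"] for job in jobs if evaluate(rule, job)])  # [1]
```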

Supported properties for jobs list

| Properties | Supported values | Description |
|---|---|---|
| State | all the state names | The state of the job (can be changed in the menu inside the job) |
| Stage | all the stage names | The stage of the job (is specified by a drop-down list on the task page) |
| Dimension | 2D or 3D | Depends on the data format (read more in creating an annotation task) |
| Assignee | username | Assignee is the user who is working on the job (is specified on the task page) |
| Last updated | last modified date and time (or value range) | The date can be entered in the dd.MM.yyyy HH:mm format or by selecting the date in the window that appears when you click on the input field |
| ID | number or range of job ID | |
| Task ID | number or range of task ID | |
| Project ID | number or range of project ID | |
| Task name | task name | Set when creating a task, can be changed on the task page |
| Project name | project name | Specified when creating a project, can be changed in the project section |
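The dd.MM.yyyy HH:mm format used for Last updated corresponds to the Python `strptime` pattern `%d.%m.%Y %H:%M`. The sketch below, built around the hypothetical helper `updated_in_range`, shows how a value range can be checked.

```python
from datetime import datetime

# dd.MM.yyyy HH:mm expressed as a strptime pattern
DATE_FORMAT = "%d.%m.%Y %H:%M"


def parse_date(text: str) -> datetime:
    return datetime.strptime(text, DATE_FORMAT)


def updated_in_range(value: str, start: str, end: str) -> bool:
    """True if `value` lies in the inclusive range [start, end]."""
    return parse_date(start) <= parse_date(value) <= parse_date(end)


print(updated_in_range("15.03.2023 14:30", "01.03.2023 00:00", "31.03.2023 23:59"))  # True
```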

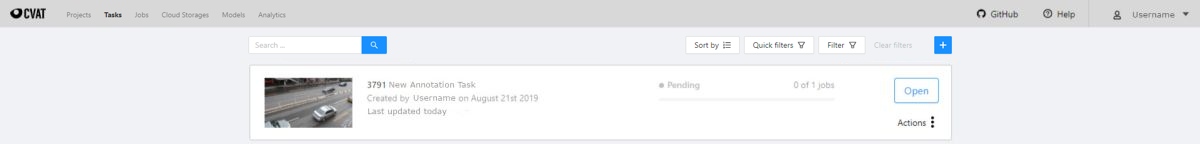

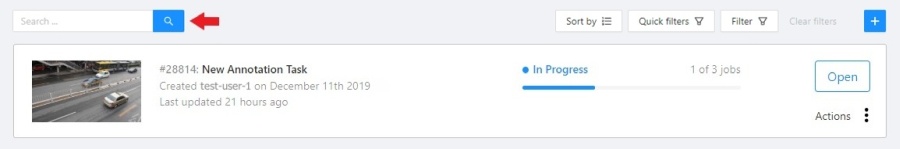

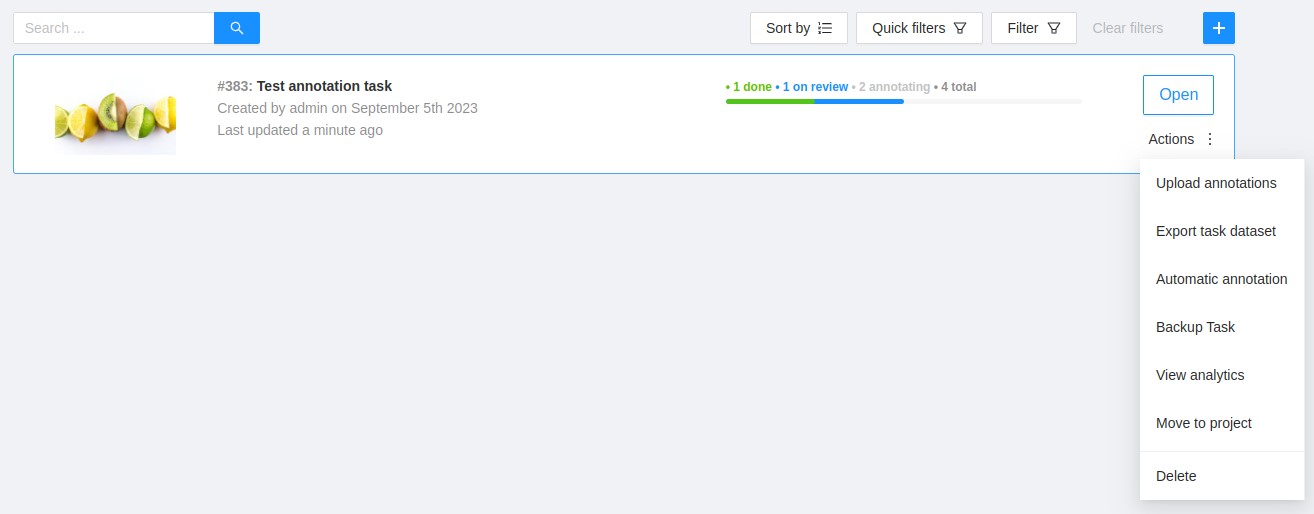

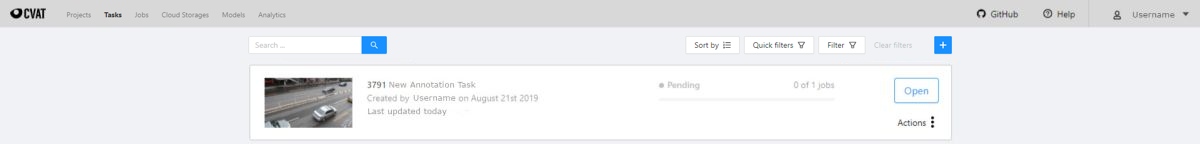

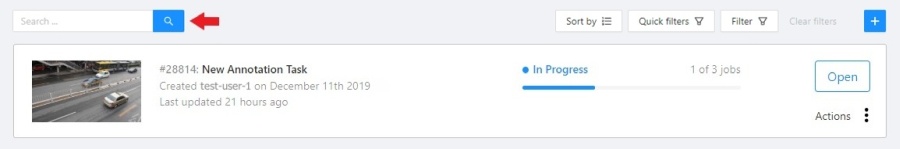

2.1.4 - Tasks page

Overview of the Tasks page.

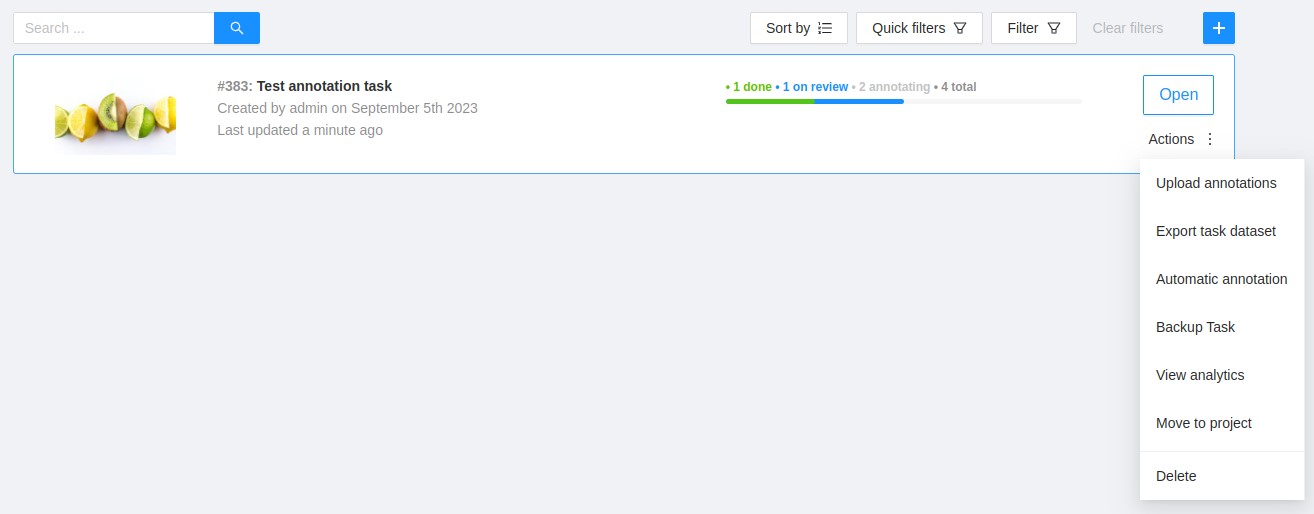

The tasks page contains elements and each of them relates to a separate task. They are sorted in creation order.

Each element contains: task name, preview, progress bar, button Open, and menu Actions.

Each button is responsible for a in menu Actions specific function:

Export task dataset — download annotations or annotations and images in a specific format.

More information is available in the export/import datasets

section.Upload annotation upload annotations in a specific format.

More information is available in the export/import datasets

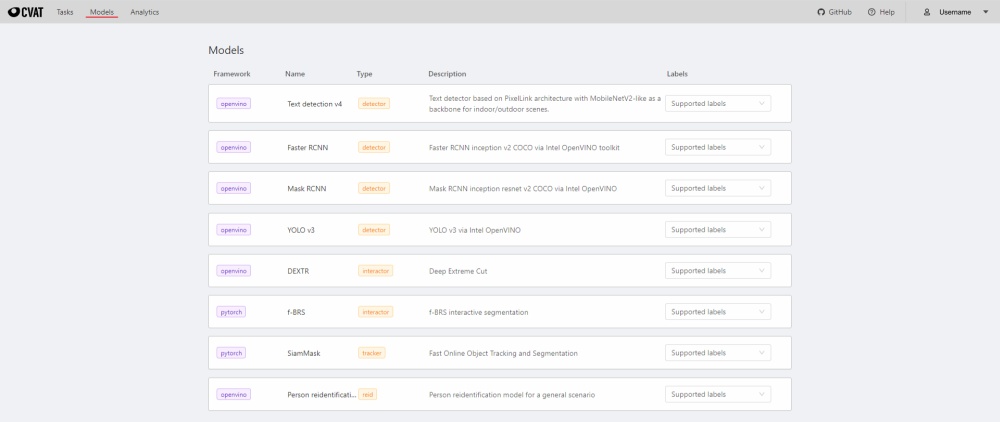

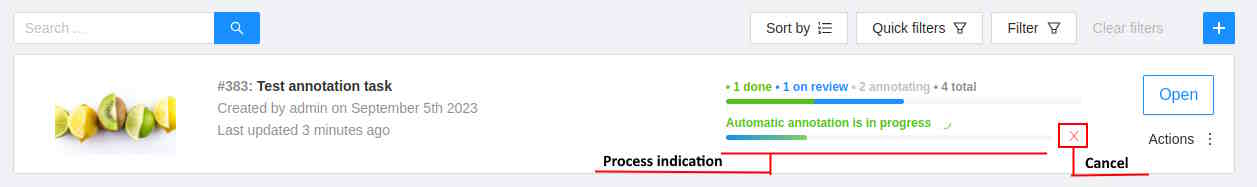

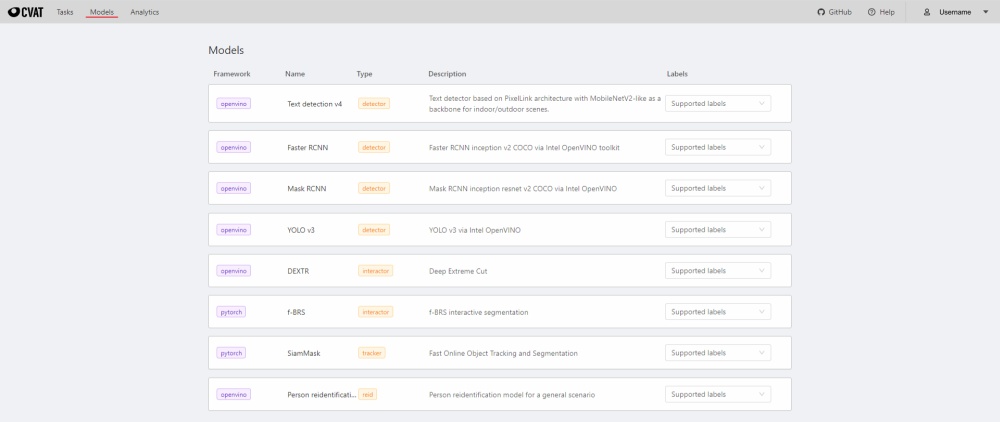

section.Automatic Annotation — automatic annotation with OpenVINO toolkit.

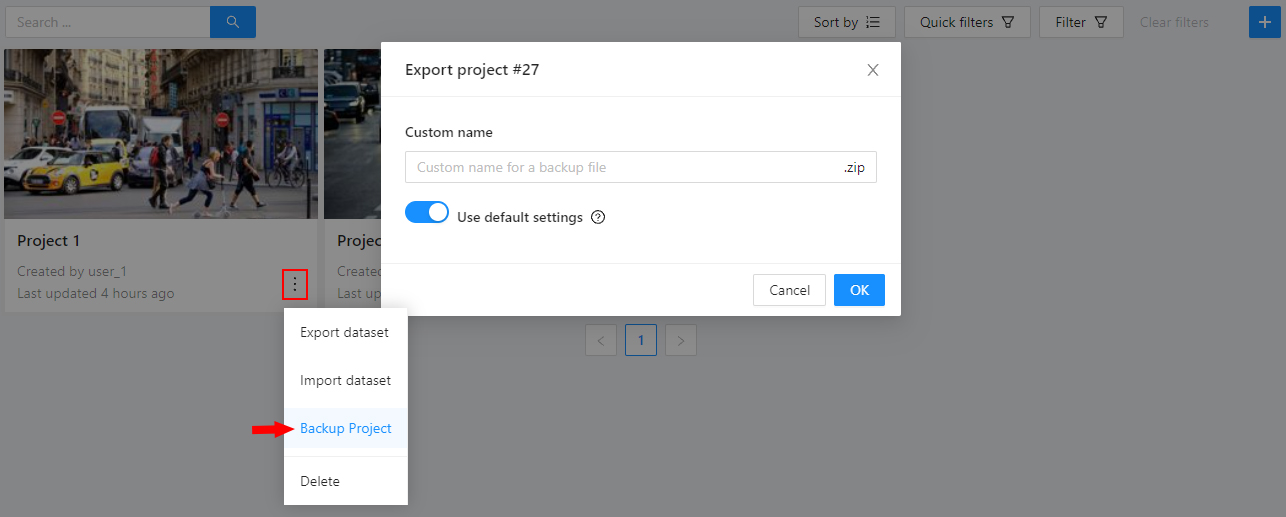

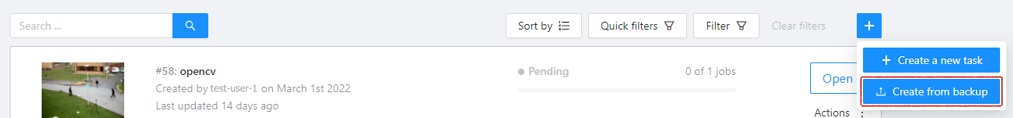

Presence depends on how you build the CVAT instance.Backup task — make a backup of this task into a zip archive.

Read more in the backup section.Move to project — Moving a task to a project (can be used to move a task from one project to another).

Note that attributes reset during the moving process. In case of label mismatch,

you can create or delete necessary labels in the project/task.

Some task labels can be matched with the target project labels.Delete — delete task.

In the upper left corner, there is a search bar that you can use to find tasks by assignee, task name, etc.

In the upper right corner, there are sorting, quick filters, and filter controls.

Filter

Applying a filter disables the quick filter.

The filter works similarly to the filters for annotation: you can create rules from properties, operators, and values, and combine rules into groups. For more details, see the filter section.

Learn more about date and time selection.

To clear all filters, click Clear filters.

Supported properties for tasks list

| Properties | Supported values | Description |
|---|---|---|
| Dimension | 2D or 3D | Depends on the data format (read more in creating an annotation task) |
| Status | annotation, validation or completed | |
| Data | video, images | Depends on the data format (read more in creating an annotation task) |
| Subset | test, train, validation or custom subset | Read more about subsets |
| Assignee | username | Assignee is the user who is working on the project, task or job (is specified on the task page) |
| Owner | username | The user who owns the project, task, or job |
| Last updated | last modified date and time (or value range) | The date can be entered in the dd.MM.yyyy HH:mm format or by selecting the date in the window that appears when you click on the input field |
| ID | number or range of task ID | |
| Project ID | number or range of project ID | |
| Name | name | On the tasks page, the name of the task; on the project page, the name of the project |
| Project name | project name | Specified when creating a project, can be changed in the project section |

Click the Open button to go to the task details.

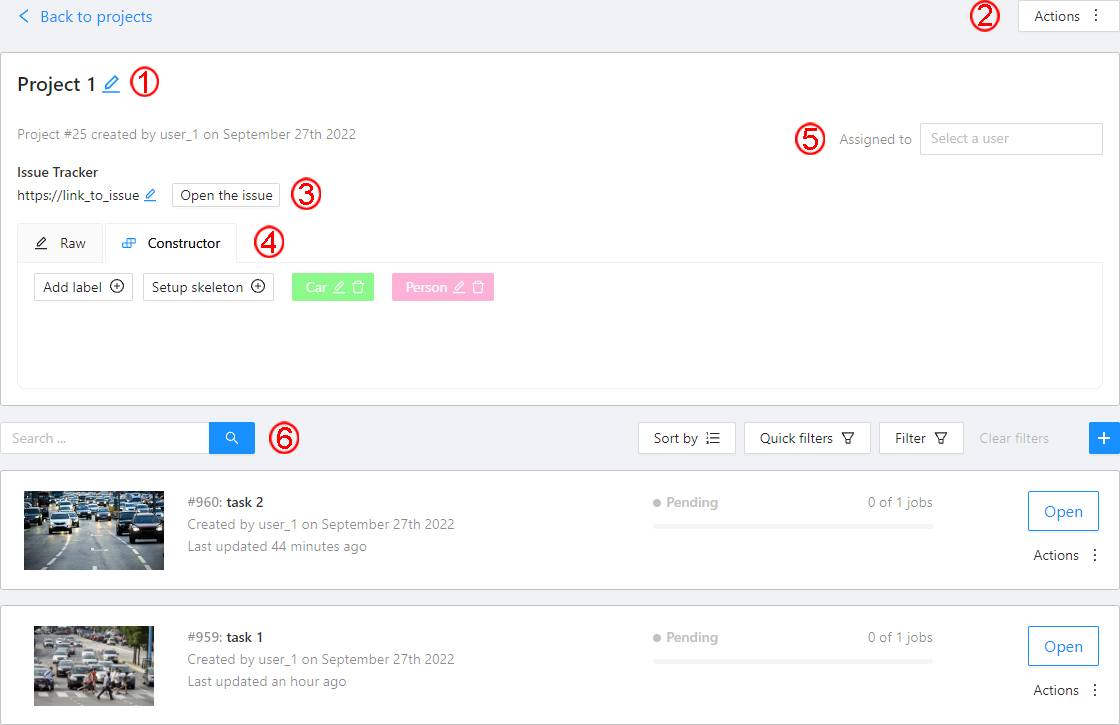

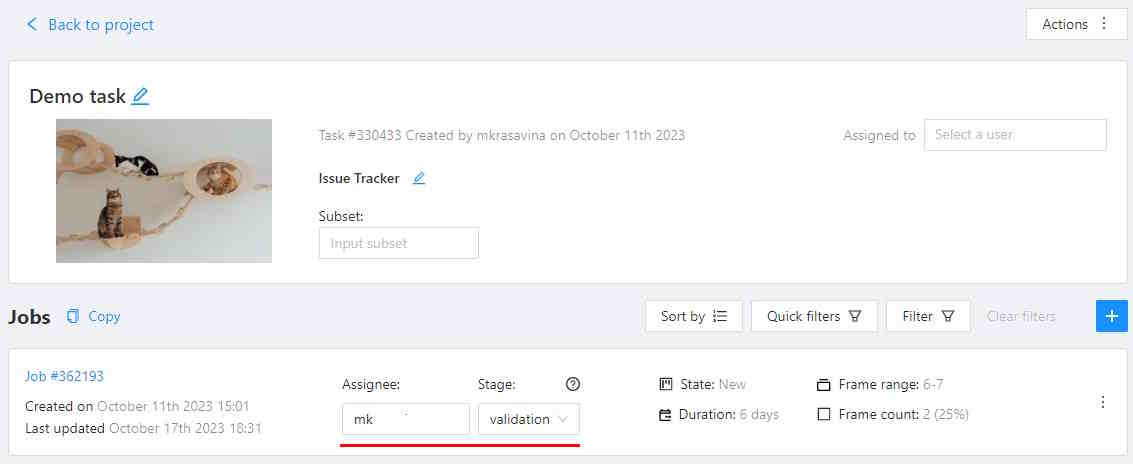

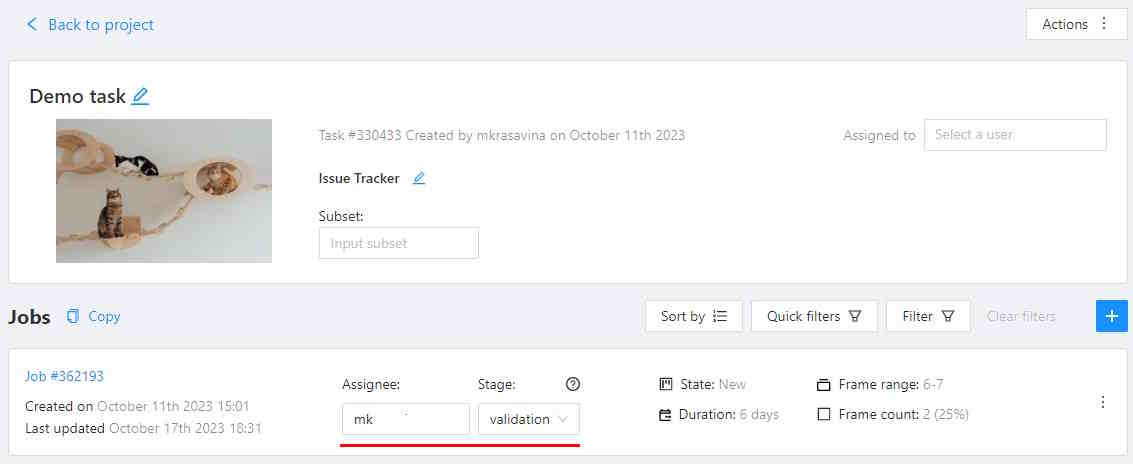

2.1.5 - Task details

Overview of the Task details page.

Task details is a task page that contains a preview, a progress bar, the details of the task (specified when the task was created), and the jobs section.

The following actions are available on this page:

- Change the task’s title.
- Open the Actions menu.

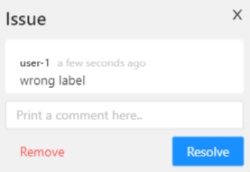

- Change the issue tracker, or open the issue tracker if it is specified.
- Change labels (available only if the task is not related to a project). You can add new labels or add attributes for the existing labels in the Raw mode or the Constructor mode. By clicking Copy, you copy the labels to the clipboard.
- Assigned to: used to assign a task to a person. Start typing an assignee’s name and/or choose the right person from the drop-down list. In the list of users, you will only see the users of the organization where the task is created.

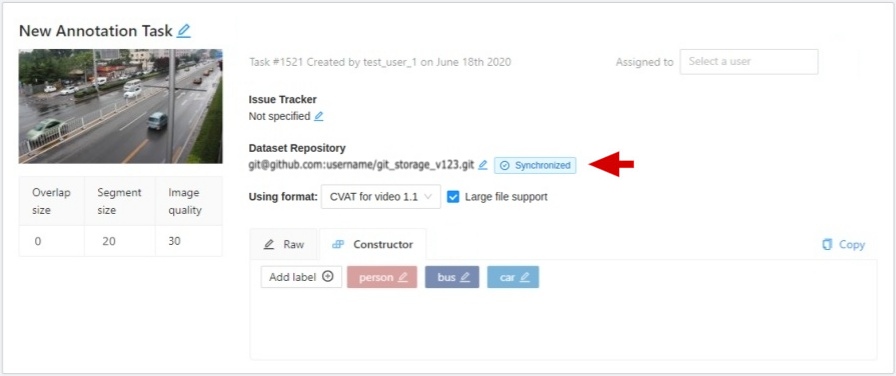

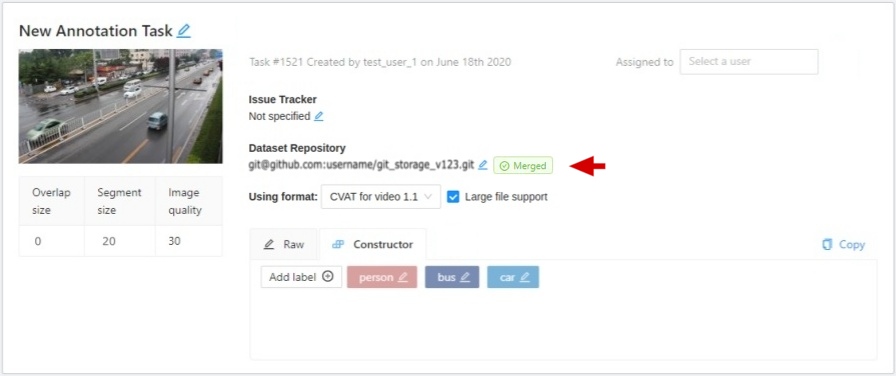

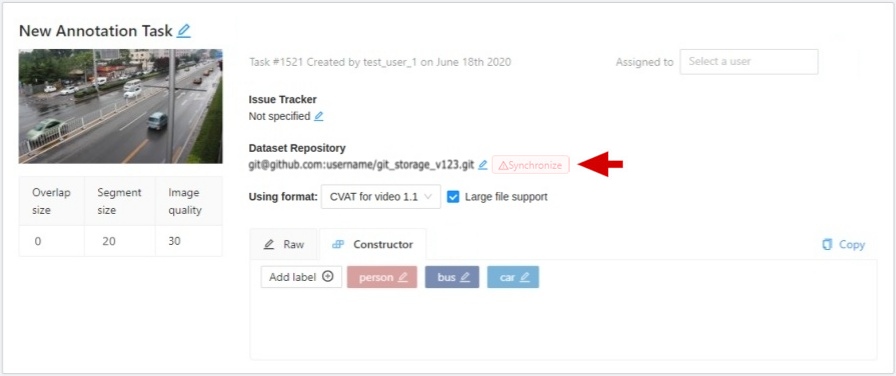

Dataset Repository

- Repository link
- Synchronization status with the dataset repository. When you click the status, the current annotation is sent. It has several states:
  - Synchronized: the task is synchronized, that is, a pull request with the current annotation file has been created.
  - Merged: the pull request with the up-to-date annotation file has been merged.
  - Synchronize: highlighted in red; the annotations are not synchronized.
- Use a format: a drop-down list of formats in which the annotation can be synchronized.
- Support for large files: enables the use of LFS.

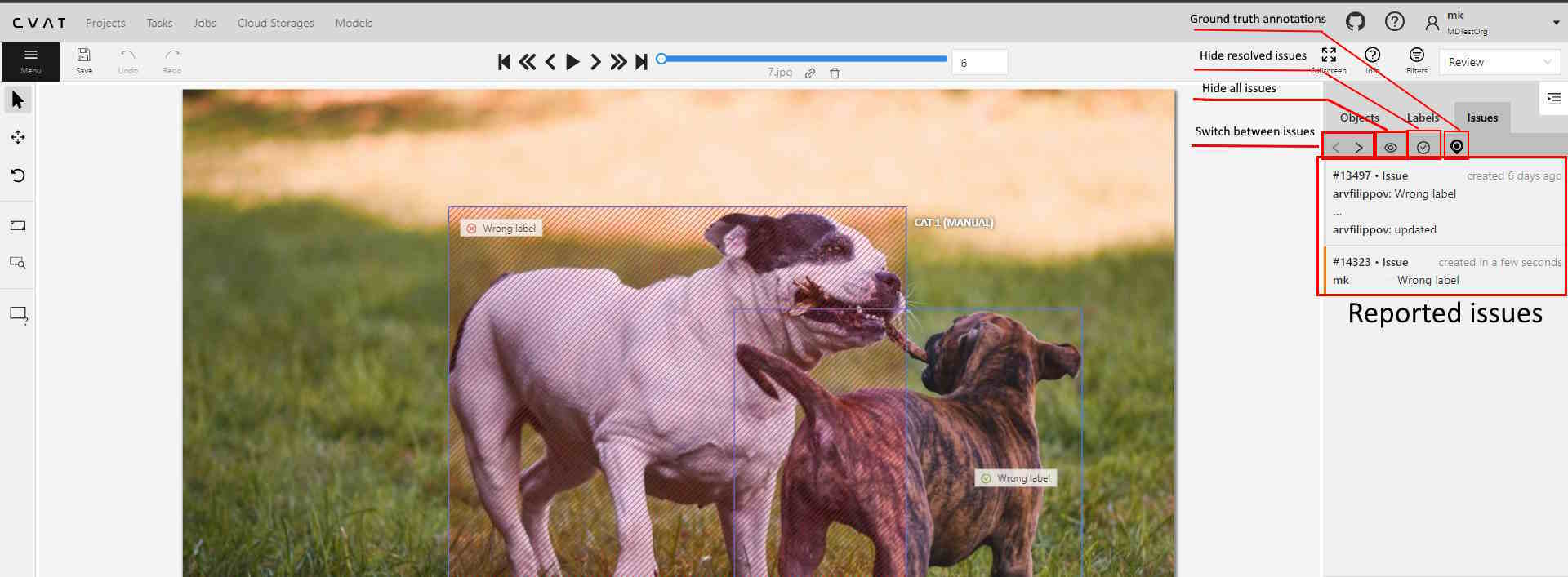

Jobs: a list of all jobs for a particular task. Here you can find the following data:

- Job name with a hyperlink to it.
- Frames: the frame interval.
- Stage: the stage of the job, specified by a drop-down list. There are three stages: annotation, validation, or acceptance. This value affects the task progress bar.
- State: the state of the job, which can be changed by an assigned user in the menu inside the job. There are several possible states: new, in progress, rejected, completed.
- Started on: the start date of this job.
- Duration: the amount of time the job has been worked on.
- Assignee: the user who is working on the job. You can start typing an assignee’s name and/or choose the right person from the drop-down list.
- Reviewer: a user assigned to carry out the review; read more in the review section.

Copy: by clicking Copy, you copy the job list to the clipboard. The job list contains direct links to jobs.

You can filter or sort jobs by status, as well as by assignee or reviewer.

Follow a link in the Jobs section to start the annotation process. In some cases, you can have several links; this depends on the size of your task and the Overlap Size and Segment Size parameters. To improve UX, only the first chunk of several frames is loaded at first, so you can start annotating the first images while the other frames are loaded in the background.

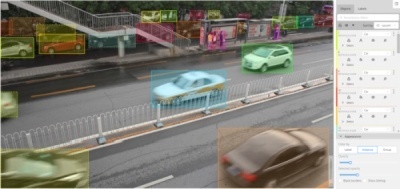

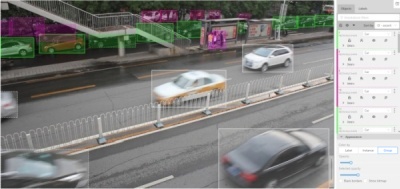

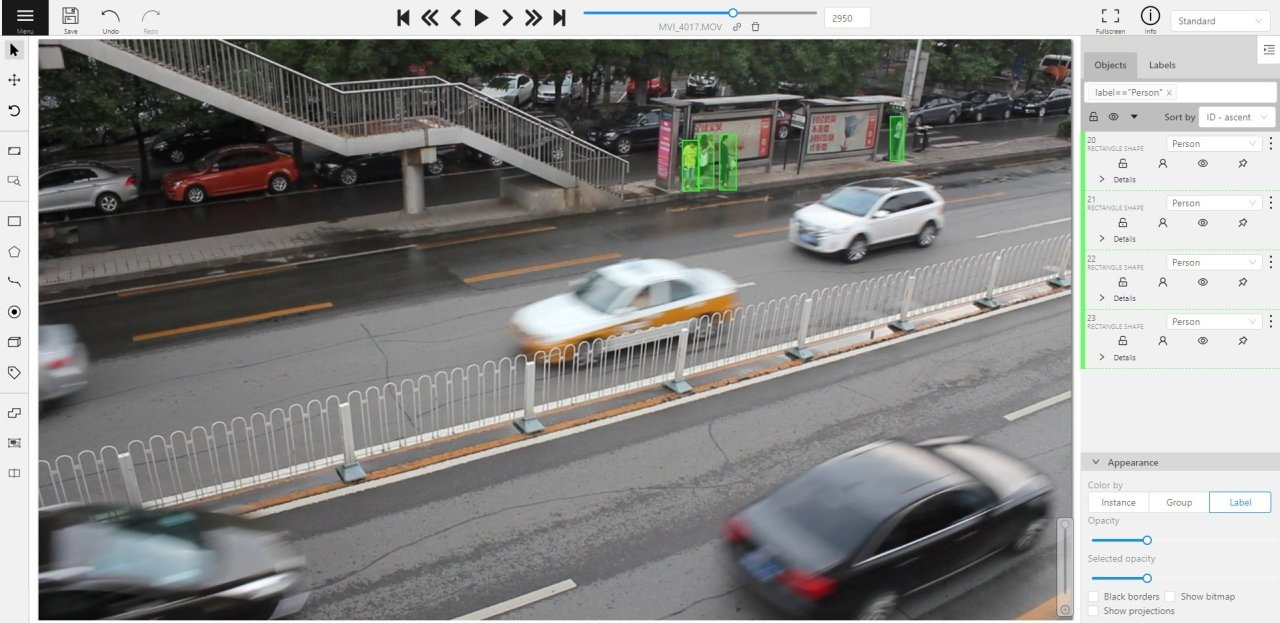

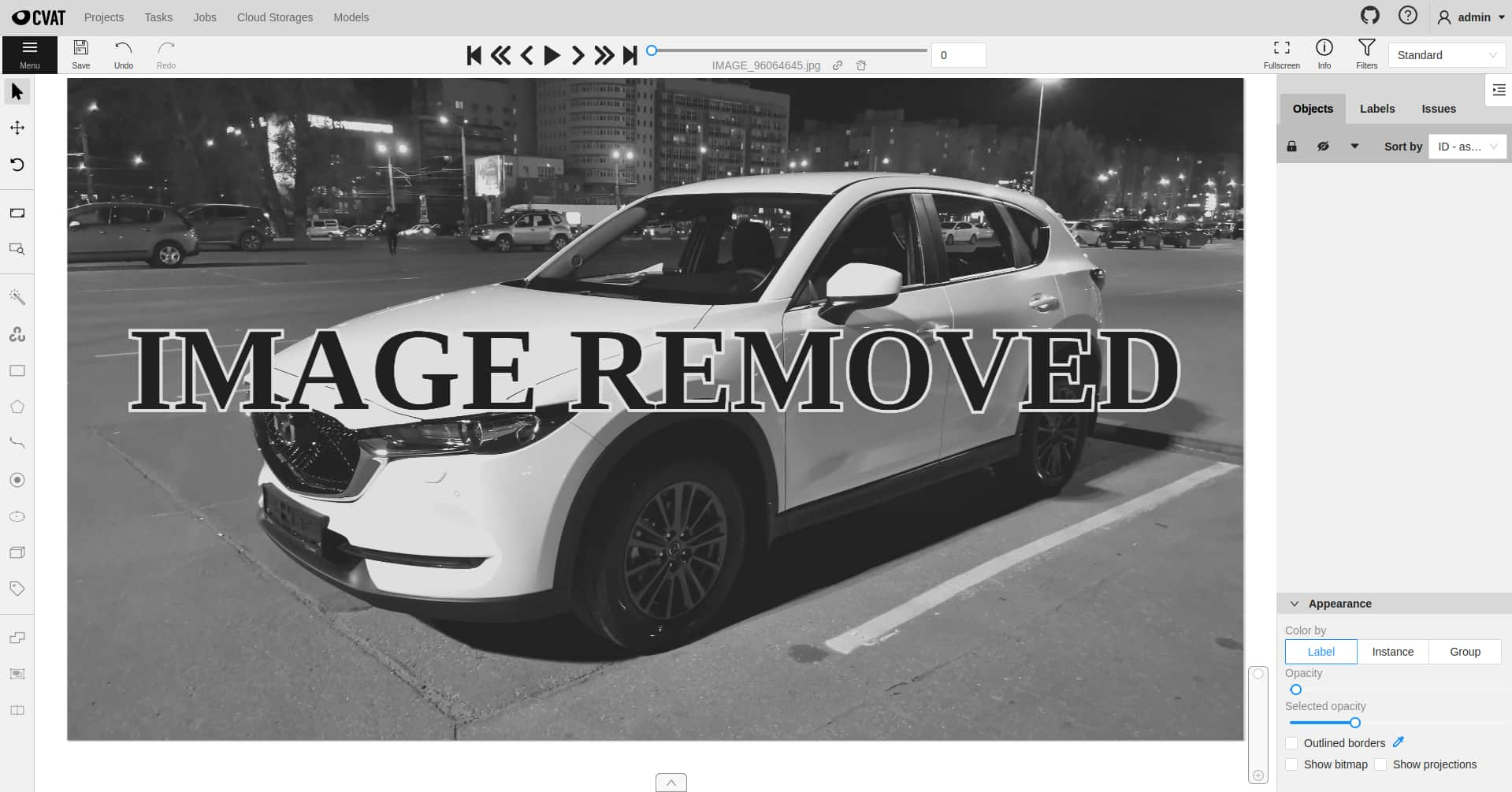

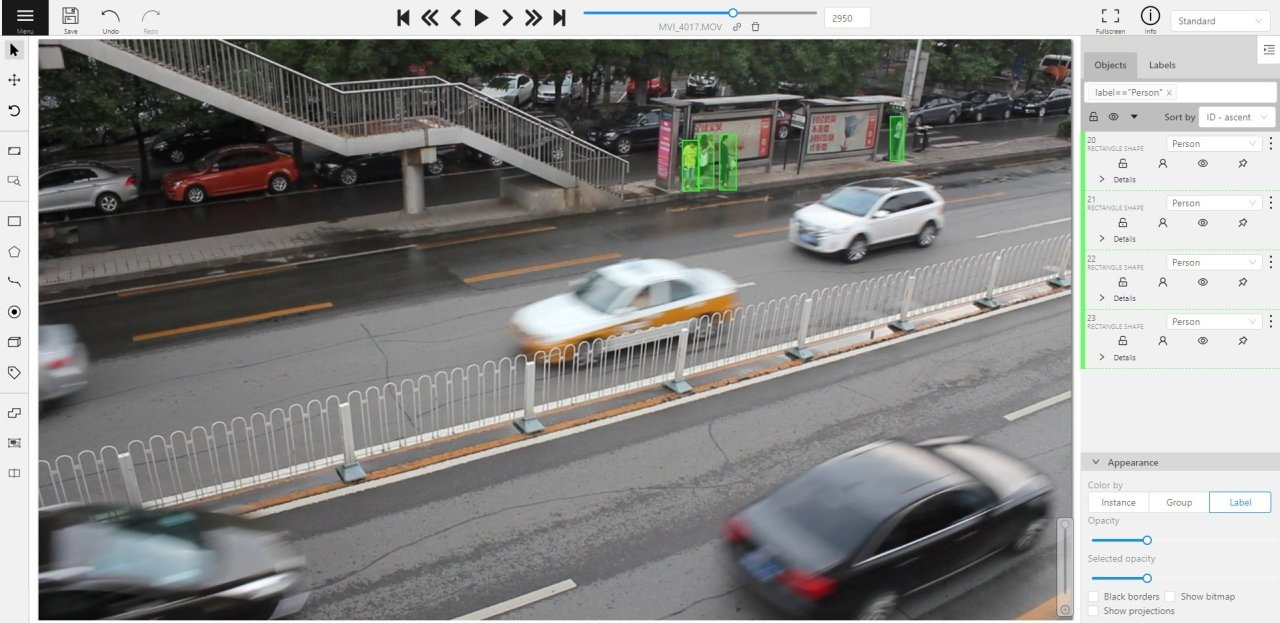

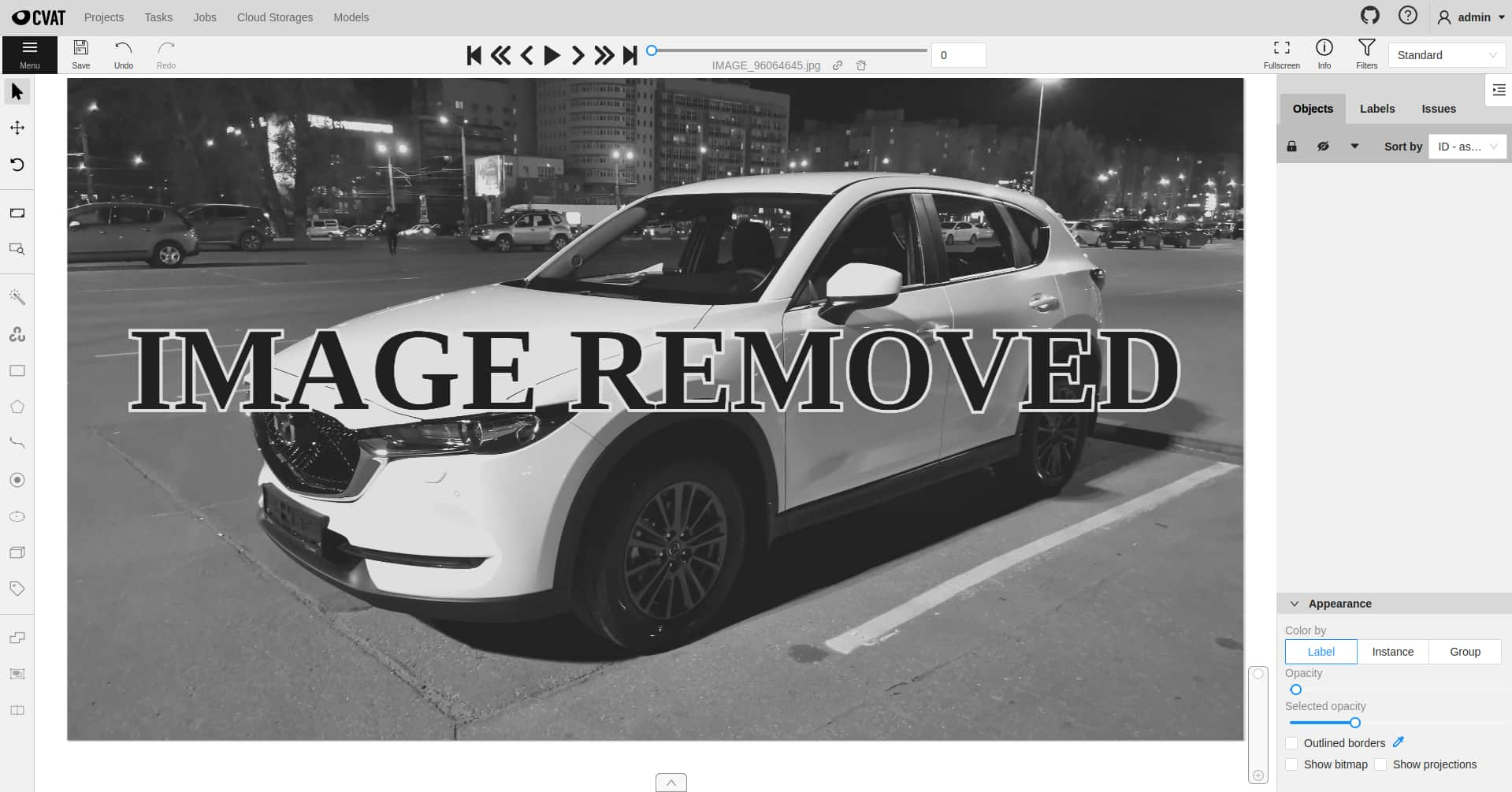

2.1.6 - Interface of the annotation tool

Main user interface

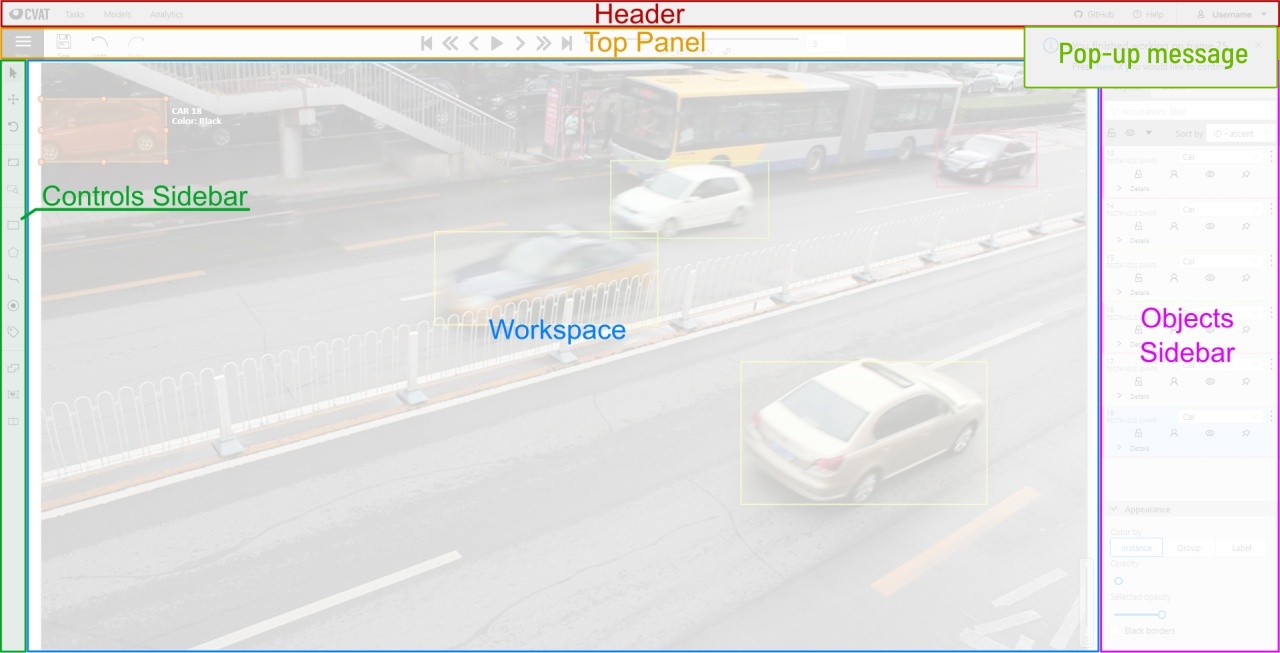

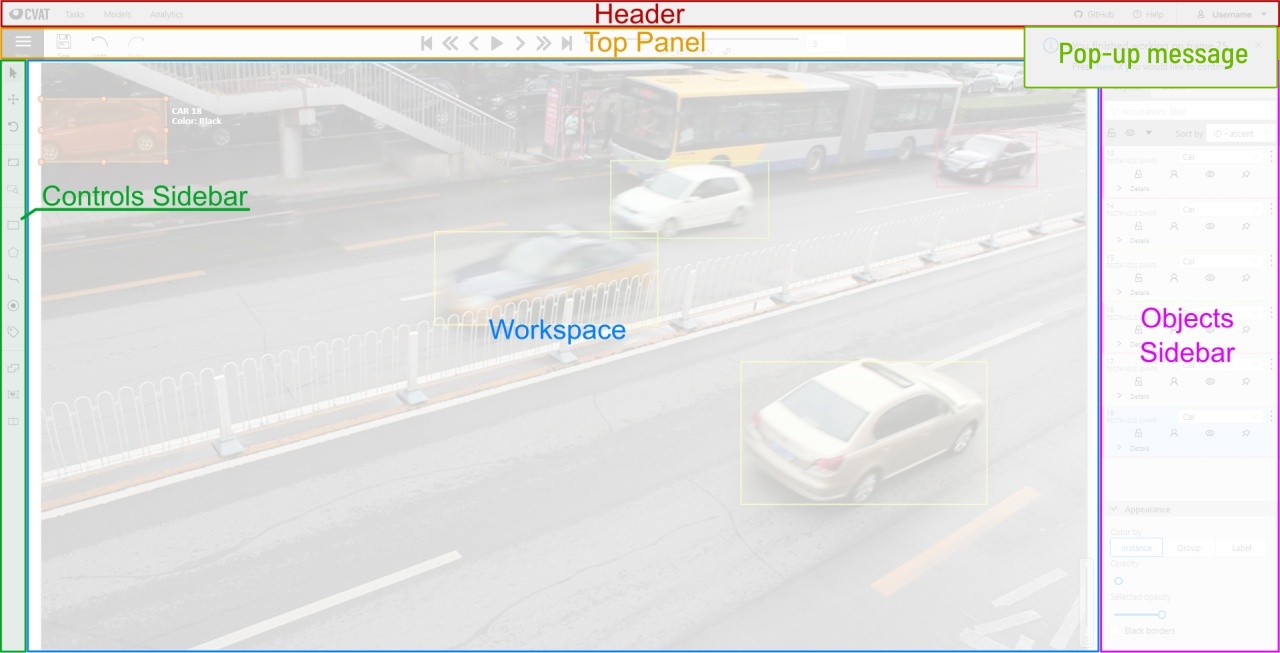

The tool consists of:

- Header: a pinned header used to navigate CVAT sections and account settings;
- Top panel: contains navigation buttons, main functions, and menu access;
- Workspace: the space where images are shown;
- Controls sidebar: contains tools for navigating the image, zooming, creating shapes, and editing tracks (merge, split, group);
- Objects sidebar: contains a label filter, two lists (objects on the frame and labels of objects on the frame), and appearance settings.

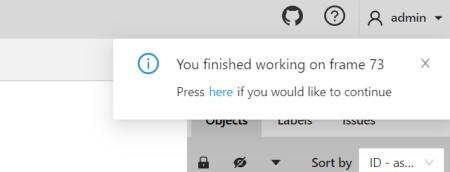

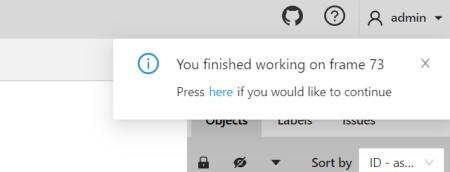

Pop-up messages

In CVAT, you’ll receive pop-up messages in the upper-right corner, on any page.

Pop-up messages can contain useful information, links, or error messages.

Informational messages inform about the end of the auto-annotation process.

Learn more about auto-annotation.

Jump Suggestion Messages

Open a task

After creating a task, you can immediately open it by clicking Open task.

Learn more about creating a task.

Continue to the frame on which the work on the job is finished

When you open a job that you previously worked on, you will receive a pop-up message offering to go to the frame that was open before you closed the tab.

Error Messages

If you try to perform an action that is not possible, you may receive an error message. The message may contain information about the error or a prompt to open the browser console (shortcut F12) for more information.

If you encounter a bug that you can’t solve yourself, you can create an issue on GitHub.

2.1.7 - Basic navigation

Overview of basic controls.


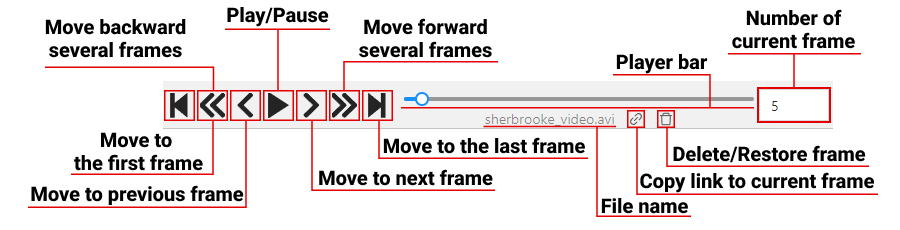

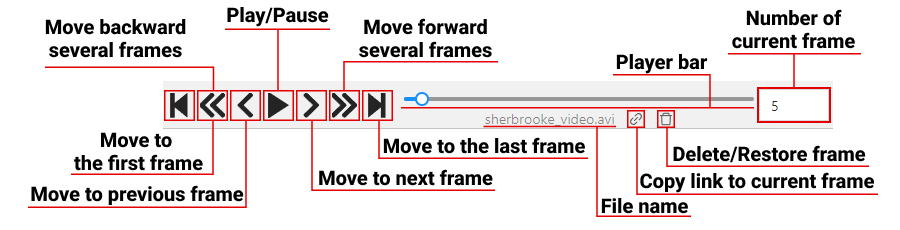

Use the arrows below to move to the next/previous frame.

Use the scroll bar slider to scroll through frames.

Almost every button has a shortcut. To get a hint about a shortcut, move your mouse pointer over a UI element.

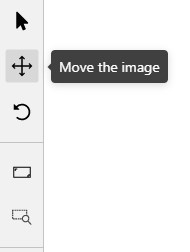

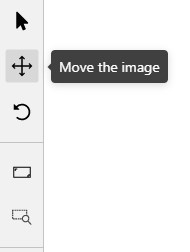

To navigate the image, use the button on the controls sidebar. Another way to move the image is to hold the left mouse button inside an area without annotated objects. If the mouse wheel is pressed, all annotated objects are ignored; otherwise, a highlighted bounding box is moved instead of the image itself.

You can use the button on the controls sidebar to zoom in on a region of interest. Use the Fit the image button to fit the image in the workspace. You can also use the mouse wheel to scale the image (the image is zoomed relative to your current cursor position).
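Zooming relative to the cursor is commonly implemented by keeping the image point under the cursor fixed while the scale changes. The sketch below shows that general technique under a simple assumed model (screen position = offset + scale * image point); it is not CVAT's canvas code, and `zoom_at_cursor` is a hypothetical name.

```python
from typing import Tuple

Point = Tuple[float, float]


def zoom_at_cursor(offset: Point, scale: float, cursor: Point,
                   factor: float) -> Tuple[Point, float]:
    """Zoom by `factor` while keeping the image point under `cursor` fixed.

    Model: image point p is drawn at screen position offset + scale * p.
    """
    new_scale = scale * factor
    # Image coordinates of the point currently under the cursor
    px = (cursor[0] - offset[0]) / scale
    py = (cursor[1] - offset[1]) / scale
    # New offset that maps the same image point back onto the cursor
    new_offset = (cursor[0] - new_scale * px, cursor[1] - new_scale * py)
    return new_offset, new_scale


offset, scale = zoom_at_cursor((0.0, 0.0), 1.0, (100.0, 50.0), 2.0)
print(offset, scale)  # (-100.0, -50.0) 2.0
```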

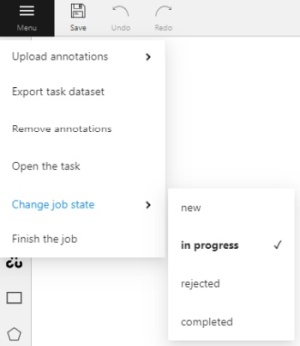

2.1.8 - Top Panel

Overview of controls available on the top panel of the annotation tool.

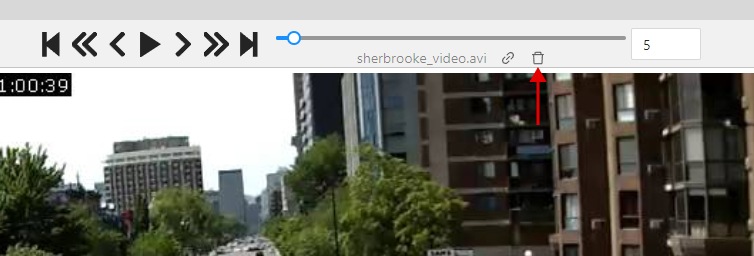

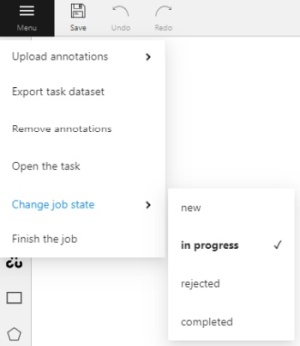

It is the main menu of the annotation tool. It can be used to download, upload and remove annotations.

Button assignment:

- Upload Annotations: uploads annotations into a task.
- Export as a dataset: downloads a dataset from a task in one of the supported formats. You can also enter a Custom name and enable the Save images checkbox if you want the dataset to contain images.
- Remove Annotations: opens a confirmation window. If you click Delete, the annotations of the current job are removed. If you click Select range, you can remove annotations on a range of frames; if you also enable the Delete only keyframe for tracks checkbox, only keyframes are deleted from the tracks in the selected range.
- Open the task: opens a page with details about the task.

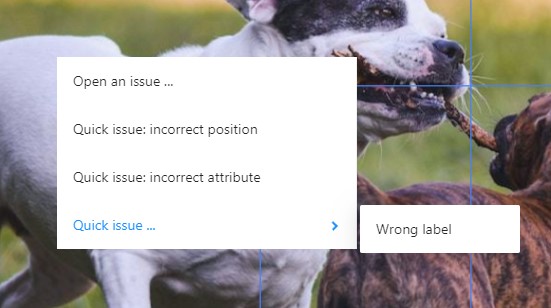

- Change job state: changes the state of the job (new, in progress, rejected, completed).
- Finish the job/Renew the job: changes the job stage and state to acceptance and completed, or to annotation and new, respectively.

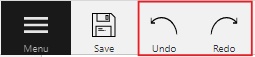

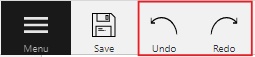

Save Work

Saves annotations for the current job. The button indicates when saving is in progress.

Use the buttons to undo or redo actions.

Done

Used to complete the creation of the object. This button appears only when the object is being created.

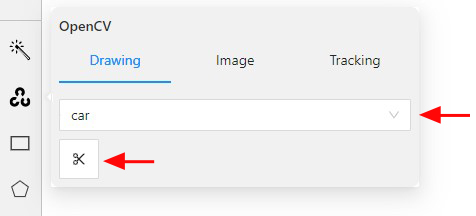

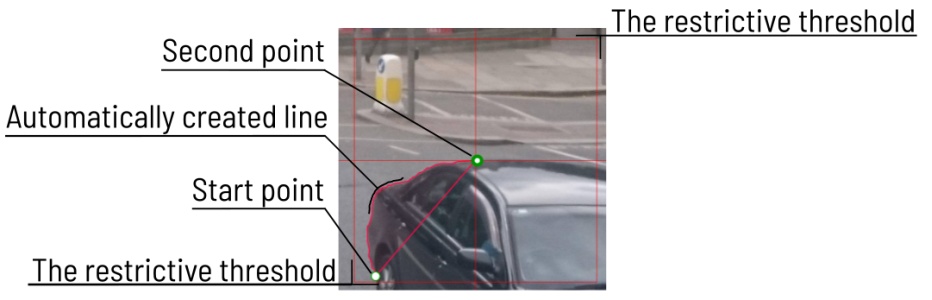

Block

Used to pause automatic line creation when drawing a polygon with OpenCV Intelligent scissors. Also used to postpone server requests when creating an object using AI Tools.

When blocking is activated, the button turns blue.

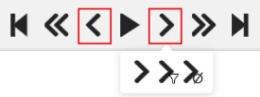

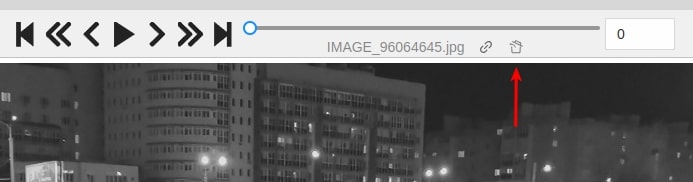

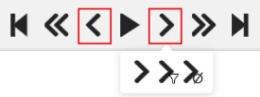

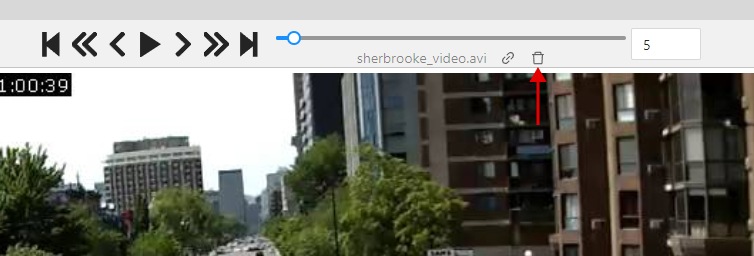

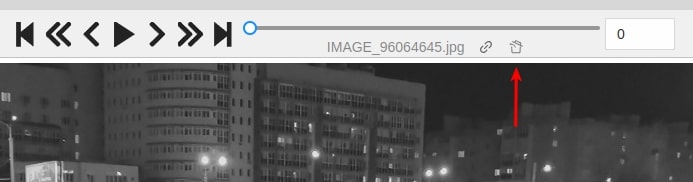

Player

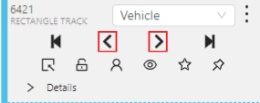

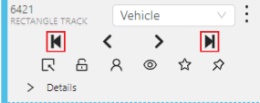

Go to the first/last frame.

Go to the next/previous frame with a predefined step. Shortcuts: V to step backward, C to step forward. By default, the step is 10 frames (change it in Account Menu > Settings > Player Step).

The button to go to the next/previous frame can be customized. To customize it, right-click the button and select one of three options:

- The default option: go to the next/previous frame (the step is 1 frame).
- Go to the next/previous frame that has any objects (in particular, filtered ones). Read the filter section for details on how to use it.
- Go to the next/previous frame without any annotation at all. Use this option when you need to find missed frames quickly.

Shortcuts: D - previous, F - next.
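The three navigation modes, plus the fixed-step jump, can be sketched as follows. The sketch assumes frames are numbered from 0 to `last_frame`, that step jumps are clamped to that range, and that the set of frames containing objects is already known; `step_frame` and `find_frame` are hypothetical helpers.

```python
from typing import Optional, Set


def step_frame(current: int, last_frame: int, step: int = 10, direction: int = 1) -> int:
    """Jump by `step` frames (V/C in the UI), clamped to 0..last_frame."""
    return min(max(current + direction * step, 0), last_frame)


def find_frame(current: int, annotated: Set[int], last_frame: int,
               direction: int = 1, mode: str = "default") -> Optional[int]:
    """Next (direction=1) or previous (direction=-1) frame matching `mode`.

    mode: "default" (any frame), "objects" (frame has objects),
    or "empty" (frame has no annotation at all).
    """
    frame = current + direction
    while 0 <= frame <= last_frame:
        has_objects = frame in annotated
        if (mode == "default"
                or (mode == "objects" and has_objects)
                or (mode == "empty" and not has_objects)):
            return frame
        frame += direction
    return None  # no matching frame in that direction


annotated = {0, 1, 2, 5, 9}
print(find_frame(2, annotated, 9, mode="empty"))    # 3
print(find_frame(2, annotated, 9, mode="objects"))  # 5
print(step_frame(95, 100))                          # 100
```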

Play the sequence of frames or the set of images.

Shortcut: Space (change the playback speed in Account Menu > Settings > Player Speed).

Go to a specific frame. Press ~ to focus on the element.

Fullscreen Player

The fullscreen player mode. The keyboard shortcut is F11.

Info

Open the job info.

Overview:

- Assignee: the user to whom the job is assigned.
- Reviewer: a user assigned to carry out the review; read more in the review section.
- Start Frame: the number of the first frame in this job.
- End Frame: the number of the last frame in this job.
- Frames: the total number of frames in the job.

Annotations statistics:

This table shows the number of created shapes by label (e.g. vehicle, person) and type of annotation (shape, track), as well as the number of manual and interpolated frames.

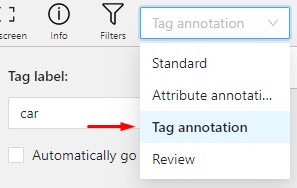

UI switcher

Switching between user interface modes.

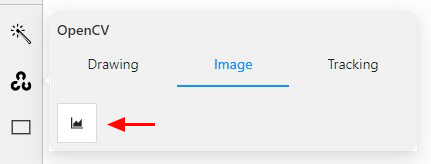

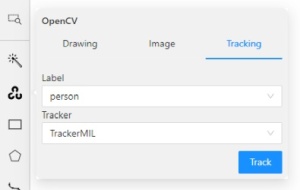

2.1.9 - Controls sidebar

Overview of available functions on the controls sidebar of the annotation tool.

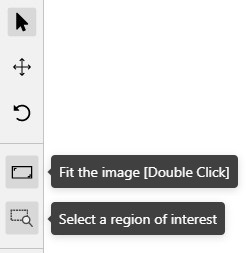

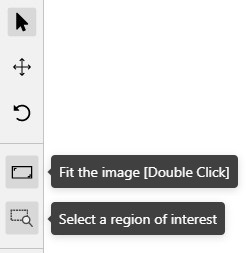

Navigation

Navigation block - contains tools for moving and rotating images.

| Icon | Description |
|---|---|
| | Cursor (Esc): a basic annotation editing tool. |
| | Move the image: a tool for moving around the image without the possibility of editing. |
| | Rotate: two buttons to rotate the current frame clockwise (Ctrl+R) and anticlockwise (Ctrl+Shift+R). You can enable Rotate all images in the settings to rotate all the images in the job. |

Zoom

Zoom block - contains tools for image zoom.

| Icon | Description |
|---|---|
| | Fit image: fits the image into the workspace size. Shortcut: double-click on an image. |
| | Select a region of interest: zooms in on a selected region. You can use this tool to quickly zoom in on a specific part of the frame. |

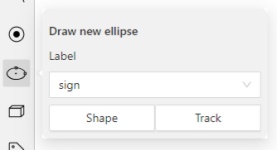

Shapes

Shapes block - contains all the tools for creating shapes.

Edit

Edit block - contains tools for editing tracks and shapes.

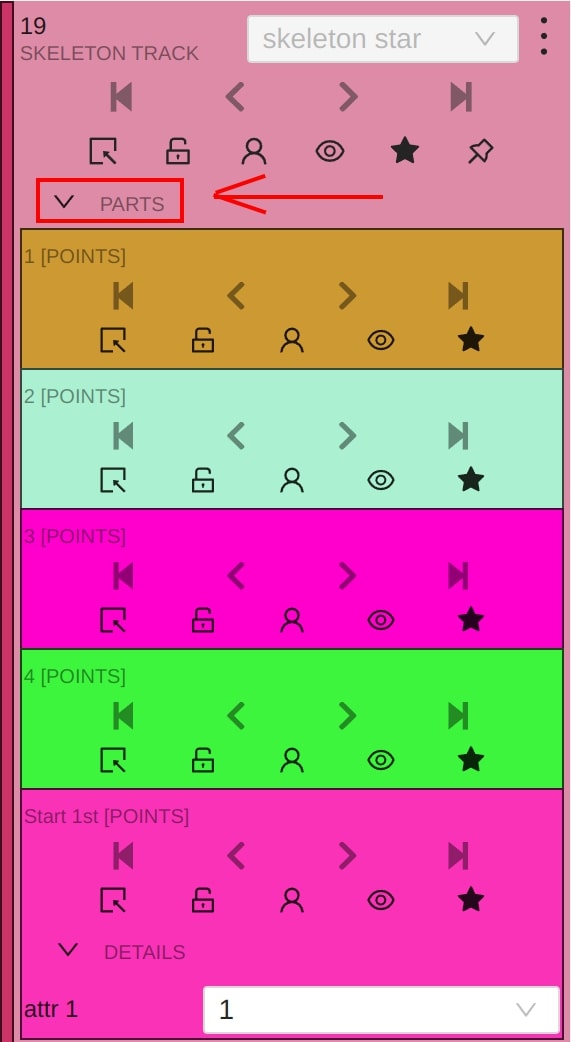

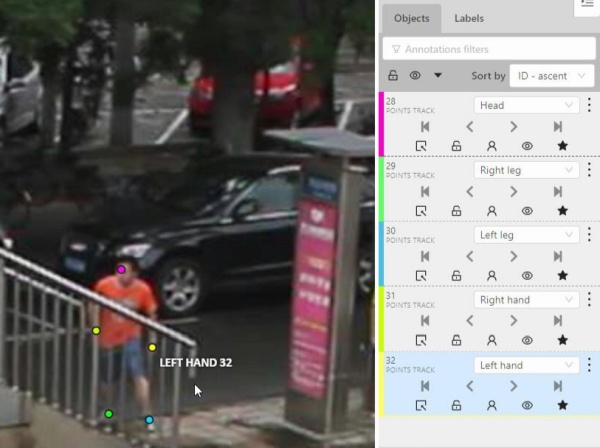

2.1.10 - Objects sidebar

Overview of available functions on the objects sidebar of the annotation tool.

Hide: the button hides the objects sidebar.

Objects

Filter input box

How to use filters is described in the advanced guide here.

List of objects

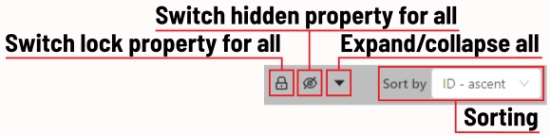

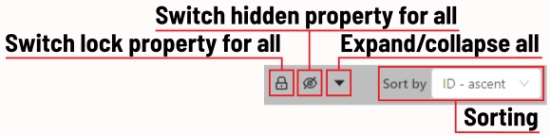

- Switch lock property for all - switches lock property of all objects in the frame.

- Switch hidden property for all - switches hide property of all objects in the frame.

- Expand/collapse all - collapses/expands the details field of all objects in the frame.

- Sorting: sorts the list of objects by updated time, ID ascending, or ID descending.

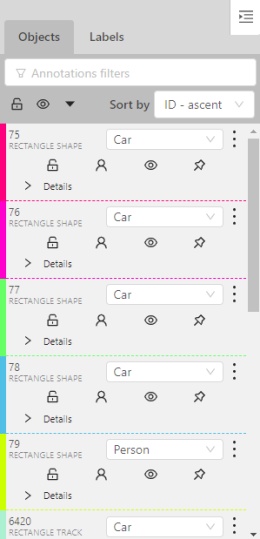

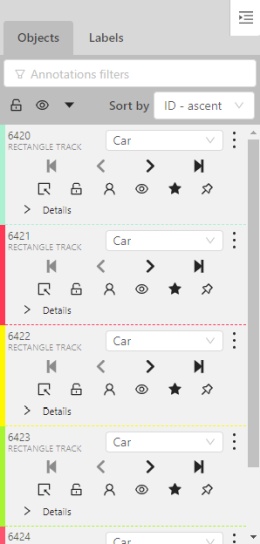

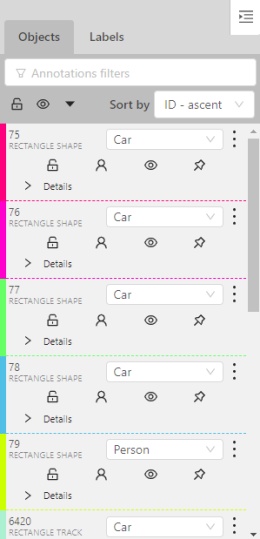

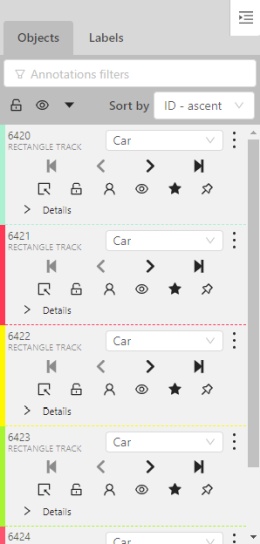

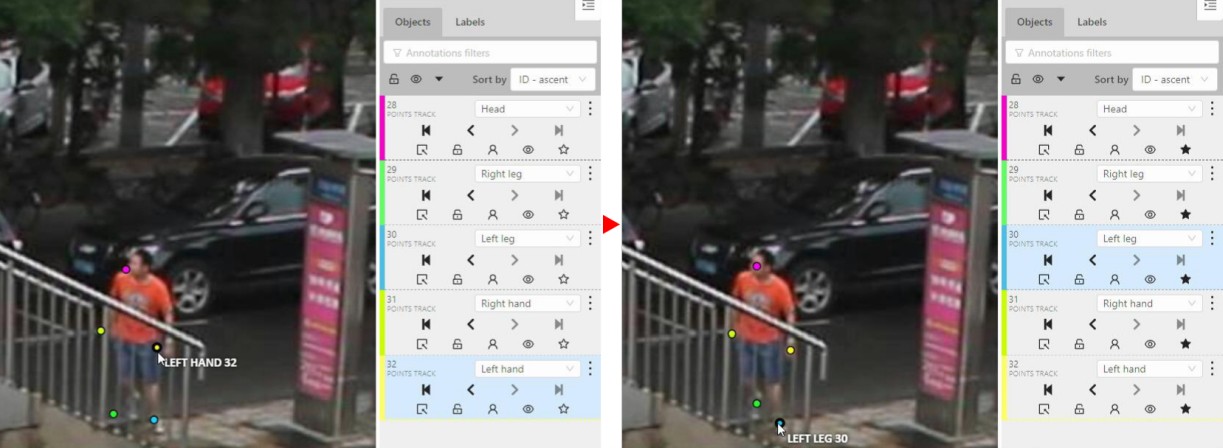

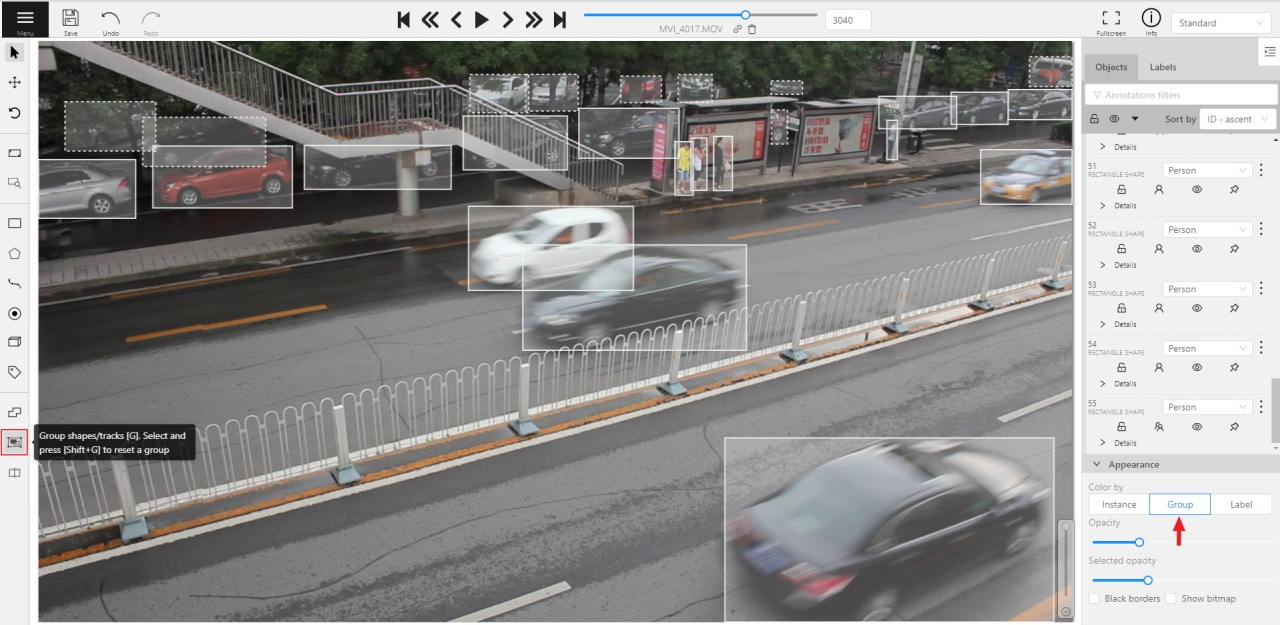

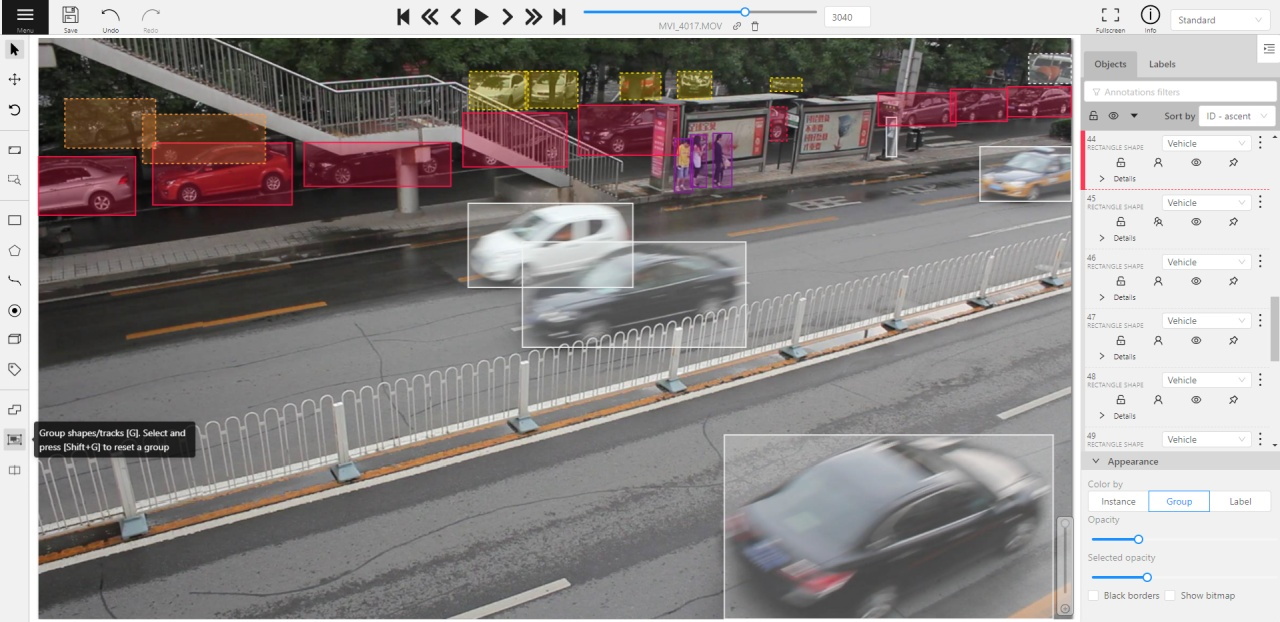

In the objects sidebar, you can see the list of available objects on the current frame. The following figure is an example of how the list might look:

Figure: the objects list in Shape mode and in Track mode.

Objects on the sidebar

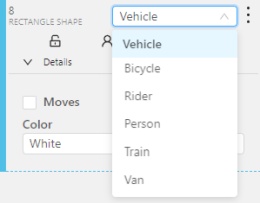

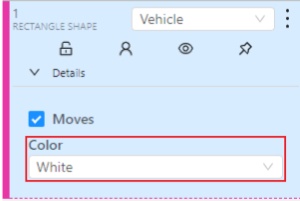

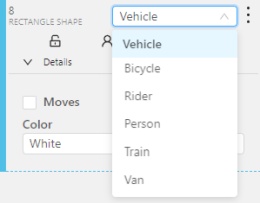

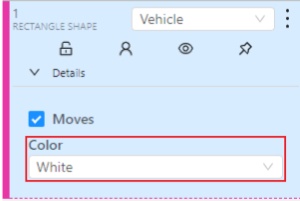

The type of a shape can be changed by selecting the Label property.

For instance, it can look like the figure below:

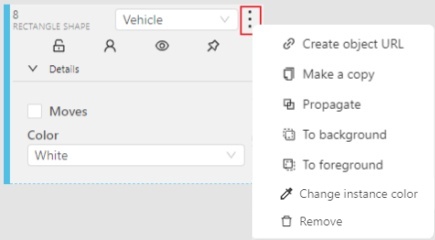

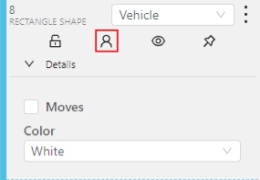

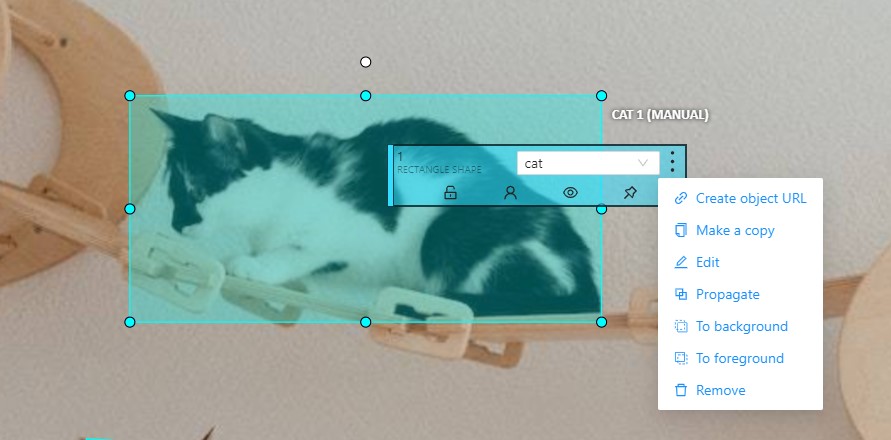

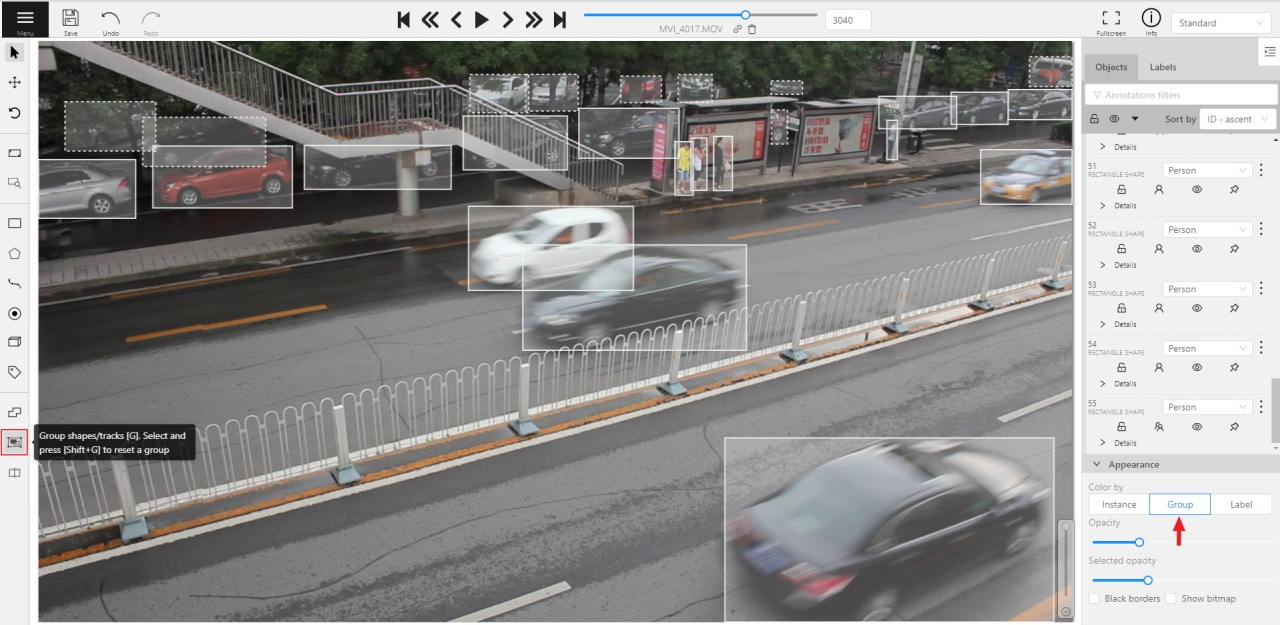

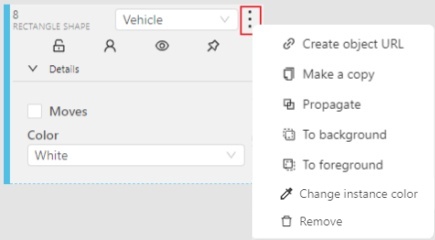

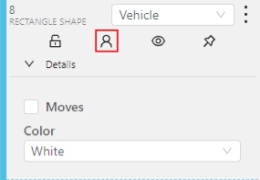

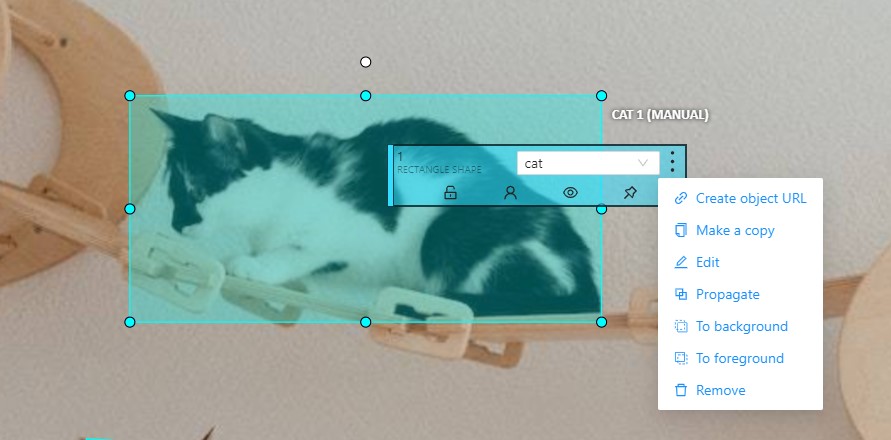

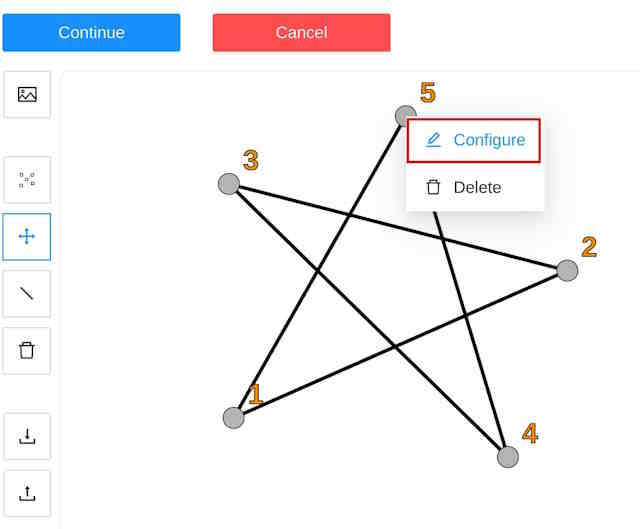

Object action menu

The action menu is opened with the button:

The action menu contains:

- Create object URL: puts a link to the object on the clipboard. After you open the link, this object will be filtered.
- Make a copy: copies an object. The keyboard shortcut is Ctrl+C, Ctrl+V.

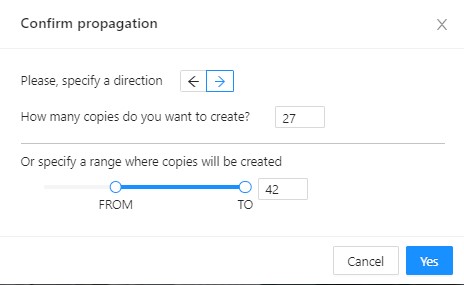

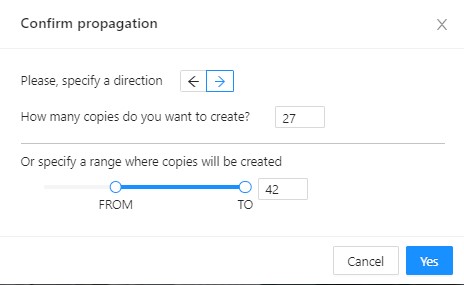

- Propagate: copies the shape to several frames. It opens a dialog box in which you can specify the number of copies or the frame onto which you want to copy the object. The keyboard shortcut is Ctrl+B.
- To background: moves the object to the background. The keyboard shortcuts are - and _.
- To foreground: moves the object to the foreground. The keyboard shortcuts are + and =.

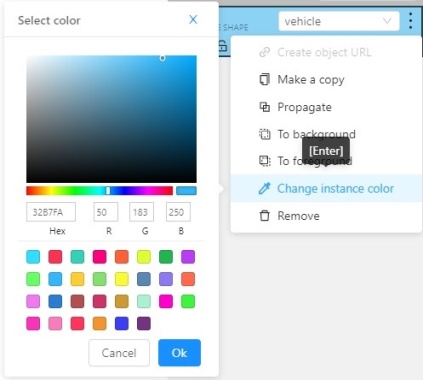

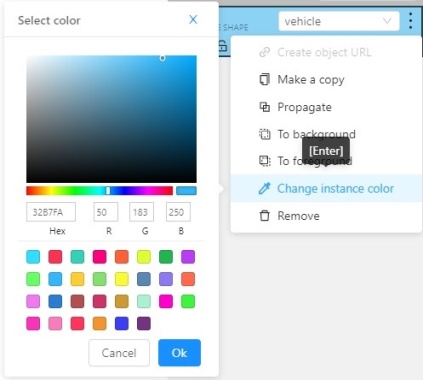

- Change instance color - choose a color using the color picker (available only when Color By is set to Instance).

- Remove - removes the object. Keyboard shortcuts: Del or Shift+Del.
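The To background and To foreground actions can be pictured as changing a per-shape z-order, where shapes with a higher value are drawn on top. The sketch below is a hypothetical model for illustration only (`Shape`, `to_background`, `to_foreground` and `drawing_order` are not CVAT names), assuming the object is placed just below or just above every other shape on the frame.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Shape:
    """Hypothetical shape with a z-order; higher values are drawn on top."""
    id: int
    z_order: int


def to_background(shape: Shape, frame_shapes: List[Shape]) -> None:
    # Place the shape below every shape on the frame.
    shape.z_order = min(s.z_order for s in frame_shapes) - 1


def to_foreground(shape: Shape, frame_shapes: List[Shape]) -> None:
    # Place the shape above every shape on the frame.
    shape.z_order = max(s.z_order for s in frame_shapes) + 1


def drawing_order(frame_shapes: List[Shape]) -> List[int]:
    """IDs in painting order: background first, foreground last."""
    return [s.id for s in sorted(frame_shapes, key=lambda s: s.z_order)]


frame = [Shape(1, 0), Shape(2, 1), Shape(3, 2)]
to_background(frame[2], frame)
print(drawing_order(frame))  # [3, 1, 2]
```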

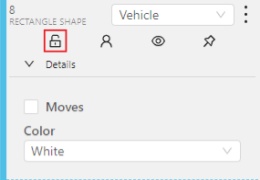

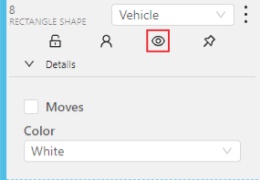

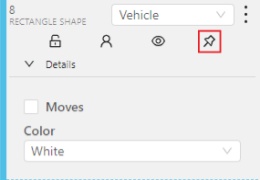

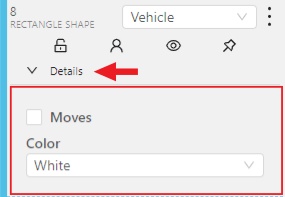

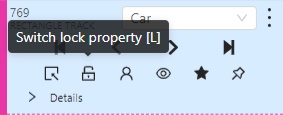

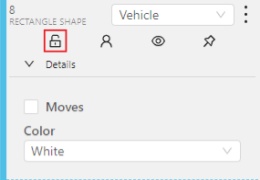

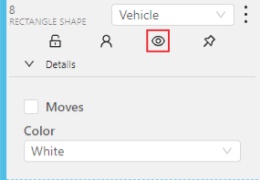

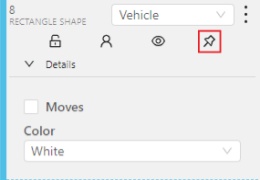

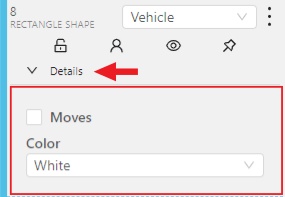

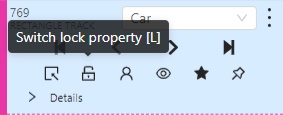

A shape can be locked to prevent accidental modification or movement. Shortcut to lock an object: L.

A shape can be marked as Occluded (shortcut: Q). Occluded shapes are drawn with dashed boundaries.

You can change the way an object is displayed on a frame (show or hide).

Switch pinned property - when enabled, the shape cannot be moved by dragging.

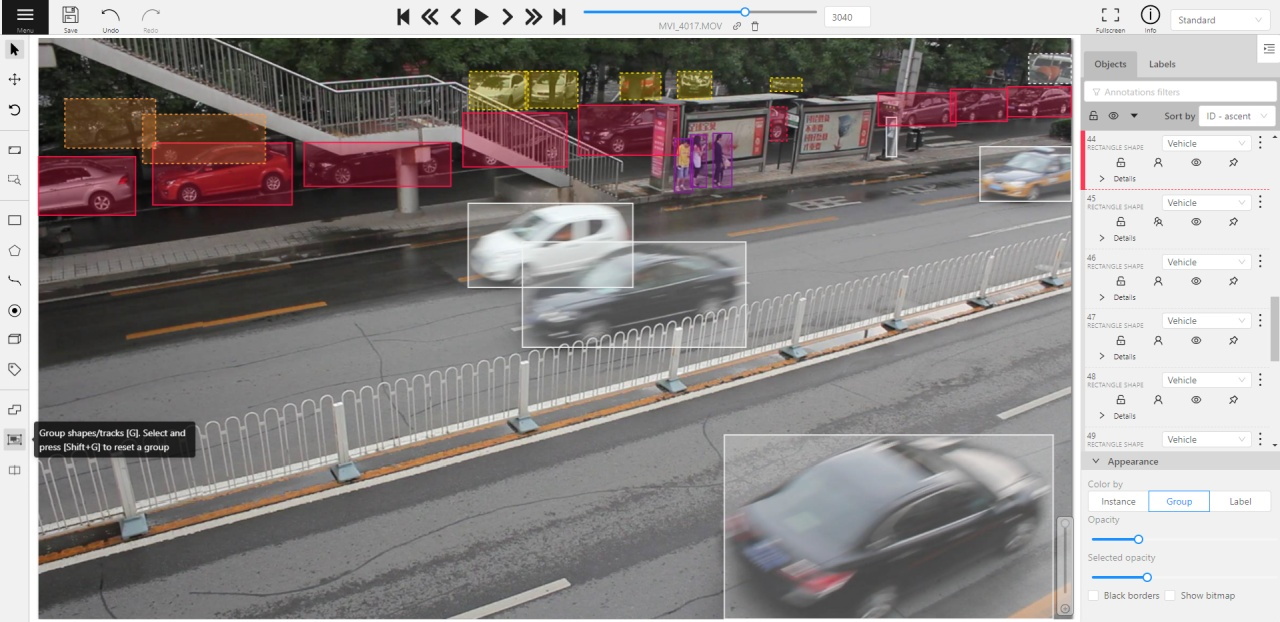

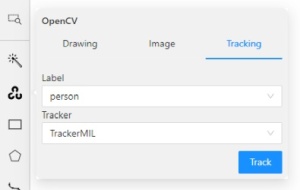

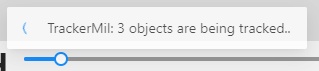

Tracker switcher - enable/disable tracking for the object.

By clicking on the Details button you can collapse or expand the field with all the attributes of the object.

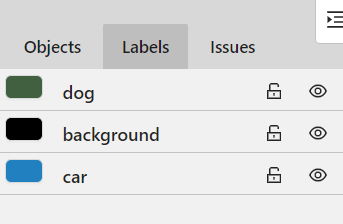

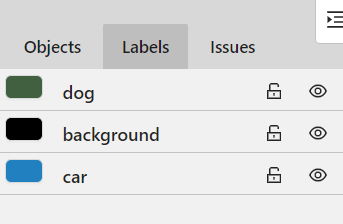

Labels

In this tab you can lock or hide objects of a certain label.

To change the color of a specific label, go to the task page and select the color by clicking the edit button. This changes the label color for all jobs in the task.

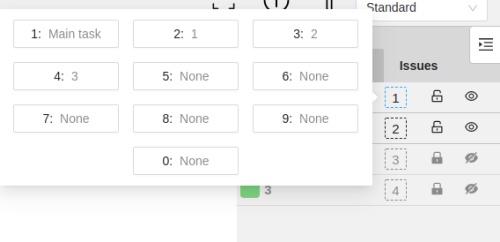

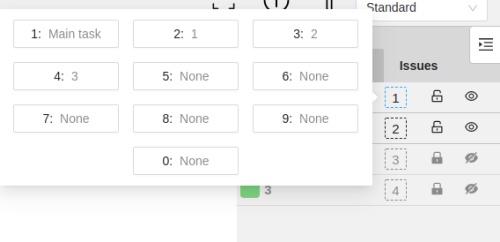

Fast label change

You can change the label of an object using hotkeys. To do this, you need to assign a number (from 0 to 9) to labels. By default, the numbers 1, 2, …, 9, 0 are assigned to the first ten labels. To assign a number, click the button to the right of a label name on the sidebar.

After that, you can assign the corresponding label to an object by hovering the mouse cursor over it and pressing Ctrl + Num(0..9).

If the cursor is not over an object, pressing Ctrl + Num(0..9) sets the chosen label as the default, so the next object you create (N key) is automatically assigned this label.
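The default digit assignment and the two behaviors of Ctrl + Num(0..9) described above can be sketched as follows. The function names and the dictionary-based objects are hypothetical; this only models the documented behavior and is not CVAT's implementation.

```python
from typing import Dict, List, Optional


def default_label_keys(labels: List[str]) -> Dict[int, str]:
    """Map the digits 1, 2, ..., 9, 0 to the first ten labels."""
    # label index 0 -> key 1, ..., index 8 -> key 9, index 9 -> key 0
    return {(i + 1) % 10: label for i, label in enumerate(labels[:10])}


def on_ctrl_digit(digit: int, keys: Dict[int, str],
                  hovered: Optional[dict], state: dict) -> None:
    """Handle Ctrl + <digit>: relabel the hovered object or set the default label."""
    label = keys.get(digit)
    if label is None:
        return  # no label is bound to this digit
    if hovered is not None:
        hovered["label"] = label        # relabel the object under the cursor
    else:
        state["default_label"] = label  # used for the next object created with N


keys = default_label_keys(["car", "person", "bicycle"])
print(keys)  # {1: 'car', 2: 'person', 3: 'bicycle'}
state = {}
on_ctrl_digit(2, keys, None, state)
print(state["default_label"])  # person
```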

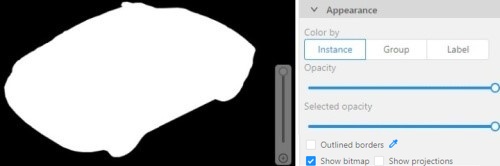

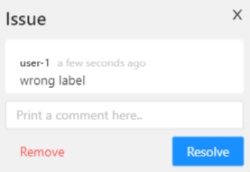

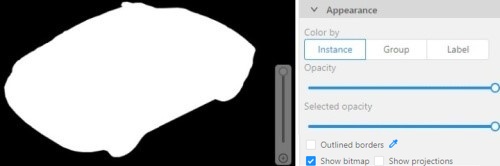

Appearance

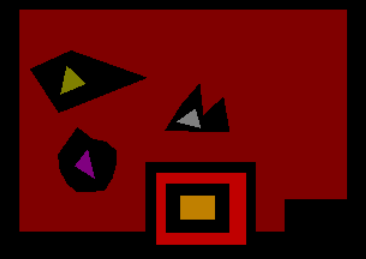

Color By options

Change the color scheme of the annotation:

- Instance - every shape has a random color.

- Group - every group of shapes has its own random color; ungrouped shapes are white.

- Label - every label (e.g. car, person) has its own random color.

You can change any random color by pointing to the needed box on a frame or in the objects sidebar.

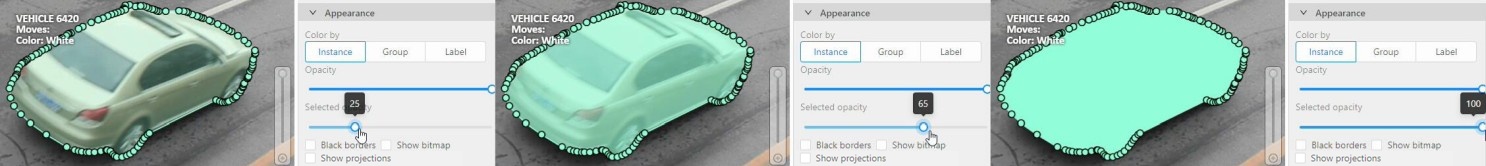

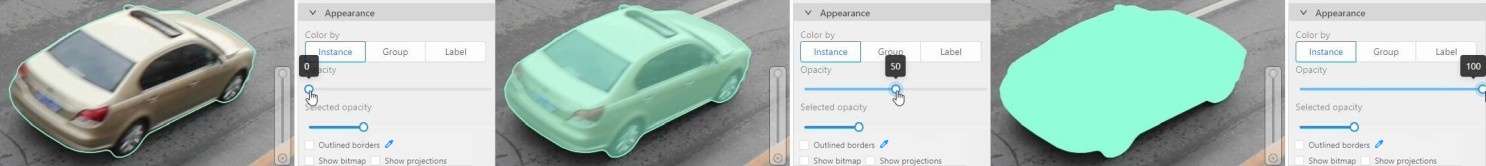

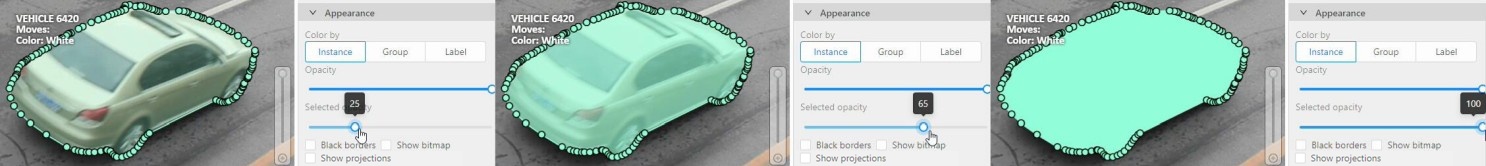

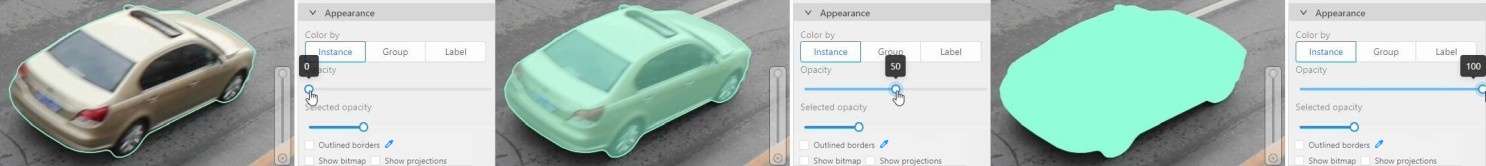

Fill Opacity slider

Change the opacity of every shape in the annotation.

Selected Fill Opacity slider

Change the opacity of the selected object's fill. For rectangles, polygons, and cuboids, the opacity can also be changed while drawing the object.

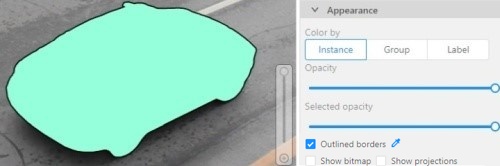

Outlines borders checkbox

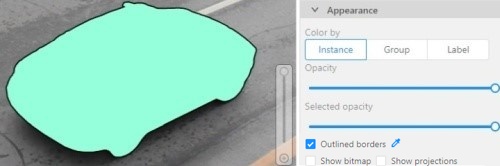

You can change a special shape border color by clicking on the Eyedropper icon.

Show bitmap checkbox

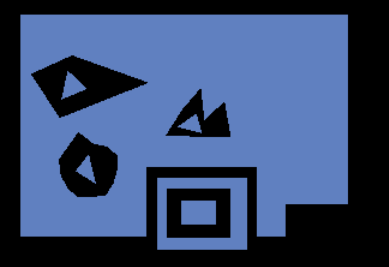

If enabled, all shapes are displayed in white and the background is black.

Show projections checkbox

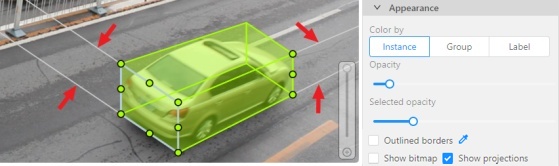

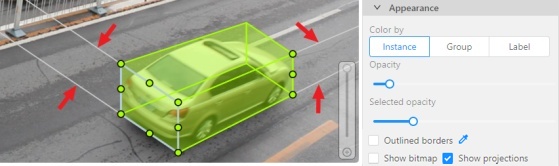

Enables or disables the display of auxiliary perspective lines. Only relevant for cuboids.

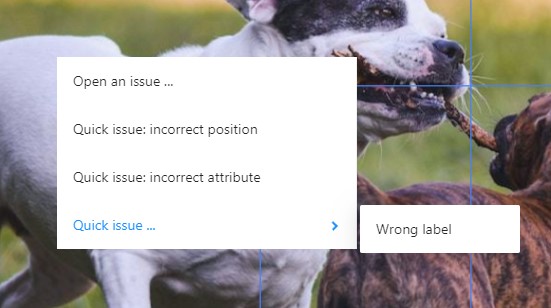

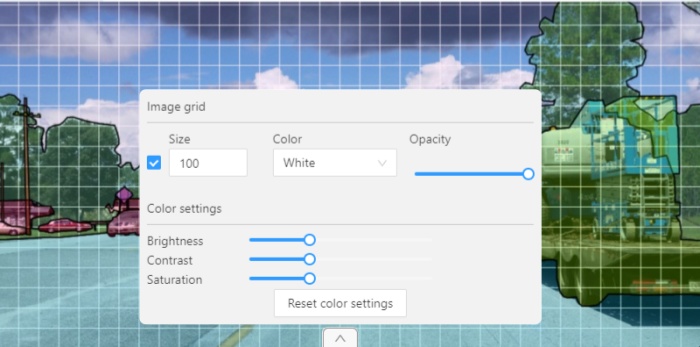

2.1.11 - Workspace

Overview of available functions on the workspace of the annotation tool.

This is the main field in which drawing and editing objects takes place.

In addition, the workspace has the following functions:

- Right-clicking on an object calls up the Object card. This element contains the controls for changing the label and attributes of the object, as well as the action menu.

- Right-clicking a point deletes it.

- Z-axis slider - allows you to switch between annotation layers, hiding the upper layers (the slider is enabled if there are several z layers on a frame). This element has a button for adding a new layer: when pressed, a new layer is added and the view switches to it. You can move objects between layers using the + and - keys.

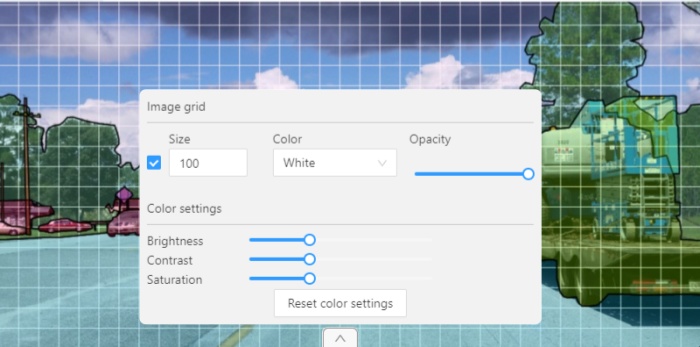

- Image settings panel - used to set up the grid and the image brightness, contrast, and saturation:

  - Show Grid, change the grid size, and choose the grid color and transparency.

  - Adjust the Brightness/Contrast/Saturation of overexposed or too dark images using F3 (color settings). These settings change only how the image is displayed, not the image itself. Shortcuts:

    - Shift+B+=/Shift+B+- for brightness.

    - Shift+C+=/Shift+C+- for contrast.

    - Shift+S+=/Shift+S+- for saturation.

  - Reset color settings to default values.
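The point that color settings change only how an image is displayed can be shown with a per-pixel sketch. It is modeled on the CSS `brightness()`, `contrast()` and `saturate()` filter functions from the Filter Effects specification, applied in that order to channel values in the range 0..1. Treating CVAT's sliders as exactly these formulas is an assumption for illustration, and `display_pixel` is a hypothetical name.

```python
from typing import Tuple

RGB = Tuple[float, float, float]  # channel values in 0..1


def _clamp(c: float) -> float:
    return min(1.0, max(0.0, c))


def display_pixel(rgb: RGB, brightness: float = 1.0,
                  contrast: float = 1.0, saturation: float = 1.0) -> RGB:
    """Return the displayed color; the stored pixel itself is never modified."""
    # brightness(): multiply every channel by the amount
    r, g, b = (_clamp(c * brightness) for c in rgb)
    # contrast(): scale the distance from mid-gray (0.5)
    r, g, b = (_clamp((c - 0.5) * contrast + 0.5) for c in (r, g, b))
    # saturate(): color matrix from the Filter Effects specification
    s = saturation
    r2 = (0.213 + 0.787 * s) * r + (0.715 - 0.715 * s) * g + (0.072 - 0.072 * s) * b
    g2 = (0.213 - 0.213 * s) * r + (0.715 + 0.285 * s) * g + (0.072 - 0.072 * s) * b
    b2 = (0.213 - 0.213 * s) * r + (0.715 - 0.715 * s) * g + (0.072 + 0.928 * s) * b
    return (_clamp(r2), _clamp(g2), _clamp(b2))


pixel = (0.2, 0.4, 0.6)
shown = display_pixel(pixel, brightness=1.2, contrast=1.1)
print(shown, pixel)  # the original tuple is unchanged
```

With all three amounts at 1.0 the pixel is shown as stored, which corresponds to the reset-to-default action.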

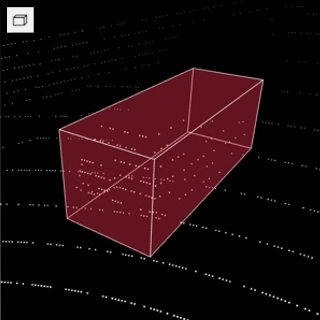

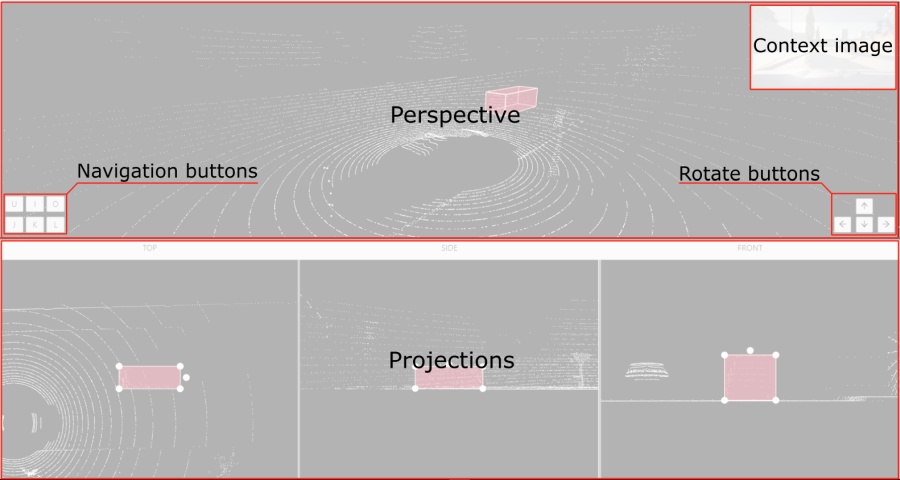

2.1.12 - 3D task workspace

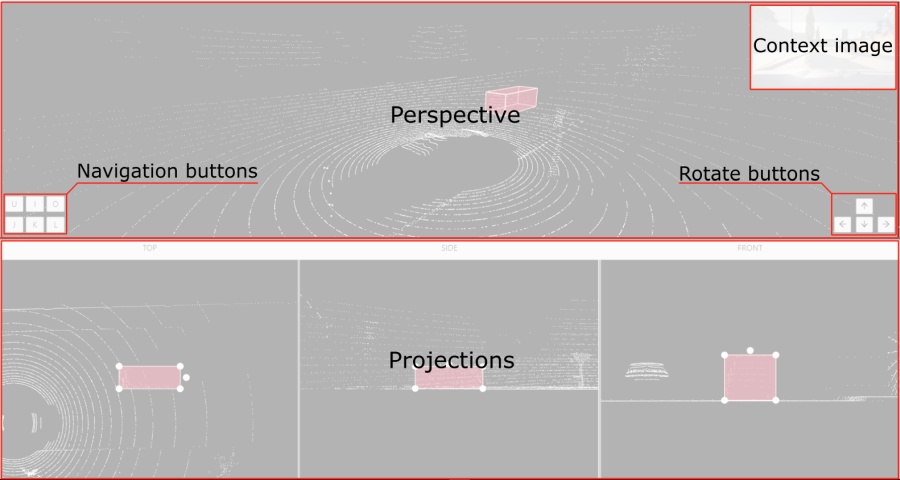

If the related_images folder contains any images, a context image will be available in the perspective window.

The context image can be compared with the 3D data and helps identify the labels of marked objects.

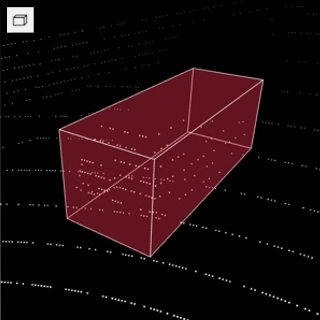

Perspective - the main window for working with objects in a 3D task.

Projections - projections are tied to an object so that a cuboid is in the center and looks like a rectangle.

Projections show only the selected object.

- Top - a projection of the view from above.
- Side - a projection of the left side of the object.
- Front - a frontal projection of the object.

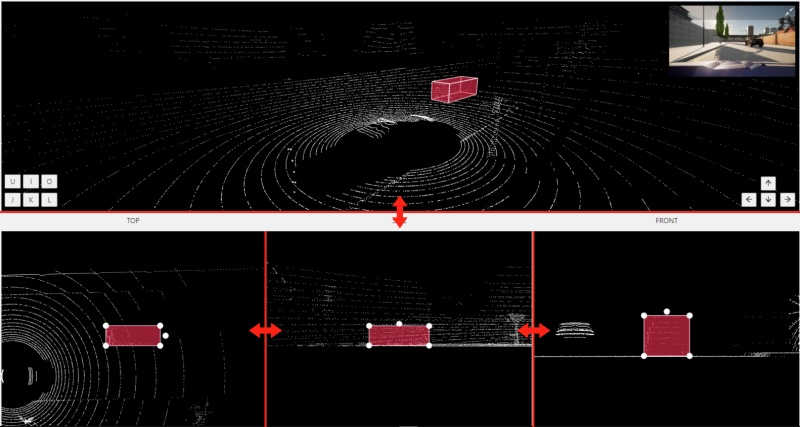

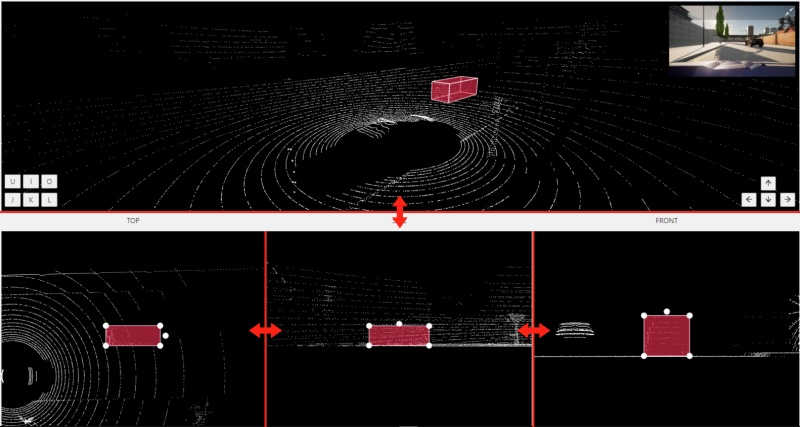

2.1.13 - Standard 3D mode (basics)

Standard 3D mode is designed to work with 3D data.

The mode is automatically available if you add PCD or KITTI BIN format data when you create a task (read more).

You can adjust the size of the projections by dragging the boundary between them.

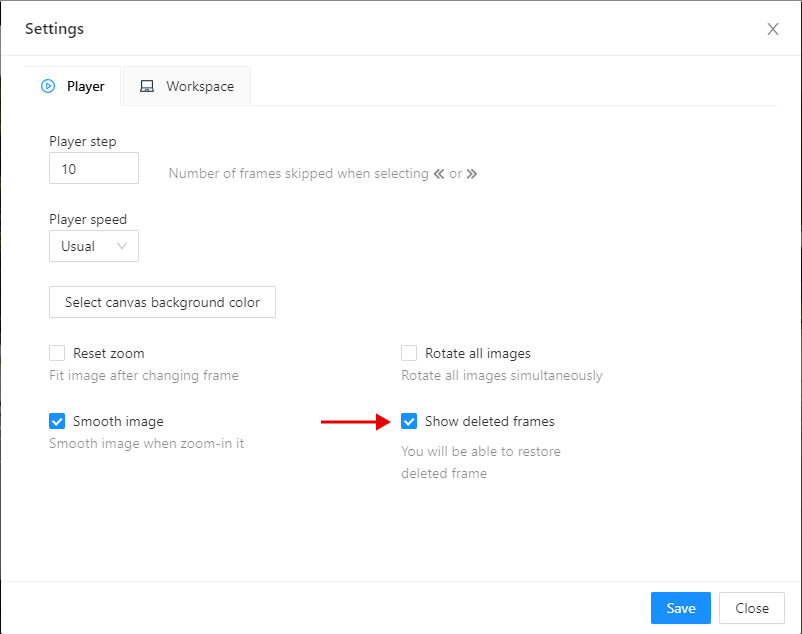

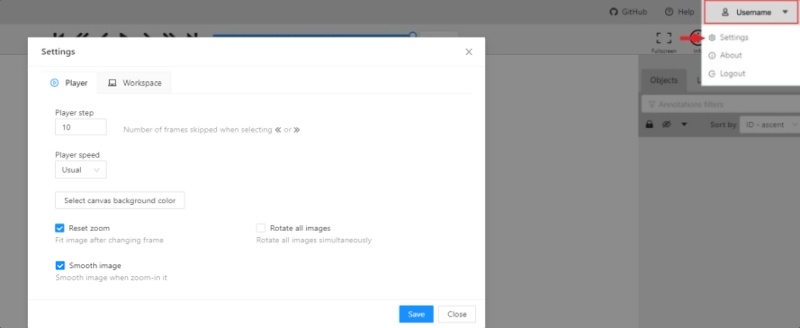

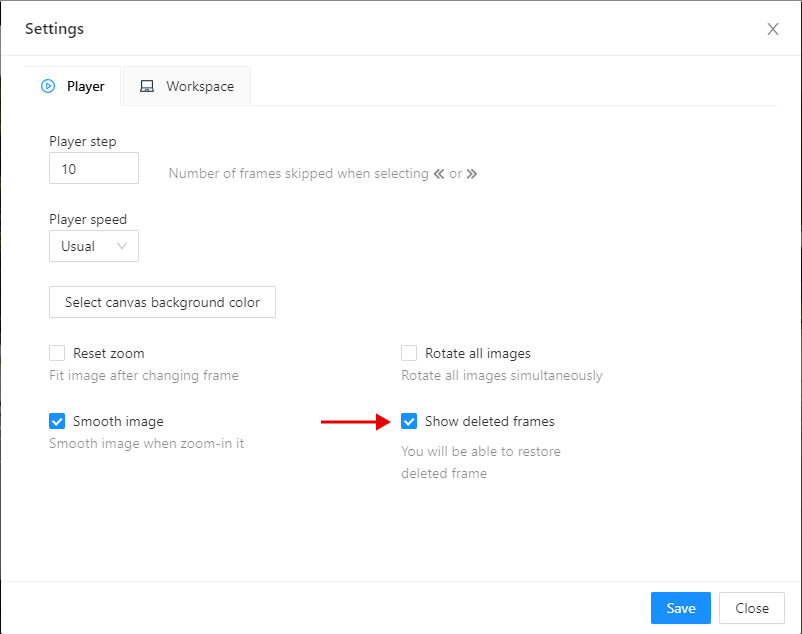

2.1.14 - Settings

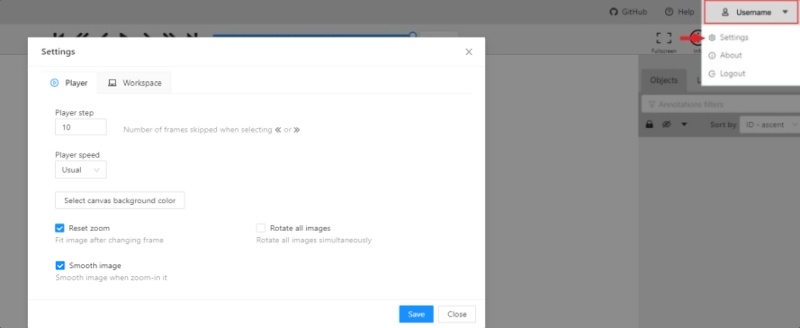

To open the settings, open the user menu in the header and select Settings, or press F2.

Settings have two tabs:

In the Player tab you can:

- Control the step of the C and V shortcuts.

- Control the speed of the Space/Play button.

- Select the canvas background color. You can choose a background color or enter it manually (in RGB or HEX format).

- Reset zoom - show every image in full size or zoomed out like the previous one (enabled by default for interpolation mode and disabled for annotation mode).

- Rotate all images checkbox - switch between rotating all frames or only an individual frame.

- Smooth image checkbox - smooth the image when zooming in.

Smoothed vs. pixelized image

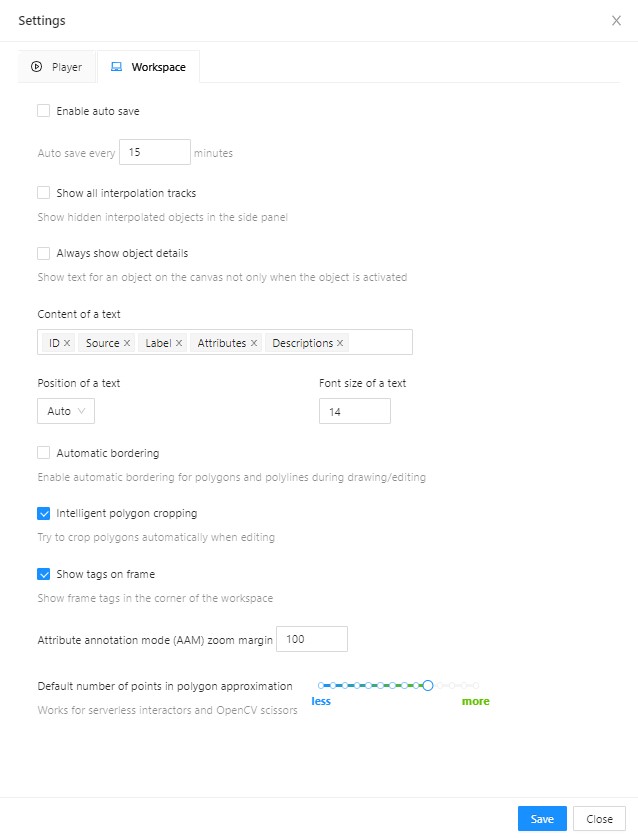

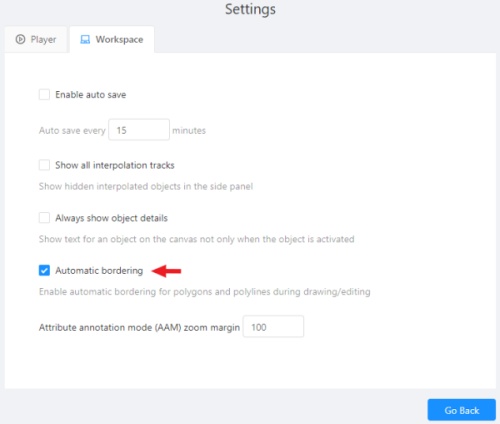

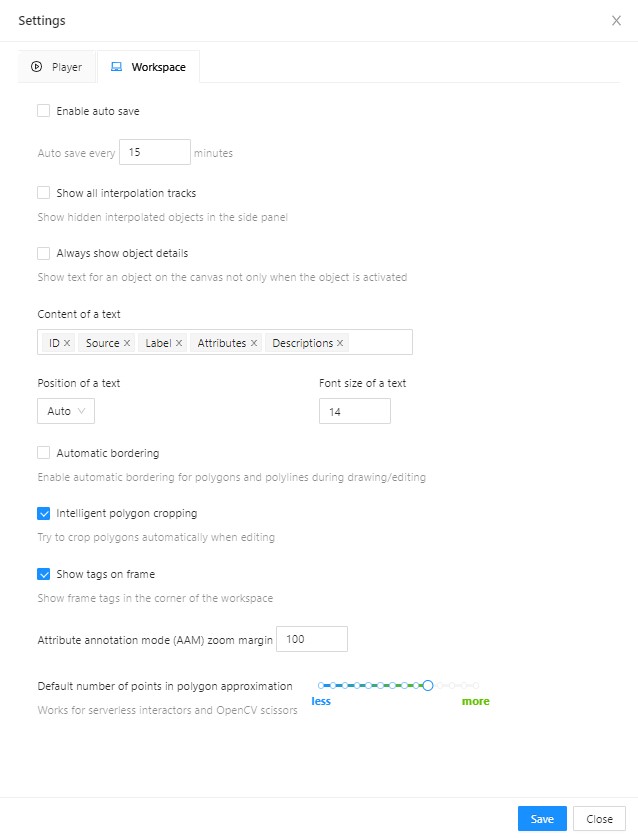

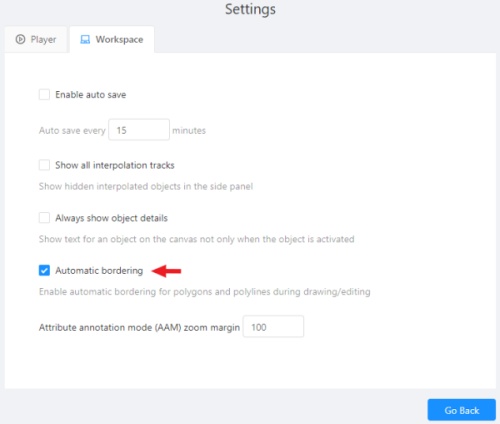

In the Workspace tab you can:

- Enable auto save checkbox - turned off by default.

- Auto save interval (min) input box - 15 minutes by default.

- Show all interpolation tracks checkbox - shows hidden objects on the side panel for every interpolated object (turned off by default).

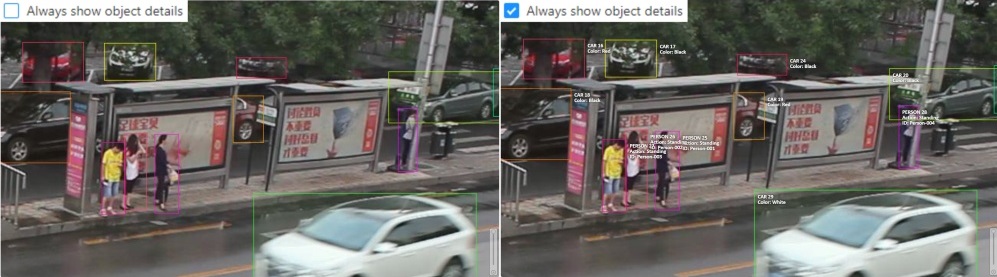

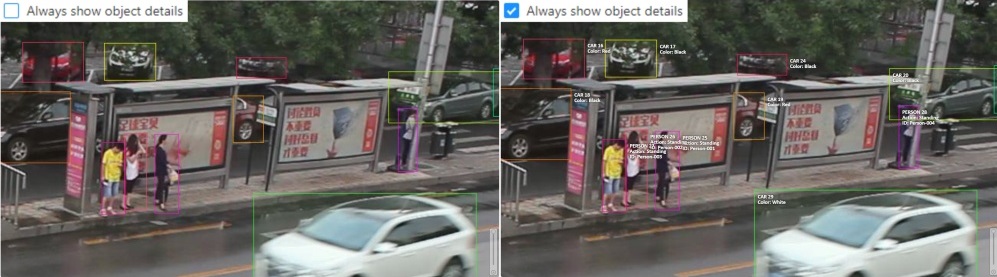

- Always show object details - show text for an object on the canvas not only when the object is activated:

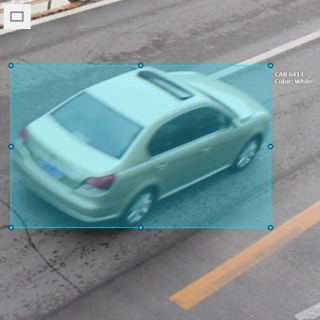

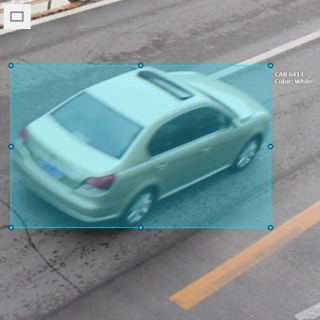

  - Content of a text - set up the composition of the object details:

    - ID - object identifier.
    - Attributes - attributes of the object.
    - Label - object label.
    - Source - how the object was created: MANUAL, AUTO, or SEMI-AUTO.
    - Descriptions - description of attributes.

  - Position of a text - text positioning mode:

    - Auto - the object details are automatically placed where there is free space.
    - Center - the object details are embedded in the corresponding object, if possible.

  - Font size of a text - specifies the text size of the object details.

- Automatic bordering - enable automatic bordering for polygons and polylines during drawing/editing. To find out more, go to the section annotation with polygons.

- Intelligent polygon cropping - activates intelligent cropping when editing the polygon (read more in the section edit polygon).

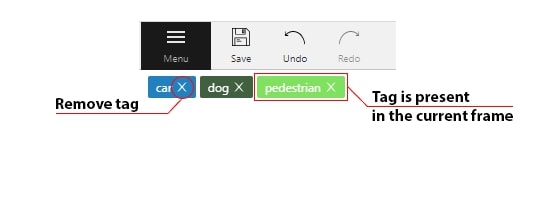

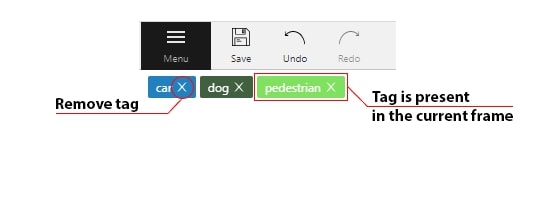

- Show tags on frame - shows/hides frame tags on the current frame.

- Attribute annotation mode (AAM) zoom margin input box - defines margins (in px) for the shape in the attribute annotation mode.

- Control points size - defines the size of any interactable points in the tool (polygon vertices, rectangle dragging points, etc.).

- Default number of points in polygon approximation - with this setting, you can choose the default number of points in a polygon. Works for serverless interactors and OpenCV scissors.

Click Save to save the settings (the settings are saved on the server and do not change after the page is refreshed). Click Cancel or press F2 to return to the annotation.

2.1.15 - Types of shapes

List of shapes available for annotation.

There are several shapes with which you can annotate your images:

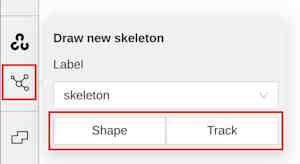

- Rectangle or Bounding box
- Polygon
- Polyline
- Points
- Ellipse
- Cuboid
- Cuboid in 3D task
- Skeleton
- Tag

And this is how they all look:

Tag - has no shape in the workspace, but is displayed in the objects sidebar.

2.1.16 - Shape mode (basics)

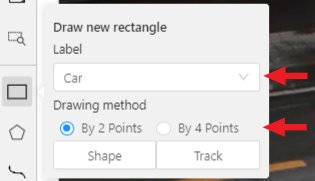

Usage examples and basic operations available during annotation in shape mode.

Usage examples:

- Create new annotations for a set of images.

- Add/modify/delete objects for existing annotations.

-

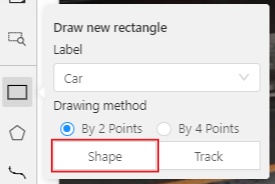

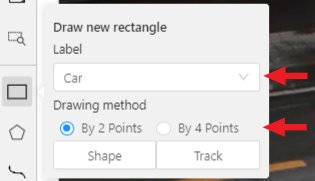

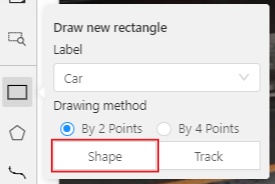

You need to select Rectangle on the controls sidebar:

Before you start, select the correct Label (should be specified by you when creating the task)

and Drawing Method (by 2 points or by 4 points):

-

Creating a new annotation in Shape mode:

-

Create a separate Rectangle by clicking on Shape.

-

Choose the opposite points. Your first rectangle is ready!

-

To learn more about creating a rectangle read here.

-

It is possible to adjust boundaries and location of the rectangle using a mouse.

Rectangle’s size is shown in the top right corner , you can check it by clicking on any point of the shape.

You can also undo your actions using Ctrl+Z and redo them with Shift+Ctrl+Z or Ctrl+Y.

-

You can see the Object card in the objects sidebar or open it by right-clicking on the object.

You can change the attributes in the details section.

You can perform basic operations or delete an object by clicking on the action menu button.

-

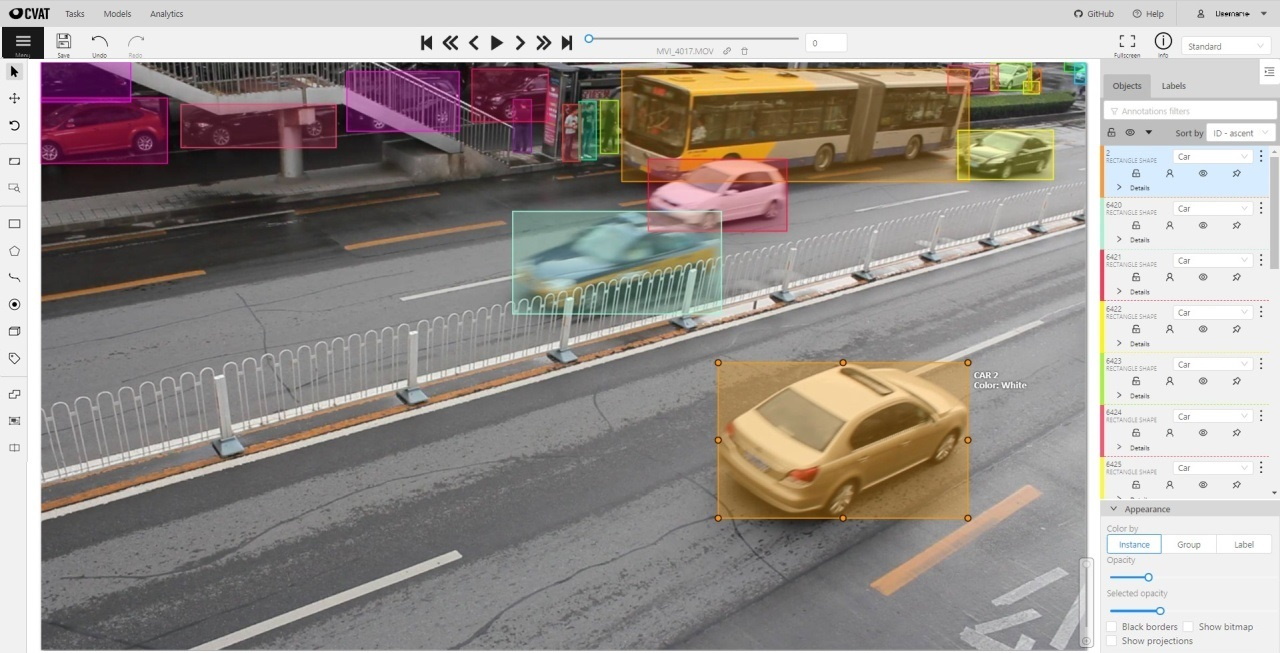

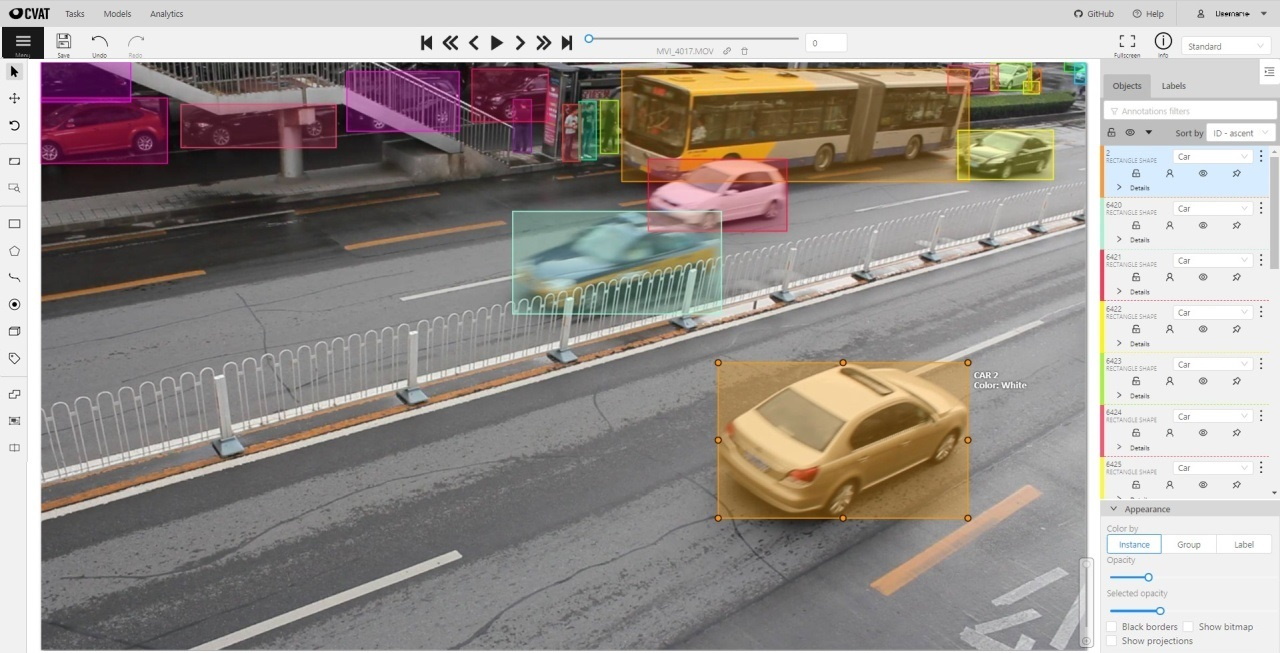

The following figure is an example of a fully annotated frame with separate shapes.

Read more in the section shape mode (advanced).

2.1.17 - Track mode (basics)

Usage examples and basic operations available during annotation in track mode.

Usage examples:

- Create new annotations for a sequence of frames.

- Add/modify/delete objects for existing annotations.

- Edit tracks, merge several rectangles into one track.

-

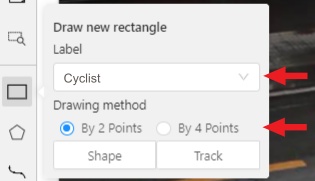

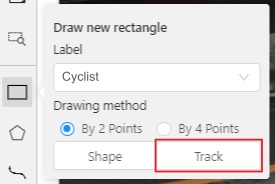

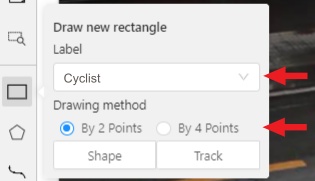

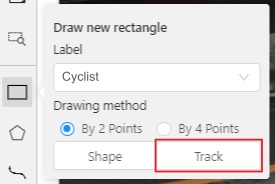

Like in the Shape mode, you need to select a Rectangle on the sidebar,

in the appearing form, select the desired Label and the Drawing method.

-

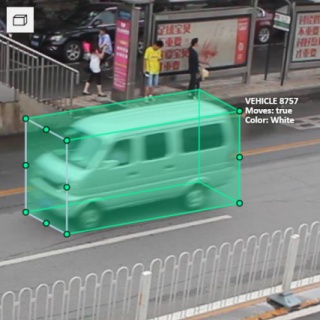

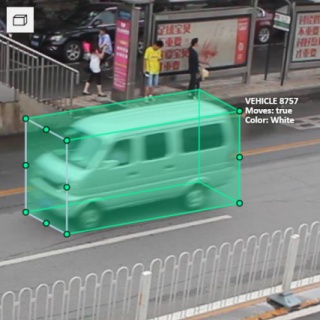

Creating a track for an object (look at the selected car as an example):

-

Create a Rectangle in Track mode by clicking on Track.

-

In Track mode the rectangle will be automatically interpolated on the next frames.

-

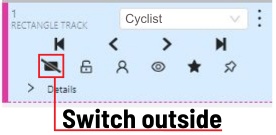

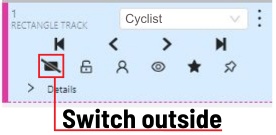

The cyclist starts moving on frame #2270. Let’s mark the frame as a key frame.

You can press K for that or click the star button (see the screenshot below).

-

If the object starts to change its position, you need to modify the rectangle where it happens.

It isn’t necessary to change the rectangle on each frame, simply update several keyframes

and the frames between them will be interpolated automatically.

-

Let’s jump 30 frames forward and adjust the boundaries of the object. See an example below:

-

After that the rectangle of the object will be changed automatically on frames 2270 to 2300:

-

When the annotated object disappears or becomes too small, you need to

finish the track. You have to choose Outside Property, shortcut O.

-

If the object isn’t visible on a couple of frames and then appears again,

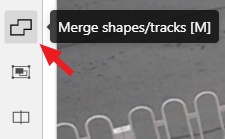

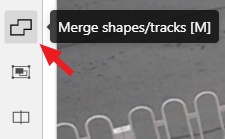

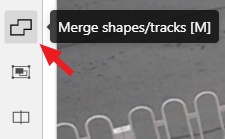

you can use the Merge feature to merge several individual tracks

into one.

-

Create tracks for moments when the cyclist is visible:

-

Click Merge button or press key M and click on any rectangle of the first track

and on any rectangle of the second track and so on:

-

Click Merge button or press M to apply changes.

-

The final annotated sequence of frames in Interpolation mode can

look like the clip below:

Read more in the section track mode (advanced).
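The automatic interpolation between keyframes in Track mode can be sketched as linear interpolation of the rectangle's corner coordinates, which is how the Vocabulary describes interpolation mode. The helper below is hypothetical (`interpolate` is not a CVAT function): it handles only rectangles, ignores the Outside property, and keeps the last keyframe's box after the final keyframe.

```python
from bisect import bisect_right
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def interpolate(keyframes: Dict[int, Box], frame: int) -> Box:
    """Linearly interpolate a rectangle between the surrounding keyframes."""
    frames = sorted(keyframes)
    if frame < frames[0]:
        raise ValueError("the track starts after this frame")
    if frame >= frames[-1]:
        return keyframes[frames[-1]]  # the box stays where the last keyframe put it
    i = bisect_right(frames, frame)
    f0, f1 = frames[i - 1], frames[i]
    t = (frame - f0) / (f1 - f0)  # 0.0 at f0, 1.0 at f1
    b0, b1 = keyframes[f0], keyframes[f1]
    return tuple(a + (b - a) * t for a, b in zip(b0, b1))


# Two keyframes 30 frames apart, as in the cyclist example (coordinates invented).
keyframes = {2270: (100, 200, 150, 260), 2300: (160, 200, 210, 260)}
print(interpolate(keyframes, 2285))  # (130.0, 200.0, 180.0, 260.0)
```

Only the keyframes are stored; every in-between box is computed, which is why adjusting a few keyframes is enough.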

2.1.18 - 3D Object annotation (basics)

Overview of basic operations available when annotating 3D objects.

Navigation

To move in 3D space you can use several methods:

You can move around by pressing the corresponding keys:

- To rotate the camera, use Shift+Arrow Up/Shift+Arrow Down/Shift+Arrow Left/Shift+Arrow Right.

- To move left/right, use Alt+J/Alt+L.

- To move up/down, use Alt+U/Alt+O.

- To zoom in/out, use Alt+K/Alt+I.

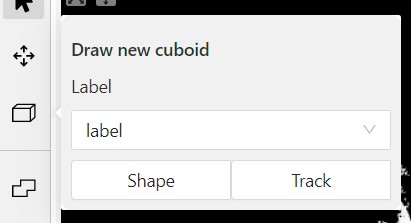

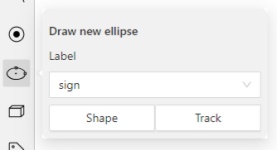

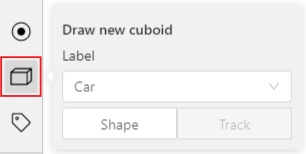

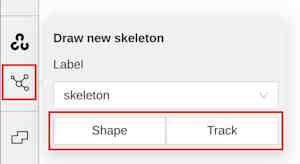

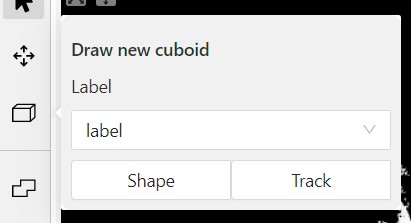

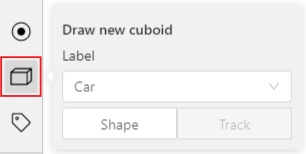

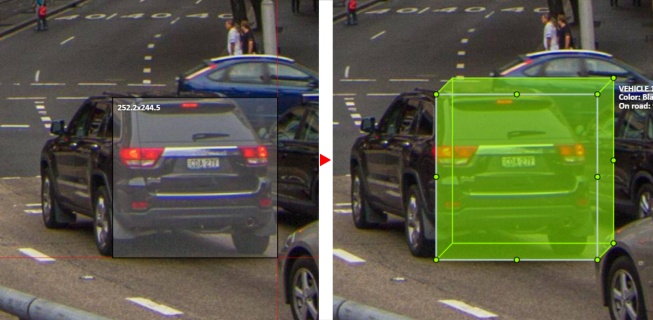

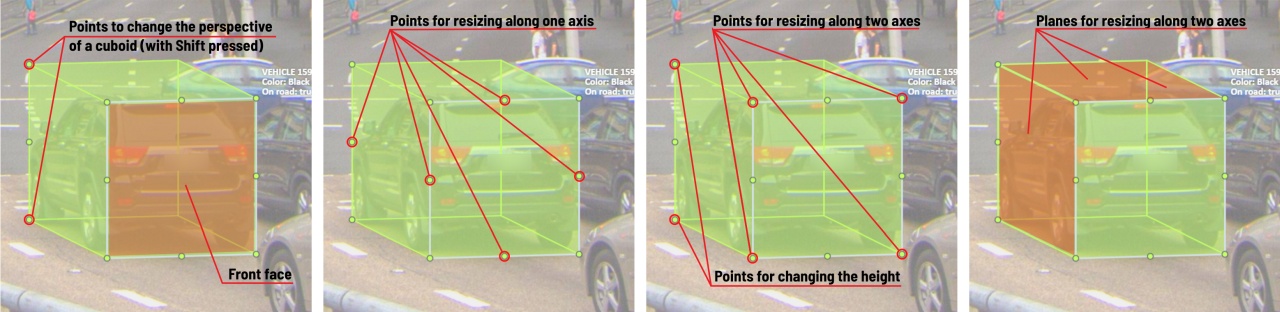

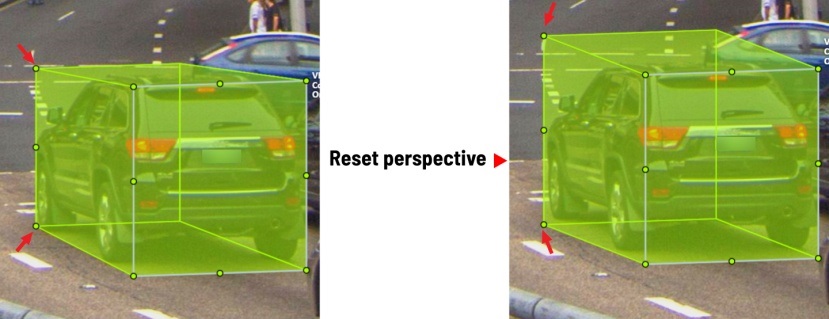

Creating a cuboid

To create a cuboid in a 3D task, click the appropriate icon on the controls sidebar, select the label of the future object, and click Shape.

After that, a cuboid follows the cursor. During creation, you can rotate and move the camera only with the keyboard. Double-click the left mouse button to create the object.

You can place an object only near the points of the point cloud.

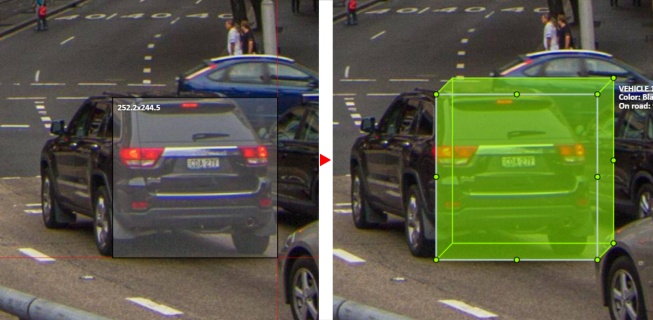

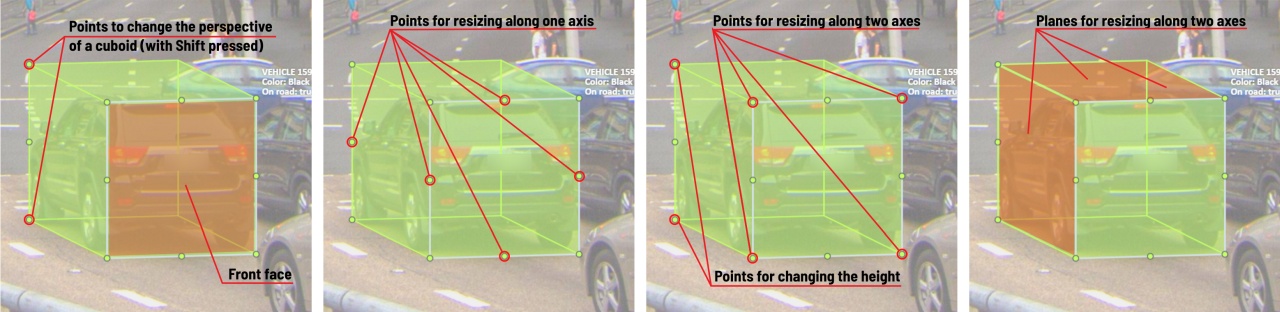

To adjust the size precisely, edit the cuboid in the projections: select Cursor on the controls sidebar or press Esc. In each projection you can:

- Move the object in the projection plane - hover over the object, press the left mouse button, and drag the object.

- Move one of the four points - change the size of the cuboid by dragging the points in the projection.

- Rotate the cuboid in the projection plane - click the appropriate point and drag it up/down or left/right.

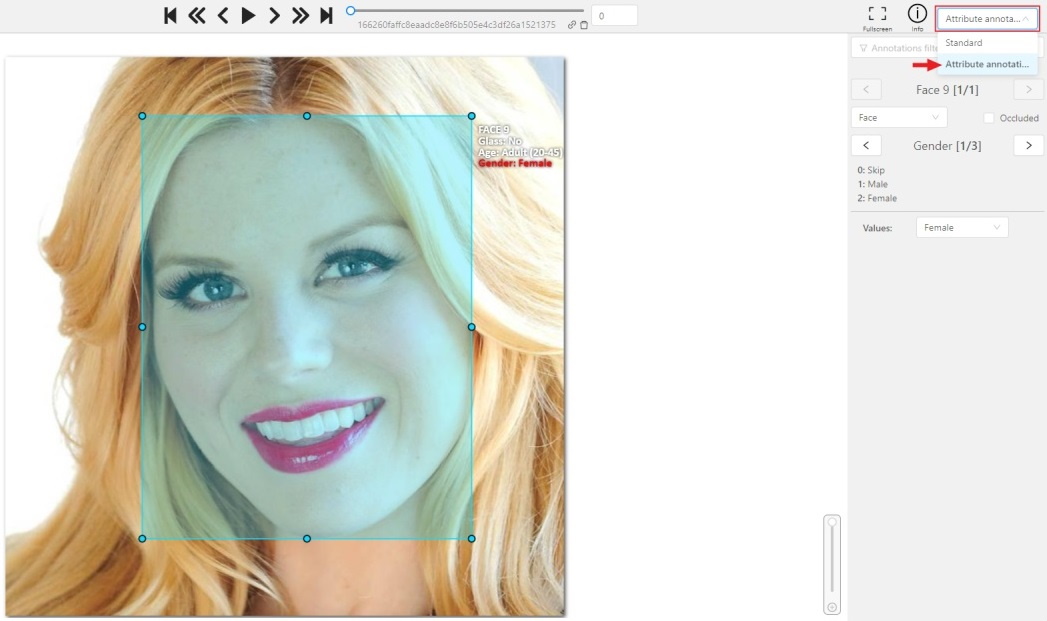

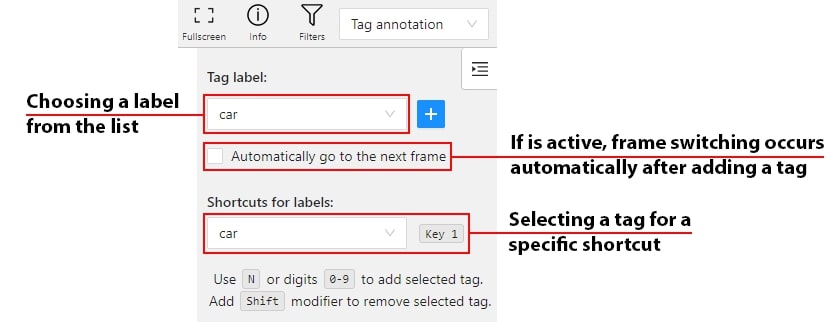

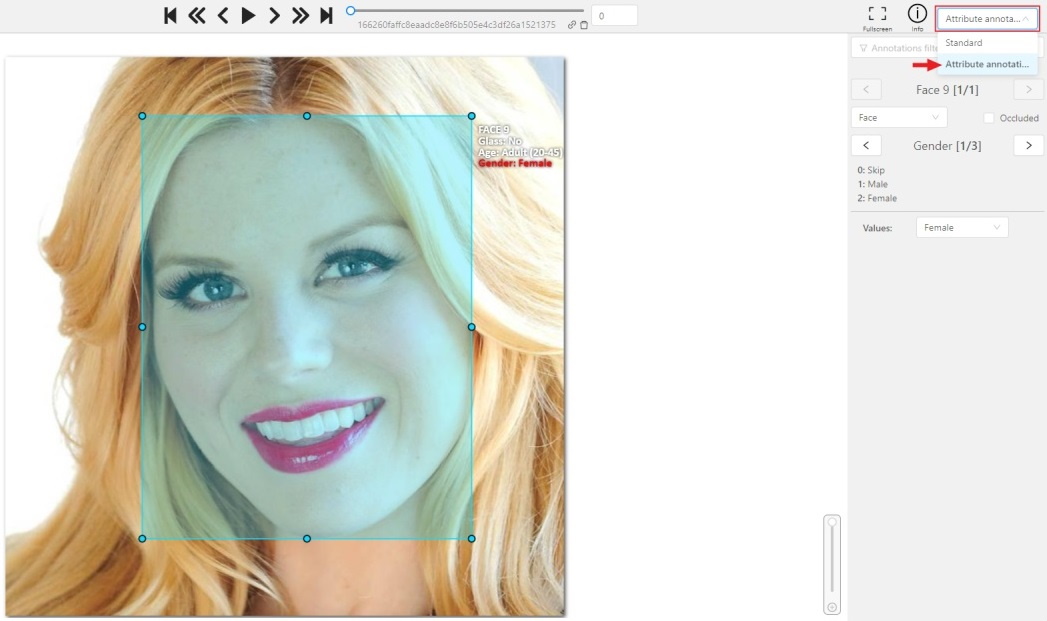

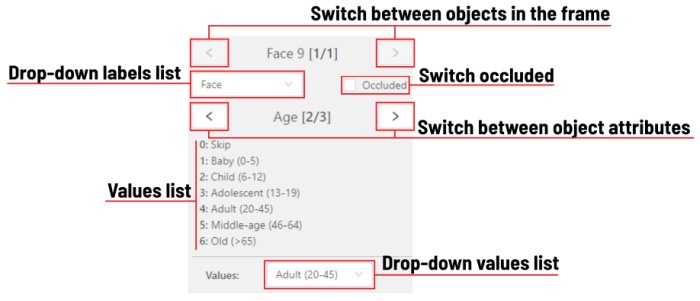

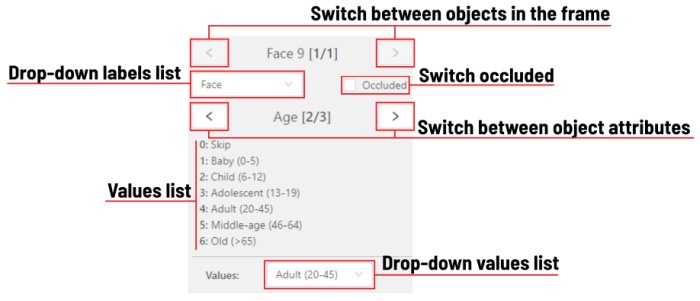

2.1.19 - Attribute annotation mode (basics)

Usage examples and basic operations available in attribute annotation mode.

-

In this mode you can edit attributes with fast navigation between objects and frames using a keyboard.

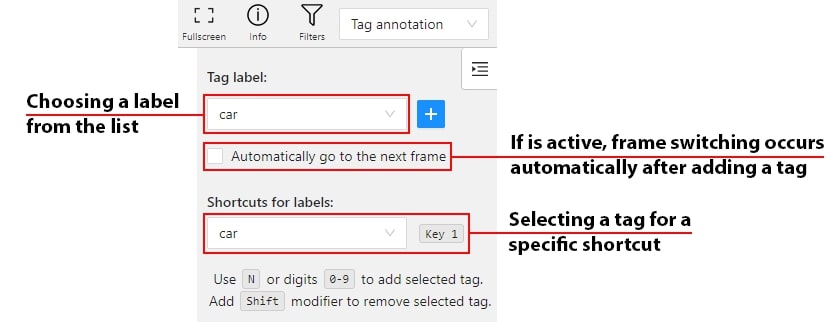

Open the drop-down list in the top panel and select Attribute annotation Mode.

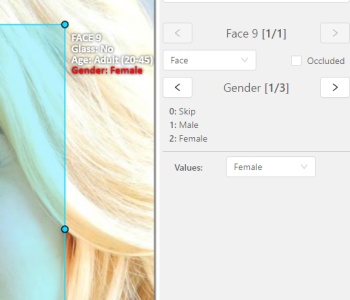

-

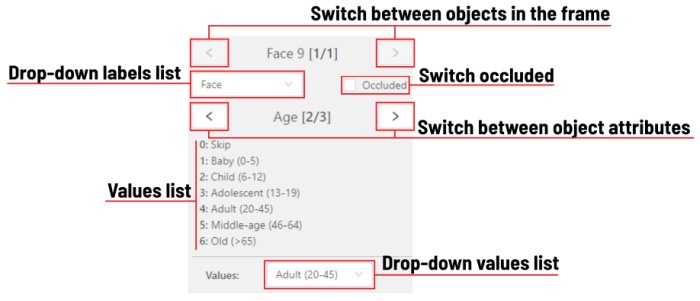

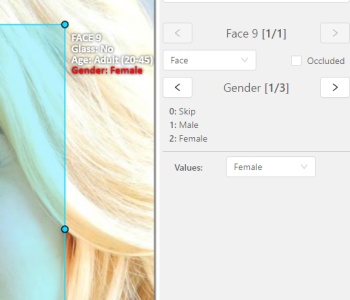

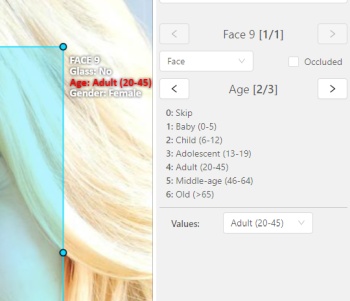

In this mode objects panel change to a special panel :

-

The active attribute will be red. In this case it is gender . Look at the bottom side panel to see all possible

shortcuts for changing the attribute. Press key 2 on your keyboard to assign a value (female) for the attribute

or select from the drop-down list.

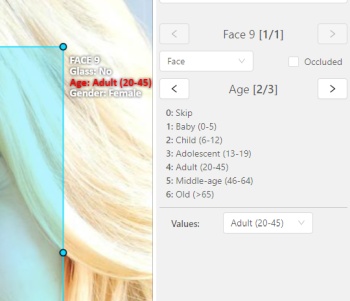

-

Press Up Arrow/Down Arrow on your keyboard or click the buttons in the UI to go to the next/previous

attribute. In this case, after pressing Down Arrow you will be able to edit the Age attribute.

-

Use Right Arrow/Left Arrow keys to move to the previous/next image with annotation.

To see all the hot keys available in the attribute annotation mode, press F2.

Read more in the section attribute annotation mode (advanced).

2.1.20 - Vocabulary

List of terms pertaining to annotation in CVAT.

Label

Label is a type of an annotated object (e.g. person, car, vehicle, etc.)

Attribute

Attribute is a property of an annotated object (e.g. color, model, quality). There are two types of attributes:

Unique

Unique attributes are immutable and can't be changed from frame to frame (e.g. age, gender, color).

Temporary

Temporary attributes are mutable and can be changed on any frame (e.g. quality, pose, truncated).

Track

Track is a set of shapes on different frames which corresponds to one object.

Tracks are created in Track mode.

Annotation

Annotation is a set of shapes and tracks. There are several types of annotations:

- Manual - created by a person.

- Semi-automatic - created mainly automatically, but the user provides some data (e.g. interpolation).

- Automatic - created automatically without a person in the loop.

Approximation

Approximation allows you to reduce the number of points in the polygon.

It can be used to reduce the size of the annotation file and to make polygons easier to edit.
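One common way to reduce the number of points in a polygon is the Ramer-Douglas-Peucker algorithm, sketched below for an open polyline with a distance tolerance `epsilon` (in pixels). The document does not say which algorithm CVAT uses, and its setting is a number of points rather than a tolerance, so treat this purely as an illustration of the idea.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def _distance_to_line(p: Point, a: Point, b: Point) -> float:
    """Perpendicular distance from p to the infinite line through a and b."""
    if a == b:
        return math.dist(p, a)
    (x0, y0), (x1, y1), (x2, y2) = p, a, b
    return abs((x2 - x1) * (y1 - y0) - (x1 - x0) * (y2 - y1)) / math.dist(a, b)


def simplify(points: List[Point], epsilon: float) -> List[Point]:
    """Ramer-Douglas-Peucker: drop points closer than epsilon to the simplified line."""
    if len(points) < 3:
        return list(points)
    # Find the point farthest from the line through the two endpoints.
    index, dmax = 0, 0.0
    for i in range(1, len(points) - 1):
        d = _distance_to_line(points[i], points[0], points[-1])
        if d > dmax:
            index, dmax = i, d
    if dmax > epsilon:
        # Keep that point and simplify both halves recursively.
        left = simplify(points[: index + 1], epsilon)
        right = simplify(points[index:], epsilon)
        return left[:-1] + right
    return [points[0], points[-1]]


outline = [(0, 0), (1, 0.1), (2, -0.1), (3, 0), (3, 3)]
print(simplify(outline, 0.5))  # [(0, 0), (3, 0), (3, 3)]
```

A larger `epsilon` removes more points at the cost of a coarser outline.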

Trackable

A trackable object is tracked automatically if the previous frame was the latest keyframe for the object. More details in the section trackers.

Mode

Interpolation

Mode for video annotation, which uses track objects.

Only objects on keyframes are annotated manually, and intermediate frames are linearly interpolated.

Related sections:

Annotation

Mode for image annotation, which uses shape objects.

Related sections:

Dimension

Depends on the task data type that is defined when the task is created.

2D

The data for 2D tasks are images and videos.

Related sections:

3D

The data for 3D tasks is a point cloud.

Data formats for a 3D task

Related sections:

State

State of the job. The state can be changed by an assigned user in the menu inside the job.

There are several possible states: new, in progress, rejected, completed.

Stage

Stage of the job. The stage is specified with the drop-down list on the task page.

There are three stages: annotation, validation, and acceptance. This value affects the task progress bar.

Subset

A project can have subsets. Subsets are groups for tasks that make it easier to work with the dataset.

A subset can be test, train, validation, or a custom subset.

Credentials

Credentials are one of the following: Key & secret key, Account name and token, Anonymous access, or Key file. They are used to attach cloud storage.

Resource

A resource is a bucket name or a container name. It is used to attach cloud storage.

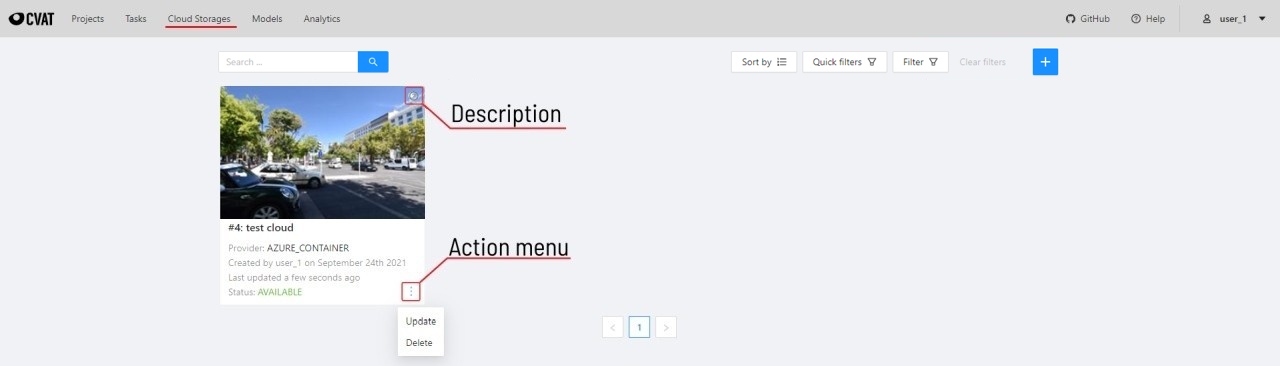

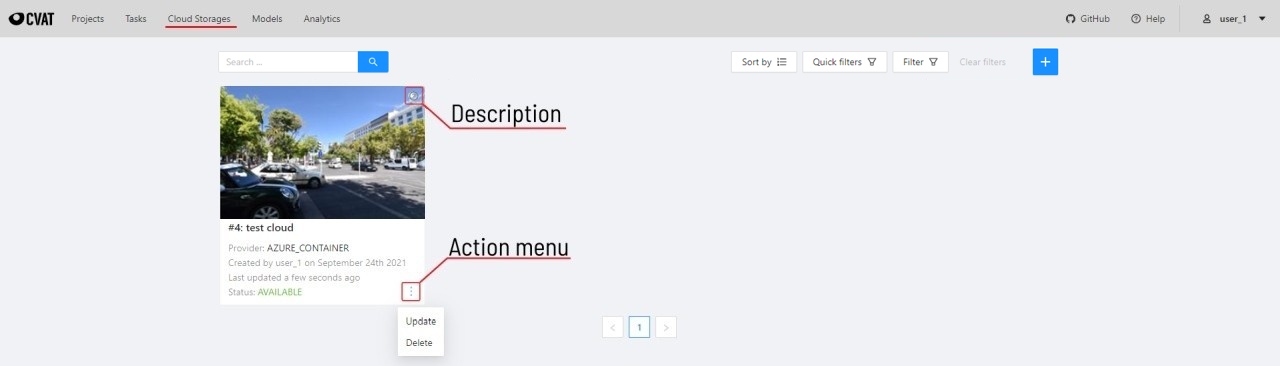

2.1.21 - Cloud storages page

Overview of the cloud storages page.

The cloud storages page contains elements, each of them relating to a separate cloud storage.

Each element contains a preview, the cloud storage name, the provider, creation and update info, the status, a ? button for displaying the description, and the actions menu.

Each button in the action menu is responsible for a specific function:

- Update - update this cloud storage.
- Delete - delete this cloud storage.

A default preview is shown when it is impossible to get a real preview (e.g. the storage is empty or invalid credentials were used).

In the upper left corner there is a search bar, which you can use to find a cloud storage by display name, provider, etc.

In the upper right corner there are sorting, quick filters, and filter.

Filter

Applying a filter disables the quick filter.

The filter works similarly to the filters for annotation: you can create rules from properties, operators, and values, and group rules into groups. For more details, see the filter section.

Learn more about date and time selection.

To clear all filters, press Clear filters.

Supported properties for cloud storages list

| Properties | Supported values | Description |
| --- | --- | --- |
| ID | number or range of cloud storage IDs | |
| Provider type | AWS S3, Azure, Google cloud | |
| Credentials type | Key & secret key, Account name and token, Anonymous access, Key file | |
| Resource name | | Bucket name or container name |
| Display name | | Set when creating cloud storage |
| Description | | Description of the cloud storage |
| Owner | username | The user who owns the cloud storage |
| Last updated | last modified date and time (or value range) | The date can be entered in the dd.MM.yyyy HH:mm format or by selecting the date in the window that appears when you click on the input field |
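The dd.MM.yyyy HH:mm format accepted by the Last updated property corresponds to the Python `strptime` pattern below. This sketch only shows how such a value and a date range can be interpreted; the helper names are hypothetical, and it is not how CVAT's filter is implemented.

```python
from datetime import datetime

# dd.MM.yyyy HH:mm written as a strptime pattern
FILTER_DATE_FORMAT = "%d.%m.%Y %H:%M"


def parse_filter_date(text: str) -> datetime:
    """Parse a date typed into the Last updated filter; raises ValueError if malformed."""
    return datetime.strptime(text.strip(), FILTER_DATE_FORMAT)


def updated_in_range(updated: datetime, start: str, end: str) -> bool:
    """True if a last-modified timestamp lies within an inclusive date range."""
    return parse_filter_date(start) <= updated <= parse_filter_date(end)


print(parse_filter_date("05.03.2022 14:30"))  # 2022-03-05 14:30:00
```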

Click the + button to attach a new cloud storage.

2.1.22 - Attach cloud storage

Instructions on how to attach cloud storage using the UI

In CVAT, you can use AWS-S3, Azure Blob Container, and Google Cloud Storage to store image datasets for your tasks.

Using AWS-S3

Create AWS account

First, create an AWS account by completing the 5-step registration according to the instructions (even if you plan to use a free basic account, you may need to link a credit card to verify your identity).

To learn more about the operation and benefits of the AWS cloud, take the free AWS Cloud Practitioner Essentials course, which is available after registration.

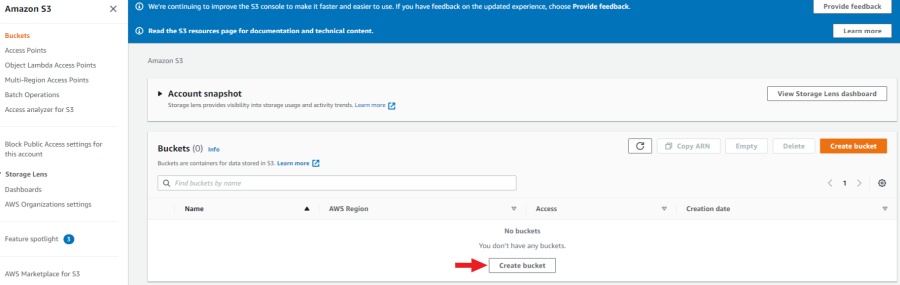

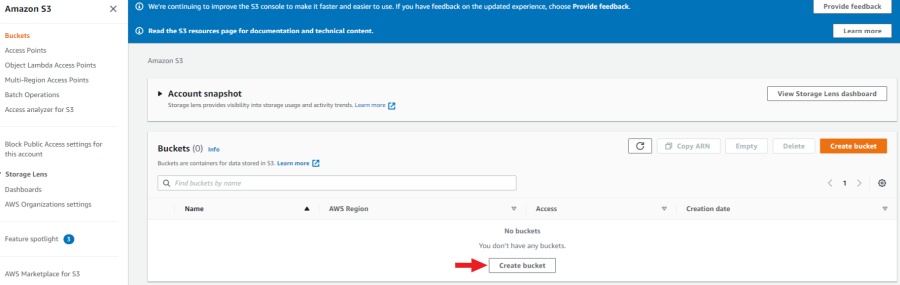

Create a bucket

After the account is created, go to the AWS-S3 console and click Create bucket.

You'll be taken to the bucket creation page. Here you have to specify the name and region of the bucket; optionally, you can copy the settings of another bucket by clicking the Choose bucket button.

The Block all public access checkbox can be enabled, because we will use an access key ID and secret access key to gain access.

In the following sections, you can leave the default settings and click Create bucket.

After you create the bucket, it appears in the list of buckets.

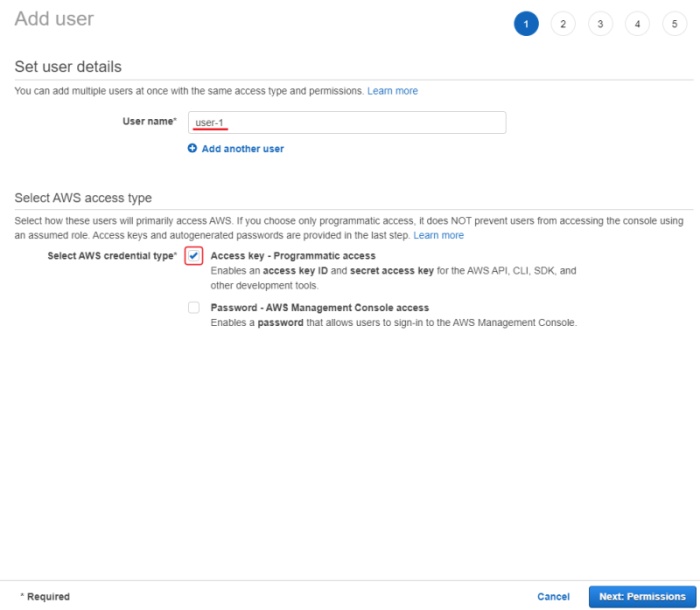

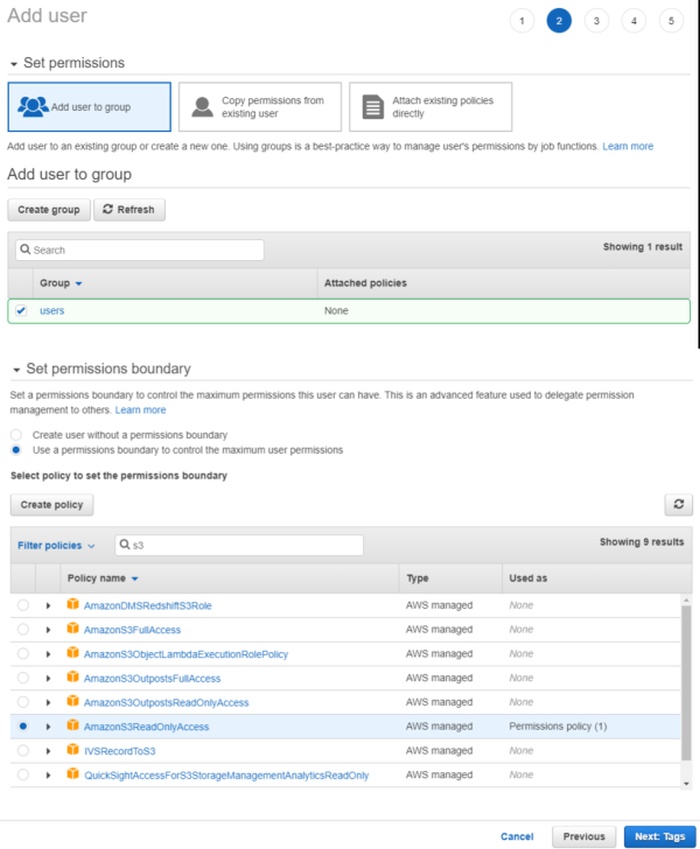

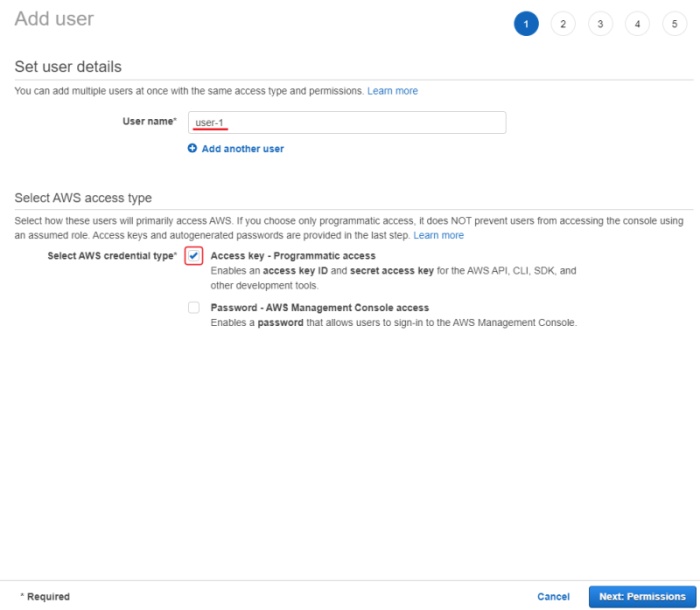

To access the bucket, you need to create a user: go to IAM and click Add users. Choose an AWS access type that gives you an access key ID and a secret access key.

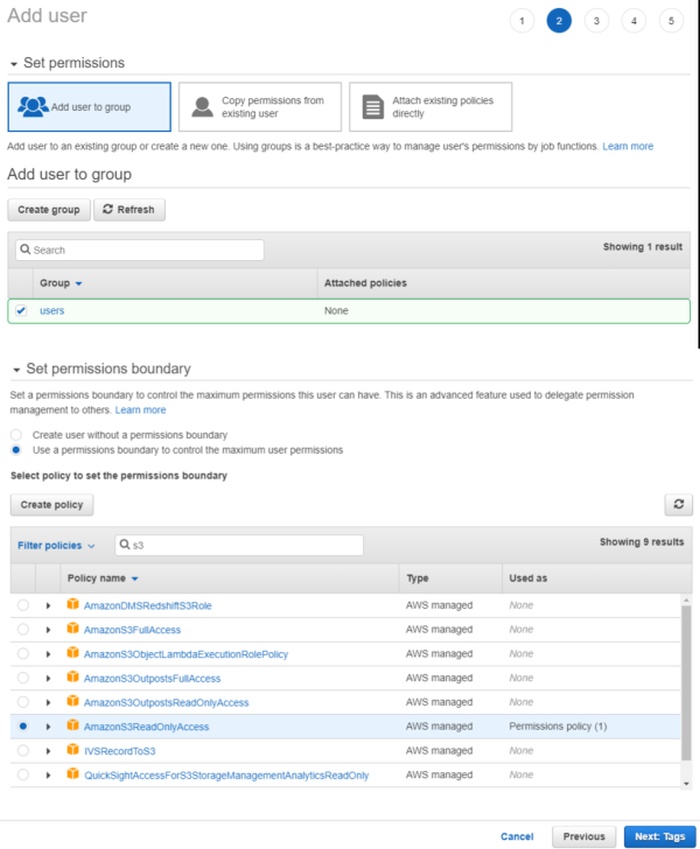

After clicking Next to configure permissions, create a user group: click Create group, enter the group name, and use the search to add the AmazonS3ReadOnlyAccess permission policy (if you want the user to have write access to the bucket, select AmazonS3FullAccess).
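If you prefer to limit the user to a single bucket instead of attaching the managed AmazonS3ReadOnlyAccess policy (which covers every bucket in the account), you could attach a custom IAM policy like the one generated below. This is a sketch under assumptions: the bucket name `my-cvat-bucket` is hypothetical, and we have not verified that listing and reading objects are all the actions CVAT needs (it may, for example, also query bucket metadata such as the region), so check it against your deployment.

```python
import json


def read_only_bucket_policy(bucket: str) -> dict:
    """Build an IAM policy document allowing list and read access to one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Reading applies to the objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


# Paste the printed JSON into the IAM policy editor.
print(json.dumps(read_only_bucket_policy("my-cvat-bucket"), indent=2))
```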

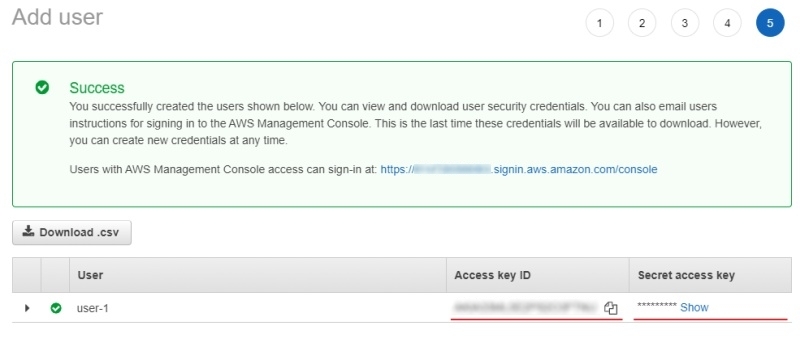

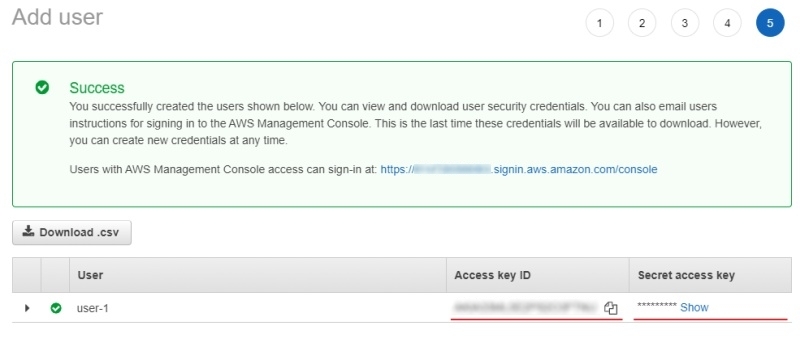

You can also add tags for the user (optional) and review the entered data. In the last step of creating the user, you are provided with an access key ID and a secret access key; you will need them in CVAT when adding the cloud storage.

Upload dataset

Prepare dataset

For example, let’s take The Oxford-IIIT Pet Dataset:

Upload

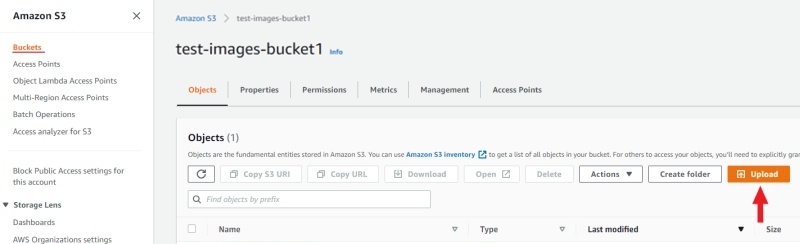

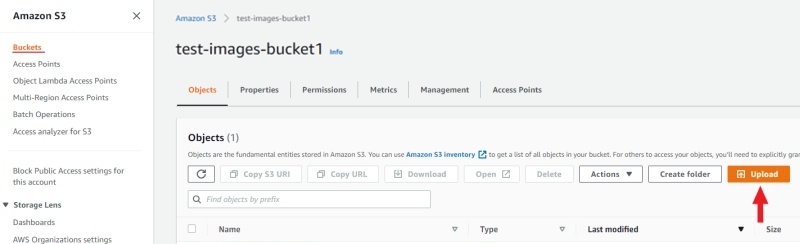

- When the manifest file is ready, open the previously prepared bucket and click Upload:

- Drag the manifest file and the image folder onto the page and click Upload:

Now you can attach the new cloud storage to CVAT.

Using Azure Blob Container

Create Microsoft account

First, create a Microsoft account by registering, or use your GitHub account to log in. After signing up for Azure, you need to choose a subscription plan. You can choose a free 12-month subscription, but you need to enter your credit card details to verify your identity.

To learn more about Azure, read the documentation.

Create a storage account

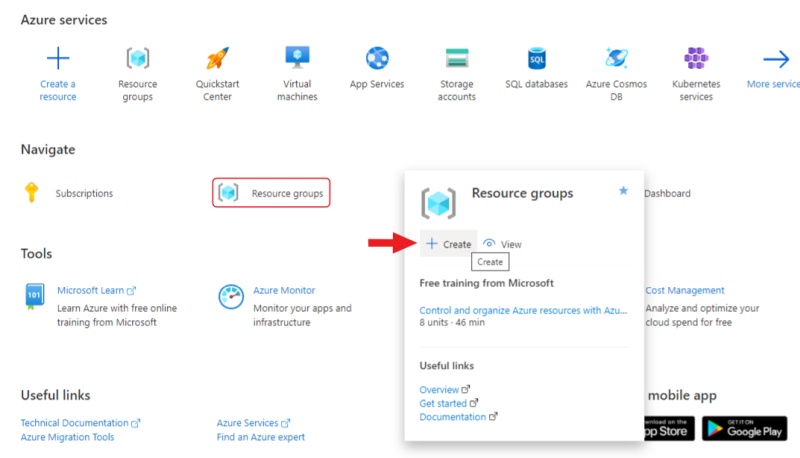

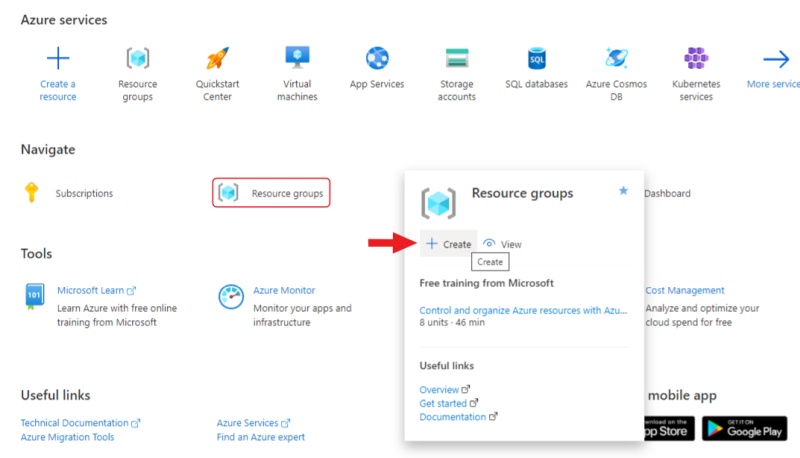

After registration, go to the Azure portal.

Hover over Resource groups and click Create in the window that appears.

Enter a name for the group and click Review + create, check the entered data, and click Create.

After the resource group is created, go to the resource groups page and navigate to the resource group you created. Click Create to create a storage account.

-

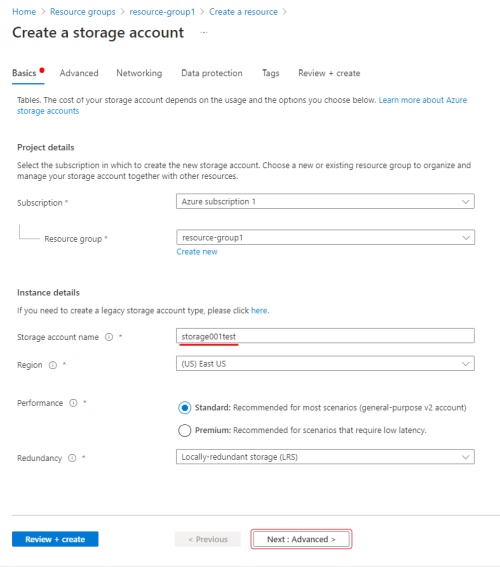

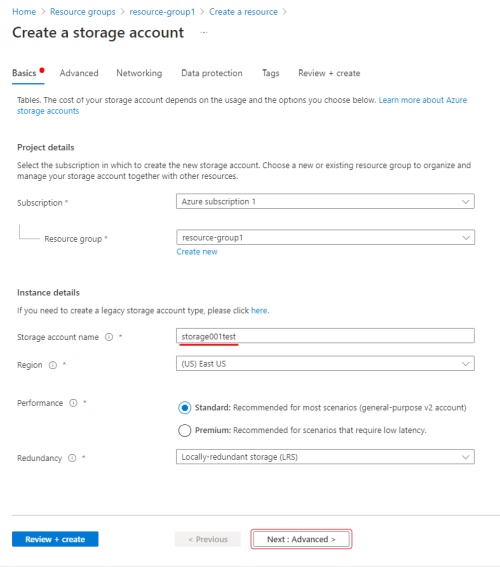

Basics

Enter storage account name (will be used in CVAT to access your container), select a region,

select performance in our case will be standard enough, select redundancy enough LRS

more about redundancy.

Click next to go to the advanced section.

-

Advanced

In the advanced section, you can change public access by disabling enable blob public access

to deny anonymous access to the container.

If you want to change public access you can find this switch in the configuration section of your storage account.

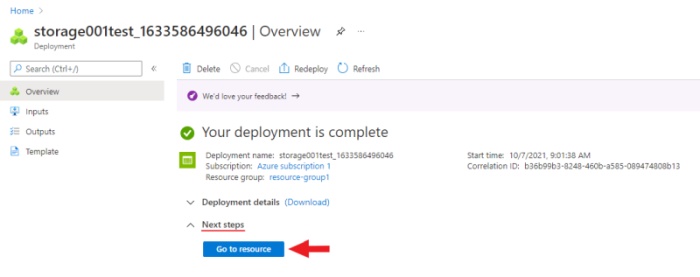

After that, go to the review section, check the entered data and click create.

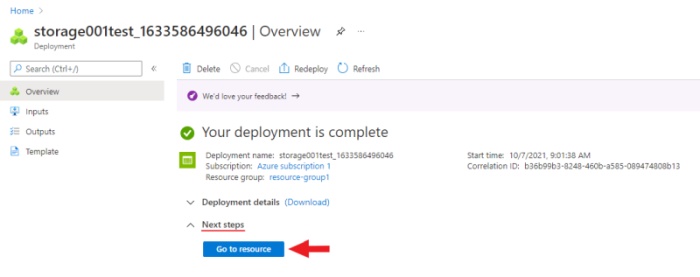

When the deployment is finished, you will be taken to the deployment page. Navigate to the resource by clicking Go to resource.

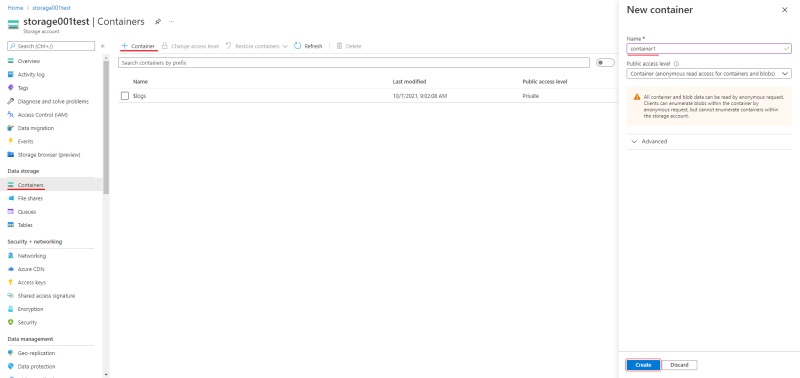

Create a container

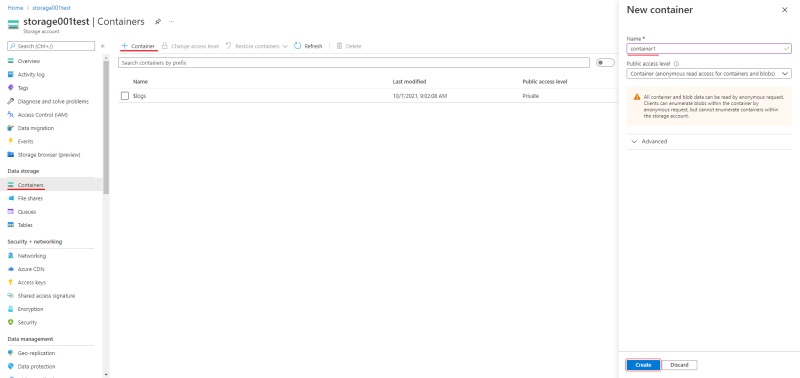

Go to the Containers section and create a new container. Enter the name of the container (it will be used in CVAT to access your container) and select Container as the public access level.

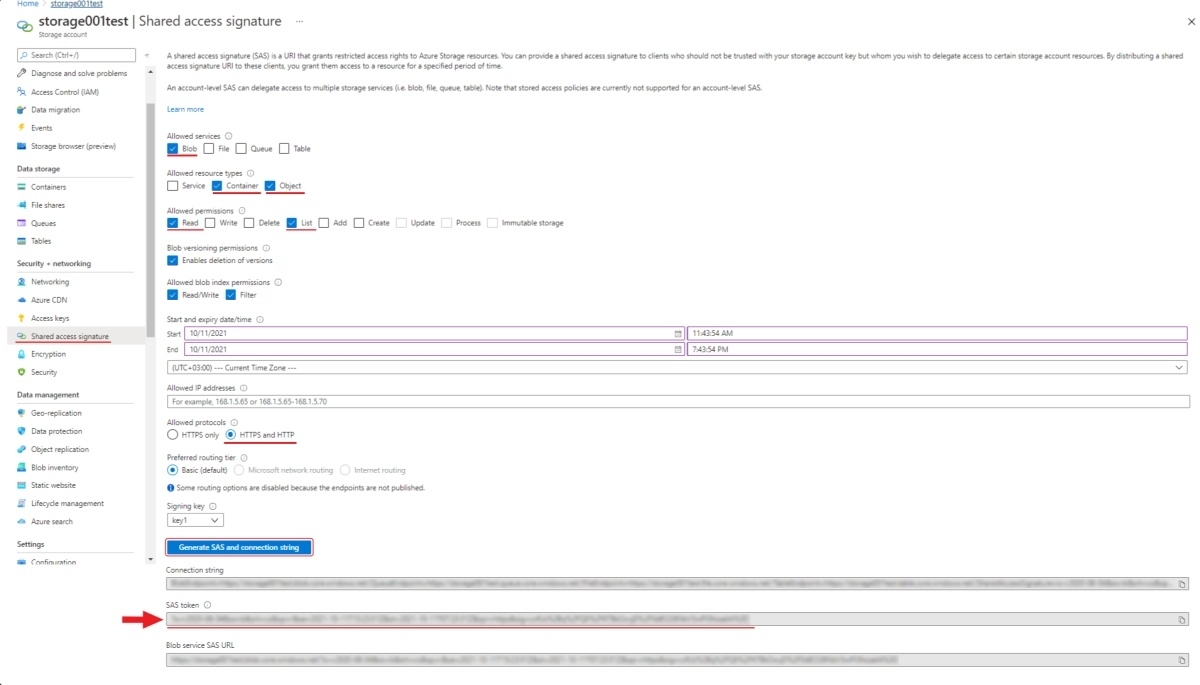

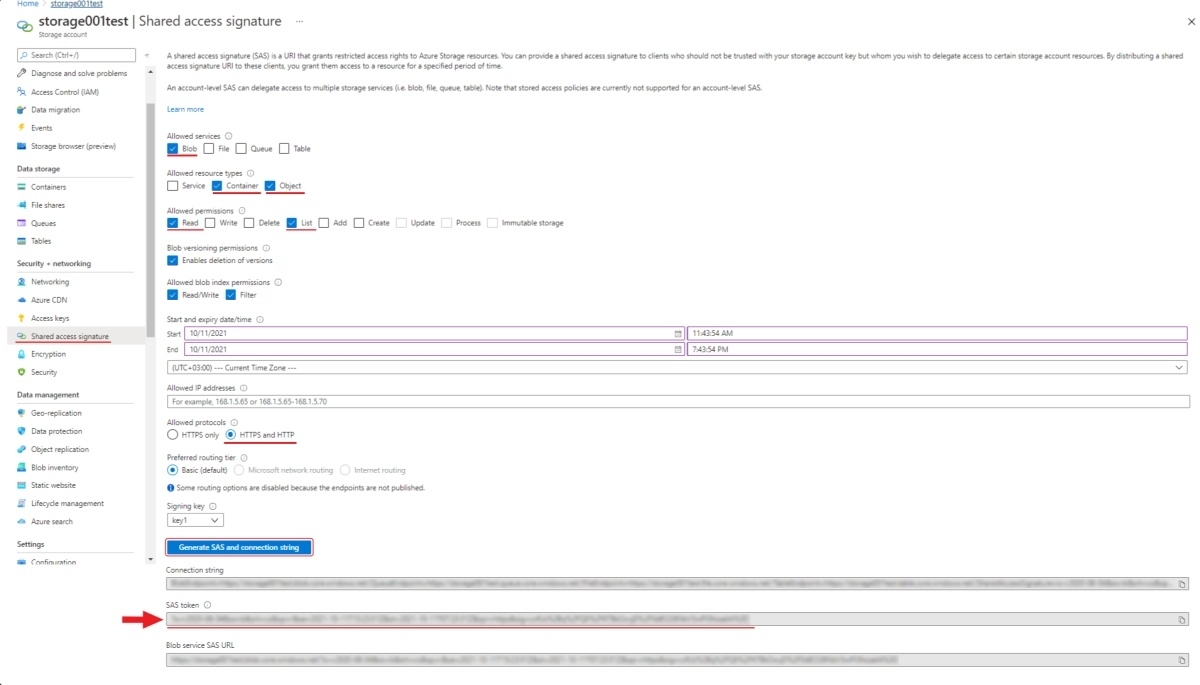

SAS token

Using a SAS token, you can securely grant other people access to the container with preconfigured rights, as well as a start and expiry date/time for the token.

To generate a SAS token, go to the Shared access signature section of your storage account. Enable Blob in Allowed services, Container and Object in Allowed resource types, Read and List in Allowed permissions, and HTTPS and HTTP in Allowed protocols. Here you can also set the start and expiry date/time of the token. Generate the SAS token and copy it (it will be used in CVAT to access your container).
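Before pasting the token into CVAT, you can check that it carries the settings listed above. The sketch assumes an account SAS, whose query parameters `ss` (services), `srt` (resource types) and `sp` (permissions) use the letters `b`, `c`/`o` and `r`/`l` for the options selected in the portal. It only inspects the token text; it does not validate the signature, the expiry (`se`), or whether Azure accepts the token. The function name is hypothetical.

```python
from typing import List
from urllib.parse import parse_qs

# Account SAS parameters and the flags the steps above enable.
REQUIRED = {
    "ss": {"b": "Blob service"},
    "srt": {"c": "Container resource type", "o": "Object resource type"},
    "sp": {"r": "Read permission", "l": "List permission"},
}


def missing_sas_grants(token: str) -> List[str]:
    """Return what an account SAS token lacks compared with the settings above."""
    params = {k: v[0] for k, v in parse_qs(token.lstrip("?")).items()}
    missing = []
    for param, flags in REQUIRED.items():
        value = params.get(param, "")
        for flag, description in flags.items():
            if flag not in value:
                missing.append(f"{description} ({param}={flag})")
    return missing


# An example token with a placeholder signature.
token = "?sv=2020-08-04&ss=b&srt=co&sp=rl&se=2030-01-01T00:00:00Z&spr=https,http&sig=abc%3D"
print(missing_sas_grants(token))  # []
```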

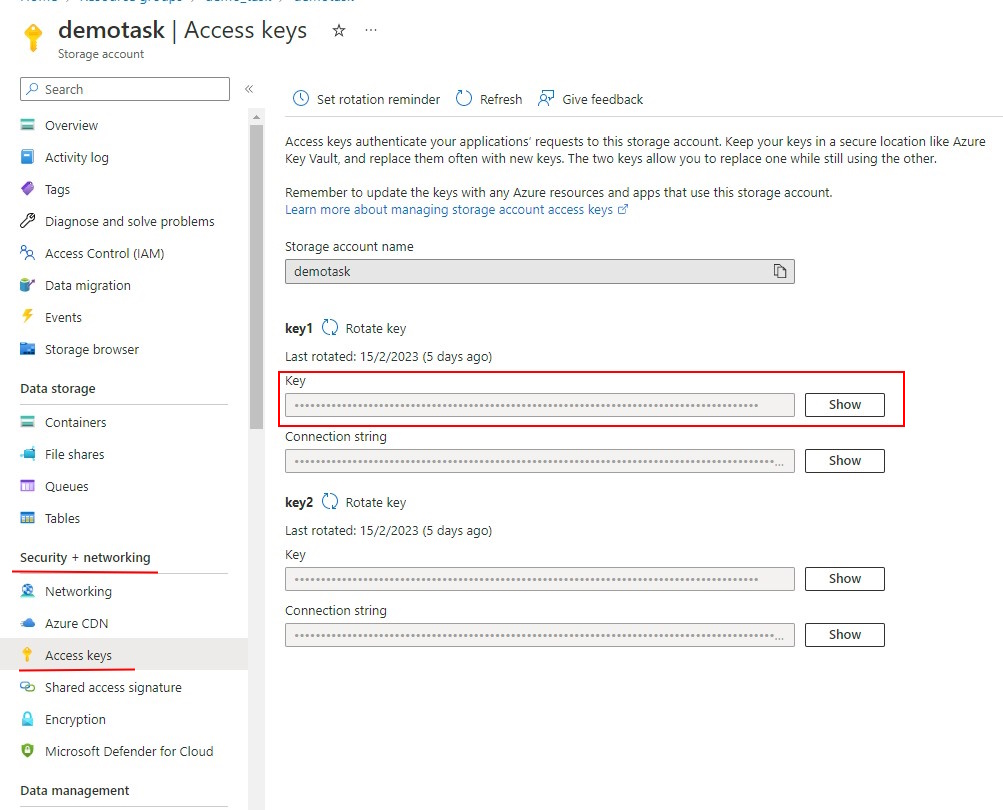

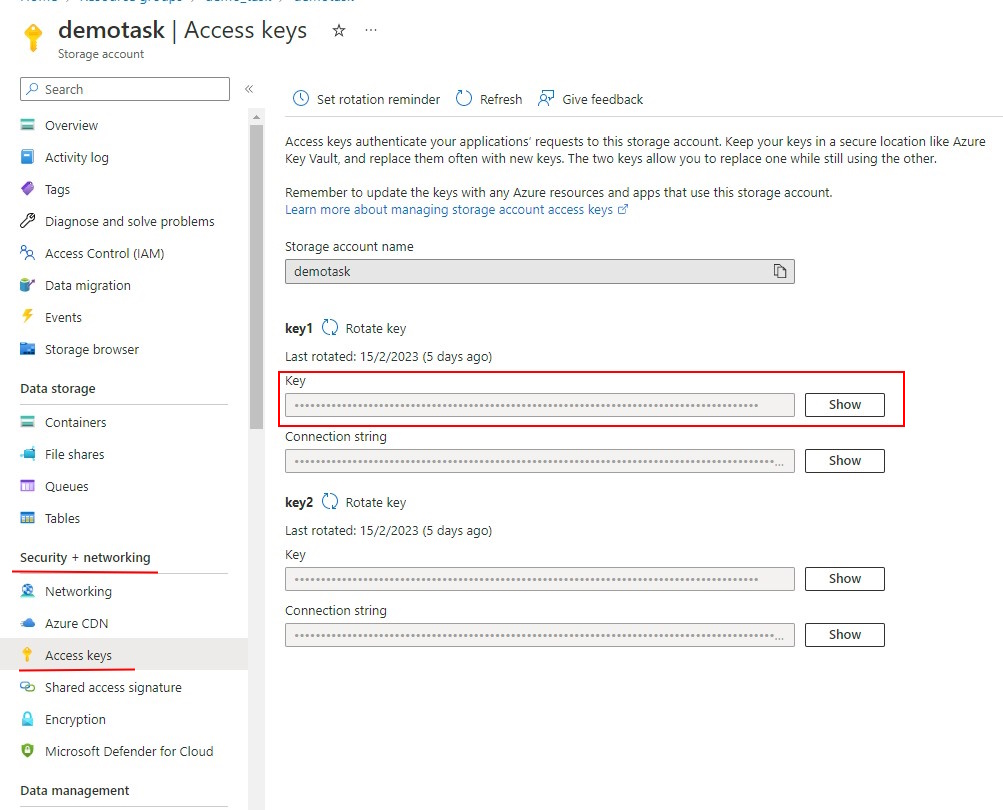

For personal use, you can enter the access key of your storage account in the SAS Token field. The access key can be found in the Security + networking section; click Show keys to display it.

Upload dataset

Prepare the dataset as in the point prepare dataset.

-

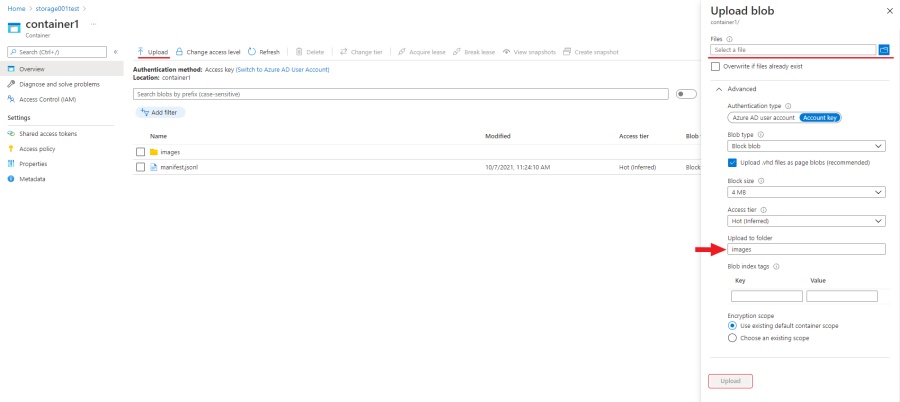

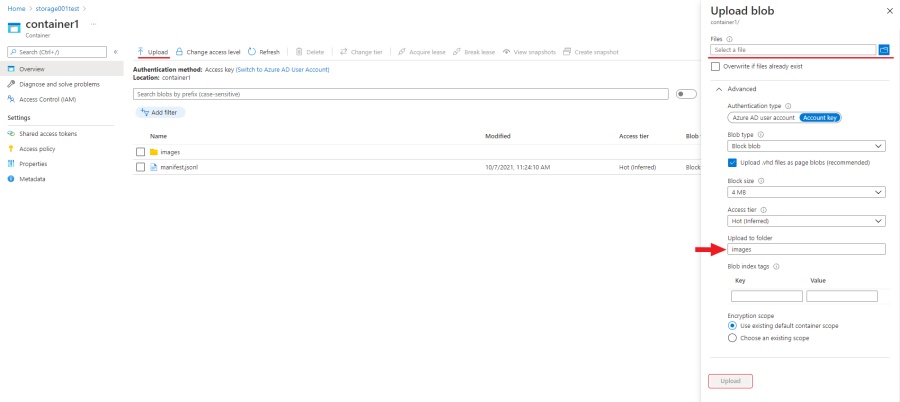

When the dataset is ready, go to your container and click upload.

-

Click select a files and select all images from the images folder

in the upload to folder item write the name of the folder in which you want to upload images in this case “images”.

-

Click upload, when the images are loaded you will need to upload a manifest file. When loading a manifest, you

need to make sure that the relative paths specified in the manifest file match the paths

to the files in the container. Click select a file and select manifest file, in order to upload file to the root

of the container leave blank upload to folder field.
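The requirement that manifest paths match the container paths can be checked with a short script. It assumes that each image line of `manifest.jsonl` is a JSON object with `name` (path without extension) and `extension` keys, as in manifests produced by CVAT's `create.py`, while header lines without a `name` are skipped. The function names are hypothetical, and how you obtain the list of blob names (portal, CLI, or SDK) is up to you.

```python
import json
import posixpath
from typing import Iterable, List


def manifest_image_paths(manifest_text: str, manifest_dir: str = "") -> List[str]:
    """Collect image paths from manifest.jsonl, relative to the container root."""
    paths = []
    for line in manifest_text.splitlines():
        if not line.strip():
            continue
        entry = json.loads(line)
        # Header lines (e.g. version or type) have no "name" key and are skipped.
        if "name" in entry and "extension" in entry:
            paths.append(posixpath.join(manifest_dir, entry["name"] + entry["extension"]))
    return paths


def missing_blobs(manifest_text: str, blob_names: Iterable[str],
                  manifest_dir: str = "") -> List[str]:
    """List manifest entries that have no matching blob in the container."""
    existing = set(blob_names)
    return [p for p in manifest_image_paths(manifest_text, manifest_dir) if p not in existing]


manifest = (
    '{"version": "1.1"}\n{"type": "images"}\n'
    '{"name": "images/cat_1", "extension": ".jpg", "width": 500, "height": 375}\n'
)
print(missing_blobs(manifest, ["manifest.jsonl", "images/cat_1.jpg"]))  # []
```

Here `manifest_dir` is empty because the manifest sits in the container root, as recommended above.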

Now you can attach the new cloud storage to CVAT.

Using Google Cloud Storage

Create Google account

First, create a Google account: go to the account login page and click Create account.

Then go to the Google Cloud page, click Get started, enter the required data, and accept the terms of service (you'll need credit card information to register).

Create a bucket

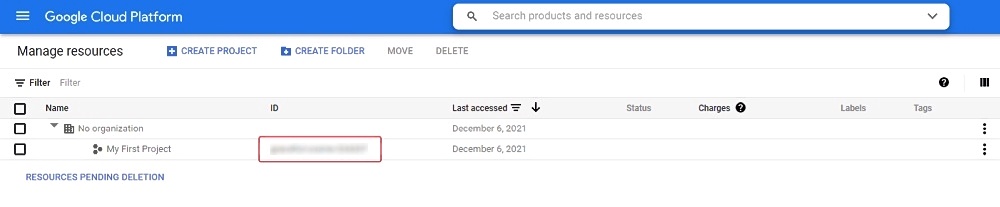

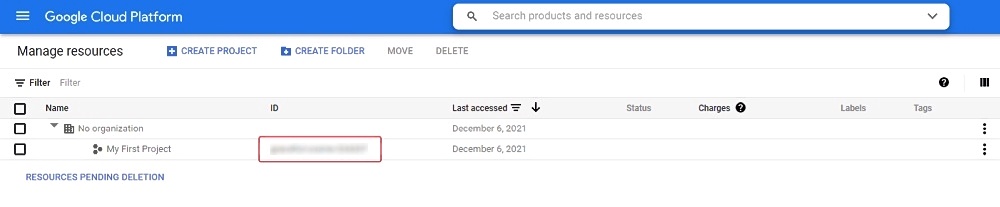

Your first project is created automatically; you can see it on the cloud resource manager page.

To create a bucket, go to the cloud storage page and press Create bucket. Next, enter the name of the bucket, add labels if necessary, and select the location type (for example, Region) and the location nearest to you. Select the storage class. When choosing access control, you can enable Enforce public access prevention on this bucket (if you plan to allow anonymous access to your bucket, leave it disabled), and select Uniform or Fine-grained access control. If you need to protect your object data, select a protection type. When all the information is entered, click Create to create the bucket.

Upload

Prepare the dataset as described in the Prepare dataset section.

To upload files, drag and drop files and folders into the browser window, or use Upload folder and/or Upload files.

Access permissions

To access Google Cloud Storage from CVAT, you need a Project ID, which you can find on the cloud resource manager page.

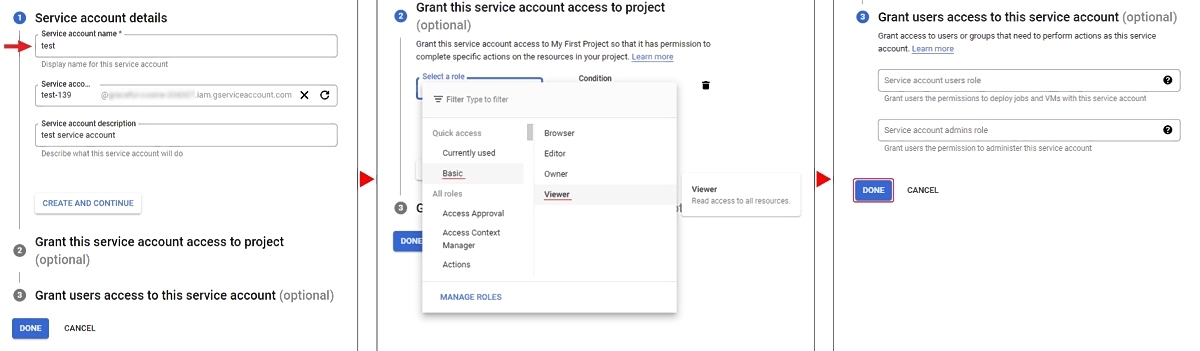

Create a service account and key file

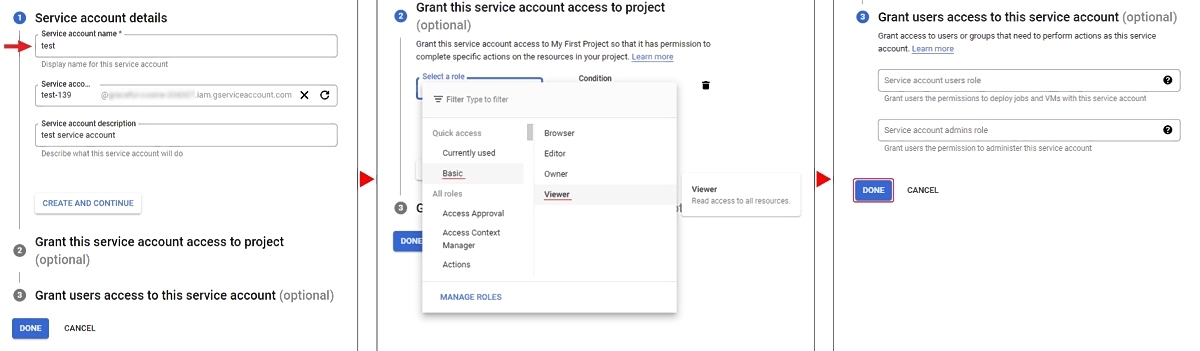

To access your bucket, you need a key file and a service account. To create a service account, go to IAM & Admin/Service Accounts and press Create Service Account. Enter the account name and click Create and continue. Select a role, for example Basic/Viewer.

Next, you can give access rights to the service account; click Done to finish.

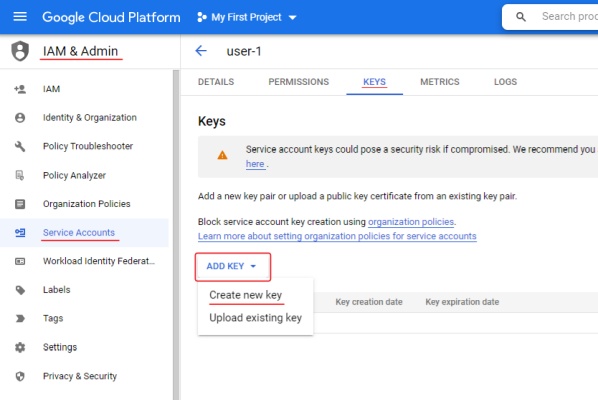

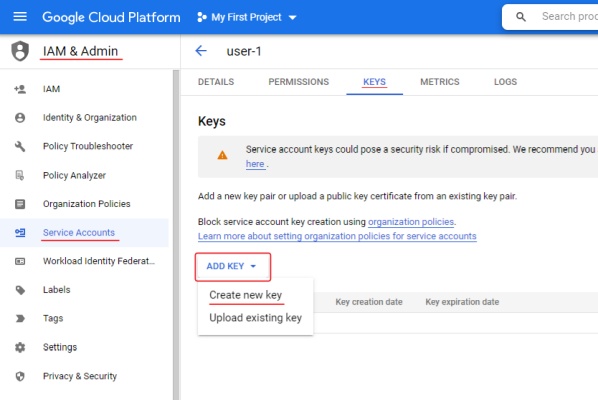

The account you created appears in the service accounts list; open it and go to the Keys tab.

To create a key, click ADD and select Create new key, then choose the JSON key type and click Create.

The key file is downloaded automatically.

Learn more about creating keys.
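Before uploading the downloaded key file to CVAT, you can check that it is a service account key and read the project it belongs to. The sketch relies on the standard fields of a Google Cloud service account JSON key (`type`, `project_id`, `private_key`, `client_email`); the function name is hypothetical. The `project_id` in the key is usually the Project ID you enter when attaching the storage, provided the service account was created in the bucket's project.

```python
import json
from typing import Tuple

# Fields present in a Google Cloud service account JSON key.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def inspect_key_file(text: str) -> Tuple[str, str]:
    """Validate a service account key and return (project_id, client_email)."""
    data = json.loads(text)
    missing = [f for f in REQUIRED_FIELDS if f not in data]
    if missing:
        raise ValueError(f"not a service account key, missing: {', '.join(missing)}")
    if data["type"] != "service_account":
        raise ValueError(f"unexpected key type: {data['type']!r}")
    return data["project_id"], data["client_email"]


# Usage (hypothetical file name):
# with open("cvat-key.json", encoding="utf-8") as f:
#     project_id, email = inspect_key_file(f.read())
```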

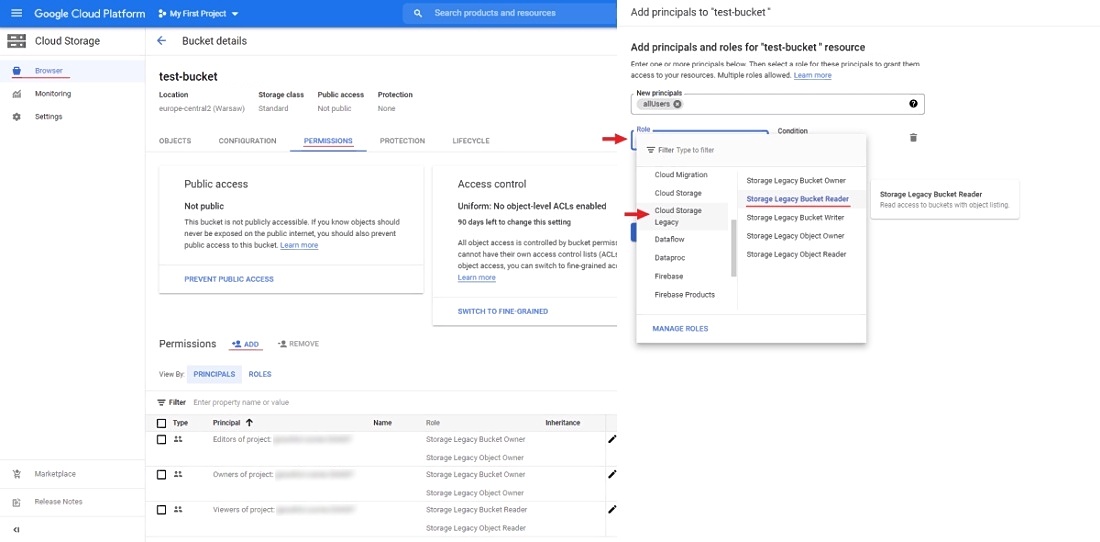

Anonymous access

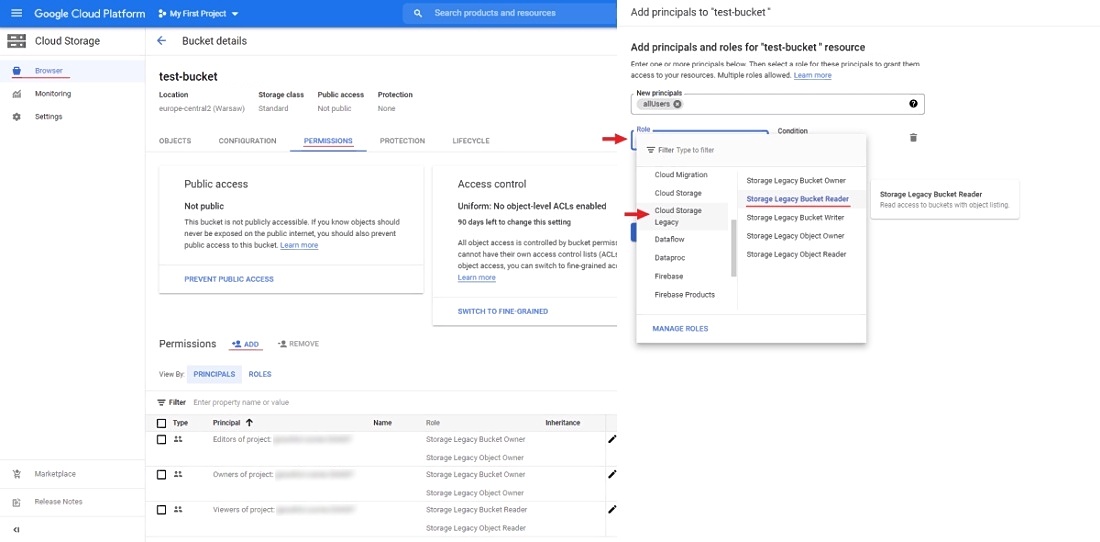

To configure anonymous access, open your bucket, go to the Permissions tab, and click ADD to add new principals.

In the New principals field, specify allUsers, select a role (for example, Cloud Storage Legacy/Storage Legacy Bucket Reader), and press SAVE.
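One way to check anonymous access is to open an object's public URL in a browser. Publicly readable Cloud Storage objects are served at `https://storage.googleapis.com/<bucket>/<object>`, and the helper below builds such a URL. The function name and the bucket and object names are hypothetical. Note that the bucket-reader role may only allow listing the bucket; if the URL returns an access error, reading the objects may also require an object-level role (for example Storage Object Viewer) for allUsers.

```python
from urllib.parse import quote


def public_object_url(bucket: str, object_name: str) -> str:
    """Build the public HTTPS URL of an object in a Cloud Storage bucket."""
    # quote() keeps "/" so folder-like prefixes stay readable; spaces become %20.
    return f"https://storage.googleapis.com/{bucket}/{quote(object_name)}"


print(public_object_url("my-bucket", "images/cat 1.jpg"))
# https://storage.googleapis.com/my-bucket/images/cat%201.jpg
```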

Now you can attach the new cloud storage to CVAT.

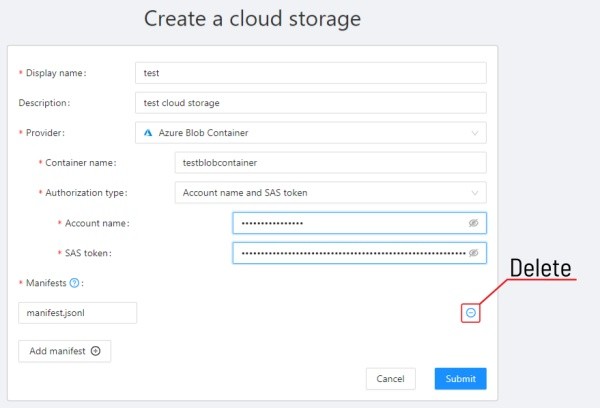

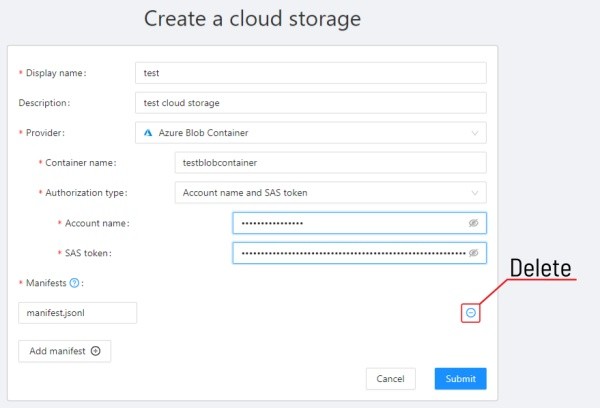

Attach new cloud storage

After you upload the dataset and manifest file to AWS-S3, Azure Blob Container, or Google Cloud Storage, you can attach a cloud storage. To do this, press the + button on the Cloud storages page and fill out the following form:

- Display name - the display name of the cloud storage.

- Description (optional) - a description of the cloud storage; it appears when you click the ? button of an item on the Cloud storages page.

- Provider - choose the provider of the cloud storage:

  - AWS-S3:

  - Azure Blob Container:

  - Google Cloud:

    - Bucket name - the cloud storage bucket name; you can find the created bucket on the storage browser page.

    - Authorization type:

      - Key file - drag a key file to the Attach a file area, or click the area to select the key file in the file explorer. If the GOOGLE_APPLICATION_CREDENTIALS environment variable is set in the environment where CVAT is deployed, it is used when you do not attach a key file (more about GOOGLE_APPLICATION_CREDENTIALS).

      - Anonymous access - for anonymous access, you need to enable public access to the bucket.

    - Prefix - used to filter data from the bucket.

    - Project ID - you can find the created project on the cloud resource manager page; note that the project name is not the same as the project ID.

    - Location - choose a region from the list or add a new one. To get more information, click ?.

- Manifest - the path to the manifest file on your cloud storage. You can add multiple manifest files using the Add manifest button. You can find out how to prepare a dataset manifest here.

If you have data on the cloud storage and don't want to download the content locally, you can mount your cloud storage as a share point according to that guide and prepare a manifest for the data.
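The Key file rule for Google Cloud (an attached key file is used if present, otherwise GOOGLE_APPLICATION_CREDENTIALS from the CVAT environment) can be summarized as a small resolution function. This is a hypothetical sketch of the documented behavior, not CVAT's code; `None` means no credentials are available.

```python
import os
from typing import Optional


def resolve_key_file(attached_key_file: Optional[str] = None) -> Optional[str]:
    """Pick the key file: an attached one wins, otherwise fall back to the environment."""
    if attached_key_file:
        return attached_key_file
    # Set in the environment of the deployed CVAT instance, if at all.
    return os.environ.get("GOOGLE_APPLICATION_CREDENTIALS") or None
```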

To publish the cloud storage, click Submit; it then becomes available on the Cloud storages page.

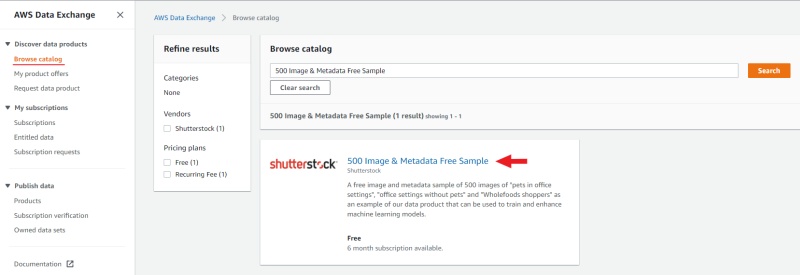

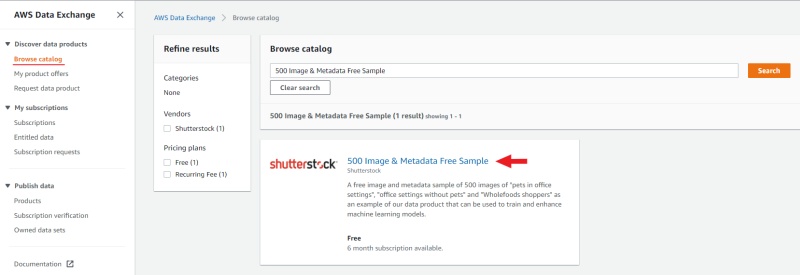

Using AWS Data Exchange

Subscribe to data set

You can use AWS Data Exchange to add image datasets.

For example, consider adding the 500 Image & Metadata Free Sample set of datasets.

Go to Browse catalog and use the search to find 500 Image & Metadata Free Sample. Open the dataset page and click Continue to subscribe. You will be taken to the Complete subscription request page; read the information provided and click Send subscription request to provider.

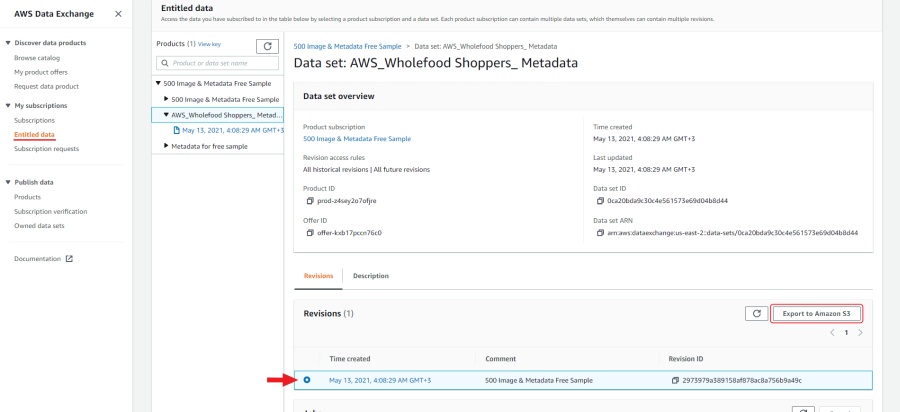

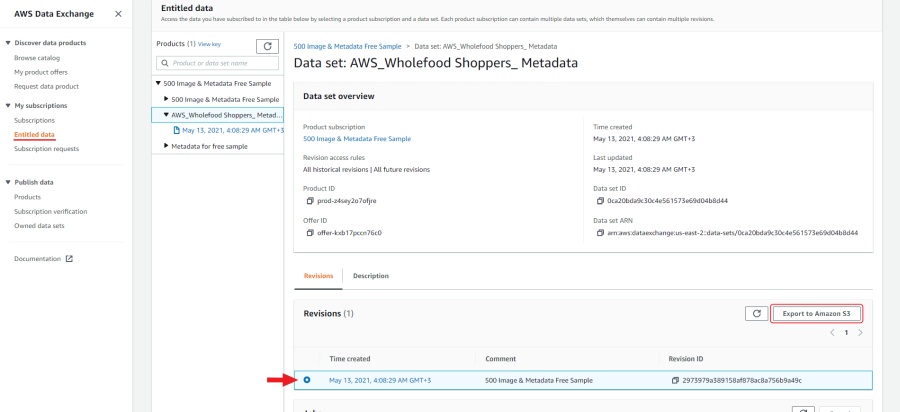

Export to bucket

After that, this dataset will appear in the Subscriptions list.

Now you need to export the dataset to Amazon S3.

First, create a new bucket as described above.

To export one of the datasets to the new bucket, open Entitled data, select one of the datasets, select the corresponding revision, and click Export to Amazon S3 (note that if the bucket and the dataset are located in different regions, export fees may apply).

In the window that appears, select the created bucket and click Export.

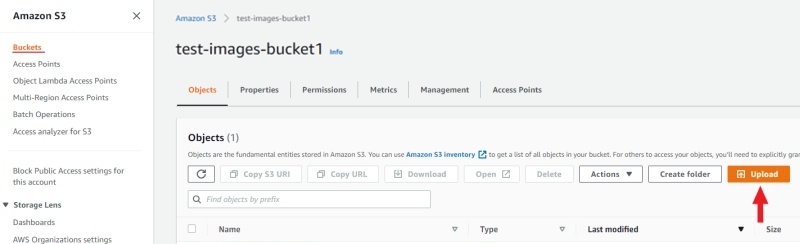

Prepare manifest file

Now you need to prepare a manifest file. I used the AWS CLI and a script to prepare the manifest file.

Install the AWS CLI following the aws-shell manual. I used aws-cli 1.20.49, Python 3.7.9, and Windows 10.

You can configure credentials by running aws configure.

You will need to enter your Access Key ID and Secret Access Key, as well as the region.

aws configure

Access Key ID: <your Access Key ID>

Secret Access Key: <your Secret Access Key>

Copy the content of the bucket to a folder on your computer:

aws s3 cp <s3://bucket-name> <yourfolder> --recursive

After copying the files, you can create a manifest file as described in the prepare manifest file section:

python <cvat repository>/utils/dataset_manifest/create.py --output-dir <yourfolder> <yourfolder>
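Before uploading, you may want a quick sanity check that the generated file is well-formed. The sketch below assumes only that manifest.jsonl is in JSON Lines format (one JSON value per line); it does not validate the fields CVAT expects, and check_jsonl is a hypothetical helper, not part of the CVAT repository.

```python
import json
from pathlib import Path


def check_jsonl(path: Path) -> int:
    """Return the number of records in a JSON Lines file.

    Raises ValueError naming the first line that is not valid JSON.
    Blank lines are skipped.
    """
    count = 0
    with path.open(encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if not line.strip():
                continue
            try:
                json.loads(line)
            except json.JSONDecodeError as exc:
                raise ValueError(f"{path}: line {lineno} is not valid JSON: {exc}") from exc
            count += 1
    return count
```

Usage: check_jsonl(Path("<yourfolder>/manifest.jsonl")) returns the record count, or raises if a line is broken.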

When the manifest file is ready, you can upload it to the AWS S3 bucket. If you granted full write permissions when you created the user, run:

aws s3 cp <yourfolder>/manifest.jsonl <s3://bucket-name>

If you granted only read permissions, upload the file through the browser: click Upload, drag the manifest file to the page, and click Upload.

Now you can attach a new cloud storage using the 500 Image & Metadata Free Sample dataset.

2.2 - Advanced

This section contains advanced documents for CVAT users.

2.2.1 - Projects page

Creating and exporting projects in CVAT.

Projects page

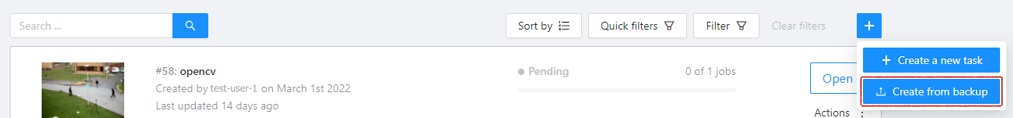

On this page you can create a new project, create a project from a backup, and also see the created projects.

In the upper left corner there is a search bar, which you can use to find a project by project name, assignee, etc.

In the upper right corner there are the sorting, quick filter, and filter controls.

Filter

Applying a filter disables the quick filter.

The filter works similarly to the filters for annotation: you can create rules from properties, operators, and values, and group rules into groups.

For more details, see the filter section.

Learn more about date and time selection.

To clear all filters, click Clear filters.

Supported properties for projects list

| Properties | Supported values | Description |
| --- | --- | --- |
| Assignee | username | Assignee is the user who is working on the project, task or job (specified on the task page). |
| Owner | username | The user who owns the project, task, or job. |
| Last updated | last modified date and time (or value range) | The date can be entered in the dd.MM.yyyy HH:mm format or by selecting the date in the window that appears when you click on the input field. |
| ID | number or range of job ID | |
| Name | name | On the tasks page - name of the task; on the project page - name of the project. |

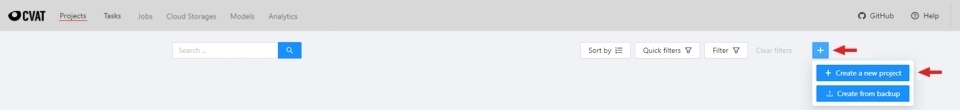
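If you work with the same date values outside the UI, the dd.MM.yyyy HH:mm pattern from the table corresponds to "%d.%m.%Y %H:%M" in Python's strptime notation. The sketch below parses such values and applies a Last updated range; the project records (dicts with name and updated keys) and the helper names are hypothetical and do not reflect CVAT's API.

```python
from datetime import datetime
from typing import Iterable, List, Optional

# The "dd.MM.yyyy HH:mm" pattern from the table, in strptime notation.
DATE_FORMAT = "%d.%m.%Y %H:%M"


def parse_filter_date(text: str) -> datetime:
    return datetime.strptime(text.strip(), DATE_FORMAT)


def updated_between(projects: Iterable[dict], start: str, end: Optional[str] = None) -> List[str]:
    """Names of projects whose 'updated' timestamp lies in [start, end].

    An omitted end means "no upper bound".
    """
    lo = parse_filter_date(start)
    hi = parse_filter_date(end) if end else datetime.max
    return [p["name"] for p in projects if lo <= p["updated"] <= hi]
```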

Create a project

In CVAT, you can create a project containing tasks of the same type.

All tasks related to the project will inherit its list of labels.

To create a project, go to the projects section by clicking on the Projects item in the top menu.

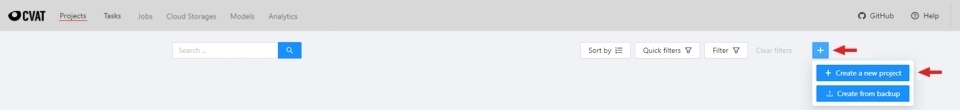

On the projects page, you can see a list of projects, use the search, or create a new project by clicking the + button and selecting Create New Project.

Note that the project will be created in the organization that you selected at the time of creation.

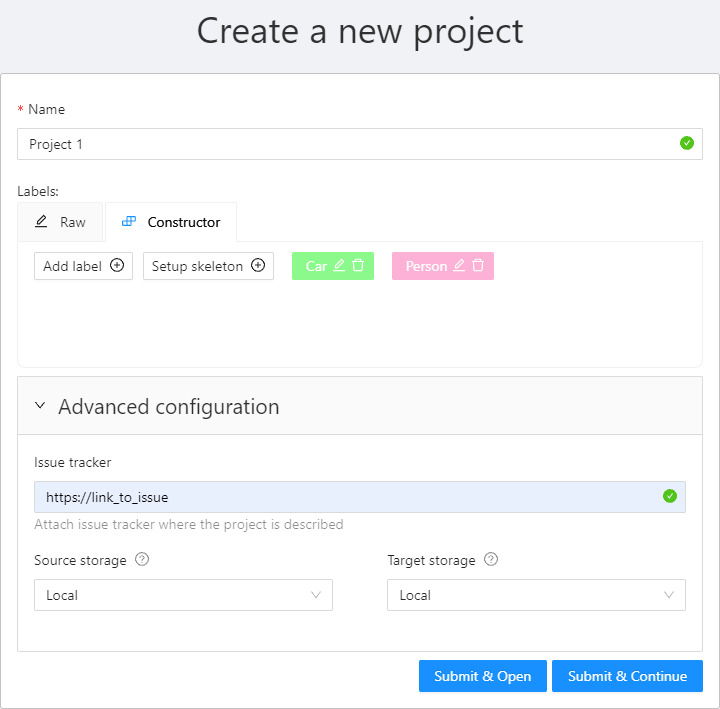

Read more about organizations.

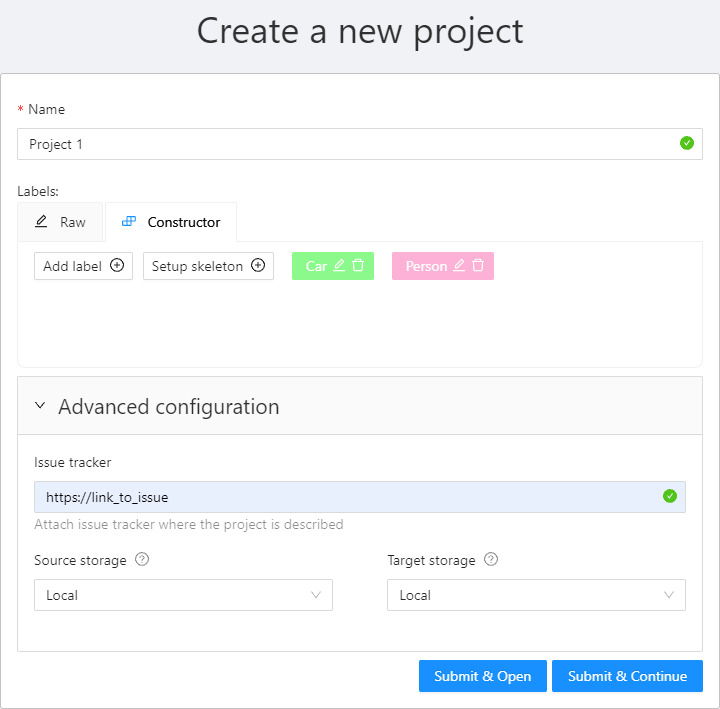

You can change the name of the project, the list of labels (which will be used for tasks created as parts of this project), and a link to the issue tracker.

Learn more about creating a label list.

To save and open the project, click Submit & Open. To create several projects in sequence, click Submit & Continue.

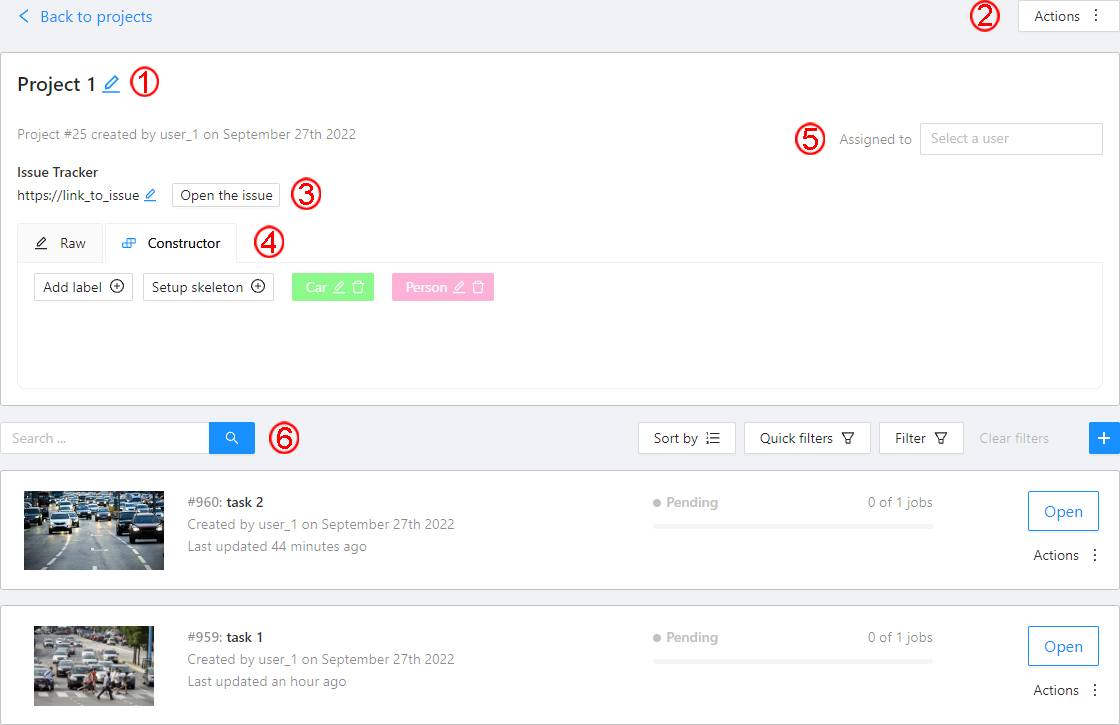

Once created, the project will appear on the projects page. To open a project, just click on it.

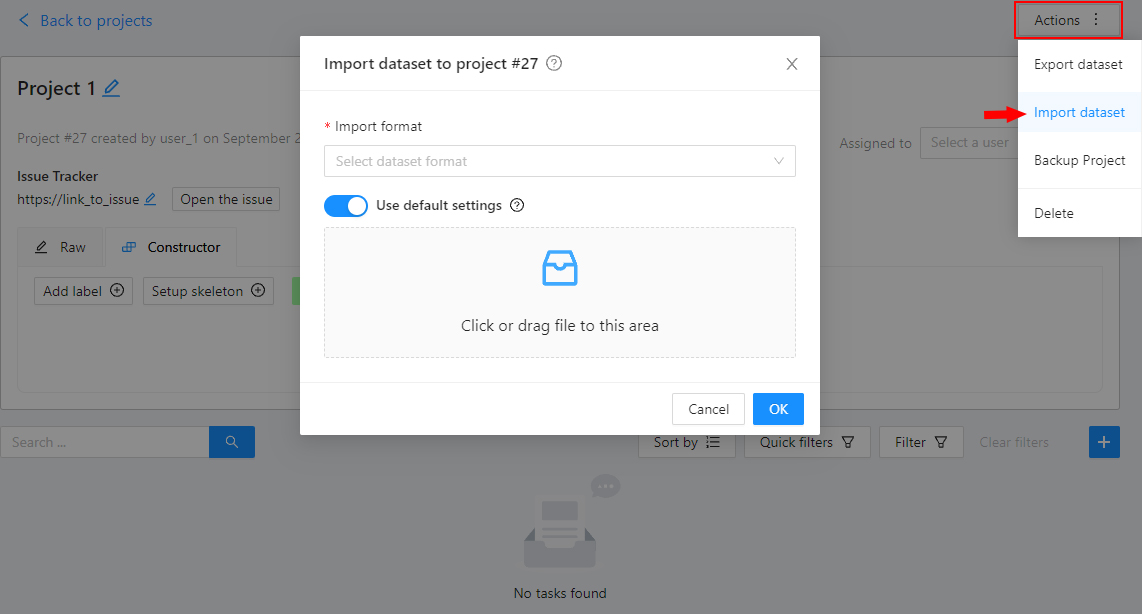

Here you can do the following:

- Change the project’s title.

- Open the

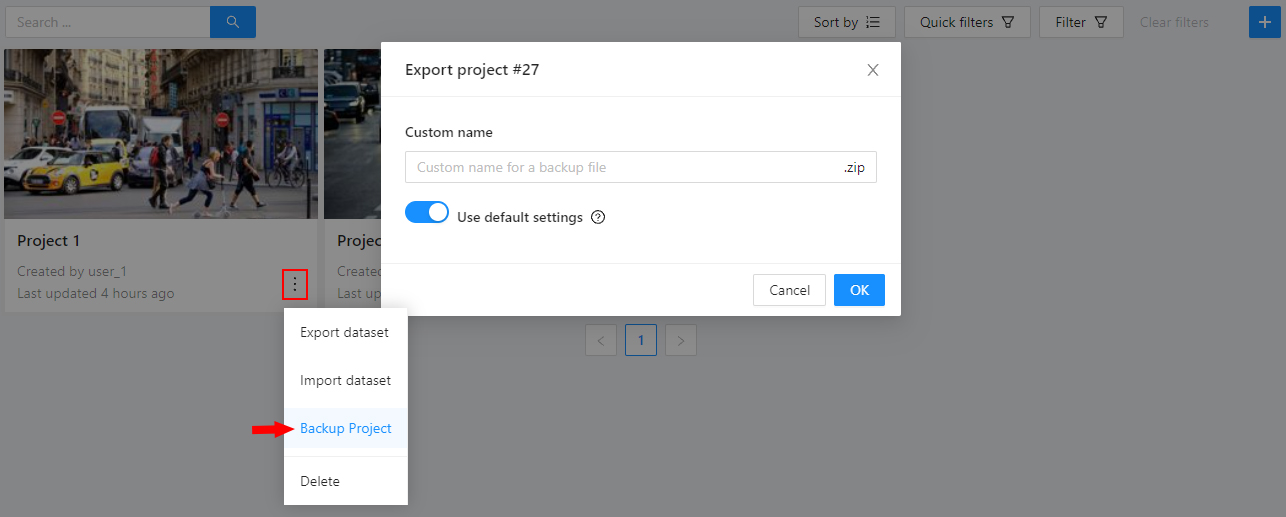

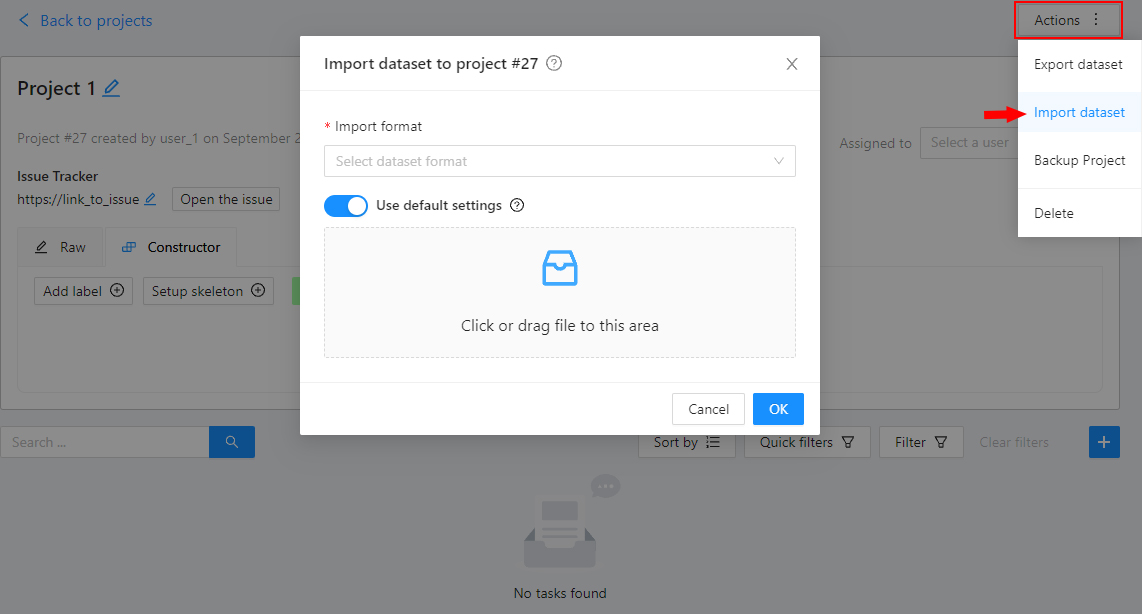

Actions menu. Each button in the Actions menu is responsible for a specific function:

  - Export dataset/Import dataset - download/upload annotations or annotations and images in a specific format. More information is available in the export/import datasets section.

  - Backup project - make a backup of the project. Read more in the backup section.

  - Delete - remove the project and all related tasks.

- Change the issue tracker, or open the issue tracker if it is specified.

- Change labels.

You can add new labels or add attributes for the existing labels in the Raw mode or the Constructor mode.

You can also change the color for different labels. By clicking Copy, you can copy the labels to the clipboard.

- Assigned to - used to assign a project to a person.

Start typing an assignee’s name and/or choose the right person from the dropdown list.

Tasks - a list of all tasks for a particular project, with the ability to search, sort, and filter tasks in the project.

Read more about search.

Read more about sorting and filtering.

It is possible to choose a subset for tasks in the project. You can use the available options

(Train, Test, Validation) or set your own.

2.2.2 - Organization

Using organizations in CVAT.

Personal workspace

Your Personal workspace will display the tasks and projects you’ve created.

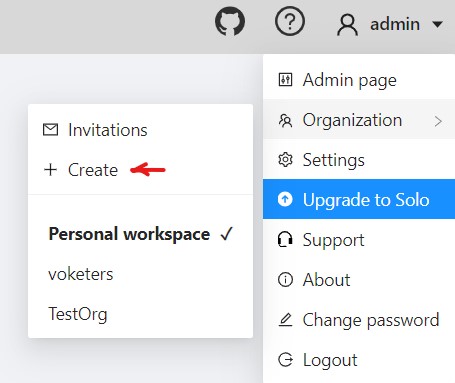

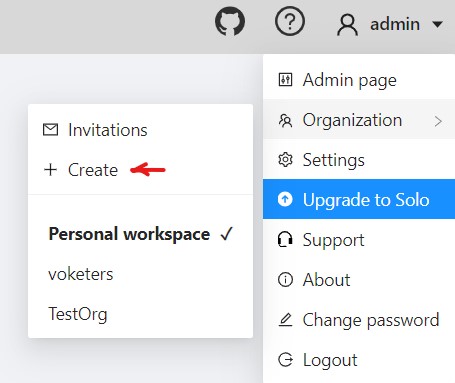

Create a new organization

To create an organization, open the user menu, go to Organization and click Create.

Fill in the required information to create your organization.

You need to enter a Short name of the organization, which will be displayed in the menu.

You can specify other fields: Full Name, Description, and the organization contacts.

All of them will be visible on the organization settings page.

Organization page

To go to the organization page, open the user menu, go to Organization and click Settings.

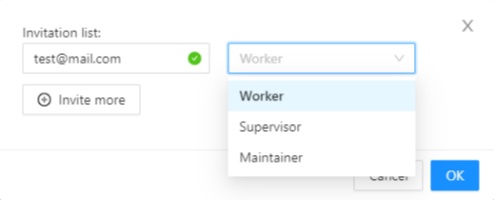

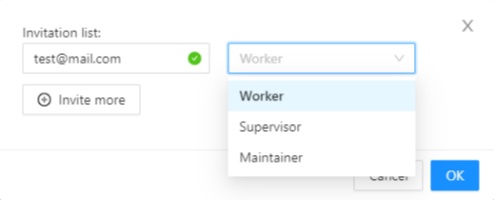

Invite members into organization

To add members, click Invite members. In the window that appears,

enter the email of the user you want to add and select the role (the role defines a set of rules):

- Worker - workers have access only to the tasks, projects, and jobs assigned to them.

- Supervisor - this role allows you to create and assign jobs, tasks, and projects to members of the organization.

- Maintainer - a member with this role has all the capabilities of the Supervisor role, sees all the tasks and projects created by other members of the organization, has full access to the Cloud Storages feature, and can modify members and their roles.

- Owner - a role assigned to the creator of the organization, with maximum capabilities.

In addition to roles, there are groups of users that are configured on the Admin page.

Read more about the roles in the IAM system roles section.
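To make the role descriptions concrete, here is a toy model of them in Python. It is a simplification written from the list above, not CVAT's IAM implementation; the names Role, can_view and the other helpers are hypothetical, and the rule that supervisors see what they created is an assumption the text does not state.

```python
from enum import IntEnum
from typing import Optional


class Role(IntEnum):
    # Ordered so that a higher role includes the capabilities of lower ones.
    WORKER = 1
    SUPERVISOR = 2
    MAINTAINER = 3
    OWNER = 4


def can_view(role: Role, user: str, creator: str, assignee: Optional[str]) -> bool:
    """Can `user` see a task, project, or job created by `creator`?"""
    if role >= Role.MAINTAINER:
        return True  # maintainers and owners see everything in the organization
    if role == Role.SUPERVISOR and user == creator:
        return True  # assumption: supervisors see what they created
    return user == assignee  # otherwise only what is assigned to the user


def can_create_and_assign(role: Role) -> bool:
    return role >= Role.SUPERVISOR


def can_manage_members(role: Role) -> bool:
    return role >= Role.MAINTAINER


def has_full_cloud_storage_access(role: Role) -> bool:
    return role >= Role.MAINTAINER
```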

After you add members, they will appear on your organization settings page, with each member listed along with the invitation details.

You can change a member’s role or remove a member at any time.

A member can leave the organization on their own by clicking Leave organization on the organization settings page.

Remove organization

You can remove an organization that you created.

Deleting an organization will delete all related resources (annotations, jobs, tasks, projects, cloud storages, etc.).

To remove an organization, click Remove organization. You will be asked to confirm the deletion by entering the short name of the organization.

2.2.3 - Search

Overview of available search options.

There are several ways to use the search.

- Search within all fields (owner, assignee, task name, task status, task mode). To run it, enter a search string in the search field.

- Search for specific fields. How to perform:

  - owner: admin - all tasks created by a user who has the substring “admin” in their name

  - assignee: employee - all tasks assigned to a user who has the substring “employee” in their name

  - name: training - all tasks with the substring “training” in their names

  - mode: annotation or mode: interpolation - all tasks with images or videos

  - status: annotation or status: validation or status: completed - search by status

  - id: 5 - the task with id = 5

- Multiple filters. Filters can be combined (except for the identifier) using the keyword AND:

  - mode: interpolation AND owner: admin

  - mode: annotation and status: annotation

The search is case insensitive.
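The search rules above can be modelled with a small parser. This is a simplified sketch, not CVAT's implementation: it assumes substring matching for every text field (including mode and status), an exact match for id, and a case-insensitive AND keyword; the task dicts and the function names are hypothetical.

```python
import re
from typing import Dict, List

FIELDS = ("owner", "assignee", "name", "mode", "status", "id")
TEXT_FIELDS = ("owner", "assignee", "name", "status", "mode")
# The AND keyword is matched case-insensitively, like the rest of the search.
AND_SPLIT = re.compile(r"\s+and\s+", re.IGNORECASE)


def parse_query(query: str) -> Dict[str, str]:
    """Turn 'field: value AND field: value' into {field: value}.

    A part without a known 'field:' prefix is stored under '*' and is
    matched against all text fields.
    """
    filters: Dict[str, str] = {}
    for part in AND_SPLIT.split(query.strip()):
        field, sep, value = part.partition(":")
        field = field.strip().lower()
        if sep and field in FIELDS:
            filters[field] = value.strip().lower()
        else:
            filters["*"] = part.strip().lower()
    if "id" in filters and len(filters) > 1:
        raise ValueError("id cannot be combined with other filters")
    return filters


def matches(task: Dict[str, object], filters: Dict[str, str]) -> bool:
    for field, value in filters.items():
        if field == "id":
            if str(task["id"]) != value:
                return False
        elif field == "*":
            if not any(value in str(task.get(f) or "").lower() for f in TEXT_FIELDS):
                return False
        elif value not in str(task.get(field) or "").lower():
            return False
    return True


def search(tasks: List[Dict[str, object]], query: str) -> List[Dict[str, object]]:
    filters = parse_query(query)
    return [t for t in tasks if matches(t, filters)]
```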

2.2.4 - Shape mode (advanced)

Advanced operations available during annotation in shape mode.

Basic operations in the mode were described in the shape mode (basics) section.

Occluded

Occlusion is an attribute used if an object is occluded by another object or isn’t fully visible on the frame. Use the Q shortcut to set the property quickly.

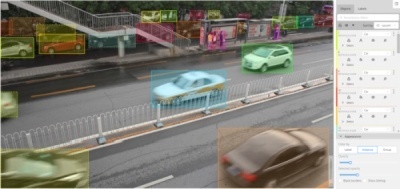

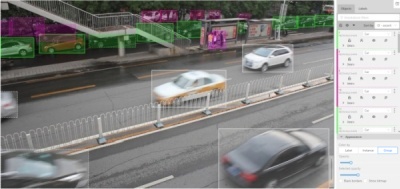

Example: the three cars on the figure below should be labeled as occluded.

If a frame contains too many objects and it is difficult to annotate them because many shapes overlap in the same area, it makes sense to lock them. Shapes of locked objects are transparent, which makes it easy to annotate new objects. Locking also prevents you from changing previously annotated objects by accident. Shortcut: L.

2.2.5 - Track mode (advanced)

Advanced operations available during annotation in track mode.

Basic operations in the mode were described in the track mode (basics) section.

Shapes that were created in the track mode have extra navigation buttons.

You can use the Split function to split one track into two tracks:

2.2.6 - 3D Object annotation (advanced)

Overview of advanced operations available when annotating 3D objects.

Like 2D-task objects, 3D-task objects let you change their appearance, attributes, and properties, and have an action menu. Read more in the objects sidebar section.

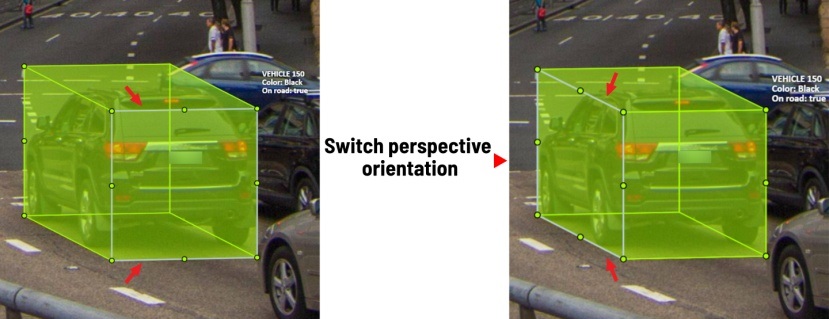

Moving an object

If you hover the cursor over a cuboid and press Shift+N, the cuboid will be cut, so you can paste it in another place (double-click to paste the cuboid).

Copying

As in 2D tasks, you can copy and paste objects with Ctrl+C and Ctrl+V, but unlike in 2D tasks, you have to place the copied object in 3D space (double-click to paste).

Image of the projection window

You can copy or save the projection-window image by left-clicking on it and selecting “save image as” or “copy image”.

2.2.7 - Attribute annotation mode (advanced)

Advanced operations available in attribute annotation mode.

Basic operations in the mode were described in the attribute annotation mode (basics) section.

In this mode, you can handle many objects on the same frame.

It is more convenient to annotate objects of the same type. In this case, you can apply the appropriate filter. For example, the following filter will hide all objects except person: label=="Person".

To navigate between objects (persons in this case), use the buttons on the special panel that switch between objects in the frame:

or shortcuts:

- Tab - go to the next object

- Shift+Tab - go to the previous object.

To change the zoom level, open the settings (press F3), go to the Workspace tab, and set the Attribute annotation mode (AAM) zoom margin value in px.
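The filter-then-navigate workflow can be sketched as follows: keep only the objects with the chosen label, then step forward (Tab) or backward (Shift+Tab). This is an illustration only; the object dicts and the AamNavigator class are hypothetical, and wrapping around from the last object to the first is an assumption about the UI.

```python
from typing import List, Optional


class AamNavigator:
    """Cycle through the objects left visible by a label filter."""

    def __init__(self, objects: List[dict], label: str):
        # Plays the role of a filter such as label=="Person".
        self.visible = [o for o in objects if o["label"] == label]
        self.index = 0

    def current(self) -> Optional[dict]:
        return self.visible[self.index] if self.visible else None

    def next_object(self) -> Optional[dict]:  # Tab
        if self.visible:
            self.index = (self.index + 1) % len(self.visible)
        return self.current()

    def previous_object(self) -> Optional[dict]:  # Shift+Tab
        if self.visible:
            # Python's % keeps the index non-negative, so this wraps to the end.
            self.index = (self.index - 1) % len(self.visible)
        return self.current()
```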

2.2.8 - Annotation with rectangles

To learn more about annotation using a rectangle, see the sections:

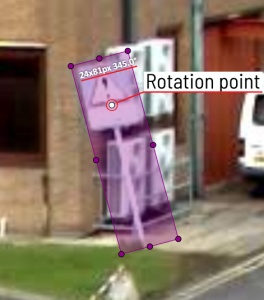

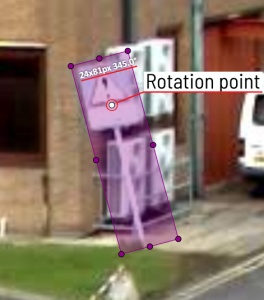

Rotation rectangle

To rotate the rectangle, pull on the rotation point. Rotation is done around the center of the rectangle.

To rotate at a fixed angle (a multiple of 15 degrees), hold Shift. While rotating, you can see the rotation angle.

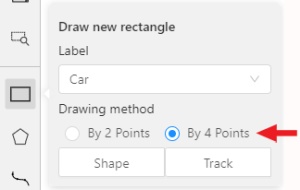

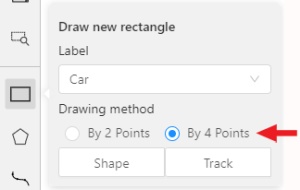
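The geometry behind rotation is a standard rotation of the corners about the rectangle's center. The sketch below also snaps an angle to a multiple of 15 degrees, as holding Shift does; rounding to the nearest multiple is an assumption (CVAT may snap differently), and the function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def snap_angle(angle_deg: float, step: float = 15.0) -> float:
    """Round an angle to the nearest multiple of `step`, normalised to [0, 360)."""
    return (round(angle_deg / step) * step) % 360


def rotate_rectangle(x1: float, y1: float, x2: float, y2: float,
                     angle_deg: float) -> List[Point]:
    """Corners of an axis-aligned box after rotating it about its center.

    In image coordinates (y axis pointing down) a positive angle turns
    the box clockwise on screen.
    """
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    corners = [(x1, y1), (x2, y1), (x2, y2), (x1, y2)]
    return [
        (cx + (x - cx) * cos_a - (y - cy) * sin_a,
         cy + (x - cx) * sin_a + (y - cy) * cos_a)
        for x, y in corners
    ]
```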

Annotation with rectangle by 4 points

It is an efficient method of bounding box annotation, proposed here.

Before starting, make sure that the drawing method by 4 points is selected.

Press Shape or Track to enter drawing mode. Click on four extreme points: the top, bottom, left- and right-most physical points on the object.

Drawing will be automatically completed right after clicking the fourth point.

Press Esc to cancel editing.

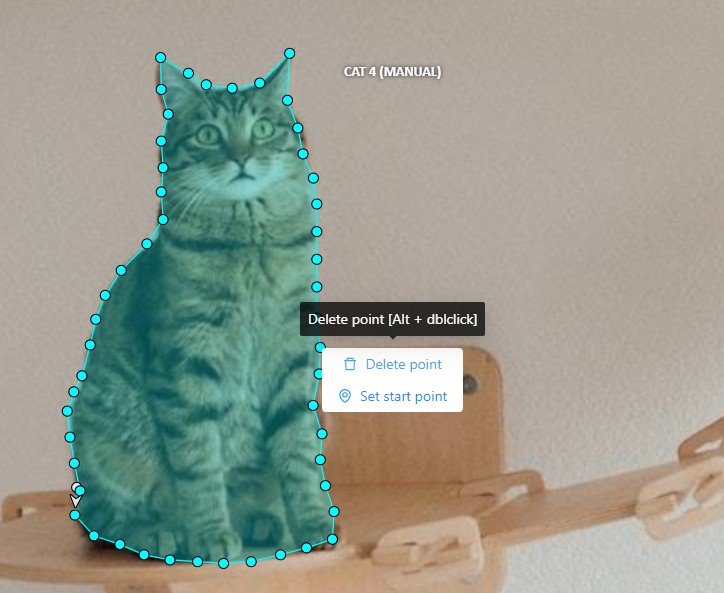

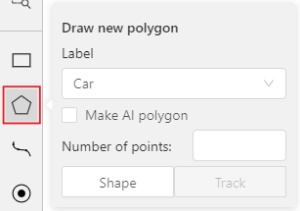
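Four extreme points fully determine the box: the left- and right-most points give its x range, and the top and bottom points give its y range, regardless of the click order. A minimal sketch, with a hypothetical function name and the (xtl, ytl, xbr, ybr) corner order chosen for illustration:

```python
from typing import Sequence, Tuple


def box_from_extreme_points(
    points: Sequence[Tuple[float, float]]
) -> Tuple[float, float, float, float]:
    """Return (xtl, ytl, xbr, ybr) for the four clicked extreme points."""
    if len(points) != 4:
        raise ValueError("expected exactly four points")
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)
```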

2.2.9 - Annotation with polygons

Guide to creating and editing polygons.

2.2.9.1 - Manual drawing

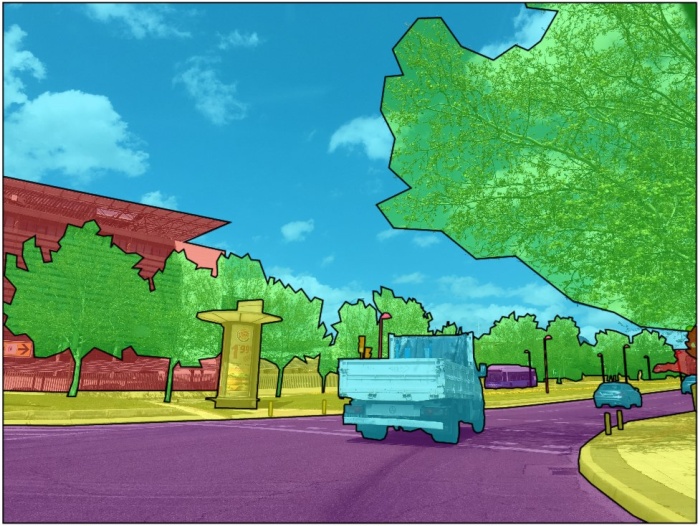

It is used for semantic / instance segmentation.

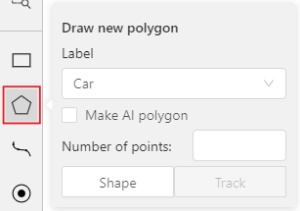

Before starting, you need to select Polygon on the controls sidebar and choose the correct Label.

- Click Shape to enter drawing mode.

There are two ways to draw a polygon: either create points by clicking or drag the mouse on the screen while holding Shift.

| Clicking points | Holding Shift+Dragging |
| --- | --- |

- When Shift isn’t pressed, you can zoom in/out (by scrolling the mouse wheel) and move the image (by clicking the mouse wheel and moving the mouse). You can also delete the previous point by right-clicking on it.

- You can use the Selected opacity slider in the Objects sidebar to change the opacity of the polygon.

You can read more in the Objects sidebar section.

- Press N again or click the Done button on the top panel to complete the shape.

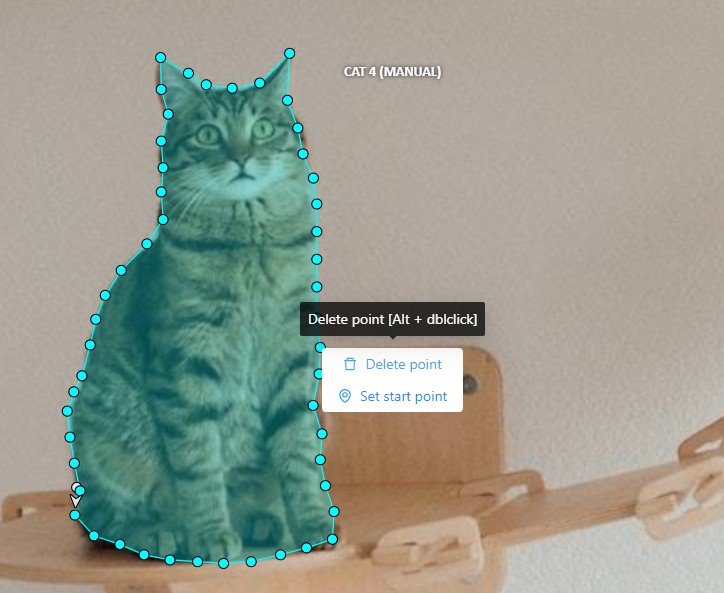

- After creating the polygon, you can move the points or delete them by right-clicking and selecting Delete point in the context menu, or by clicking a point with the Alt key pressed.

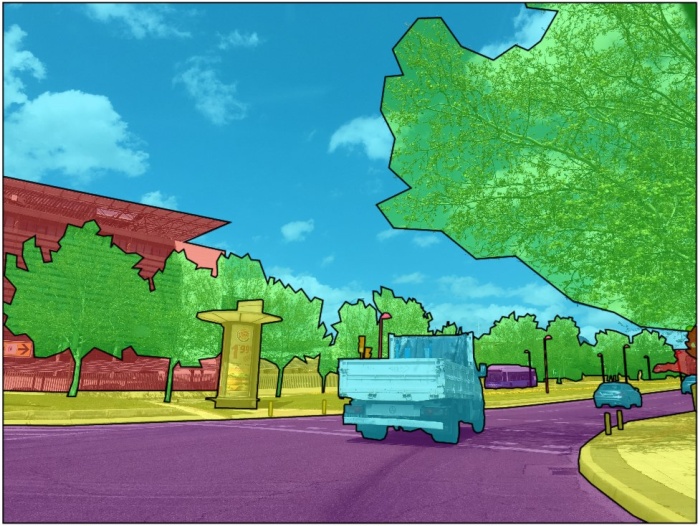

2.2.9.2 - Drawing using automatic borders

You can use automatic borders when drawing a polygon. They let you automatically trace the outline of polygons that already exist in the annotation.

- To do this, go to Settings -> Workspace tab and enable Automatic Bordering, or press Ctrl while drawing a polygon.

- Start drawing / editing a polygon.

- Points of other shapes will be highlighted, which means that the polygon can be attached to them.

- Define the part of the polygon path that you want to repeat.

- Click on the first point of the contour part.

- Then click on any point located on the desired part of the path. The selected point will be highlighted in purple.

- Click on the last point, and the outline to this point will be built automatically.

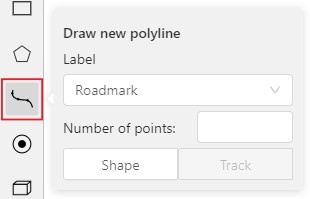
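The middle click matters because a closed outline offers two ways from the first point to the last one. The sketch below picks the way that passes through the middle point; it models the idea with vertex indices of an existing polygon and is not CVAT's code (border_path is a hypothetical name).

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def border_path(polygon: Sequence[Point], first: int, middle: int, last: int) -> List[Point]:
    """Vertices of `polygon` from `first` to `last`, going the way that visits `middle`."""
    n = len(polygon)

    def walk(step: int) -> List[int]:
        # Follow the closed outline in one direction until `last` is reached.
        idx, out = first, [first]
        while idx != last:
            idx = (idx + step) % n
            out.append(idx)
        return out

    forward = walk(1)
    path = forward if middle in forward else walk(-1)
    return [polygon[i] for i in path]
```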

You can also set a fixed number of points in the Number of points field; drawing then stops automatically when that number is reached. To enable dragging, right-click inside the polygon and choose Switch pinned property.

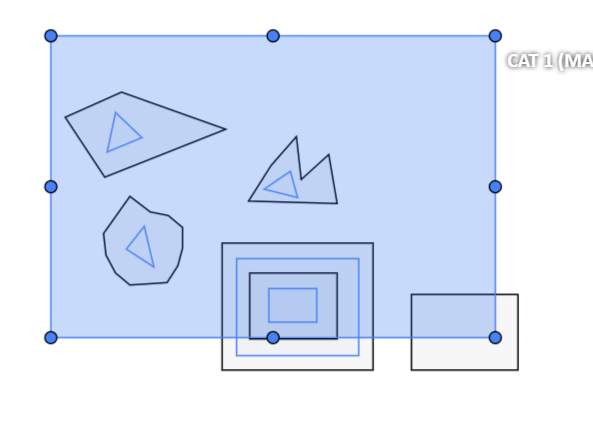

Below you can see results with opacity and black stroke:

If you need to annotate small objects, increase Image Quality to 95 in the Create task dialog for your convenience.

2.2.9.3 - Edit polygon

To edit a polygon, click on it while holding Shift. This opens the polygon editor.

- In the editor, you can create new points or delete part of a polygon by closing the line on another point.

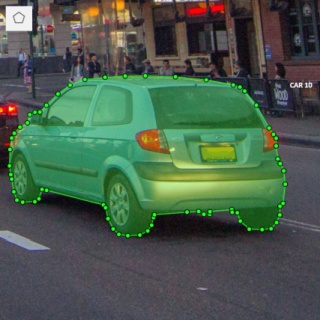

-

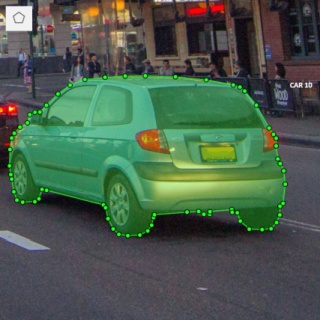

When Intelligent polygon cropping option is activated in the settings,

СVAT considers two criteria to decide which part of a polygon should be cut off during automatic editing.

- The first criteria is a number of cut points.

- The second criteria is a length of a cut curve.

If both criteria recommend to cut the same part, algorithm works automatically,

and if not, a user has to make the decision.

If you want to choose manually which part of a polygon should be cut off,

disable Intelligent polygon cropping in the settings.

In this case after closing the polygon, you can select the part of the polygon you want to leave.

-

You can press Esc to cancel editing.

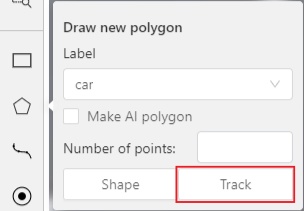

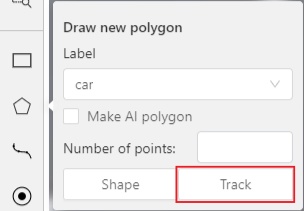
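The two-criteria decision of Intelligent polygon cropping can be sketched as below. The text does not say which way each criterion points, so this sketch assumes that the part with fewer points and the part with the shorter curve are the candidates for cutting; part_to_cut and path_length are hypothetical names, not CVAT functions.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def path_length(points: Sequence[Point]) -> float:
    """Length of an open polyline."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def part_to_cut(part_a: Sequence[Point], part_b: Sequence[Point]) -> Optional[str]:
    """Return 'a' or 'b' for the part to cut off, or None if the user must decide."""
    # Criterion 1 (assumed direction): cut the part with fewer points.
    by_points = "a" if len(part_a) < len(part_b) else "b" if len(part_b) < len(part_a) else None
    # Criterion 2 (assumed direction): cut the part with the shorter curve.
    len_a, len_b = path_length(part_a), path_length(part_b)
    by_length = "a" if len_a < len_b else "b" if len_b < len_a else None
    if by_points is not None and by_points == by_length:
        return by_points
    return None  # the criteria disagree (or tie): ask the user
```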

2.2.9.4 - Track mode with polygons

Polygons in the track mode allow you to mark moving objects more accurately other than using a rectangle

(Tracking mode (basic); Tracking mode (advanced)).

-

To create a polygon in the track mode, click the Track button.

-

Create a polygon the same way as in the case of Annotation with polygons.

Press N or click the Done button on the top panel to complete the polygon.

-

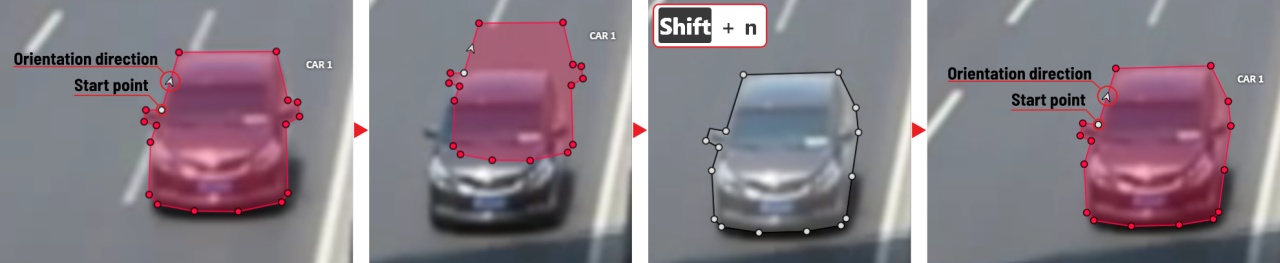

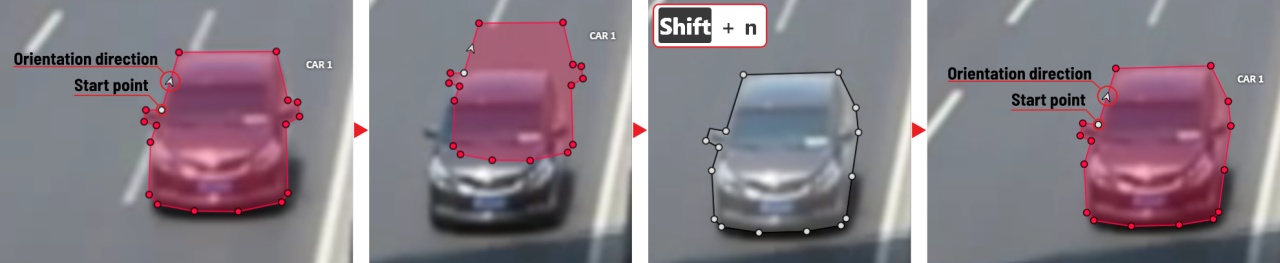

Pay attention to the fact that the created polygon has a starting point and a direction,

these elements are important for annotation of the following frames.

-

After going a few frames forward press Shift+N, the old polygon will disappear and you can create a new polygon.

The new starting point should match the starting point of the previously created polygon

(in this example, the top of the left mirror). The direction must also match (in this example, clockwise).

After creating the polygon, press N and the intermediate frames will be interpolated automatically.

-

If you need to change the starting point, right-click on the desired point and select Set starting point.

To change the direction, right-click on the desired point and select switch orientation.
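Why the starting point and direction must match: interpolation pairs the vertices of one keyframe with those of the next in order, starting from the starting point. The sketch below is plain linear interpolation of matching vertices and checks the direction with the sign of the shoelace area. It is a simplification under stated assumptions (same number of points on both keyframes); CVAT's own polygon interpolation may work differently, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def signed_area(poly: Sequence[Point]) -> float:
    """Shoelace formula; the sign of the result encodes the vertex order."""
    closed = list(poly[1:]) + [poly[0]]
    return 0.5 * sum(x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in zip(poly, closed))


def interpolate(start: Sequence[Point], end: Sequence[Point], frame: int,
                start_frame: int, end_frame: int) -> List[Point]:
    """Linearly interpolate matching vertices between two keyframes.

    Vertex 0 of each keyframe is its starting point, so vertex i of `start`
    moves towards vertex i of `end`.
    """
    if len(start) != len(end):
        raise ValueError("this sketch needs the same number of points on both keyframes")
    if (signed_area(start) > 0) != (signed_area(end) > 0):
        raise ValueError("keyframes must have the same direction")
    t = (frame - start_frame) / (end_frame - start_frame)
    return [(x0 + (x1 - x0) * t, y0 + (y1 - y0) * t)
            for (x0, y0), (x1, y1) in zip(start, end)]
```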

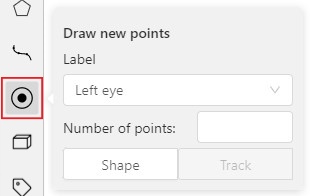

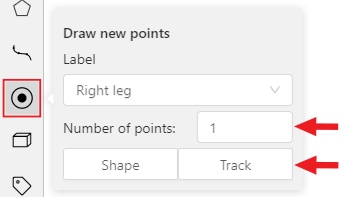

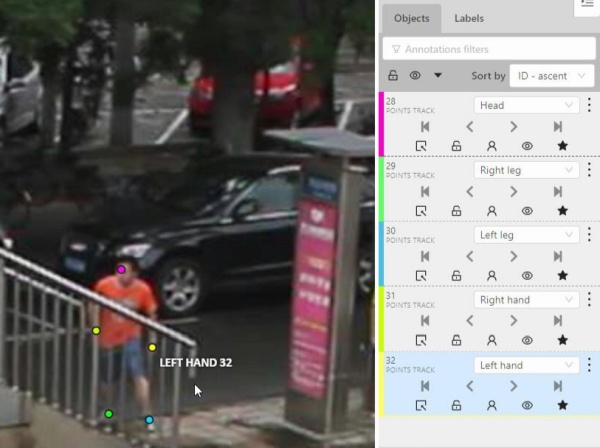

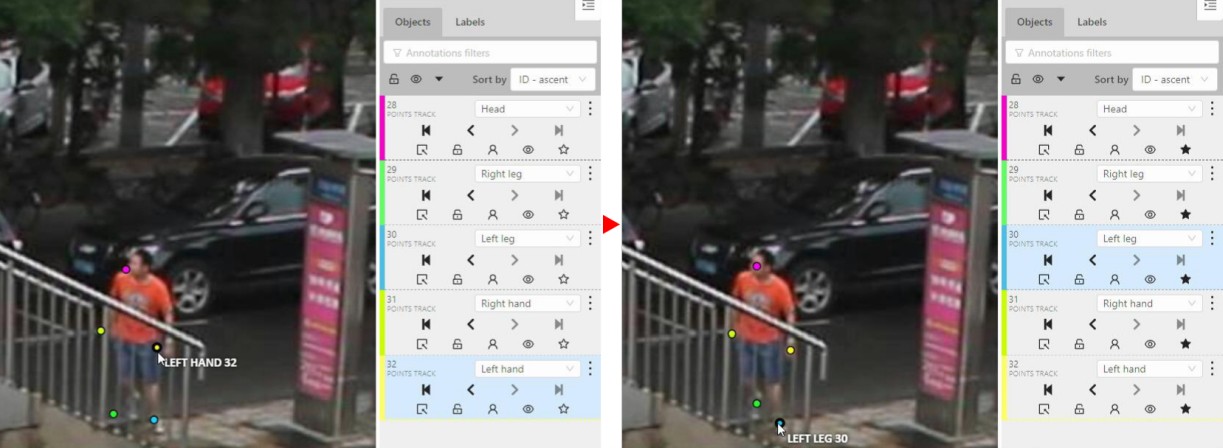

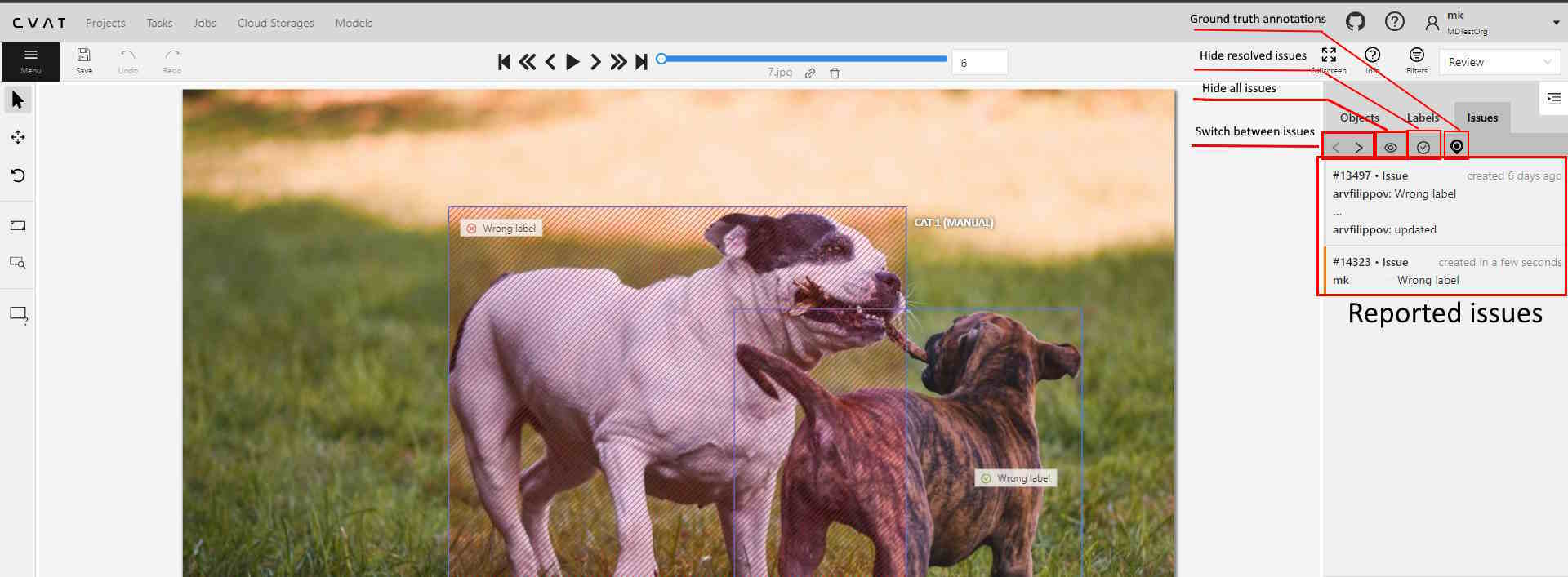

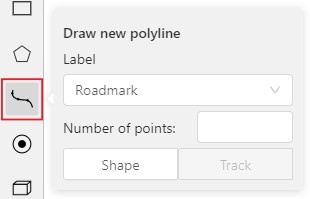

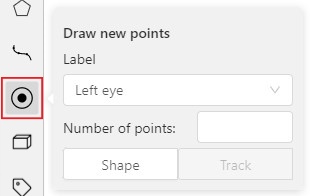

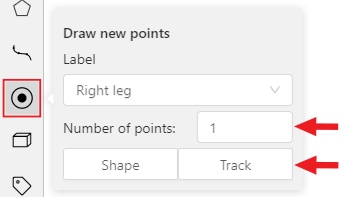

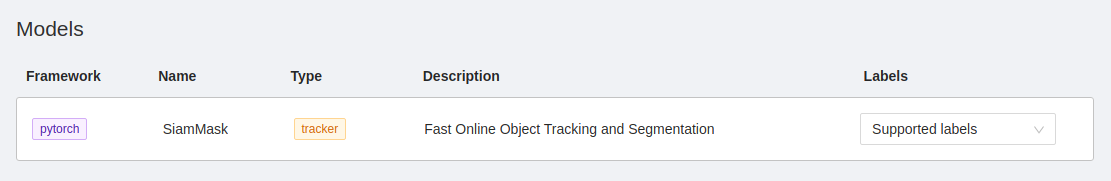

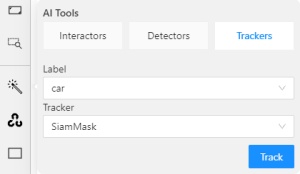

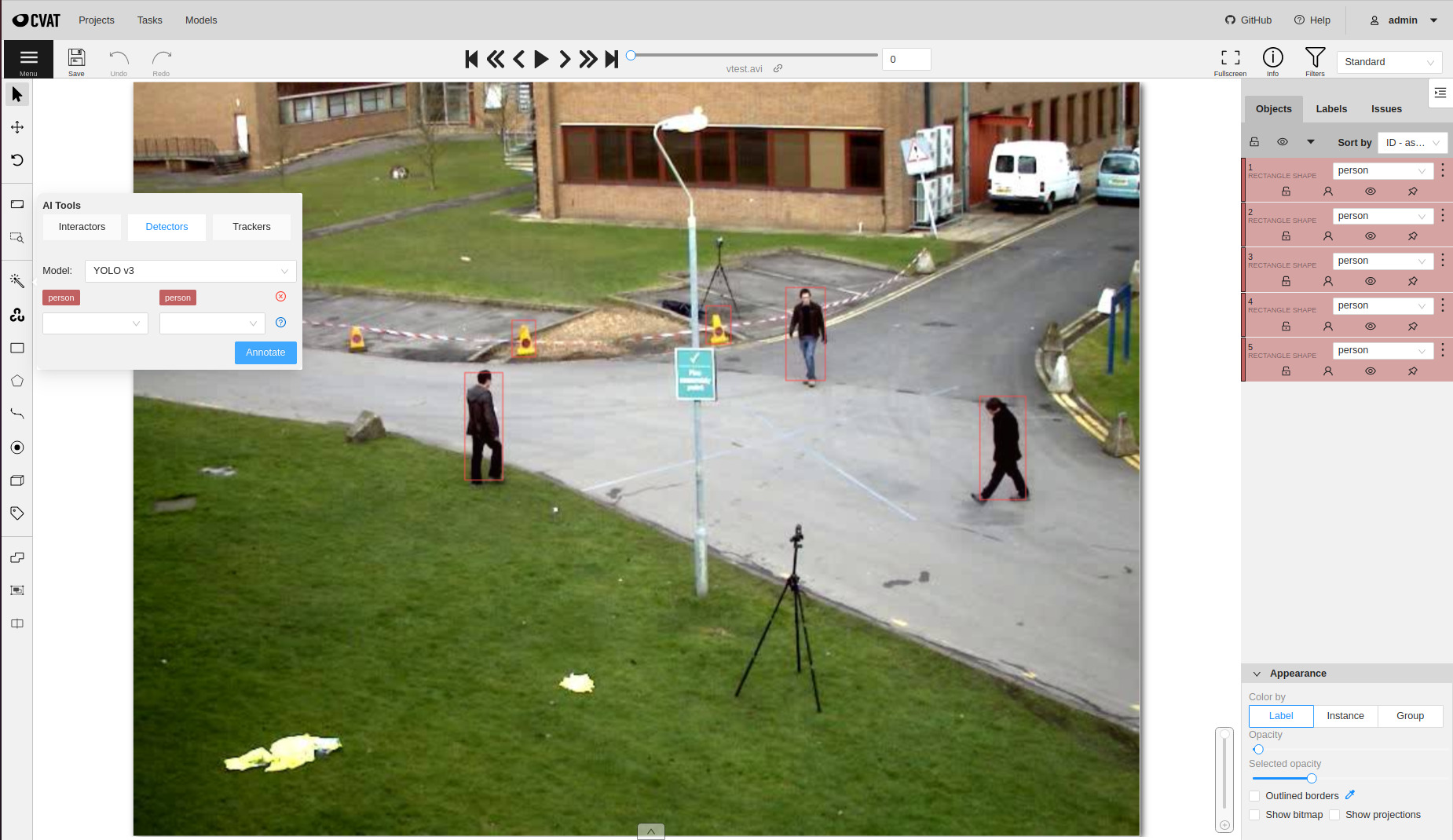

There is no need to redraw the polygon every time using Shift+N,