
Annotation

- 1: Editor

- 1.1: Menu and Navigation Bar

- 1.2: Workspace

- 1.3: Objects sidebar

- 1.4: Controls sidebar

- 1.5: Settings

- 1.6: 3D task workspace

- 2: Manual Annotation

- 2.1: Annotation modes

- 2.1.1: Single shape

- 2.1.2: Track mode

- 2.1.3: Attribute annotation mode

- 2.1.4: 3D object annotation

- 2.1.5: Annotation with tags

- 2.2: Annotation with shapes

- 2.2.1: Annotation with rectangles

- 2.2.2: Shape mode

- 2.2.3: Annotation with polygons

- 2.2.3.1: Manual drawing

- 2.2.3.2: Drawing using automatic borders

- 2.2.3.3: Edit polygon

- 2.2.3.4: Track mode with polygons

- 2.2.3.5: Creating masks

- 2.2.4: Annotation with polylines

- 2.2.5: Track mode

- 2.2.6: Annotation with points

- 2.2.6.1: Points in shape mode

- 2.2.6.2: Linear interpolation with one point

- 2.2.7: Annotation with ellipses

- 2.2.8: Annotation with cuboids

- 2.2.8.1: Creating the cuboid

- 2.2.8.2: Editing the cuboid

- 2.2.9: Annotation with skeletons

- 2.2.10: Annotation with brush tool

- 2.2.11: Types of shapes

- 2.2.12: Shape grouping

- 2.3: Editing & Utility Tools

- 2.3.1: Join and slice tools

- 2.3.2: Shapes converter

- 2.3.3: Contextual images

- 2.3.4: Filter

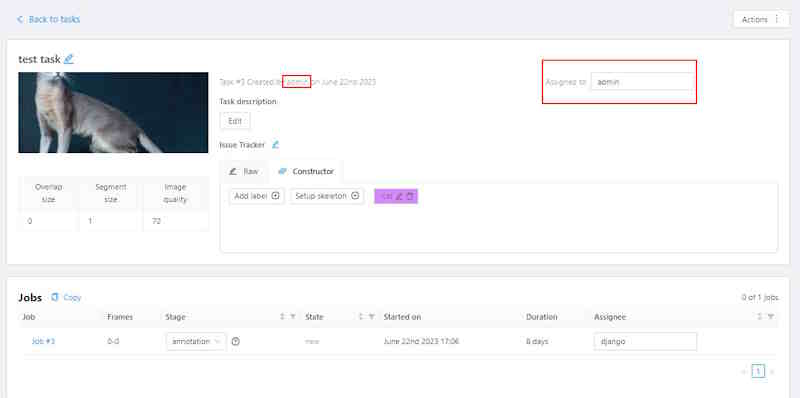

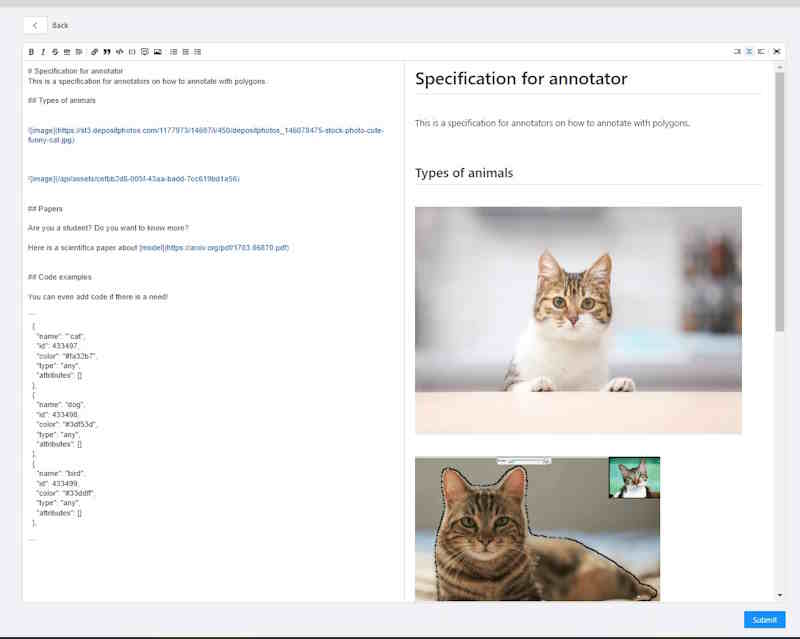

- 3: Specification for annotators

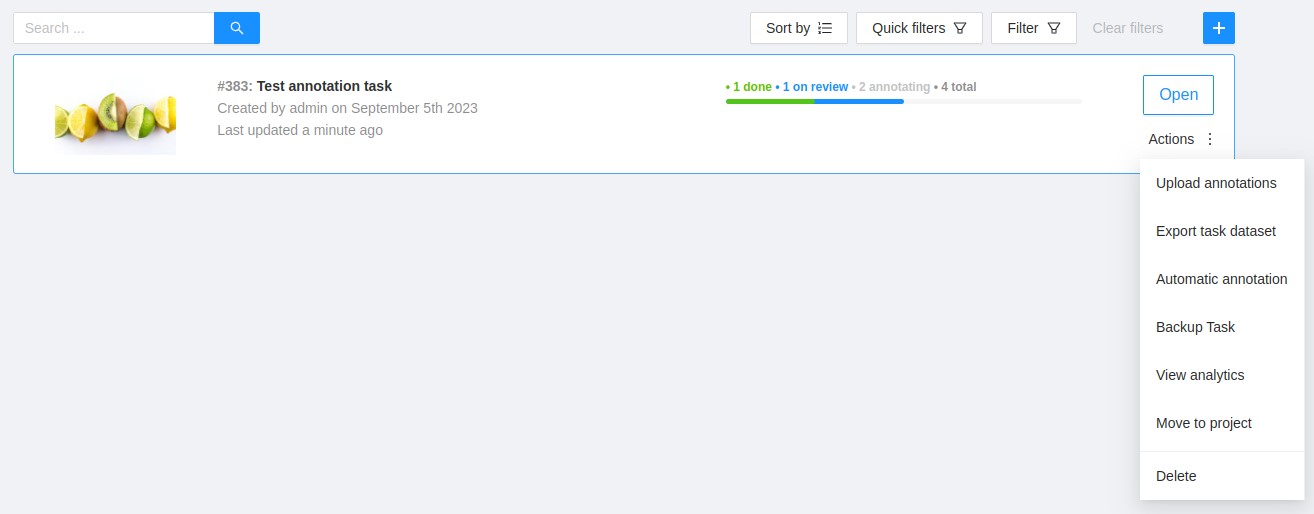

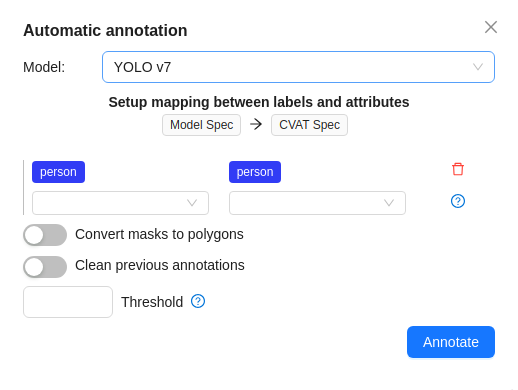

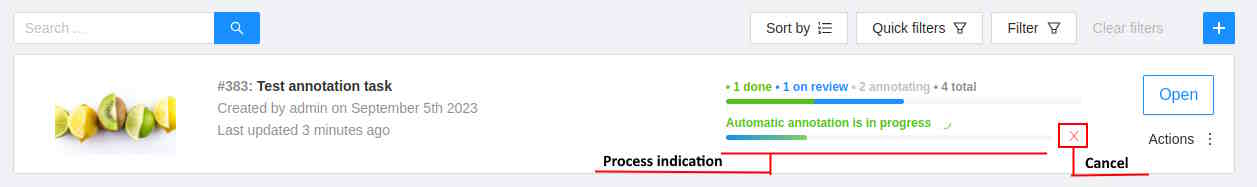

- 4: Automated Annotation

- 4.1: Overview

- 4.2: Segment Anything 2 Tracker

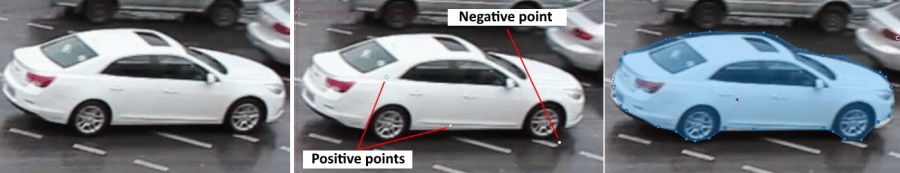

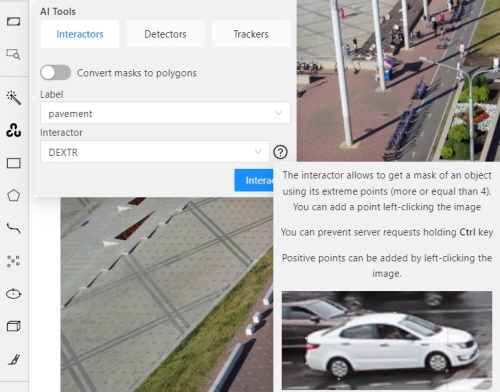

- 4.3: AI Tools

1 - Editor

This section of the documentation describes the annotation interface and all available options that you can use to annotate image data accurately and quickly.

The interface includes the following areas:

1.1 - Menu and Navigation Bar

The navigation panel and the drop-down Menu allow you to switch between frames, change the annotation mode, save your work, and more.


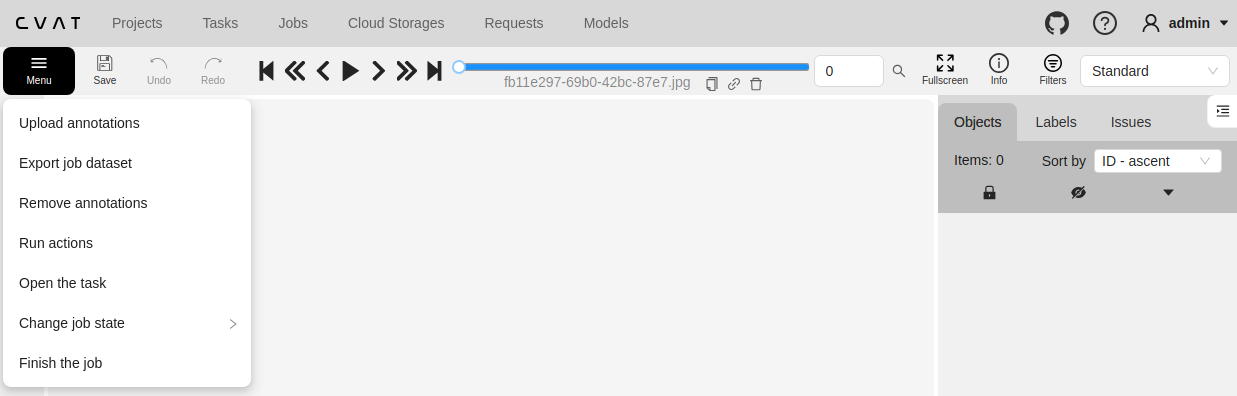

Menu

Use the Menu options to upload and download annotations, change the status of the job, and access other features listed in the table below:

| Panel Item | Description |

|---|---|

| Upload annotations | Upload annotations into a task. |

| Export as a dataset | Download a dataset in one of the supported formats. |

| Remove annotations | Delete all annotations for the current job. Use Select range to remove annotations for a specific range of frames. Enable the Delete only keyframe for tracks checkbox to delete only keyframes from the tracks within the selected range.  |

| Preload data | Activates background preloading of job chunks. This feature loads data in advance to ensure smoother playback and navigation. The progress of loaded chunks is displayed on the player slider, which fills with blue color as chunks are preloaded. To stop preloading, click Cancel preload. |

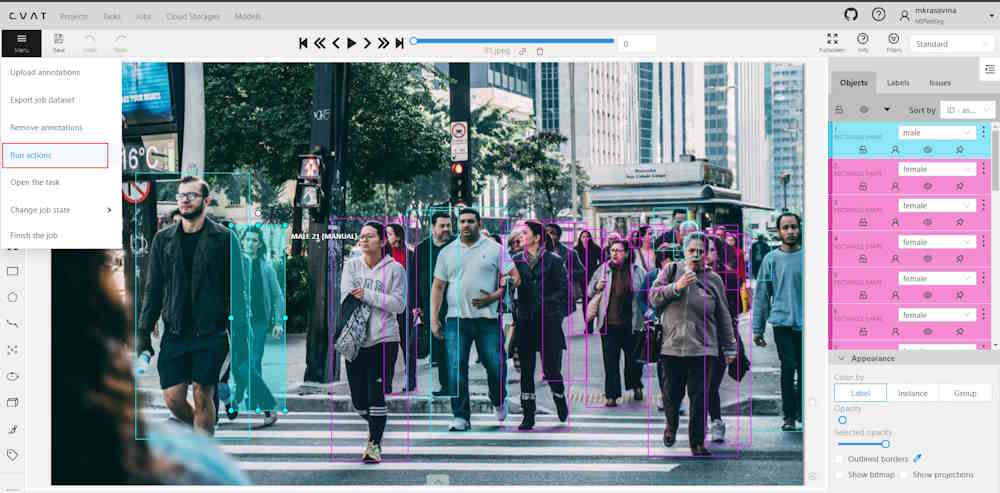

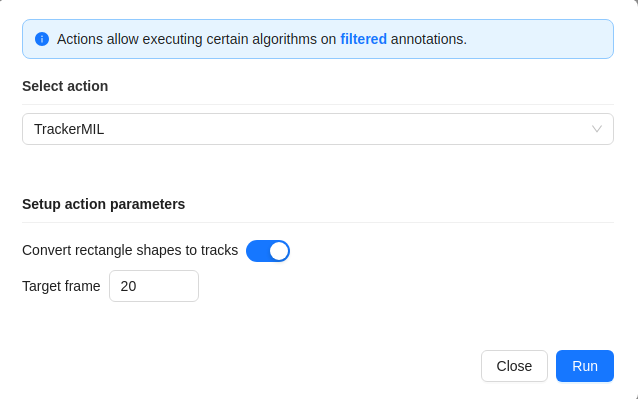

| Run actions | Run annotation actions on the annotated dataset. Annotations action is a feature that allows you to modify a bulk of annotations on many frames. It supports only shape objects. |

| Open the task | Opens a page with details about the task. |

| Change job state | Changes the state of the job:

|

| Finish the job | Saves annotations and sets job state to Completed. |

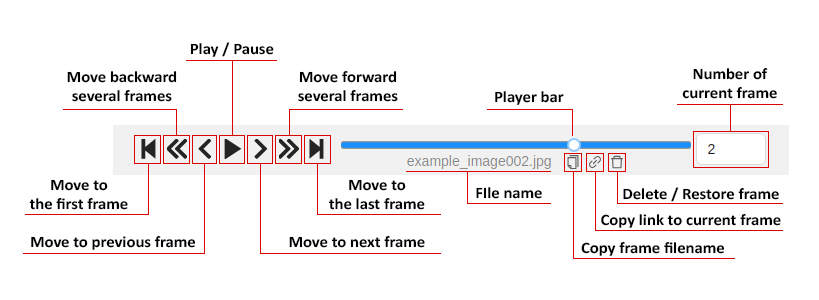

Navigation bar

Use the navigation bar to save annotation results, switch between frames, and access other features listed in the tables below.

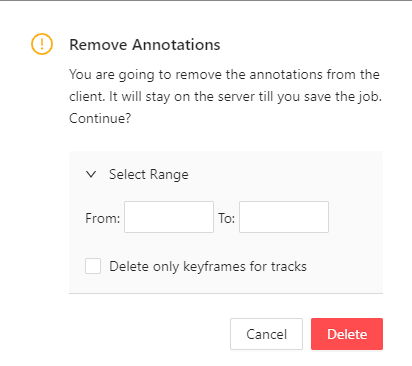

Save, Undo, Done

Use the following buttons to save your work, undo or redo changes, and finish creating objects.

| Function | Description |
|---|---|
| Save work | Saves annotations for the current job. The button indicates the saving process. |
| Undo/Redo | Use the buttons to undo or redo actions. |
| Done | Completes the creation of the object. This button appears only while an object is being created. |
| Block | Pauses automatic line creation when drawing a polygon with OpenCV Intelligent scissors. Also postpones server requests when creating an object using AI Tools. |

Navigation controls

Use the navigation controls to move between frames. The table below describes each control.

| Function | Description |

|---|---|

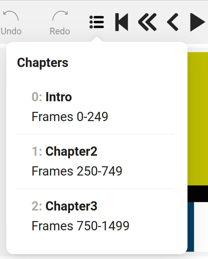

Toggle chapter menu |

Presents the list of chapters stored in the metadata of the video file. Click on a chapter in the list to seek the player to the start frame of this chapter.  Only available in video tasks and if the video file contains chapters. |

Go to the first/last frame |

Navigate to the first or the last frame of the sequence. |

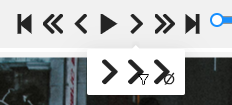

Go back with a step/Go next with a step |

Move to the previous or next frame by a predefined step. Shortcuts: Default step size is 10 frames. To modify this, navigate to Nickname > Settings > Player Step. |

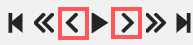

Go back/Go next |

Navigate to the neighboring frames. Shortcuts: Go back/Go next buttons are customizable:  To customize, right-click on the button and select one of the options (from left to right):

|

Play/Pause |

Switch between playing and pausing the sequence of frames or set of images. Shortcut: Space. To adjust the playback speed, go to Nickname > Settings > Player Speed. |

Go to the specific frame |

Enter the number of the frame you want to go to and press Enter. |

| Search frame by filename |

Click to open the search pop-up. Type a frame filename to search for it within the job. Select the filename and press Enter to navigate to the selected frame. |

| Copy frame name |

Click to copy frame name to the clipboard. |

| Copy frame link |

Click to copy link to the frame. |

| Delete frame |

Click to delete or restore current frame. |
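The step buttons amount to simple arithmetic on the frame number. Below is a minimal sketch, assuming the target frame is clamped to the first and last frame of the job; `step_frame` is a hypothetical helper for illustration, not part of CVAT.

```python
def step_frame(current: int, first: int, last: int, step: int = 10, backward: bool = False) -> int:
    """Return the frame reached by Go back/Go next with a step.

    Assumption: the result is clamped to the job's frame range [first, last].
    The default step of 10 mirrors the default Player Step setting.
    """
    target = current - step if backward else current + step
    return max(first, min(last, target))


print(step_frame(5, 0, 100))                 # 15
print(step_frame(95, 0, 100))                # 100 (clamped to the last frame)
print(step_frame(5, 0, 100, backward=True))  # 0 (clamped to the first frame)
```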

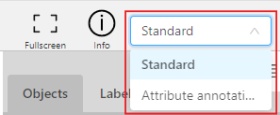

Job information and Annotation Mode switcher

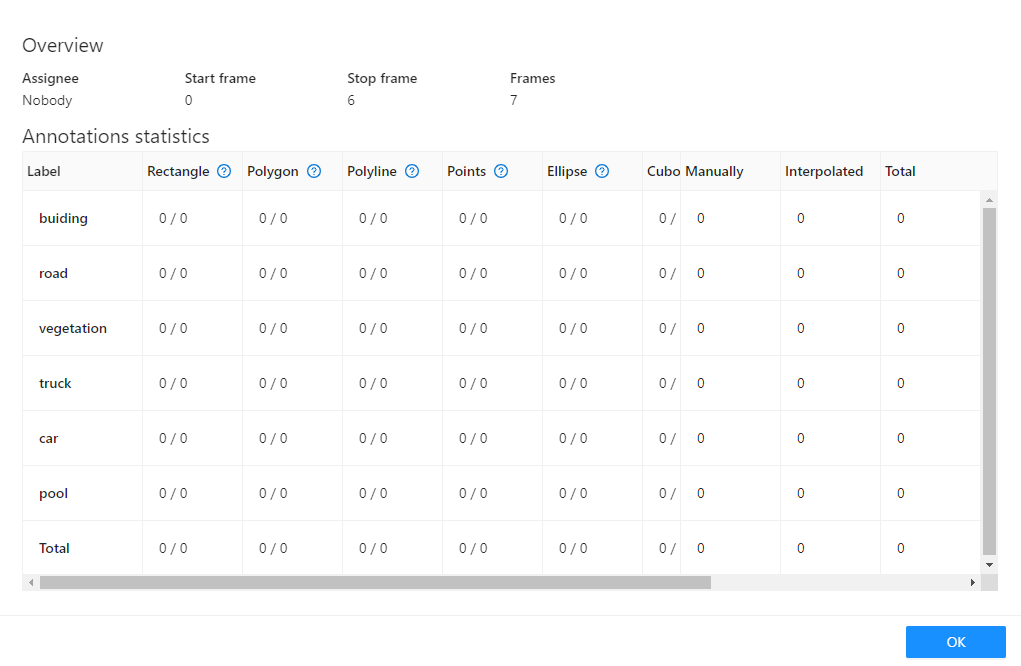

This section outlines various functionalities, including how to switch to the fullscreen player mode, access job information, and use the Workspace Switcher to toggle between different annotation and QA modes.

| Function | Description |

|---|---|

Fullscreen |

The fullscreen player mode. The keyboard shortcut is F11. |

Info |

Open the job info.  Overview:

Annotations Statistics table displays the number of created shapes, categorized by labels (e.g. vehicle, person) and the type of annotation (shape, track), as well as the count of manual and interpolated frames. |

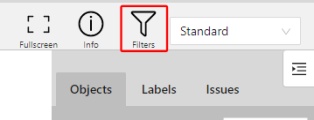

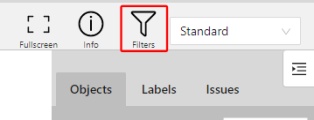

Filters |

Switches on Filters. |

| Workplace Switcher | The drop-down list to switch between different annotation modes:  Overview:

|

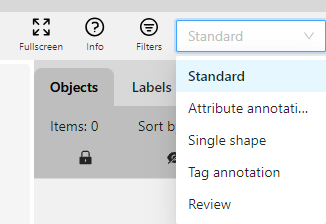

1.2 - Workspace

In CVAT, the workspace serves as a work area where annotators interact with images, videos, and the various tools available to create high-quality annotations.


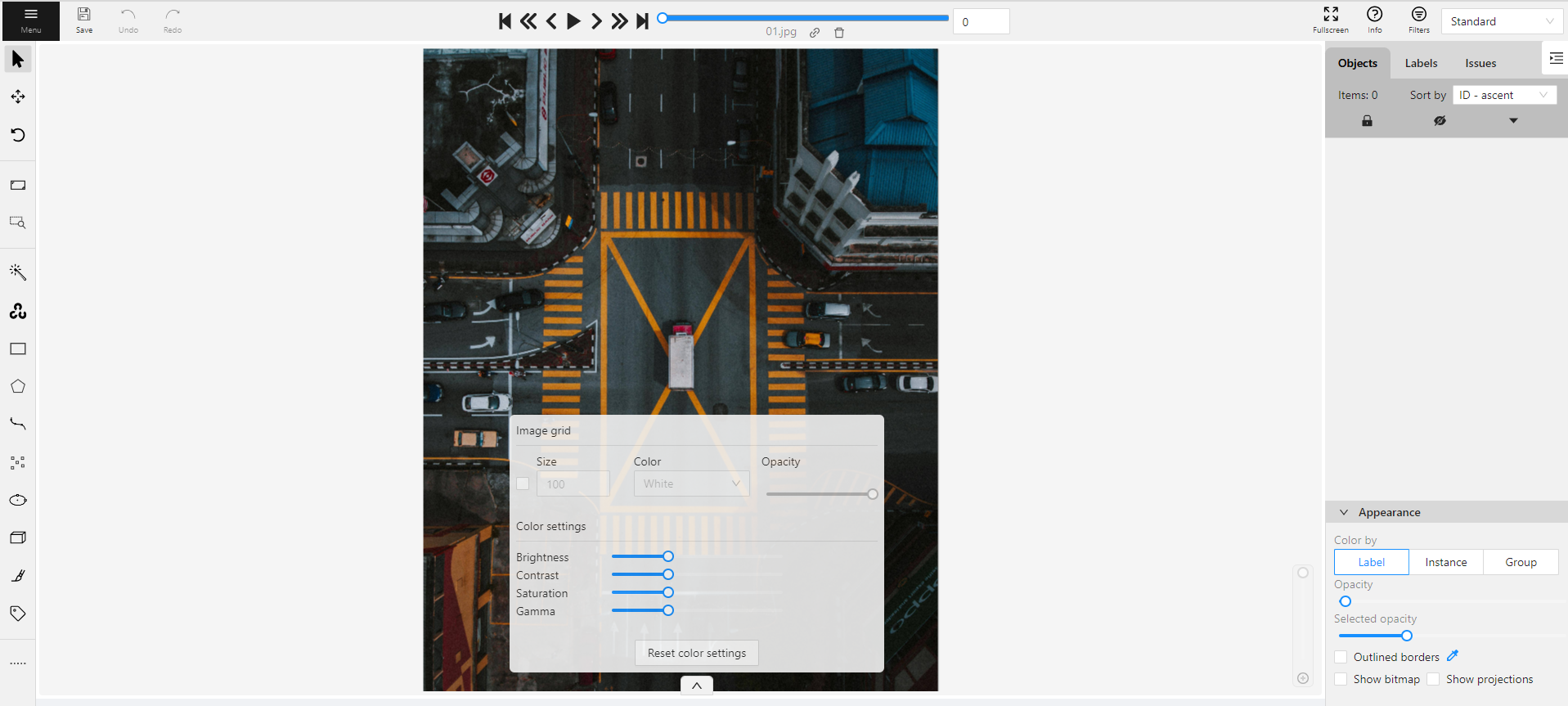

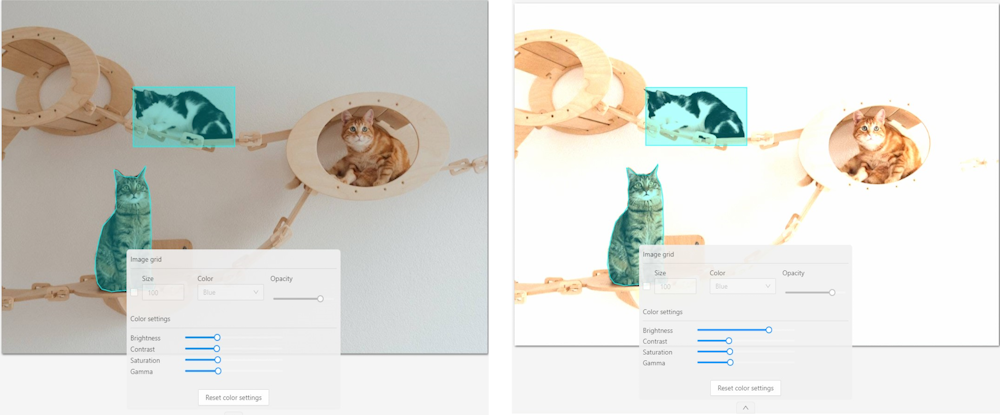

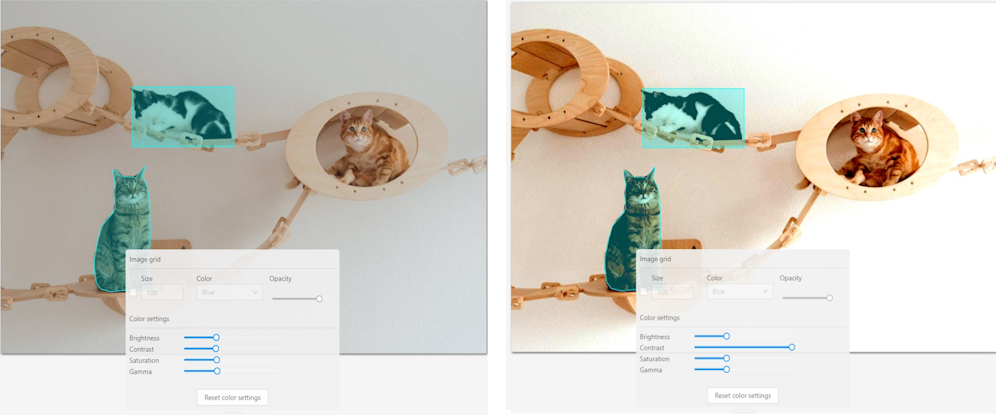

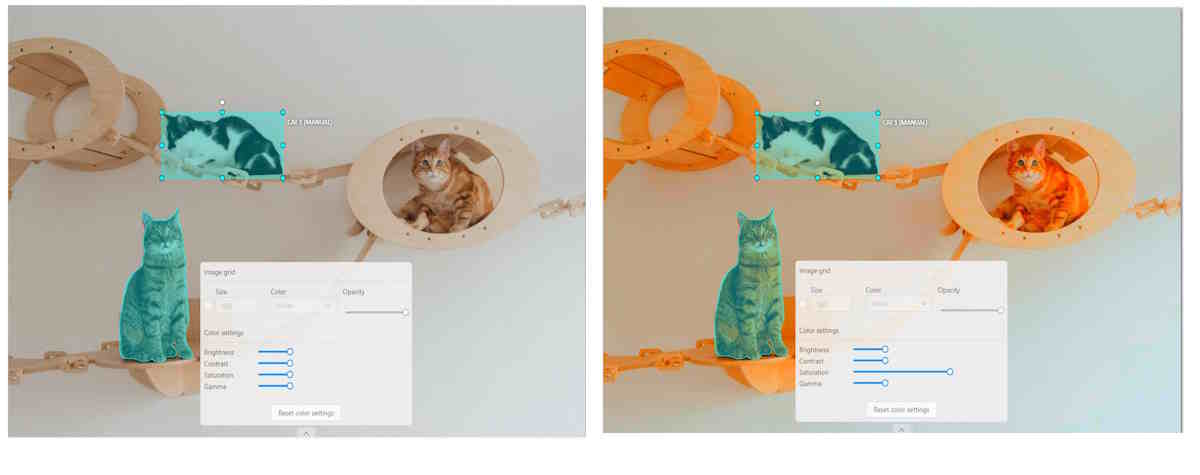

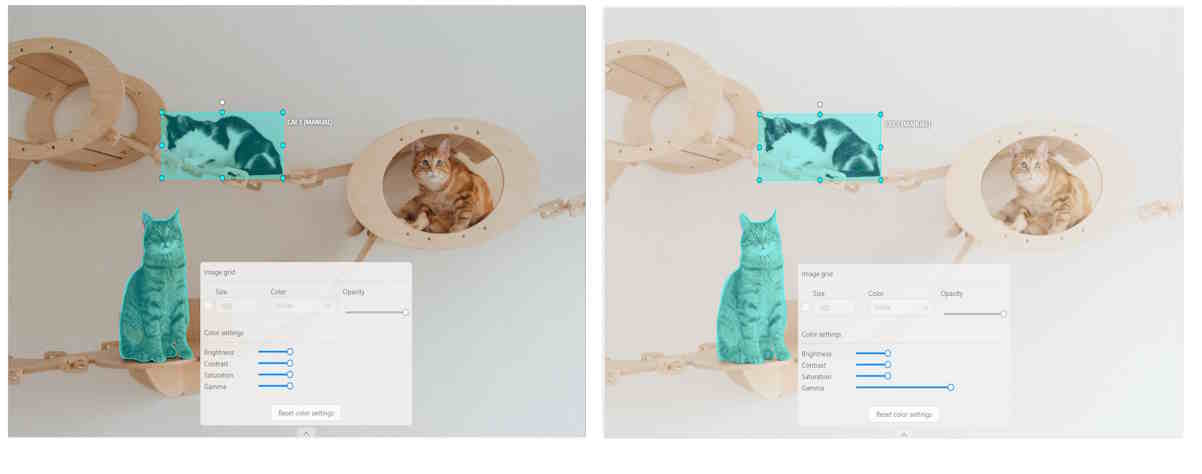

Image settings in CVAT

Use the Image settings panel to fine-tune how the image is displayed, for example to brighten it, increase contrast, or make other adjustments.

Additionally, the panel allows you to overlay a grid on the image for more precise annotation.

Note

Adjusting the image settings only alters how the images are displayed. The images themselves remain unchanged.

By default, the Image settings panel is not visible. To open it, click the Arrow Up icon at the bottom of the workspace.

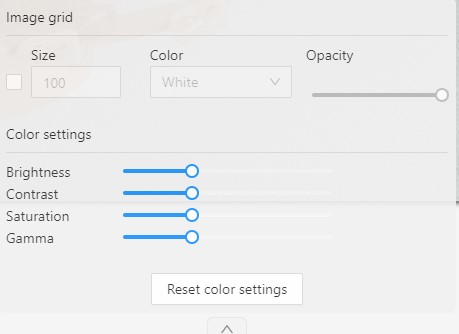

Adding grid overlay to image in CVAT

To add the grid to the image, do the following:

- Open the Image Settings panel.

- Locate and check the box that allows you to overlay a grid on the image.

- Specify the grid cell size in square millimeters by entering the desired number in the Size field.

- From the Color drop-down list, select the color of the grid.

- Use the Opacity slider to change the transparency of the grid overlay.

Changing color settings of image in CVAT

To change the color settings of the image in CVAT, do the following:

- Open the Image Settings panel.

- Use the slider to change the color quality.

There are four color settings in CVAT:

- Brightness increases or decreases the overall lightness of the image.

- Contrast is the range of brightness, from lightest to darkest, in an image.

- Saturation describes the intensity of the color.

- Gamma correction can be used to control the overall brightness of an image.

To reset the settings to their default values, click Reset color settings.
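Because these settings only change how the image is displayed, each one can be thought of as a per-pixel function. The sketch below uses common textbook definitions of brightness, contrast, saturation, and gamma; it is an assumption for illustration, not the formulas CVAT actually applies, and the function names are hypothetical.

```python
def clamp01(x: float) -> float:
    """Keep a normalized channel value inside [0, 1]."""
    return min(max(x, 0.0), 1.0)


def adjust_channel(value: int, brightness: float = 1.0, contrast: float = 1.0, gamma: float = 1.0) -> int:
    """Adjust one 8-bit channel value for display; 1.0 leaves each setting unchanged."""
    x = value / 255.0
    x = x * brightness              # brightness: scale overall lightness
    x = (x - 0.5) * contrast + 0.5  # contrast: stretch values around mid-grey
    x = clamp01(x)
    x = x ** (1.0 / gamma)          # gamma > 1 lifts mid-tones, gamma < 1 darkens them
    return round(x * 255)


def adjust_saturation(rgb: tuple[int, int, int], saturation: float) -> tuple[int, int, int]:
    """Blend each channel toward the pixel's luma; 0.0 gives greyscale, 1.0 is unchanged."""
    r, g, b = rgb
    luma = 0.299 * r + 0.587 * g + 0.114 * b
    return tuple(round(255 * clamp01((luma + (c - luma) * saturation) / 255)) for c in rgb)
```

The source pixels are never modified; only the returned display values change, which matches the note that the images themselves stay unchanged.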

Adding layers and Z-axis slider

The Z-axis slider lets you add annotation layers while hiding the layers above the selected one.

You can also move between layers by moving the slider to the layer you need.

The slider becomes active when multiple Z-layers are present within a frame. Click + on the slider to add a new layer; upon pressing it, a new layer is automatically created and activated.

You can also relocate objects between layers using the + and - keys.

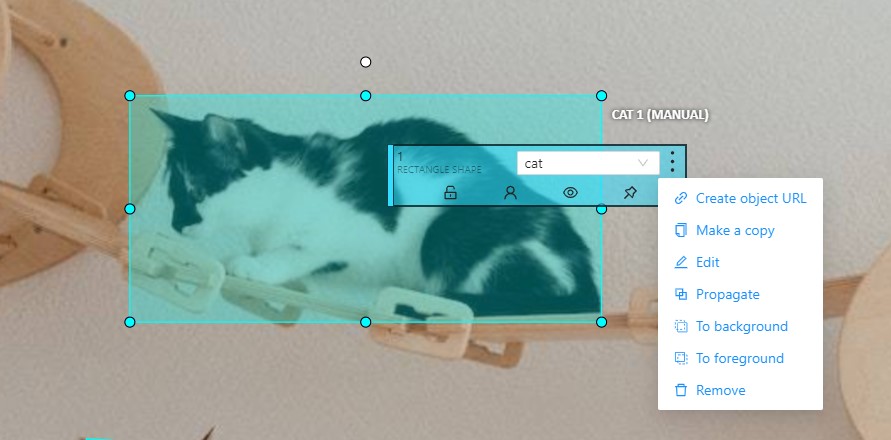

Interacting with Objects

The workspace is also equipped with the following features:

-

Right-clicking an object opens the Object Card. This interface contains essential controls for modifying the object’s label and attributes, as well as providing access to an action menu.

-

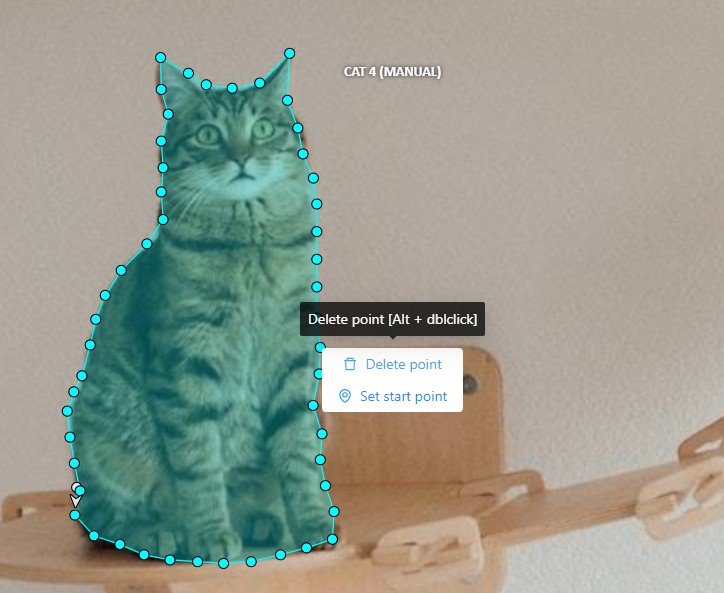

Right-clicking on a polygon point will open a menu, from which you can Delete point or Set start point.

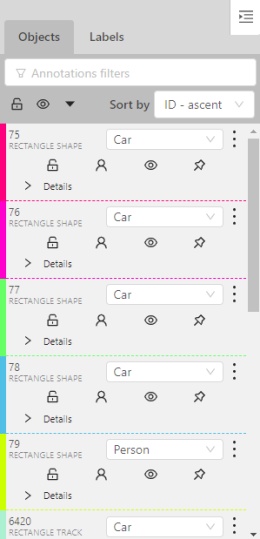

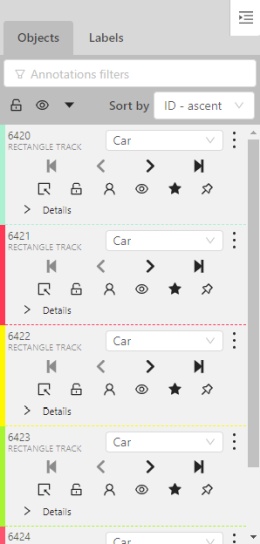

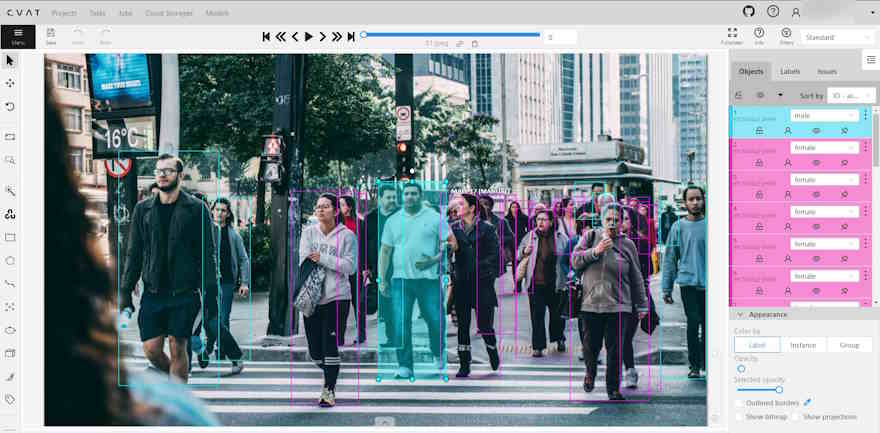

1.3 - Objects sidebar

In the objects sidebar, you can see the list of available objects on the current frame. The following figure shows an example of how the list might look:

Figure: the objects list in Shape mode and in Track mode.

Objects properties

Filter input box

How to use filters is described in the advanced guide.

List of objects

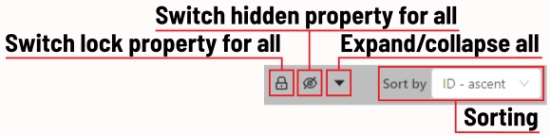

- Switch lock property for all - switches the lock property of all objects in the frame.

- Switch hidden property for all - switches the hidden property of all objects in the frame.

- Expand/collapse all - collapses/expands the details field of all objects in the frame.

- Sorting - sorts the list of objects by updated time, ID (ascending), or ID (descending).

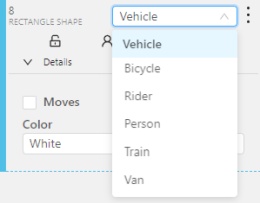

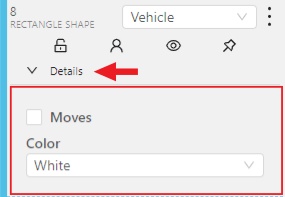

Objects on the sidebar

The label of a shape can be changed using the Label property, as shown in the figure below:

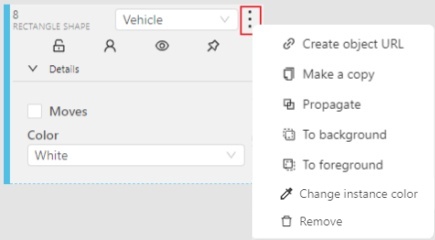

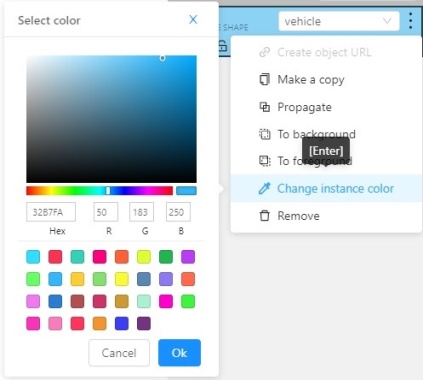

Object action menu

Click the action button on an object to open the action menu.

The action menu contains:

-

Create object URL - puts a link to an object on the clipboard. After you open the link, this object will be filtered.

-

Make a copy - copies an object. The keyboard shortcut is Ctrl + C > Ctrl + V.

-

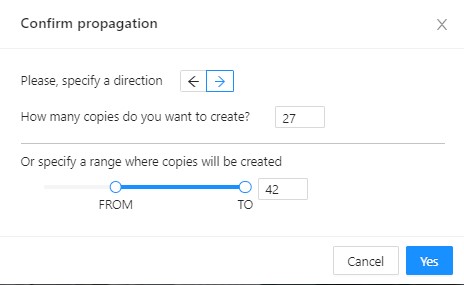

Propagate function copies the form to multiple frames and displays a dialog box where you can specify the number of copies or the frame to which you want to copy the object. The keyboard shortcut is Ctrl + B. On how to propagate only filtered shapes, see Shapes converter

There are two options available:- Propagate forward (

) creates a

copy of the object on

) creates a

copy of the object on Nsubsequent frames at the same position. - Propagate backward (

) creates

a copy of the object on

) creates

a copy of the object on Nprevious frames at the same position.

- Propagate forward (

-

To background - moves the object to the background. The keyboard shortcut - or _

-

To foreground - moves the object to the foreground. The keyboard shortcut + or =

-

To one layer backward - Moves the object one layer backward.

-

To one layer forward - Moves the object one layer forward.

-

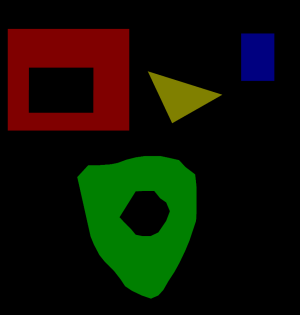

Change instance color- choosing a color using the color picker (available only in instance mode).

-

Remove - removes the object. The keyboard shortcut Del, Shift+Del.
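The two Propagate options reduce to choosing which frame numbers receive a copy. The sketch below is hypothetical (`propagation_frames` is not a CVAT function) and assumes that copies which would fall outside the job's frame range are simply skipped.

```python
def propagation_frames(current: int, count: int, direction: str, first: int, last: int) -> list[int]:
    """Frames that receive a copy of the object when propagating `count` times.

    Assumption: copies that would fall outside the job range [first, last] are skipped.
    """
    if count < 0:
        raise ValueError("count must be non-negative")
    if direction == "forward":
        frames = range(current + 1, current + count + 1)
    elif direction == "backward":
        frames = range(current - 1, current - count - 1, -1)
    else:
        raise ValueError("direction must be 'forward' or 'backward'")
    return [f for f in frames if first <= f <= last]


print(propagation_frames(10, 3, "forward", 0, 100))   # [11, 12, 13]
print(propagation_frames(10, 3, "backward", 0, 100))  # [9, 8, 7]
```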

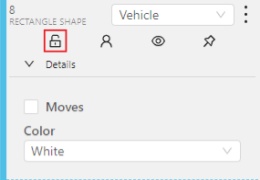

A shape can be locked to prevent it from being modified or moved by accident. Shortcut to lock an object: L.

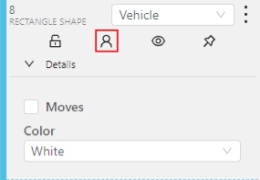

A shape can be marked as Occluded (shortcut: Q). Occluded shapes have dashed boundaries.

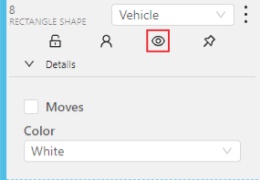

You can change the way an object is displayed on a frame (show or hide).

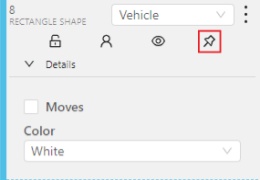

Switch pinned property - when enabled, a shape cannot be moved by dragging.

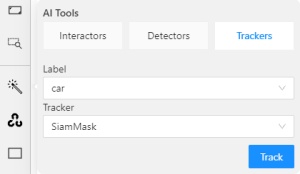

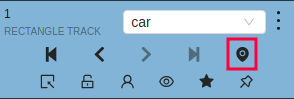

Tracker switcher - enable/disable tracking for the object.


By clicking on the Details button you can collapse or expand the field with all the attributes of the object.

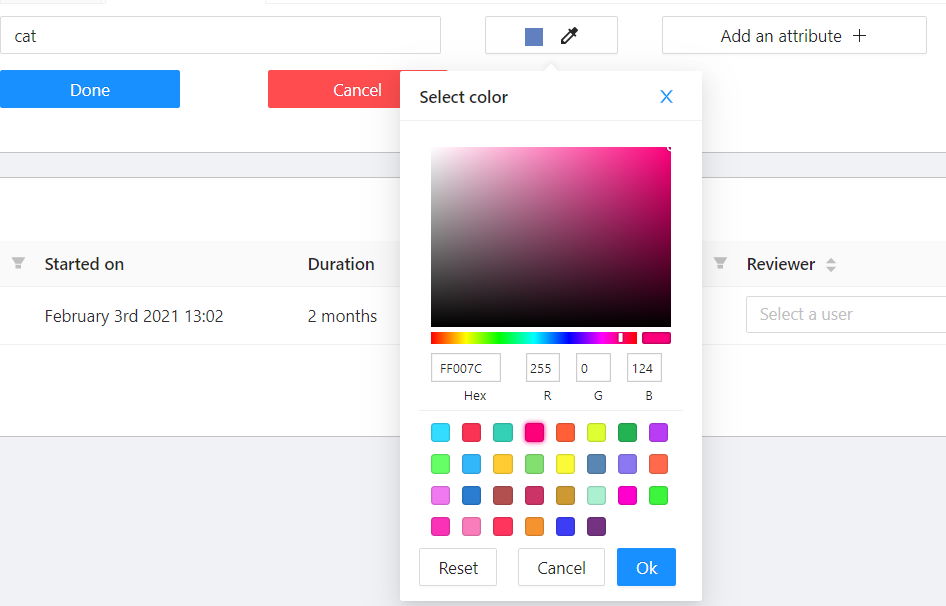

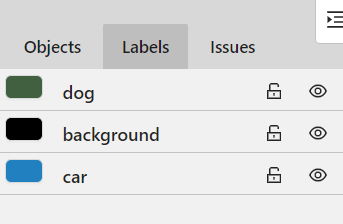

Labels

In this tab, you can lock or hide objects of a certain label. To change the color of a specific label, go to the task page and click the edit button to select a color. This changes the label color for all jobs in the task.

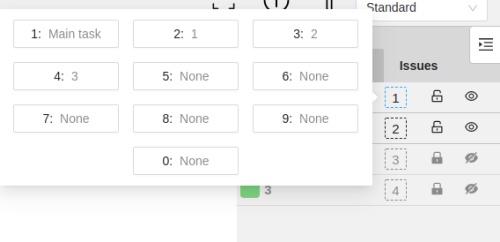

Fast label change

You can change the label of an object using hotkeys. To do so, assign a number (from 0 to 9) to labels. By default, the numbers 1, 2, …, 9, 0 are assigned to the first ten labels. To assign a number, click the button to the right of a label name on the sidebar.

After that, you will be able to assign a corresponding label to an object by hovering your mouse cursor over it and pressing Ctrl + Num(0..9).

If the cursor is not over an object, pressing Ctrl + Num(0..9) sets the chosen label as the default, so the next object you create (press N) is automatically assigned this label.
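The hotkey behavior described above is a lookup table plus one branch on whether an object is under the cursor. This Python sketch models it with hypothetical names (`default_key_map`, `EditorState`, `Shape`); it illustrates the rule, not CVAT's internal code.

```python
from dataclasses import dataclass
from typing import Optional

DIGITS = "1234567890"  # default order: first label gets 1, ..., tenth label gets 0


def default_key_map(labels: list[str]) -> dict[str, str]:
    """Map digit keys to the first ten labels in the default order 1..9, 0."""
    return {digit: label for digit, label in zip(DIGITS, labels)}


@dataclass
class Shape:
    label: str


@dataclass
class EditorState:
    key_map: dict[str, str]
    default_label: str
    hovered: Optional[Shape] = None  # object under the mouse cursor, if any

    def on_ctrl_digit(self, digit: str) -> None:
        label = self.key_map.get(digit)
        if label is None:
            return  # no label is assigned to this digit
        if self.hovered is not None:
            self.hovered.label = label  # relabel the object under the cursor
        else:
            self.default_label = label  # used for the next object created with N
```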

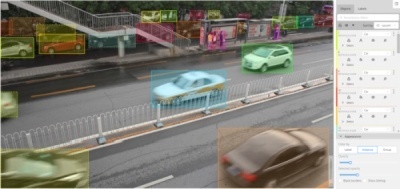

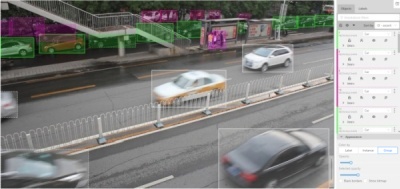

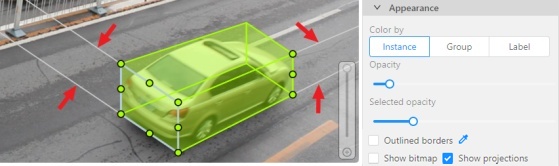

Appearance

Color By options

Change the color scheme of the annotation:

- Instance - every shape has a random color.

- Group - every group of shapes has its own random color; ungrouped shapes are white.

- Label - every label (e.g. car, person) has its own random color.

You can change any randomly assigned color by pointing to the corresponding box on the frame or in the objects sidebar.
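The three Color By modes differ only in which property decides the color. The sketch below illustrates that rule; the shape dictionaries, `color_key`, and `color_for` are hypothetical, and generating colors with `random.randrange` is an assumption about "random color", not CVAT's palette logic.

```python
import random
from typing import Optional

WHITE = "#ffffff"


def color_key(mode: str, shape: dict) -> Optional[tuple]:
    """Return the property that decides the color in each Color By mode."""
    if mode == "instance":
        return ("instance", shape["id"])
    if mode == "group":
        group = shape.get("group")
        return None if group is None else ("group", group)  # ungrouped: no key
    if mode == "label":
        return ("label", shape["label"])
    raise ValueError(f"unknown mode: {mode}")


def color_for(mode: str, shape: dict, palette: dict) -> str:
    """Pick a random color per key and reuse it for every shape with the same key."""
    key = color_key(mode, shape)
    if key is None:
        return WHITE  # ungrouped shapes are white in Group mode
    if key not in palette:
        palette[key] = "#{:06x}".format(random.randrange(0x1000000))
    return palette[key]
```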

Fill Opacity slider

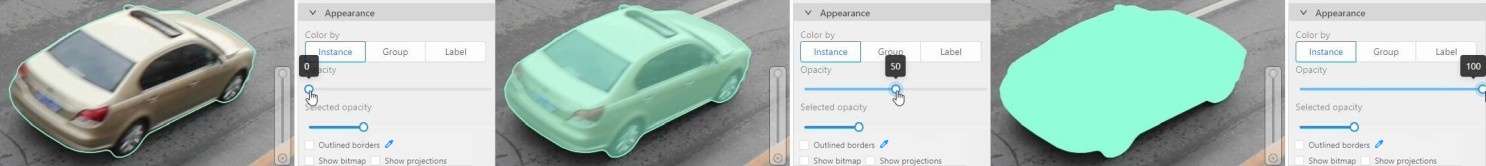

Change the opacity of every shape in the annotation.

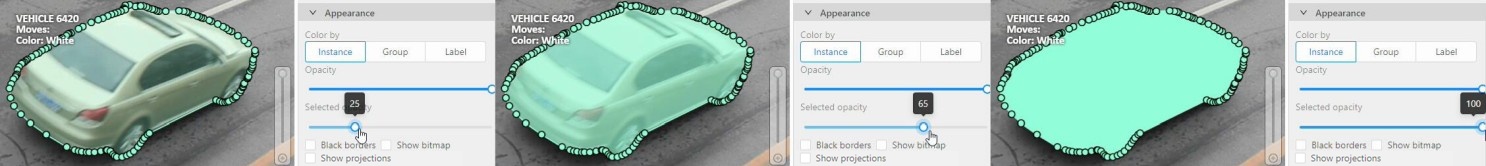

Selected Fill Opacity slider

Change the opacity of the selected object’s fill. It is possible to change the opacity while drawing an object in the case of rectangles, polygons, and cuboids.

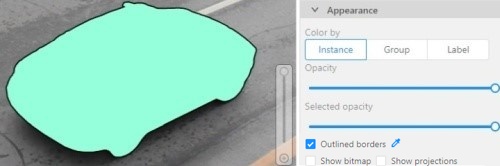

Outlined borders checkbox

You can set a custom border color for shapes by clicking the Eyedropper icon.

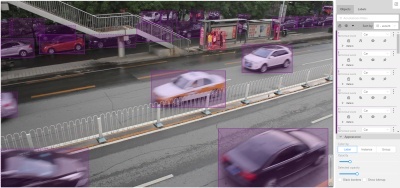

Show bitmap checkbox

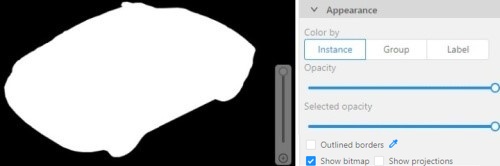

If enabled, all shapes are displayed in white and the background is black.

Show projections checkbox

Enables/disables the display of auxiliary perspective lines. Only relevant for cuboids.

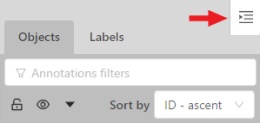

Hide objects sidebar

Hide - the button hides the objects sidebar.

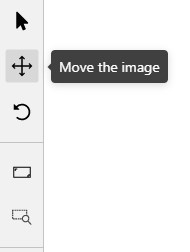

1.4 - Controls sidebar

Navigation

Navigation block - contains tools for moving and rotating images.

| Tool | Description |
|---|---|
| Cursor (Esc) | A basic annotation editing tool. |
| Move the image | A tool for moving around the image without the possibility of editing. |
| Rotate | Two buttons to rotate the current frame clockwise (Ctrl+R) and anticlockwise (Ctrl+Shift+R). You can enable Rotate all images in the settings to rotate all the images in the job. |

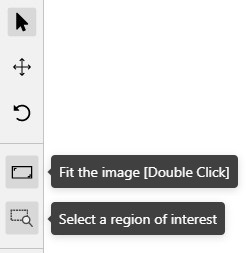

Zoom

Zoom block - contains tools for image zoom.

| Tool | Description |
|---|---|
| Fit image | Fits the image into the workspace. Shortcut: double-click the image. |
| Select a region of interest | Zooms in on a selected region. You can use this tool to quickly zoom in on a specific part of the frame. |

Shapes

Shapes block - contains all the tools for creating shapes.

| Icon | Description | Links to section |

|---|---|---|

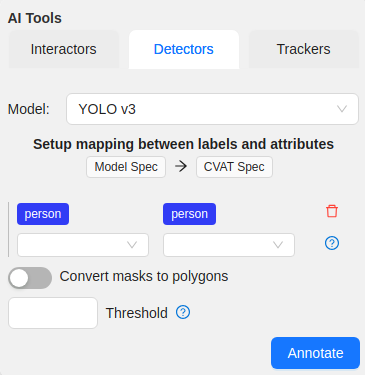

|

AI Tools |

AI Tools |

|

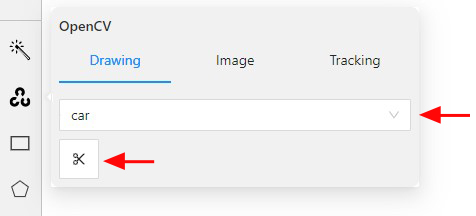

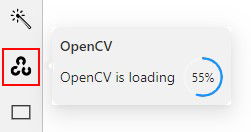

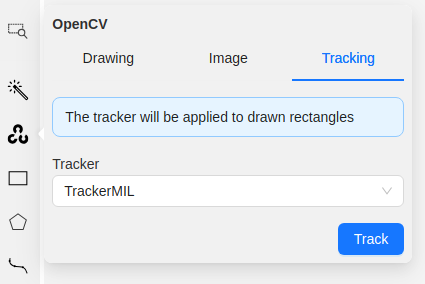

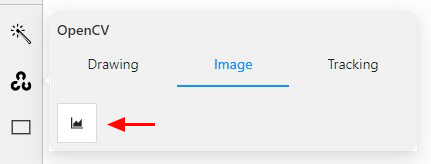

OpenCV |

OpenCV |

|

Rectangle |

Shape mode; Track mode; Drawing by 4 points |

|

Polygon |

Annotation with polygons; Track mode with polygons |

|

Polyline |

Annotation with polylines |

|

Points |

Annotation with points |

|

Ellipses |

Annotation with ellipses |

|

Cuboid |

Annotation with cuboids |

Brushing tools |

Annotation with brushing | |

|

Tag |

Annotation with tags |

|

Open an issue |

Review (available only in review mode) |

Edit

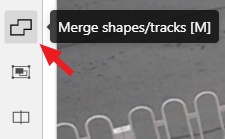

Edit block - contains tools for editing tracks and shapes.

| Icon | Description | Links to section |

|---|---|---|

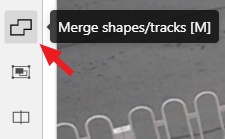

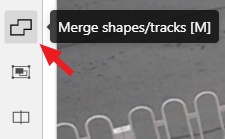

|

Merge Shapes(M) - starts/stops the merging shapes mode. |

Track mode (basics) |

|

Group Shapes (G) - starts/stops the grouping shapes mode. |

Shape grouping |

|

Split - splits a track. |

Track mode (advanced) |

| Joins multiple labels into one | Joining mask tool | |

| Slices one label into several. | Slice mask/polygon |

Move image

Switching between user interface modes.

- Use the arrows below to move to the next/previous frame. Use the scroll bar slider to scroll through frames. Almost every button has a shortcut. To get a hint about a shortcut, move your mouse pointer over a UI element.

- To navigate the image, use the button on the controls sidebar. You can also move the image by holding the left mouse button inside an area without annotated objects. If the Mouse Wheel is pressed, all annotated objects are ignored. Otherwise, a highlighted bounding box is moved instead of the image itself.

- You can use the button on the controls sidebar to zoom in on a region of interest. Use the Fit the image button to fit the image in the workspace. You can also use the mouse wheel to scale the image (the image is zoomed relative to your current cursor position).

1.5 - Settings

To open the Settings, open the user menu in the header and select Settings.

Or you can use the default F2 shortcut.

The Settings section has three tabs: Player, Workspace, and Shortcuts.

Player

In the Player tab, you can:

- Control the step of the C and V shortcuts.

- Control the speed of the Space/Play button.

- Select the canvas background color. You can choose a background color or enter it manually (in RGB or HEX format).

- Reset zoom - show every image in full size or zoomed out like the previous one (enabled by default for interpolation mode and disabled for annotation mode).

- Rotate all images checkbox - switch the rotation of all frames or an individual frame.

- Smooth image checkbox - smooth the image when zooming in (smoothed rather than pixelized).

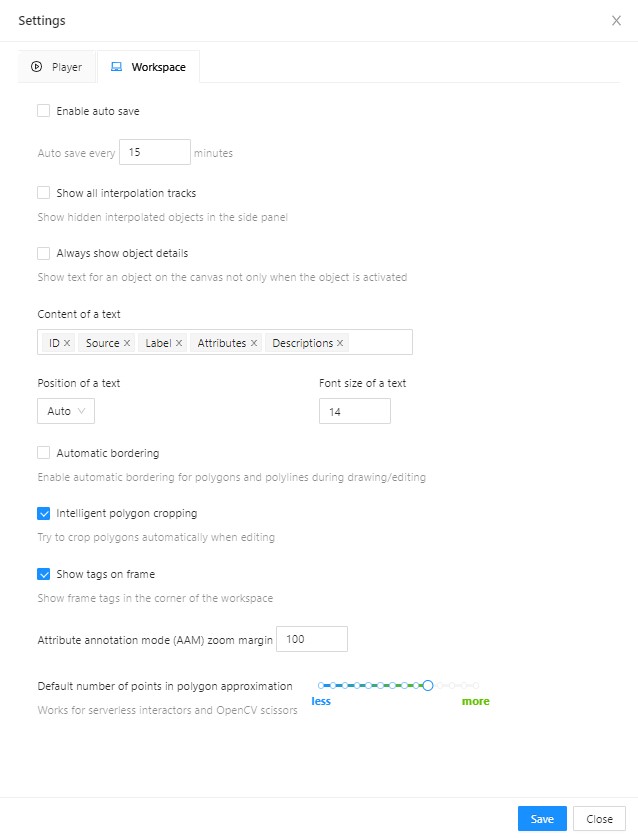

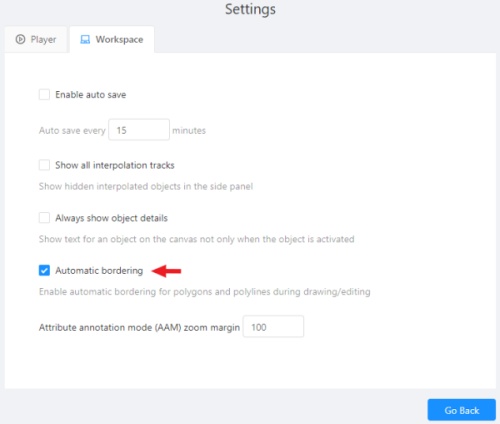

Workspace

In the Workspace tab, you can:

-

Enable auto savecheckbox — turned off by default. -

Auto save interval (min)input box — 15 minutes by default. -

Show all interpolation trackscheckbox — shows hidden objects on the side panel for every interpolated object (turned off by default). -

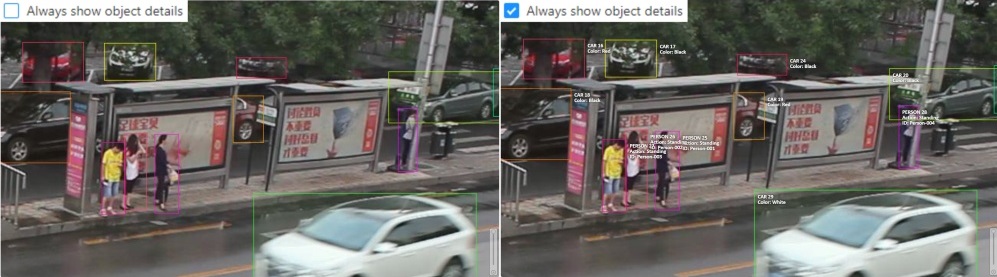

Always show object details- show text for an object on the canvas not only when the object is activated:

-

Content of a text- setup of the composition of the object details:ID- object identifier.Attributes- attributes of the object.Label- object label.Source- source of creating of objectsMANUAL,AUTOorSEMI-AUTO.Descriptions- description of attributes.Dimensions- width, height and rotation for rectangles and ellipses.

-

Position of a text- text positioning mode selection:Auto- the object details will be automatically placed where free space is.Center- the object details will be embedded to a corresponding object if possible.

-

Font size of a text- specifies the text size of the object details. -

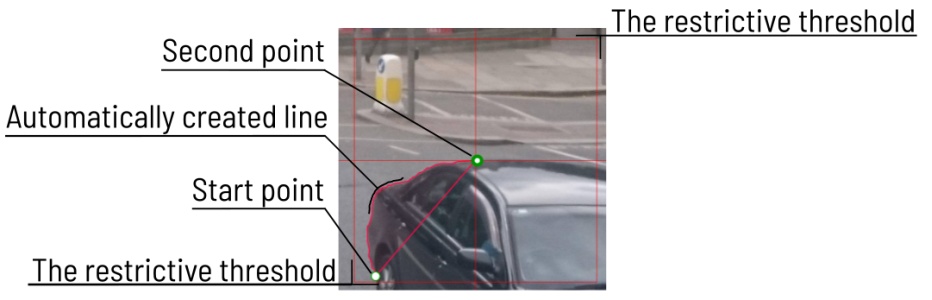

Automatic bordering- enable automatic bordering for polygons and polylines during drawing/editing. For more information To find out more, go to the section annotation with polygons. -

Intelligent polygon cropping- activates intelligent cropping when editing the polygon (read more in the section edit polygon -

Show tags on frame- shows/hides frame tags on the current frame -

Attribute annotation mode (AAM) zoom margininput box — defines margins (in px) for shape in the attribute annotation mode. -

Control points size— defines the size of any interactable points in the tool (polygon’s vertices, rectangle dragging points, etc.) -

Default number of points in polygon approximationWith this setting, you can choose the default number of points in polygon. Works for serverless interactors and OpenCV scissors. -

Select Save to save settings (settings will be saved on the server and will not change after the page is refreshed). Select Cancel or press

F2to return to the annotation.

Shortcuts

In the Shortcuts tab, you can set the keyboard combinations. Learn more in Shortcuts.

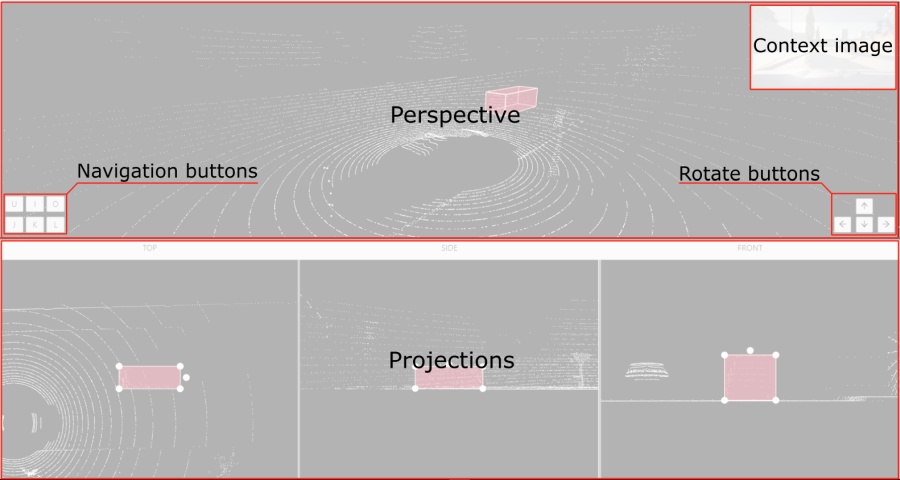

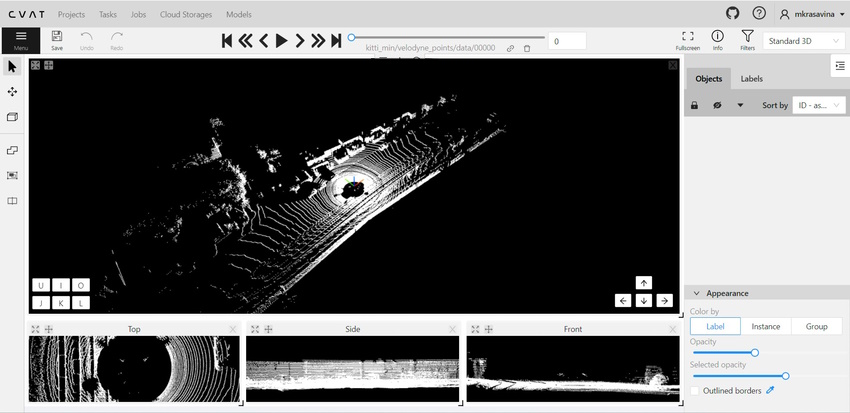

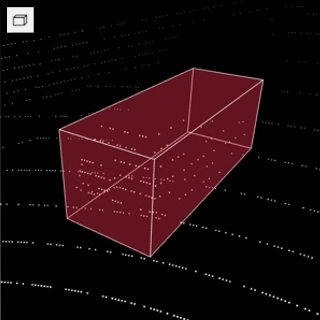

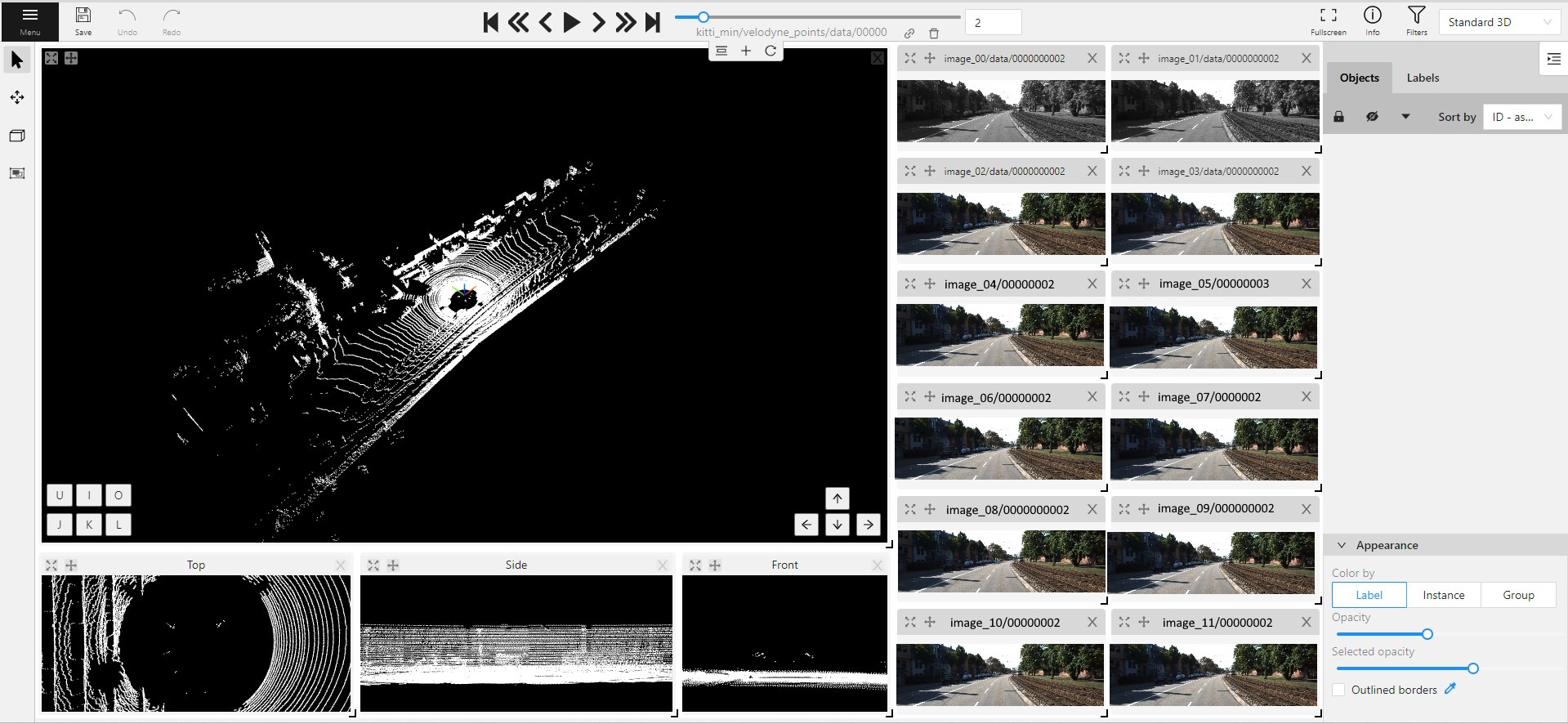

1.6 - 3D task workspace

If the related_images folder contains any images, a context image will be available in the perspective window.

The context image can be compared with the 3D data and helps identify the labels of marked objects.

Perspective - the main window for working with objects in a 3D task.

Projections - projections are tied to an object so that a cuboid is in the center and looks like a rectangle. Projections show only the selected object.

- Top - a projection of the view from above.

- Side - a projection of the left side of the object.

- Front - a frontal projection of the object.

2 - Manual Annotation

2.1 - Annotation modes

CVAT provides several editor-level modes that change how the annotation workspace behaves. These modes control what actions are available to the user, which tools can be used, and how objects can be created or modified.

Use this section to understand when to switch modes and how each mode supports a specific step of the annotation workflow.

2.1.1 - Single shape

The CVAT Single Shape annotation mode accelerates the annotation process and enhances workflow efficiency for specific scenarios.

In this mode, you can label objects with a preselected annotation shape and label when an image contains only a single object. Because you don't need to select tools from the sidebar and can move between images quickly without relying on hotkeys, annotation becomes significantly faster.

See:

- Single Shape mode annotation interface

- Annotating in Single Shape mode

- Query parameters

- Video tutorial

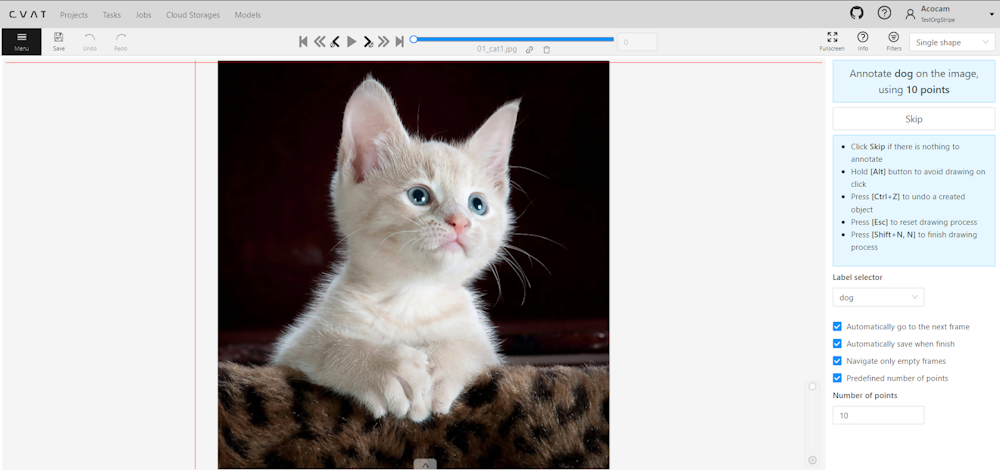

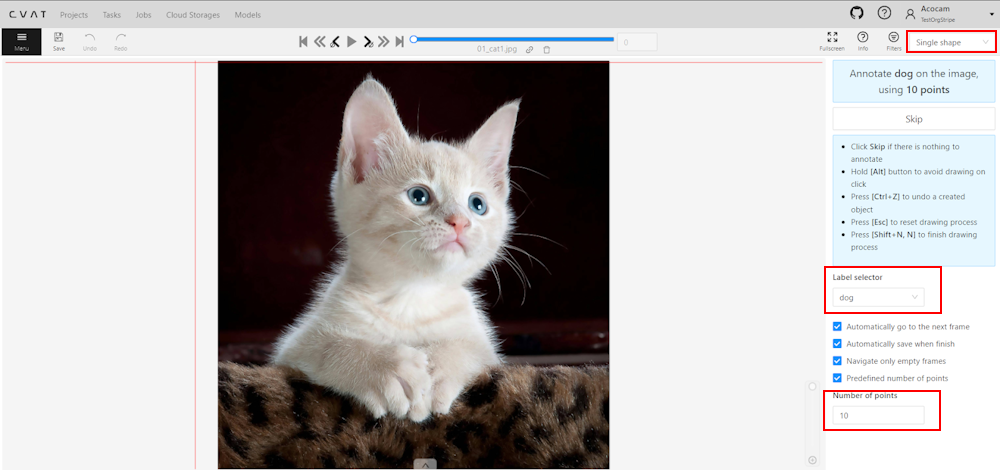

Single Shape mode annotation interface

The set of controls in the Single Shape mode interface may vary depending on the settings.

The image below displays the complete interface with all available fields; as mentioned above, certain fields may be absent depending on the scenario.

For instance, when annotating with rectangles, the Number of points field will not appear, and if annotating a single class, the Labels selector will be omitted.

To access Single Shape mode, open the job, navigate to the top right corner, and from the drop-down menu, select Single Shape.

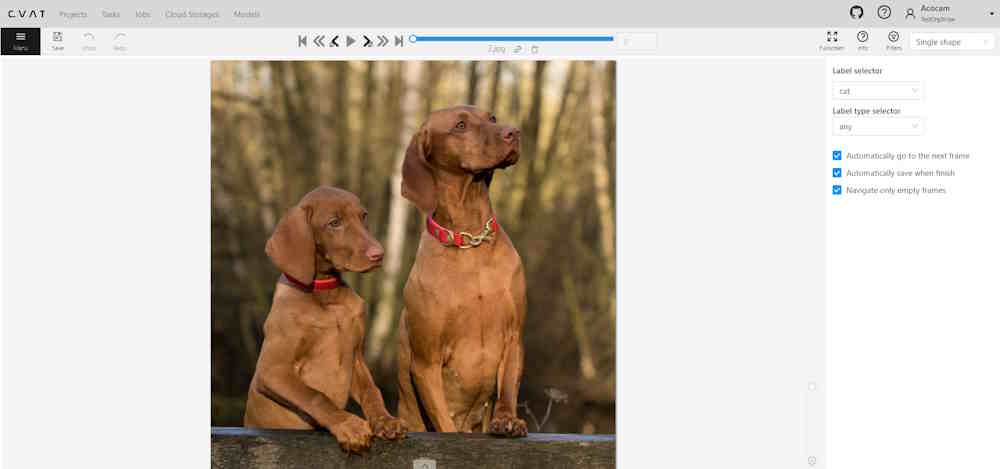

The interface will be different if the shape type was set to Any in the label Constructor:

The Single Shape annotation mode has the following fields:

| Feature | Explanation |
|---|---|
| Prompt for Shape and Label | Displays the selected shape and label for the annotation task, for example: “Annotate cat on the image using rectangle”. |
| Skip Button | Enables moving to the next frame without annotating the current one, particularly useful when the frame does not have anything to be annotated. |
| List of Hints | Offers guidance on using the interface effectively, including: - Click Skip for frames without required annotations. - Hold the Alt button to avoid unintentional drawing (e.g. when you only want to move the image). - Use the Ctrl+Z combination to undo the last action if needed. - Use the Esc button to completely reset the current drawing progress. |
| Label selector | Allows for the selection of different labels (cat or dog in our example) for annotation within the interface. |
| Label type selector | A drop-down list to select the type of the label (rectangle, ellipse, etc.). Only visible when the type of the shape is Any. |
| Options to Enable or Disable | Provides configurable options to streamline the annotation process, such as: - Automatically go to the next frame. - Automatically save when finish. - Navigate only empty frames. - Predefined number of points - Specific to polyshape annotations, enabling this option auto-completes a shape once a predefined number of points is reached. Otherwise, pressing N is required to finalize the shape. |
| Number of Points | Applicable for polyshape annotations, indicating the number of points to use for image annotation. |
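The Predefined number of points option changes when a polyshape is finalized. The class below is a hypothetical model of that rule, not CVAT code: with a predefined count, the shape auto-completes on the last click; without one, it stays open until N is pressed.

```python
from typing import Optional


class PolyshapeDrawing:
    """Hypothetical model of drawing a polyshape in Single Shape mode."""

    def __init__(self, points_count: Optional[int] = None) -> None:
        # points_count is set only when "Predefined number of points" is enabled
        self.points_count = points_count
        self.points: list[tuple[float, float]] = []
        self.finished = False

    def click(self, x: float, y: float) -> None:
        if self.finished:
            raise RuntimeError("shape is already finished")
        self.points.append((x, y))
        # Auto-complete once the predefined number of points is reached
        if self.points_count is not None and len(self.points) == self.points_count:
            self.finished = True

    def press_n(self) -> None:
        # Without a predefined count, pressing N finalizes the shape
        self.finished = True
```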

Annotating in Single Shape mode

To annotate in Single Shape mode, follow these steps:

- Open the job and switch to Single Shape mode.

- Annotate the image based on the selected shape. For more information on shapes, see Annotation Tools.

- (Optional) If the image does not contain any objects to annotate, click Skip at the top of the right panel.

- Submit your work.

Query parameters

You can also append the following query parameters to the job link to initialize the annotation process and automate the workflow:

| Query Parameter | Possible Values | Explanation |
|---|---|---|
| defaultWorkspace | Workspace identifier (e.g., single_shape, tags, review, attributes) | Specifies the workspace to be used initially, streamlining the setup for different annotation tasks. |
| defaultLabel | A string representation of a label (label name) | Sets a default label for the annotation session, facilitating consistency across similar tasks. |
| defaultPointsCount | Integer - number of points for polyshapes | Defines a preset number of points for polyshape annotations, optimizing the annotation process. |

You can combine these parameters to customize the workspace for an annotator, for example:

/tasks/<tid>/jobs/<jid>?defaultWorkspace=single_shape&defaultLabel=dog&defaultPointsCount=10

This link opens the job in Single Shape mode, with dog as the default label and the number of points set to 10.
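Links like the one above can also be built programmatically, which is handy when generating links for many jobs. This is a minimal Python sketch: `job_link` and the host `https://cvat.example.com` are hypothetical, and only the three parameter names come from the table above. Encoding spaces in label names as `%20` is an assumption about what the job page accepts.

```python
from typing import Optional
from urllib.parse import quote, urlencode


def job_link(base_url: str, task_id: int, job_id: int,
             workspace: Optional[str] = None,
             label: Optional[str] = None,
             points_count: Optional[int] = None) -> str:
    """Build a job URL with the optional Single Shape query parameters."""
    params: dict[str, object] = {}
    if workspace is not None:
        params["defaultWorkspace"] = workspace
    if label is not None:
        params["defaultLabel"] = label
    if points_count is not None:
        params["defaultPointsCount"] = points_count
    url = f"{base_url.rstrip('/')}/tasks/{task_id}/jobs/{job_id}"
    if params:
        # quote (not the default quote_plus) encodes spaces in label names as %20
        url += "?" + urlencode(params, quote_via=quote)
    return url


print(job_link("https://cvat.example.com", 1, 2, "single_shape", "dog", 10))
# https://cvat.example.com/tasks/1/jobs/2?defaultWorkspace=single_shape&defaultLabel=dog&defaultPointsCount=10
```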

Video tutorial

For a better understanding of how Single Shape mode operates, we recommend watching the following tutorial.

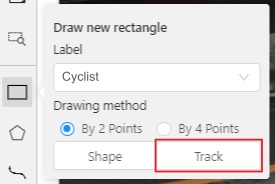

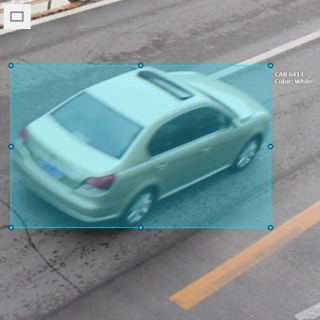

2.1.2 - Track mode

Usage examples:

- Create new annotations for a sequence of frames.

- Add/modify/delete objects for existing annotations.

- Edit tracks, merge several rectangles into one track.

-

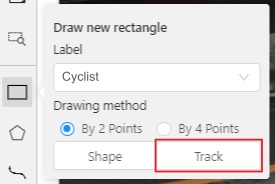

Like in the

Shape mode, you need to select aRectangleon the sidebar, in the appearing form, select the desiredLabeland theDrawing method.

-

Creating a track for an object (look at the selected car as an example):

-

Create a

RectangleinTrack modeby selectingTrack.

-

In

Track mode, the rectangle will be automatically interpolated on the next frames. -

The cyclist starts moving on frame #2270. Let’s mark the frame as a key frame. You can press

Kfor that or select thestarbutton (see the screenshot below).

-

If the object starts to change its position, you need to modify the rectangle where it happens. It isn’t necessary to change the rectangle on each frame, simply update several keyframes and the frames between them will be interpolated automatically.

-

Let’s jump 30 frames forward and adjust the boundaries of the object. See an example below:

-

After that the rectangle of the object will be changed automatically on frames 2270 to 2300:

-

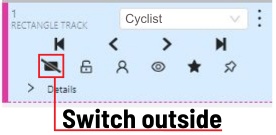

-

When the annotated object disappears or becomes too small, you need to finish the track. You have to choose

Outside Property, shortcutO.

-

If the object isn’t visible on a couple of frames and then appears again, you can use the

Mergefeature to merge several individual tracks into one.

-

Create tracks for moments when the cyclist is visible:

-

Select

Mergebutton or press keyMand select on any rectangle of the first track and on any rectangle of the second track and so on:

-

Select

Mergebutton or pressMto apply changes.

-

The final annotated sequence of frames in

Interpolationmode can look like the clip below:

-

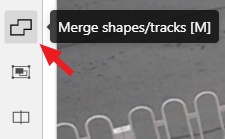
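The keyframe workflow above relies on interpolation between keyframes. The sketch below assumes plain linear interpolation of each rectangle coordinate between the two surrounding keyframes, using the frame numbers from the cyclist example with made-up coordinates. `interpolate_box` is a hypothetical helper; CVAT's own interpolation may differ, especially for other shape types.

```python
from bisect import bisect_right

# A rectangle as (x_top_left, y_top_left, x_bottom_right, y_bottom_right)
Box = tuple[float, float, float, float]


def interpolate_box(keyframes: dict[int, Box], frame: int) -> Box:
    """Linearly interpolate a rectangle between the keyframes surrounding `frame`."""
    frames = sorted(keyframes)
    if frame < frames[0]:
        raise ValueError("frame is before the first keyframe of the track")
    if frame >= frames[-1]:
        # Assumption: after the last keyframe the box stays where it was last placed
        return keyframes[frames[-1]]
    i = bisect_right(frames, frame)
    f0, f1 = frames[i - 1], frames[i]
    t = (frame - f0) / (f1 - f0)  # 0.0 at f0, approaching 1.0 at f1
    b0, b1 = keyframes[f0], keyframes[f1]
    return tuple(a + (b - a) * t for a, b in zip(b0, b1))


track = {2270: (100, 50, 200, 150), 2300: (160, 50, 260, 150)}
print(interpolate_box(track, 2285))  # (130.0, 50.0, 230.0, 150.0)
```

Only two keyframes are stored here, yet every frame from 2270 to 2300 gets a box, which is why updating a few keyframes is enough.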

Shapes that were created in track mode have extra navigation buttons:

- These buttons help to jump to the previous/next keyframe.

- This button helps to jump to the initial frame and to the last keyframe.

You can use the Split function to split one track into two tracks:

2.1.3 - Attribute annotation mode

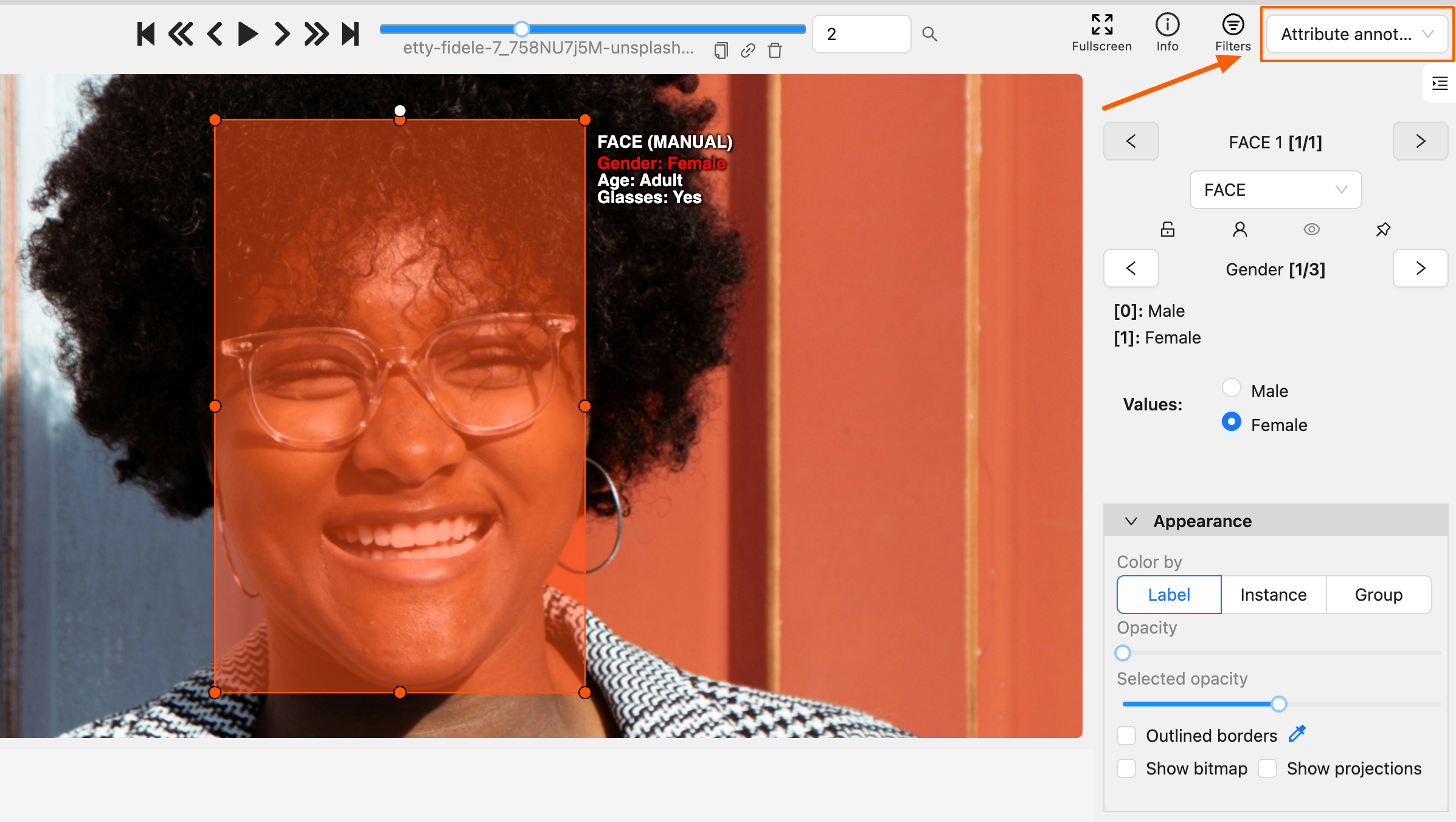

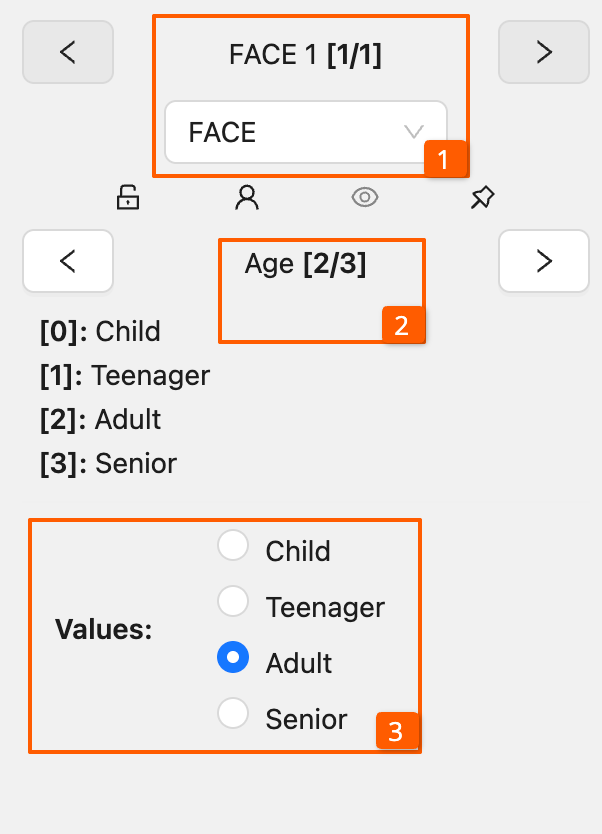


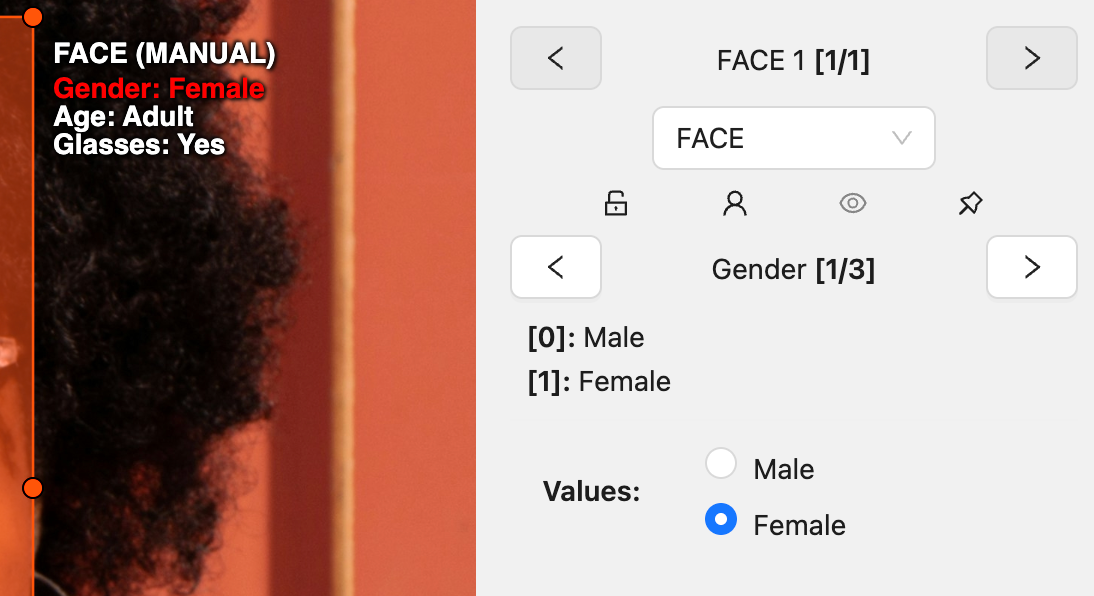

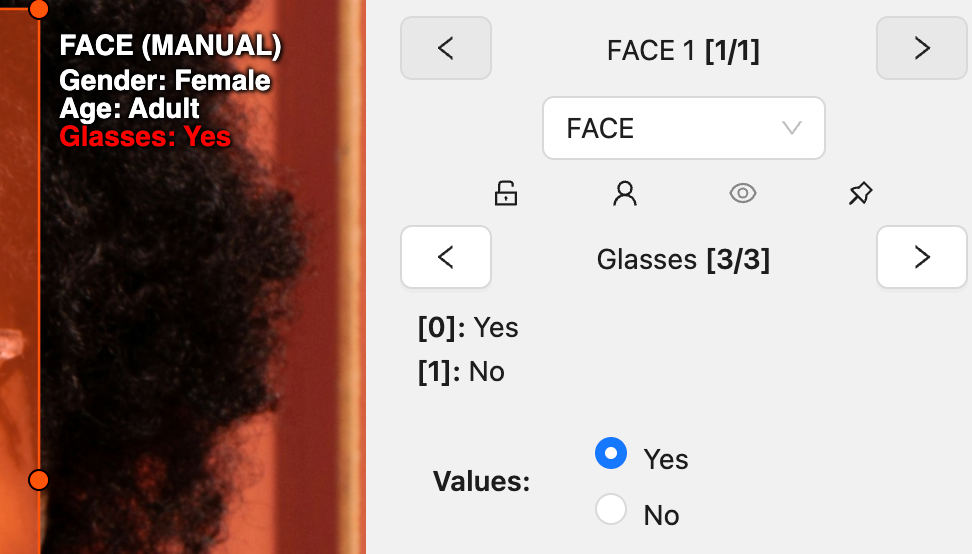

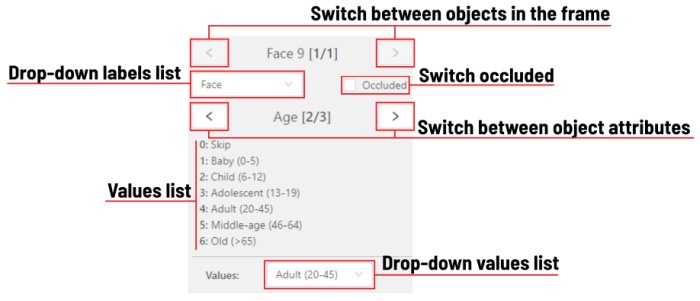

In this mode, you can edit attributes with fast navigation between objects and frames using a keyboard. Open the drop-down list in the top panel and select Attribute annotation.

- In this mode, the objects panel changes to a special panel.

- The active attribute is highlighted in red. In this case, it is gender. Look at the bottom side panel to see all possible shortcuts for changing the attribute. Press 2 on your keyboard to assign a value (female) to the attribute, or select it from the drop-down list.

- Press Up Arrow/Down Arrow on your keyboard or select the buttons in the UI to go to the previous/next attribute. In this case, after pressing Down Arrow you will be able to edit the Age attribute.

- Use the Left Arrow/Right Arrow keys to move to the previous/next image with annotation.

To display all the hotkeys available in the attribute annotation mode, press F1.

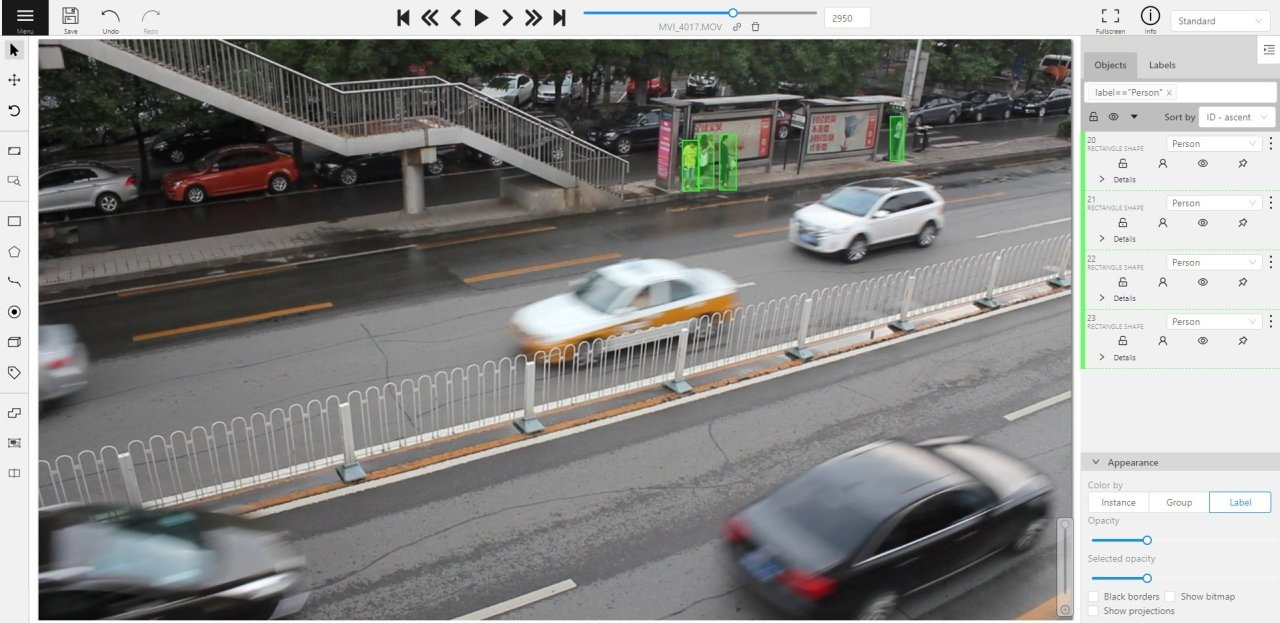

In this mode, you can handle many objects on the same frame.

It is more convenient to annotate objects of the same type. To do so, apply the appropriate filter. For example, the following filter hides all objects except persons: label=="Person".

To navigate between objects (persons in this case), use the buttons on the special panel or the following shortcuts:

- Tab - go to the next object.

- Shift+Tab - go to the previous object.
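Filtering and Tab navigation work together: the filter narrows the frame's objects, and Tab/Shift+Tab step through what remains. The sketch below models this with hypothetical names (`AnnotatedObject`, `filter_by_label`, `next_object_index`). Wrapping around from the last object to the first is an assumption, not documented CVAT behavior.

```python
from dataclasses import dataclass


@dataclass
class AnnotatedObject:
    object_id: int
    label: str


def filter_by_label(objects: list[AnnotatedObject], label: str) -> list[AnnotatedObject]:
    """Keep only objects whose label matches, like the filter label=="Person"."""
    return [obj for obj in objects if obj.label == label]


def next_object_index(current: int, count: int, backward: bool = False) -> int:
    """Index reached with Tab (forward) or Shift+Tab (backward), wrapping around."""
    return (current + (-1 if backward else 1)) % count


frame_objects = [AnnotatedObject(1, "Person"), AnnotatedObject(2, "Car"), AnnotatedObject(3, "Person")]
persons = filter_by_label(frame_objects, "Person")
print([obj.object_id for obj in persons])  # [1, 3]
print(next_object_index(1, len(persons)))  # 0 (wraps to the first person)
```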

To change the zoom level, open the settings (press F2), go to the Workspace tab, and set the Attribute annotation mode (AAM) zoom margin value (in px).

2.1.4 - 3D object annotation

Use the 3D Annotation tool for labeling 3D objects and scenes, such as vehicles, buildings, landscapes, and others.


Navigation

The 3D annotation canvas looks like the following:

Note

If you added contextual images to the dataset, the canvas will include them. For more information, consult Contextual images. For information on the available tools, consult Controls sidebar.

You can navigate using the mouse or the navigation keys.

You can also use keyboard shortcuts to navigate:

| Action | Keys |
|---|---|
| Camera rotation | Shift + Arrow (Up, Down, Left, Right) |
| Left/Right | Alt+J/Alt+L |
| Up/down | Alt+U/Alt+O |
| Zoom in/out | Alt+K/Alt+I |

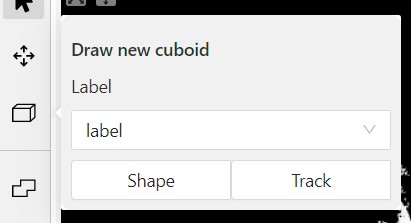

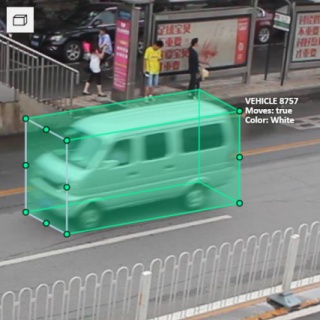

Annotation with cuboids

There are two options available for 3D annotation:

- Shape: for tasks like object detection.

- Track: uses interpolation to predict the position of objects in subsequent frames. A unique ID will be assigned to each object and maintained throughout the sequence of images.

Annotation with shapes

To add a 3D shape:

- On the objects pane, select Draw new cuboid > select the label from the drop-down list > Shape.

- The cursor will be followed by a cuboid. Place the cuboid on the 3D scene.

- Use projections to adjust the cuboid. Click and hold the left mouse button to edit the label shape on the projection.

- (Optional) Move one of the four points to change the size of the cuboid.

- (Optional) To rotate the cuboid, select the middle point and then drag the cuboid up/down or to left/right.

Tracking with cuboids

To track with cuboids:

- On the objects pane, select Draw new cuboid > select the label from the drop-down list > Track.

- The cursor will be followed by a cuboid. Place the cuboid on the 3D scene.

- Use projections to adjust the cuboid. Click and hold the left mouse button to edit the label shape on the projection.

- (Optional) Move one of the four points to change the size of the cuboid.

- (Optional) To rotate the cuboid, click the middle point and then drag the cuboid up/down or left/right.

- Move several frames forward. You will see the cuboid you added in frame 1. Adjust it if needed.

- Repeat until the last frame in which the object you are tracking is present.

For more information about tracking, consult Track mode.

Like 2D-task objects, 3D-task objects let you change their appearance, attributes, and properties, and they have an action menu. Read more in the Objects sidebar section.

Moving an object

If you hover the cursor over a cuboid and press Shift+N, the cuboid will be cut, so you can paste it in another place (double-click to paste the cuboid).

Copying

As in 2D tasks, you can copy and paste objects with Ctrl+C and Ctrl+V, but unlike in 2D tasks, you have to place the copied object in the 3D space (double-click to paste).

Image of the projection window

You can copy or save the projection-window image by right-clicking it and selecting Save image as or Copy image.

Cuboid orientation

This feature enables or disables the display of cuboid orientation arrows in the 3D space. It is controlled by a checkbox in the appearance block. When enabled, arrows representing the cuboid’s axis orientation (X: red, Y: green, Z: blue) are displayed, providing a visual reference for the cuboid’s alignment within the 3D environment and making its spatial orientation easier to understand.

Cuboid size input

The size input feature allows you to manually specify the dimensions of a cuboid in the 3D space. It is accessible through the details panel of the objects sidebar, where you can enter precise values for the width, height, and length of the cuboid (X: width, Y: height, Z: length). The cuboid’s size is then adjusted according to its orientation, giving you greater control and accuracy when annotating objects in 3D tasks.
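The sketch below illustrates what "adjusted according to its orientation" means: the entered sizes are measured along the cuboid’s own axes, so the same width, height, and length occupy different world-space extents once the cuboid is rotated. It is a minimal illustration, not CVAT’s implementation: the function name cuboid_corners is hypothetical, and for brevity the cuboid is rotated only around its Z axis, while real 3D cuboids can also be rotated around X and Y.

```python
import math


def cuboid_corners(center, size, angle_z):
    """Return the 8 corners of a cuboid (hypothetical helper).

    center:  (cx, cy, cz) of the cuboid.
    size:    (sx, sy, sz) along the cuboid's own X, Y, Z axes
             (width, height, length, as entered in the details panel).
    angle_z: rotation in radians around the Z axis; the only rotation
             handled in this sketch.
    """
    cx, cy, cz = center
    hx, hy, hz = (d / 2 for d in size)
    cos_a, sin_a = math.cos(angle_z), math.sin(angle_z)
    corners = []
    for dx in (-hx, hx):
        for dy in (-hy, hy):
            for dz in (-hz, hz):
                # Rotate the local offset around Z, then move it to the center.
                corners.append((cx + dx * cos_a - dy * sin_a,
                                cy + dx * sin_a + dy * cos_a,
                                cz + dz))
    return corners


# Same size, two orientations: the X/Y extents swap after a 90-degree turn.
upright = cuboid_corners((0, 0, 0), (2, 4, 6), 0.0)
turned = cuboid_corners((0, 0, 0), (2, 4, 6), math.pi / 2)
```

Applying the size in the local frame and rotating afterwards is what keeps the entered width/height/length meaningful regardless of how the cuboid is oriented in the scene.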

2.1.5 - Annotation with tags

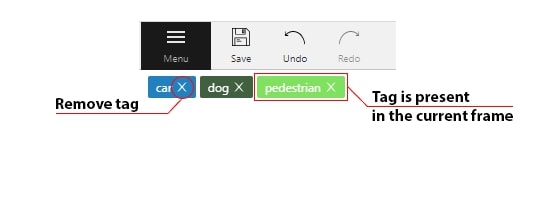

Tags are used to annotate whole frames; they have no shape in the workspace.

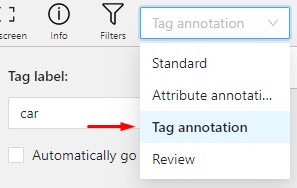

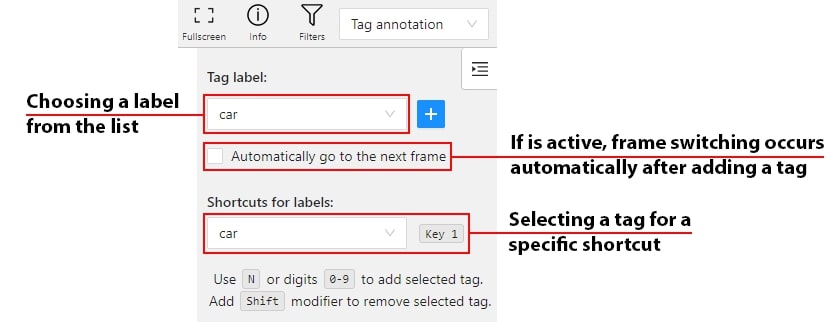

Before you start, open the drop-down list in the top panel and select Tag annotation.

The objects sidebar will be replaced with a special panel for working with tags.

Here you can select a label for a tag and add it by clicking on the Plus button.

You can also customize hotkeys for each label.

If you need only one tag per frame, enable the Automatically go to the next frame checkbox. After you add a tag, the next frame will open automatically.

Tags will be shown in the top left corner of the canvas. You can show/hide them in the settings.

2.2 - Annotation with shapes

This section describes all shapes you can create in CVAT. Each shape type supports its own tools, editing options, and use cases.

Review these pages to choose the correct shape tool for your annotation task.

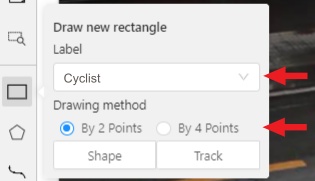

2.2.1 - Annotation with rectangles

To learn more about annotation using a rectangle, see the sections:

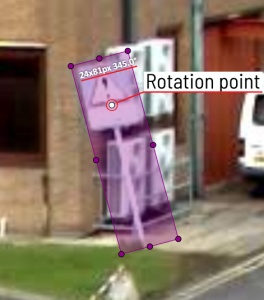

Rotation rectangle

To rotate the rectangle, pull on the rotation point. Rotation is done around the center of the rectangle.

To rotate at a fixed angle (a multiple of 15 degrees), hold Shift. The current rotation angle is displayed while you rotate.
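The geometry behind rotation can be sketched in a few lines: the Shift behavior rounds the angle to the nearest multiple of 15 degrees, and each corner is rotated around the rectangle’s center. The function names snap_angle and rotate_rect_corners are hypothetical, and this is an illustration under those assumptions rather than CVAT’s own code.

```python
import math


def snap_angle(angle_deg, step=15.0):
    """Round an angle to the nearest multiple of `step` degrees, in [0, 360).

    Note: Python's round() rounds exact halves to the even integer.
    """
    return (round(angle_deg / step) * step) % 360


def rotate_rect_corners(xtl, ytl, xbr, ybr, angle_deg):
    """Rotate the four corners of an axis-aligned rectangle around its center.

    In image coordinates (y axis pointing down), a positive angle
    appears clockwise on screen.
    """
    cx, cy = (xtl + xbr) / 2, (ytl + ybr) / 2
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    corners = [(xtl, ytl), (xbr, ytl), (xbr, ybr), (xtl, ybr)]
    return [(cx + (x - cx) * cos_a - (y - cy) * sin_a,
             cy + (x - cx) * sin_a + (y - cy) * cos_a)
            for x, y in corners]


# Dragging to 37 degrees with Shift held would give 30 degrees.
corners = rotate_rect_corners(0, 0, 2, 2, snap_angle(37))
```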

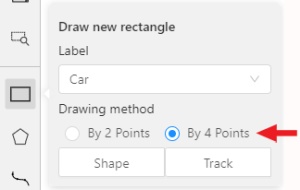

Annotation with rectangle by 4 points

This is an efficient method of bounding box annotation, proposed here. Before starting, make sure that the drawing method by 4 points is selected.

Press Shape or Track to enter drawing mode. Click the four extreme points of the object: the topmost, bottommost, leftmost, and rightmost physical points.

Drawing is completed automatically right after you click the fourth point.

Press Esc to cancel editing.
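The four extreme clicks fully determine the box: its edges pass through the smallest and largest clicked x and y values, so the order of the clicks does not matter. A minimal sketch, with a hypothetical function name:

```python
def box_from_extreme_points(points):
    """Build (xtl, ytl, xbr, ybr) from the top-, bottom-, left-
    and right-most points of an object, clicked in any order."""
    if len(points) != 4:
        raise ValueError("expected exactly four extreme points")
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


# top, bottom, left, right clicks on an object
box = box_from_extreme_points([(50, 10), (55, 90), (20, 40), (80, 60)])
```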

2.2.2 - Shape mode

Usage examples:

- Create new annotations for a set of images.

- Add/modify/delete objects for existing annotations.


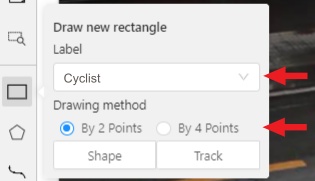

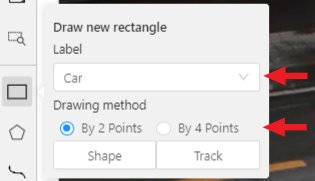

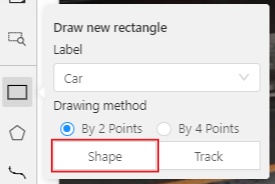

- You need to select Rectangle on the controls sidebar.

  Before you start, select the correct Label (specified when the task was created) and Drawing Method (by 2 points or by 4 points).

- Creating a new annotation in Shape mode:

  - Create a separate Rectangle by selecting Shape.

  - Choose the opposite points. Your first rectangle is ready!

  - To learn more about creating a rectangle, read here.

  - You can adjust the boundaries and location of the rectangle using the mouse. The rectangle’s size is shown in the top right corner; you can check it by selecting any point of the shape. You can also undo your actions with Ctrl+Z and redo them with Shift+Ctrl+Z or Ctrl+Y.

- You can see the Object card in the objects sidebar or open it by right-clicking the object. You can change the attributes in the details section. You can perform basic operations or delete an object using the action menu button.

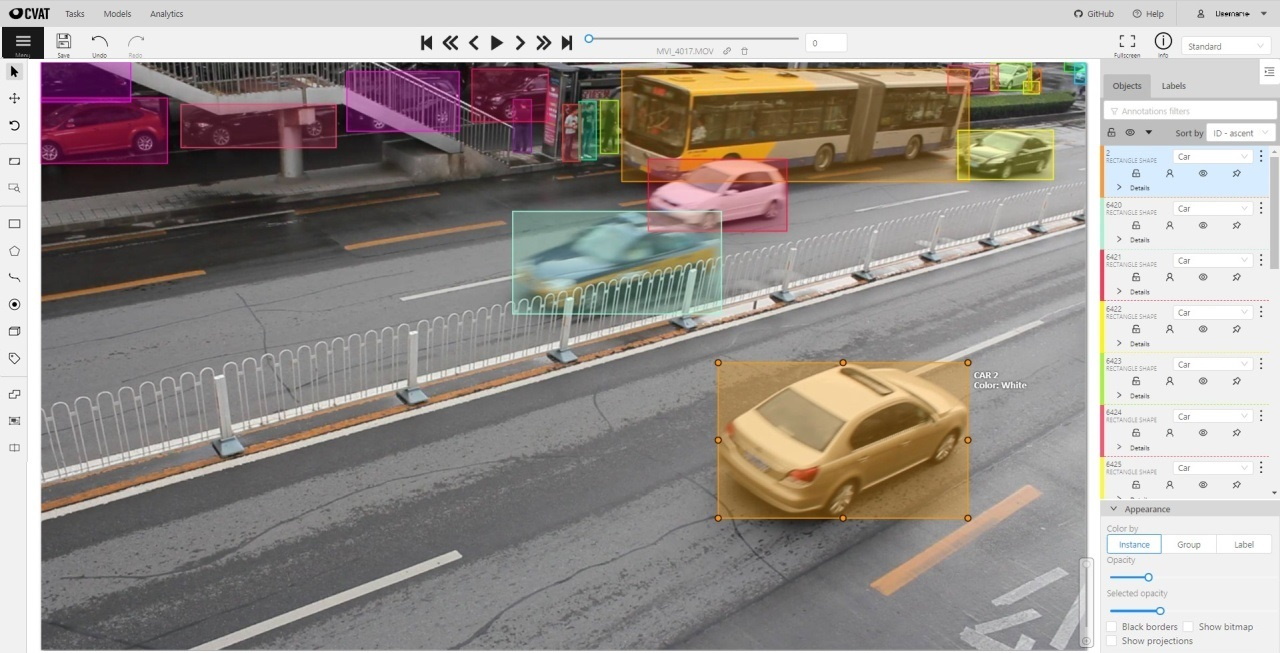

The following figure is an example of a fully annotated frame with separate shapes.

Occluded

Occlusion is an attribute used when an object is occluded by another object or isn’t fully visible in the frame. Use the Q shortcut to set this property quickly.

Example: the three cars in the figure below should be labeled as occluded.

If a frame contains too many objects and they are difficult to annotate because many shapes overlap in the same place, it makes sense to lock them. Shapes of locked objects are transparent, which makes it easy to annotate new objects. Besides, you cannot change previously annotated objects by accident. Shortcut: L.

2.2.3 - Annotation with polygons

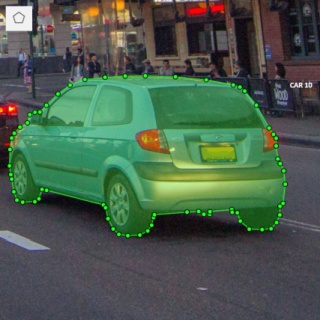

2.2.3.1 - Manual drawing

Polygons are used for semantic and instance segmentation.

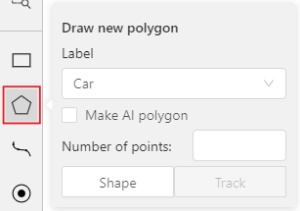

Before starting, you need to select Polygon on the controls sidebar and choose the correct Label.

- Click Shape to enter drawing mode. There are two ways to draw a polygon: either create points by clicking, or drag the mouse on the screen while holding Shift.

- When Shift isn’t pressed, you can zoom in/out (by scrolling the mouse wheel) and move (by clicking the mouse wheel and moving the mouse). You can also delete the previous point by right-clicking it.

- You can use the Selected opacity slider in the Objects sidebar to change the opacity of the polygon. You can read more in the Objects sidebar section.

- Press N again or click the Done button on the top panel to complete the shape.

- After creating the polygon, you can move the points or delete them, either by right-clicking a point and selecting Delete point in the context menu, or by clicking it while holding Alt.

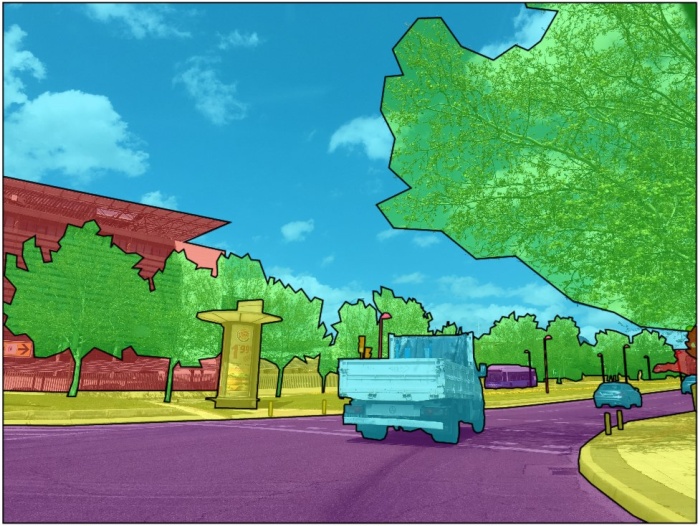

2.2.3.2 - Drawing using automatic borders

You can use auto borders when drawing a polygon. Using automatic borders allows you to automatically trace the outline of polygons existing in the annotation.

- To do this, go to Settings > Workspace tab and enable Automatic Bordering, or press Ctrl while drawing a polygon.

- Start drawing or editing a polygon.

- Points of other shapes will be highlighted, which means that the polygon can be attached to them.

- Define the part of the polygon path that you want to repeat.

- Click the first point of the contour part.

- Then click any point located on that part of the path. The selected point will be highlighted in purple.

- Click the last point, and the outline up to this point will be built automatically.

Besides, you can set a fixed number of points in the Number of points field; drawing will then stop automatically. To enable dragging, right-click inside the polygon and choose Switch pinned property.
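One way to picture the automatic bordering clicks: the existing shape’s outline is a closed list of points, and there are two possible paths from the first clicked point to the last one. The middle click tells which of the two to copy. The sketch below shows that selection on point indices; the function name contour_segment is hypothetical, and this is an illustration of the idea rather than CVAT’s code.

```python
def contour_segment(contour, start, middle, end):
    """Return the points of a closed contour from index `start` to `end`
    (inclusive), walking in the direction that passes through `middle`.
    Assumes start, middle and end are distinct indices into `contour`."""
    n = len(contour)

    def walk(step):
        indices = [start]
        i = start
        while i != end:
            i = (i + step) % n  # wrap around the closed contour
            indices.append(i)
        return indices

    forward = walk(1)
    path = forward if middle in forward else walk(-1)
    return [contour[i] for i in path]


contour = [(i, i * i) for i in range(8)]
segment = contour_segment(contour, 1, 7, 5)  # goes 1 -> 0 -> 7 -> 6 -> 5
```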

Below you can see results with opacity and black stroke:

If you need to annotate small objects, increase Image Quality to 95 in the Create task dialog for your convenience.

2.2.3.3 - Edit polygon

To edit a polygon, click it while holding Shift. This opens the polygon editor.

- In the editor, you can create new points or delete part of a polygon by closing the line on another point.

- When the Intelligent polygon cropping option is activated in the settings, CVAT considers two criteria to decide which part of a polygon should be cut off during automatic editing:

  - The first criterion is the number of cut points.

  - The second criterion is the length of the cut curve.

  If both criteria recommend cutting the same part, the algorithm works automatically; if not, you have to make the decision. If you want to choose manually which part of a polygon should be cut off, disable Intelligent polygon cropping in the settings. In this case, after closing the polygon, you can select the part of the polygon you want to keep.

- You can press Esc to cancel editing.
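The decision rule above can be sketched as follows. This sketch assumes that each criterion favors cutting off the smaller part (fewer points, shorter curve), which the text does not state explicitly. The function names are hypothetical and the code is an illustration, not CVAT’s algorithm. When the criteria disagree, it returns None, which corresponds to asking the user.

```python
import math


def curve_length(points):
    """Total length of an open polyline given as (x, y) points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def choose_part_to_cut(part_a, part_b):
    """Return "a" or "b" when both criteria agree on which part to cut,
    or None when they disagree and the user has to decide."""
    by_points = "a" if len(part_a) < len(part_b) else "b"
    by_length = "a" if curve_length(part_a) < curve_length(part_b) else "b"
    return by_points if by_points == by_length else None
```

For example, a short two-point piece versus a long four-point piece is cut automatically, while a long two-point piece versus a short three-point piece is left to the user.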

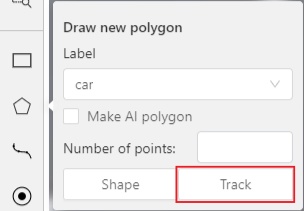

2.2.3.4 - Track mode with polygons

Polygons in track mode let you mark moving objects more accurately than rectangles.

-

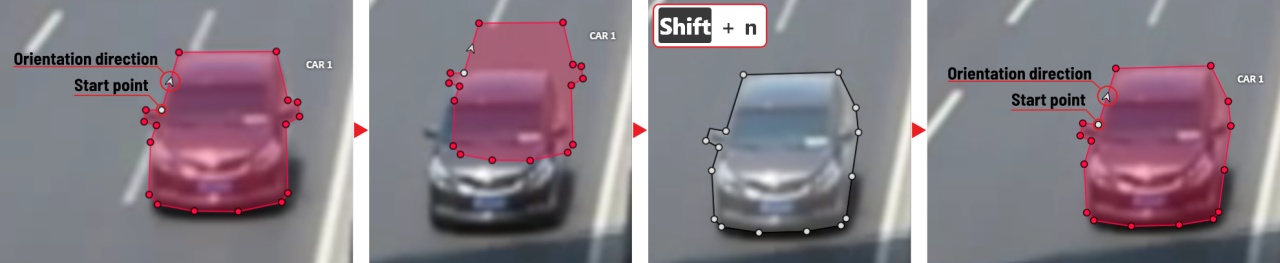

To create a polygon in the track mode, click the

Trackbutton.

-

Create a polygon the same way as in the case of Annotation with polygons. Press

Nor click theDonebutton on the top panel to complete the polygon. -

Pay attention to the fact that the created polygon has a starting point and a direction, these elements are important for annotation of the following frames.

-

After going a few frames forward press

Shift+N, the old polygon will disappear and you can create a new polygon. The new starting point should match the starting point of the previously created polygon (in this example, the top of the left mirror). The direction must also match (in this example, clockwise). After creating the polygon, pressNand the intermediate frames will be interpolated automatically.

-

If you need to change the starting point, right-click on the desired point and select

Set starting point. To change the direction, right-click on the desired point and select switch orientation.

There is no need to redraw the polygon every time with Shift+N; instead, you can simply move the points or edit part of the polygon with Shift+Click.
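Why do the starting point and direction matter? If intermediate frames are produced by interpolating the two keyframe polygons vertex by vertex, then the vertex lists must be paired in the same order. Otherwise, the shape twists between keyframes. The sketch below assumes both polygons have the same number of vertices. The helper names are hypothetical, and CVAT’s actual polygon interpolation is more general than this and is not reproduced here.

```python
def align_polygon(points, start_index, reverse=False):
    """Re-index a closed polygon so it begins at `start_index`,
    optionally walking it in the opposite direction."""
    rotated = points[start_index:] + points[:start_index]
    if reverse:
        # Keep the starting point first, then go the other way around.
        rotated = [rotated[0]] + rotated[:0:-1]
    return rotated


def interpolate_polygon(p0, p1, t):
    """Per-vertex linear interpolation between two aligned polygons, 0 <= t <= 1."""
    if len(p0) != len(p1):
        raise ValueError("this sketch assumes equal vertex counts")
    return [((1 - t) * x0 + t * x1, (1 - t) * y0 + t * y1)
            for (x0, y0), (x1, y1) in zip(p0, p1)]


first = [(0, 0), (2, 0), (2, 2), (0, 2)]
# The second keyframe was drawn starting from a different corner ...
drawn = [(12, 2), (10, 2), (10, 0), (12, 0)]
# ... so it is re-indexed to match the first polygon's starting point.
second = align_polygon(drawn, 2)
middle = interpolate_polygon(first, second, 0.5)
```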

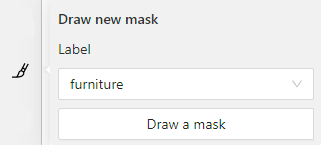

2.2.3.5 - Creating masks

Cutting holes in polygons

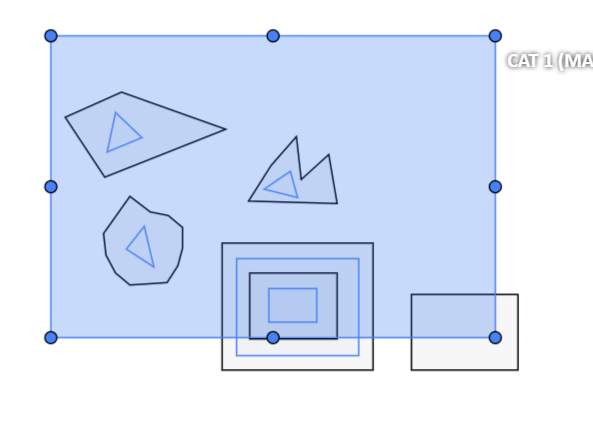

Currently, CVAT does not support cutting transparent holes in polygons. However, it is possible to generate holes in exported instance and class masks. To do this, define a background class in the task and draw the holes with it as additional shapes on top of the shapes that need holes:

The editor window:

Remember to set the z-order of the shapes with the [-] and [+, =] keys.

Exported masks:

Notice that it is currently impossible to have a single instance number for internal shapes (they will be merged into the largest one and then covered by “holes”).

Creating masks

There are several formats in CVAT that can be used to export masks:

- Segmentation Mask (PASCAL VOC masks)

- CamVid

- MOTS

- ICDAR

- COCO (RLE-encoded instance masks, guide)

- Datumaro

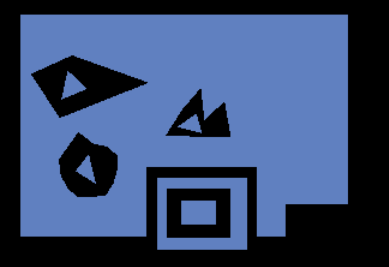

An example of exported masks (in the Segmentation Mask format):

Important notices:

- Both boxes and polygons are converted into masks

- Grouped objects are considered as a single instance and exported as a single mask (label and attributes are taken from the largest object in the group)

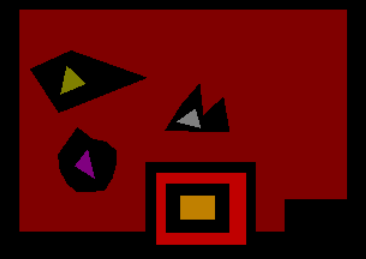

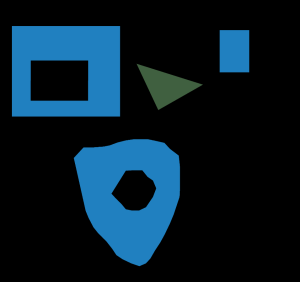

Class colors

All the labels have associated colors, which are used in the generated masks. These colors can be changed in the task label properties:

Label colors are also displayed in the annotation window on the right panel, where you can show or hide specific labels (only the presented labels are displayed):

A background class can be:

- A default, implicitly added class of black color (RGB 0, 0, 0).

- A background class with any color (takes priority; the name is case-insensitive).

- Any class of black color (RGB 0, 0, 0).

To change the background color in generated masks (black by default), change the background class color to the desired one.
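The rules above can be summarized as a small lookup. This sketch uses a hypothetical function background_color and represents labels as (name, RGB) pairs. It only resolves the resulting color: a class named "background" (in any letter case) wins, and otherwise the background is black, whether it comes from a black user class or from the implicit default class.

```python
def background_color(labels):
    """labels: list of (name, (r, g, b)) pairs; returns the mask background color."""
    for name, color in labels:
        if name.lower() == "background":
            return color  # an explicit "background" class takes priority
    # Otherwise the background is a black user class or the implicit
    # default class; in both cases the color is black.
    return (0, 0, 0)


labels = [("car", (255, 0, 0)), ("Background", (0, 0, 255))]
```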

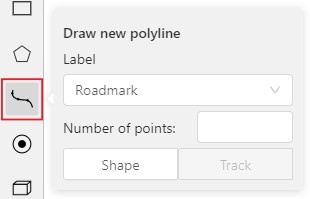

2.2.4 - Annotation with polylines

Polylines are used for road markup annotation, among other tasks.

Before starting, you need to select Polyline. You can set a fixed number of points in the Number of points field; drawing will then stop automatically.

Click Shape to enter drawing mode. There are two ways to draw a polyline: either create points by clicking, or drag the mouse on the screen while holding Shift.

When Shift isn’t pressed, you can zoom in/out (by scrolling the mouse wheel) and move (by clicking the mouse wheel and moving the mouse). You can delete the previous point by right-clicking it.

Press N again or click the Done button on the top panel to complete the shape.

You can delete a point by clicking it while holding Ctrl, or by right-clicking it and selecting Delete point. Clicking with Shift pressed opens the polyline editor. There you can create new points (by clicking or dragging) or delete part of the polyline by closing the red line on another point. Press Esc to cancel editing.

2.2.5 - Track mode

Usage examples:

- Create new annotations for a sequence of frames.

- Add/modify/delete objects for existing annotations.

- Edit tracks, merge several rectangles into one track.

- As in Shape mode, you need to select Rectangle on the sidebar. In the form that appears, select the desired Label and Drawing method.

- Creating a track for an object (look at the selected car as an example):

  - Create a Rectangle in Track mode by selecting Track.

  - In Track mode, the rectangle will be automatically interpolated on the next frames.

  - The cyclist starts moving on frame #2270. Let’s mark this frame as a keyframe. You can press K for that or select the star button (see the screenshot below).

  - If the object starts to change its position, you need to modify the rectangle where this happens. You don’t need to change the rectangle on every frame: simply update several keyframes, and the frames between them will be interpolated automatically.

  - Let’s jump 30 frames forward and adjust the boundaries of the object. See an example below:

  - After that, the rectangle of the object will be changed automatically on frames 2270 to 2300:

- When the annotated object disappears or becomes too small, you need to finish the track. To do this, choose the Outside property (shortcut: O).

- If the object isn’t visible on a couple of frames and then appears again, you can use the Merge feature to merge several individual tracks into one.

  - Create tracks for the moments when the cyclist is visible:

  - Select the Merge button or press M, then select any rectangle of the first track, any rectangle of the second track, and so on:

  - Select the Merge button or press M again to apply the changes.

- The final annotated sequence of frames in Interpolation mode can look like the clip below:

- Shapes that were created in track mode have extra navigation buttons.

  - These buttons help to jump to the previous/next keyframe.

  - The button helps to jump to the initial frame and to the last keyframe.
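To see what "the frames between them will be interpolated automatically" produces, here is a minimal sketch assuming linear interpolation of the box corners between two keyframes. The keyframe frames match the cyclist example, but the box coordinates are made up for illustration, and interpolate_box is a hypothetical helper.

```python
def interpolate_box(frame, kf_a, kf_b):
    """Linearly interpolate a box between two keyframes.

    kf_a, kf_b: (frame_number, (xtl, ytl, xbr, ybr)) with kf_a before kf_b.
    """
    (fa, box_a), (fb, box_b) = kf_a, kf_b
    if not fa <= frame <= fb:
        raise ValueError("frame must lie between the two keyframes")
    t = (frame - fa) / (fb - fa)  # 0 at the first keyframe, 1 at the second
    return tuple(a + (b - a) * t for a, b in zip(box_a, box_b))


# Hypothetical boxes on the keyframes 2270 and 2300 from the example above.
kf_2270 = (2270, (100, 50, 200, 150))
kf_2300 = (2300, (160, 50, 260, 150))
halfway = interpolate_box(2285, kf_2270, kf_2300)
```

Halfway between the keyframes, the box has moved halfway: 30 of the 60 pixels in this example.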

You can use the Split function to split one track into two tracks:

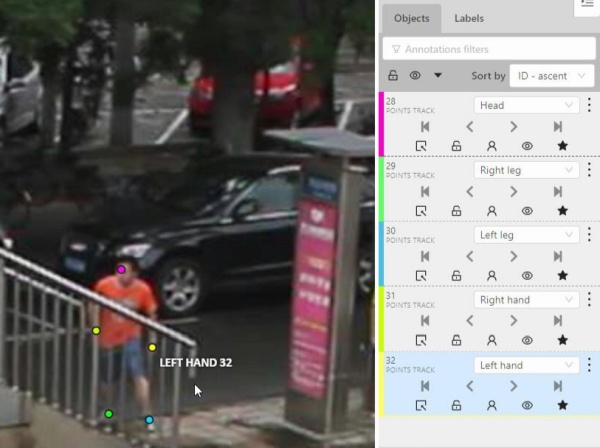

2.2.6 - Annotation with points

2.2.6.1 - Points in shape mode

Points are used for face and landmark annotation, among other tasks.

Before you start, you need to select Points. If necessary, you can set a fixed number of points in the Number of points field; drawing will then stop automatically.

Click Shape to enter drawing mode. Now you can start annotating the necessary area.

Points are automatically grouped: all points placed between the start and the finish of drawing are considered linked.

Press N again or click the Done button on the top panel to finish marking the area.

You can delete a point by clicking it while holding Ctrl, or by right-clicking it and selecting Delete point.

Clicking with Shift pressed opens the points shape editor. There you can add new points to an existing shape. You can zoom in/out (by scrolling the mouse wheel) and move (by clicking the mouse wheel and moving the mouse) while drawing. You can drag an object after it has been drawn and change the position of individual points after finishing an object.

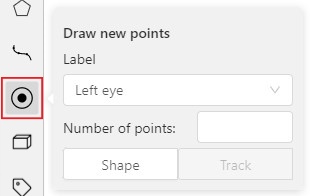

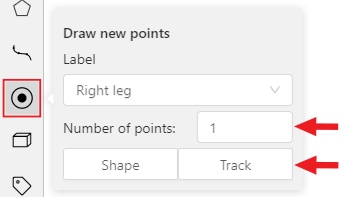

2.2.6.2 - Linear interpolation with one point

You can use linear interpolation for points to annotate a moving object:

- Before you start, select Points.

- Linear interpolation works only with one point, so you need to set Number of points to 1.

- After that, select Track.

- Click Track to enter drawing mode, then left-click to create a point. The shape will be completed automatically.

- Move forward a few frames and move the point to the desired position. This creates a keyframe, and the intermediate frames will be drawn automatically. You can work with this object as with any interpolated track: you can hide it using Outside, navigate between keyframes, etc.

- This way, you get linear interpolation using Points.
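A one-point track can be thought of as a sorted list of keyframes, some of which may mark the object as Outside. The sketch below looks up the point position on any frame under these assumptions. Positions are interpolated linearly between two visible keyframes. Before a keyframe that sets Outside, the last position is held. After the last keyframe, its value persists. The data layout and the function point_at are hypothetical and only illustrate the behavior described above.

```python
from bisect import bisect_right


def point_at(frame, keyframes):
    """keyframes: sorted list of (frame, (x, y) or None); None marks Outside.
    Returns the point on `frame`, or None if it is hidden or not yet created."""
    frames = [f for f, _ in keyframes]
    i = bisect_right(frames, frame) - 1
    if i < 0:
        return None  # before the track starts
    f0, p0 = keyframes[i]
    if p0 is None or f0 == frame or i + 1 == len(keyframes):
        return p0  # outside, exactly on a keyframe, or after the last keyframe
    f1, p1 = keyframes[i + 1]
    if p1 is None:
        return p0  # the next keyframe is Outside: hold the last position
    t = (frame - f0) / (f1 - f0)
    return (p0[0] + (p1[0] - p0[0]) * t, p0[1] + (p1[1] - p0[1]) * t)


track = [(0, (0, 0)), (10, (10, 20)), (15, None), (20, (5, 5))]
```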

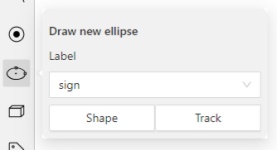

2.2.7 - Annotation with ellipses

Ellipses are used for road sign annotation, among other tasks.

First, select Ellipse on the controls sidebar.

Choose a Label and click Shape or Track to start drawing. An ellipse is created the same way as a rectangle: you specify two opposite points, and the ellipse is inscribed in an imaginary rectangle. Press N or click the Done button on the top panel to complete the shape.

You can rotate ellipses using a rotation point in the same way as rectangles.
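Because the ellipse is inscribed in the rectangle defined by the two clicks, its center is the rectangle’s center and its radii are half the rectangle’s width and height. A minimal sketch, with hypothetical function names and no rotation:

```python
def ellipse_from_points(x1, y1, x2, y2):
    """Center (cx, cy) and radii (rx, ry) of the ellipse inscribed in the
    rectangle with opposite corners (x1, y1) and (x2, y2)."""
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    rx, ry = abs(x2 - x1) / 2, abs(y2 - y1) / 2
    return cx, cy, rx, ry


def inside_ellipse(px, py, cx, cy, rx, ry):
    """True if (px, py) lies inside or on an axis-aligned ellipse (rx, ry > 0)."""
    return ((px - cx) / rx) ** 2 + ((py - cy) / ry) ** 2 <= 1


ellipse = ellipse_from_points(10, 20, 30, 60)
```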


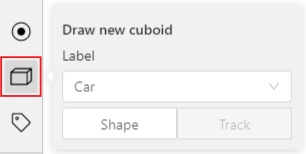

2.2.8 - Annotation with cuboids

Cuboids are used to annotate three-dimensional objects such as cars, boxes, etc. Currently, the feature supports one-point perspective and has the constraint that the vertical edges are exactly parallel to the sides.

2.2.8.1 - Creating the cuboid

Before you start, make sure that Cuboid is selected, and choose a drawing method: from rectangle or by 4 points.

Drawing cuboid by 4 points

Choose a drawing method by 4 points and select Shape to enter the drawing mode.

There are many ways to draw a cuboid.

You can draw the cuboid by placing 4 points, after that the drawing will be completed automatically.

The first 3 points determine the plane of the cuboid while the last point determines the depth of that plane.

For the first 3 points, it is recommended to only draw the 2 closest side faces, as well as the top and bottom face.

A few examples:

Drawing cuboid from rectangle

Choose a drawing method from rectangle and select Shape to enter the drawing mode.

When you draw using the rectangle method, you must select the frontal plane of the object using the bounding box.

The depth and perspective of the resulting cuboid can be edited.

Example:

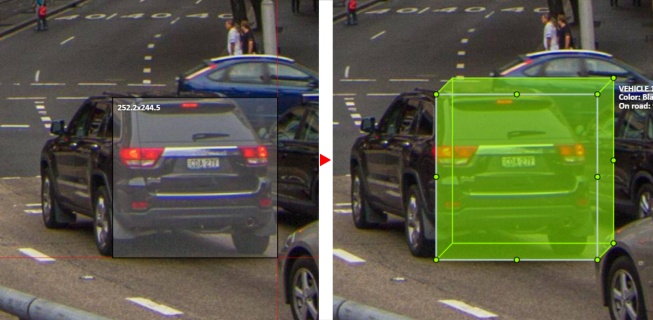

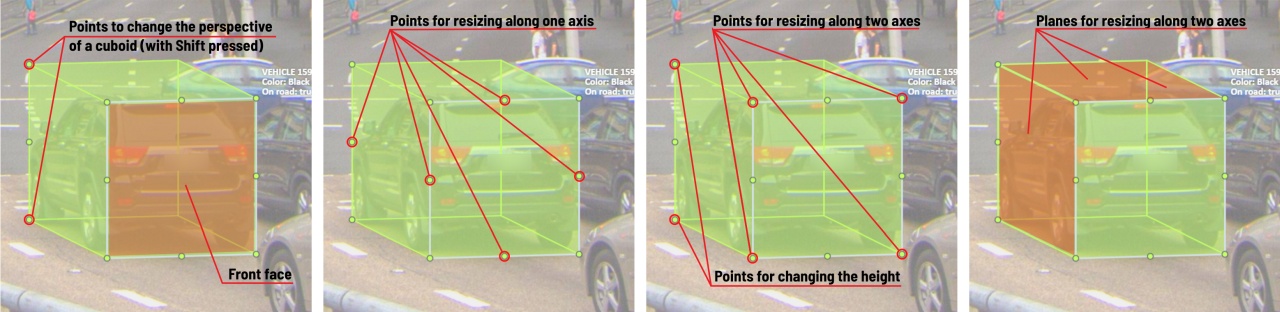

2.2.8.2 - Editing the cuboid

The cuboid can be edited in multiple ways: by dragging points, by dragging certain faces, or by dragging planes. First, notice that one face is painted with gray lines only; let us call it the front face.

You can move the cuboid by simply dragging the shape behind the front face. The cuboid can be extended by dragging on the point in the middle of the edges. The cuboid can also be extended up and down by dragging the point at the vertices.

To draw with perspective effects it should be assumed that the front face is the closest to the camera.

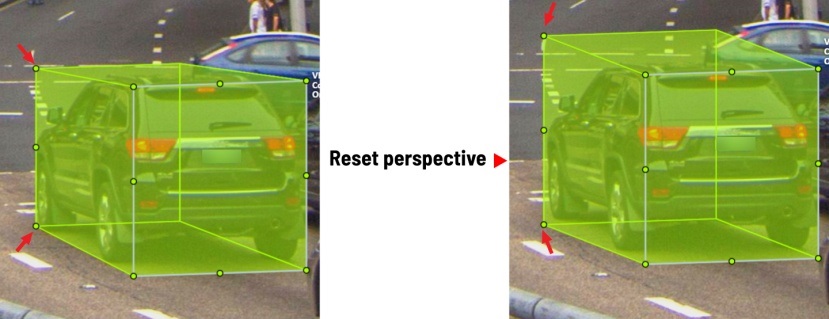

To begin, simply drag the points on the vertices that are not on the gray (front) face while holding Shift.

The cuboid can then be edited as usual.

If you wish to reset perspective effects, right-click the cuboid and select Reset perspective to return to a regular cuboid.

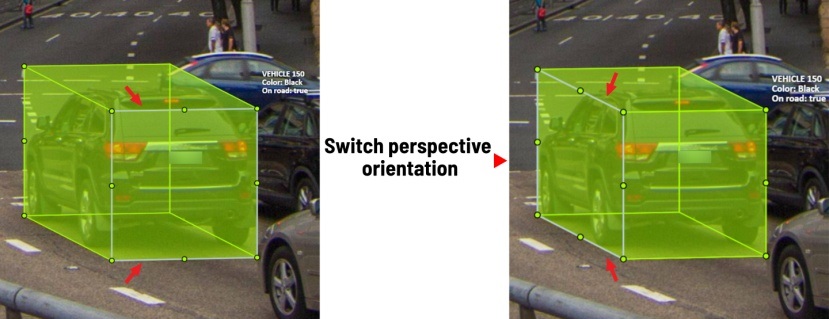

The location of the gray face can be swapped with the adjacent visible side face. To do so, right-click the cuboid and select Switch perspective orientation. Note that this also resets the perspective effects.

Certain faces of the cuboid can also be edited, these faces are: the left, right and dorsal faces, relative to the gray face. Simply drag the faces to move them independently from the rest of the cuboid.

You can also use cuboids in track mode, similar to rectangles in track mode (basics) or

2.2.9 - Annotation with skeletons

This guide describes how to annotate complex structures efficiently with Skeleton annotations.

Skeletons serve as annotation templates for annotating complex objects with a consistent structure, such as human pose estimation or facial landmarks.

A Skeleton is composed of multiple points (also referred to as elements), which may be connected by edges. Each point functions as an individual object with its own attributes and properties, such as color, occlusion, and visibility.

Skeletons can be exported in two formats: CVAT for image and COCO Keypoints.

Note

Skeletons’ labels cannot be imported into a label-less project by importing a dataset. You need to define the labels manually before the import.

See:

- Adding Skeleton manually

- Adding Skeleton labels from the model

- Annotation with Skeletons

- Automatic annotation with Skeletons

- Editing skeletons on the canvas

- Editing skeletons on the sidebar

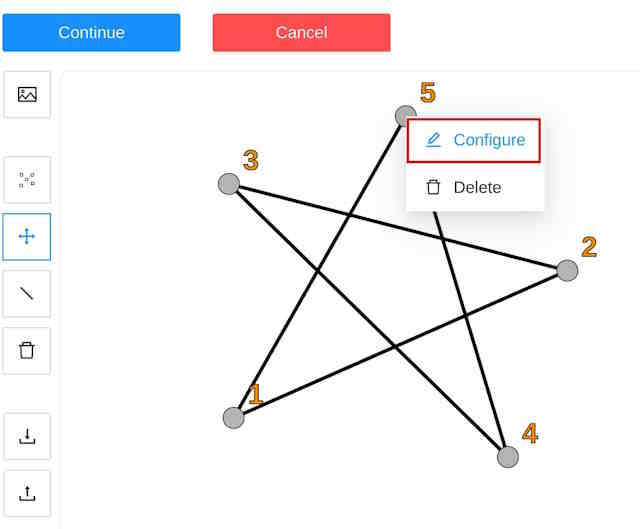

Adding Skeleton manually

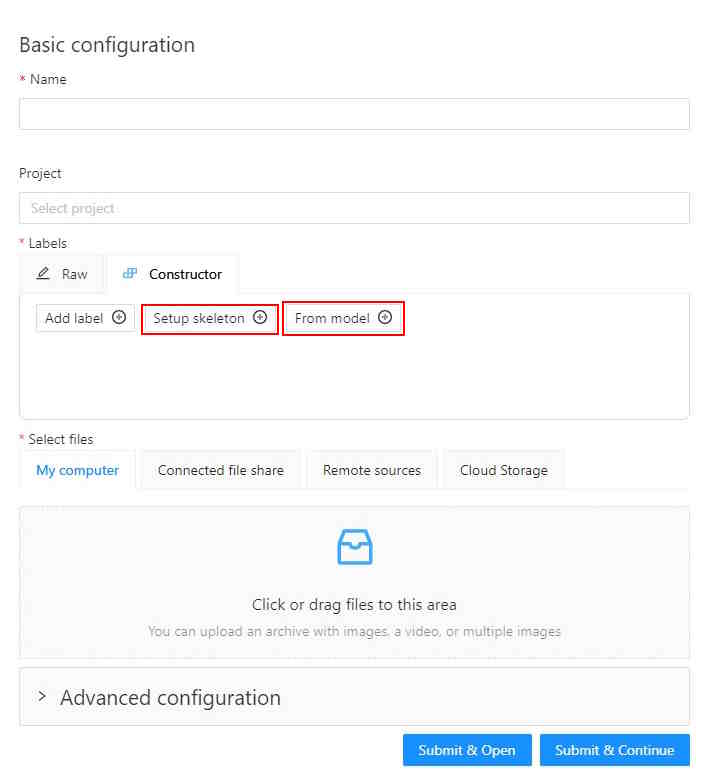

To start annotating using skeletons, you need to set up a Skeleton task in Configurator:

To open Configurator, when creating a task, click on the Setup skeleton button if you want to set up the skeleton manually, or From model if you want to add skeleton labels from a model.

Skeleton Configurator

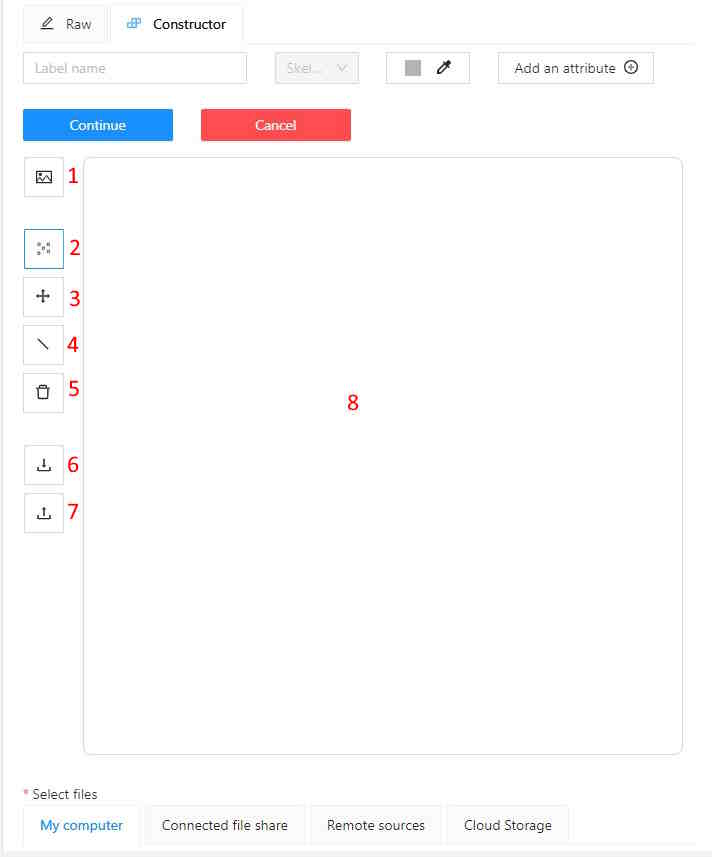

The skeleton Configurator is a tool to build skeletons for annotation. It has the following fields:

| Number | Name | Description |
|---|---|---|
| 1 | Upload background image | (Optional) Use it to upload a background image and draw a skeleton on top of it. |
| 2 | Add point | Use it to add Skeleton points to the Drawing area (8). |
| 3 | Click and drag | Use it to move points across the Drawing area (8). |
| 4 | Add edge | Use it to add edges on the Drawing area (8) that connect the points (2). |
| 5 | Remove point | Use it to remove points. Click Remove point and then any point (2) on the Drawing area (8) to delete it. |
| 6 | Download skeleton | Use it to download the created skeleton in .SVG format. |
| 7 | Upload skeleton | Use it to upload a skeleton in .SVG format. |
| 8 | Drawing area | Use it as a canvas to draw a skeleton. |

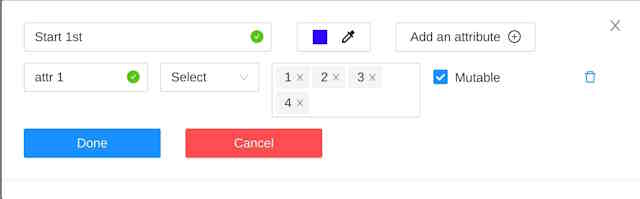

Configuring Skeleton points

You can name labels, set attributes, and change the color of each point of the skeleton.

To do this, right-click on the skeleton point and select Configure:

In the opened menu, you can change the point setting. It is similar to adding labels and attributes of the regular task:

A Skeleton point can only exist within its parent Skeleton.

Note

You cannot change the skeleton configuration for an existing task/project.

You can copy/insert skeleton configuration from the Raw tab of the label configurator.

Adding Skeleton labels manually

To create the Skeleton task, do the following:

- Open Configurator.

- (Optional) Upload background image.

- In the Label name field, enter the name of the label.

- (Optional) Add attribute.

  Note: you can also add attributes to each point individually. For more information, see Configuring Skeleton points.

- Use Add point to add points to the Drawing area.

- Use Add edge to add edges between points.

- Upload files.

- Click:

- Submit & Open to create and open the task.

- Submit & Continue to submit the configuration and start creating a new task.

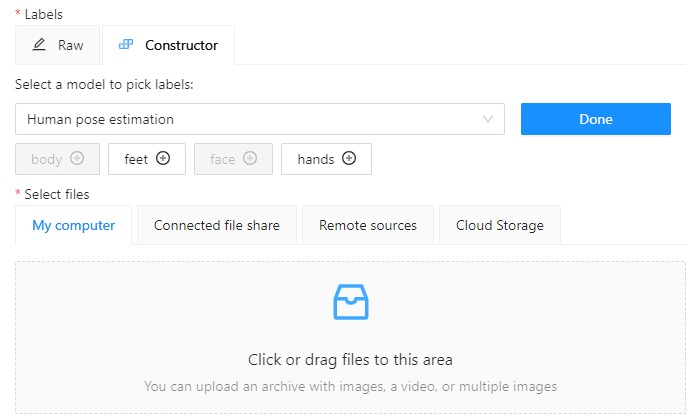

Adding Skeleton labels from the model

To add points from the model and annotate, do the following:

- Open Basic configurator.

- On the Constructor tab, click From model.

- From the Select a model to pick labels list, select the Human pose estimation model, or others if available.

- Click the model’s labels that you want to use. Selected labels will become gray.

- (Optional) If you want to adjust labels, click the Update attributes icon within the label. The Skeleton configurator will open, where you can configure the skeleton.

  Note: Labels cannot be adjusted after the task/project is created.

- Click Done. The labels that you selected will appear in the labels window.

- Upload data.

- Click:

  - Submit & Open to create and open the task.

  - Submit & Continue to submit the configuration and start creating a new task.

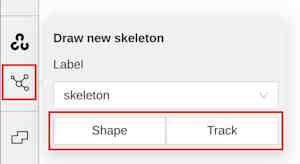

Annotation with Skeletons

To annotate with Skeleton, do the following:

- Open the job.

- On the tools panel, select Draw new skeleton.

- Select Track to annotate with tracking, or Shape to annotate without tracking.

- Draw a skeleton on the image.

Automatic annotation with Skeletons

To automatically annotate with Skeleton, do the following

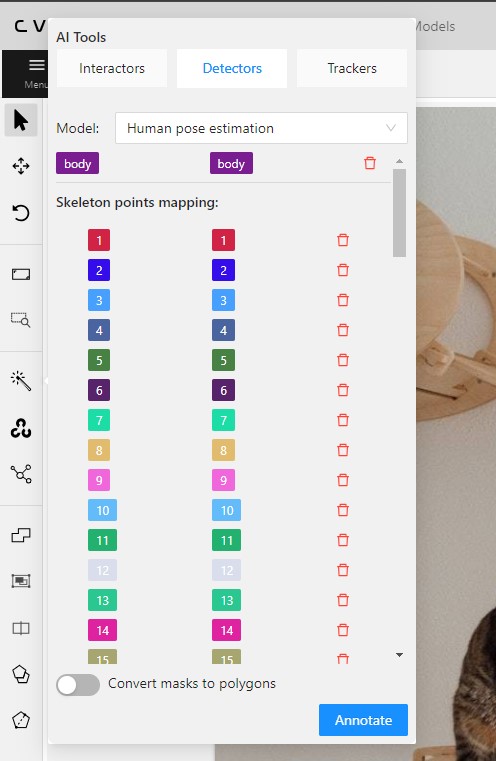

-

Open the job and on the tools panel select AI Tools > Detectors

-

From the drop-down list select the model. You will see a list of points to match and the name of the skeleton on the top of the list.

-

(Optional) By clicking on the Bin icon, you can remove any mapped item:

- A skeleton together with all points.

- Certain points from two mapped skeletons.

-

Click Annotate.

Editing skeletons on the canvas

A drawn skeleton is enclosed in a bounding box. This allows you to manipulate the skeleton as a regular bounding box, so you can drag, resize, or rotate it:

Upon repositioning a point, the bounding box adjusts automatically, without affecting other points:

Additionally, shortcuts apply both to the skeleton as a whole and to its elements:

- To apply a shortcut to the entire skeleton, hover over the bounding box and press the shortcut key. This works for shortcuts like lock, occluded, pinned, keyframe, and outside for skeleton tracks.

- To apply a shortcut to a specific skeleton point, hover over the point and press the shortcut key. The same list of shortcuts is available, with the addition of outside, which is also applicable to individual skeleton shape elements.

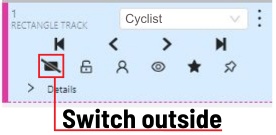

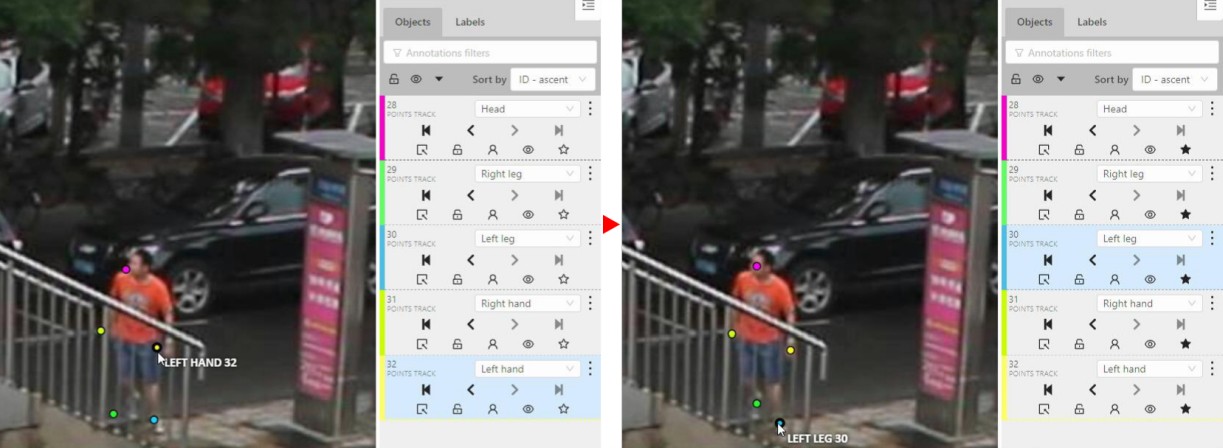

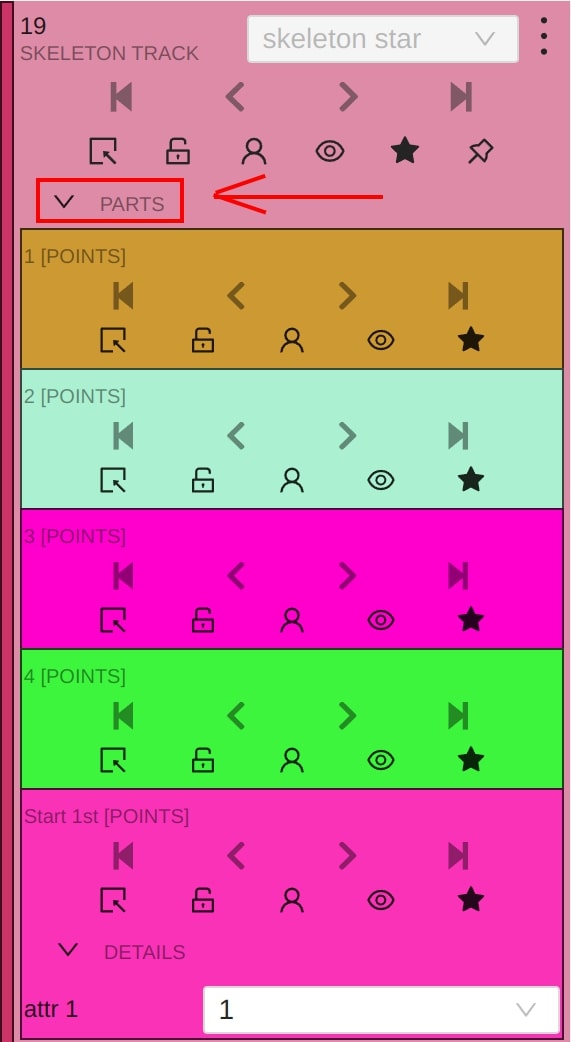

Editing skeletons on the sidebar

In CVAT, the sidebar offers an alternative method for setting up skeleton properties and attributes.

This approach is similar to that used for other object types supported by CVAT, but with a few specific alterations:

An additional collapsible section is provided for users to view a comprehensive list of skeleton parts.

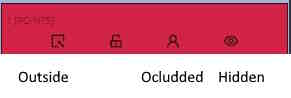

Skeleton points can have properties like Outside, Occluded, and Hidden.

Both Outside and Hidden make a skeleton point invisible.

- Outside is part of the annotations. Use it when part of the object is out of the frame borders.

- Hidden hides a point only for the annotator’s convenience. This property is not saved between sessions.

- Occluded keeps the point visible in the frame and usually means that the point is still in the frame, just hidden behind another object.
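The difference between these properties can be captured in a small data model. In this model, Outside and Hidden both stop a point from being drawn, Occluded does not, and only Outside and Occluded are persisted. The classes and the to_saved_dict method below are hypothetical and do not reflect CVAT’s storage or export schema. They only encode the rules listed above, plus the points-and-edges structure of a skeleton.

```python
from dataclasses import dataclass, field


@dataclass
class SkeletonPoint:
    name: str
    x: float
    y: float
    outside: bool = False   # saved: the point is out of the frame borders
    occluded: bool = False  # saved: behind another object, still drawn
    hidden: bool = False    # UI-only: not saved between sessions

    def is_drawn(self) -> bool:
        # Both Outside and Hidden make a point invisible; Occluded does not.
        return not (self.outside or self.hidden)


@dataclass
class Skeleton:
    label: str
    points: list
    edges: list = field(default_factory=list)  # pairs of point names

    def to_saved_dict(self) -> dict:
        """Serialize only the persisted properties (drops `hidden`)."""
        return {
            "label": self.label,
            "points": [{"name": p.name, "x": p.x, "y": p.y,
                        "outside": p.outside, "occluded": p.occluded}
                       for p in self.points],
            "edges": [list(e) for e in self.edges],
        }


skeleton = Skeleton("person", [
    SkeletonPoint("head", 1, 2, hidden=True),
    SkeletonPoint("hand", 3, 4, outside=True),
    SkeletonPoint("knee", 5, 6, occluded=True),
], [("head", "hand")])
```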

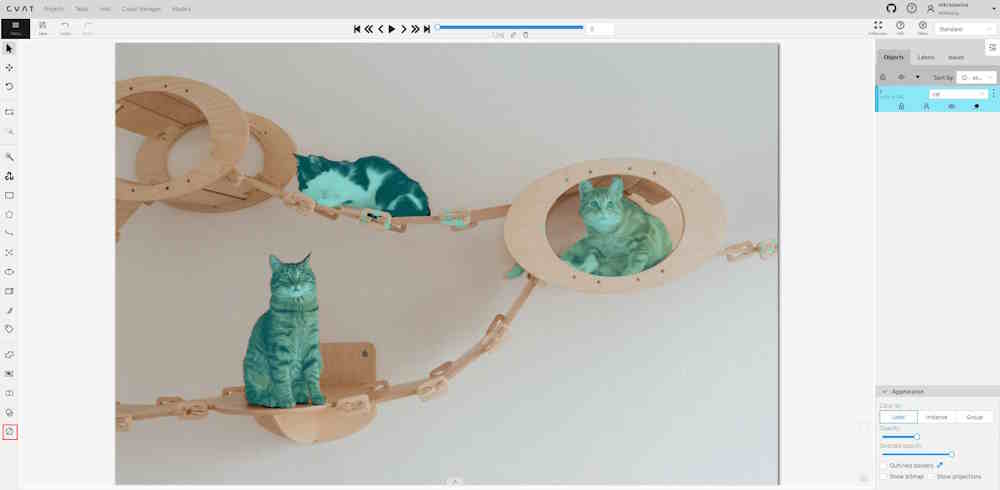

2.2.10 - Annotation with brush tool

With the brush tool, you can create masks for disjoint objects that have multiple parts, such as a house hiding behind trees, a car behind a pedestrian, or a pillar behind a traffic sign. The brush tool has several modes, for example: erase pixels, change brush shapes, and polygon-to-mask mode.

Use the brush tool for Semantic (Panoptic) and Instance Image Segmentation tasks.

For more information about segmentation masks in CVAT, see Creating masks.

See:

- Brush tool menu

- Annotation with brush

- Annotation with polygon-to-mask

- Remove underlying pixels

- AI Tools

- Import and export

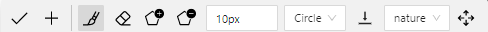

Brush tool menu

The brush tool menu appears on the top of the screen after you click Shape:

It has the following elements:

| Element | Description |
|---|---|
| Save mask | Saves the created mask. The saved mask will appear on the objects sidebar. |
| Save mask and continue | Adds a new mask to the objects sidebar and allows you to draw a new one immediately. |
| Brush | Adds a new mask or new regions to the previously added mask. |
| Eraser | Removes part of the mask. |
| Polygon | Polygon selection tool. The selection will become a mask. |
| Remove polygon selection | Subtracts part of the polygon selection. |
| Brush size | Brush size in pixels. Note: visible only when Brush or Eraser is selected. |
| Brush shape | Brush shape, with two options: circle and square. Note: visible only when Brush or Eraser is selected. |
| Remove underlying pixels | When you are drawing or editing a mask with this tool, pixels on other masks that are located at the same positions as the pixels of the current mask are deleted. |
| Hide mask | When drawing or editing a mask, you can enable this feature to temporarily hide the mask, allowing you to see the objects underneath more clearly. |
| Label | Label that will be assigned to the newly created mask. |
| Move | Click and hold to move the menu bar to another place on the screen. |
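To make Brush size and Brush shape concrete, the sketch below computes which pixels a single stamp covers and applies Brush and Eraser as set operations on a mask. It assumes that the size is the circle’s diameter or the square’s side in pixels, and that a pixel is covered when its center falls inside the brush. These are assumptions of the sketch, and the function name is hypothetical.

```python
import math


def brush_footprint(cx, cy, size, shape="circle"):
    """Pixels covered by one brush stamp centered at (cx, cy).

    Pixel (x, y) covers the unit square [x, x+1) x [y, y+1) and is included
    when its center (x + 0.5, y + 0.5) lies inside the brush.
    """
    r = size / 2
    pixels = set()
    for y in range(math.floor(cy - r), math.floor(cy + r) + 1):
        for x in range(math.floor(cx - r), math.floor(cx + r) + 1):
            dx, dy = x + 0.5 - cx, y + 0.5 - cy
            if shape == "square":
                inside = abs(dx) <= r and abs(dy) <= r
            elif shape == "circle":
                inside = dx * dx + dy * dy <= r * r
            else:
                raise ValueError(f"unknown brush shape: {shape}")
            if inside:
                pixels.add((x, y))
    return pixels


mask = set()
mask |= brush_footprint(5, 5, 4, "square")  # Brush: add pixels to the mask
mask -= brush_footprint(5, 5, 2, "circle")  # Eraser: remove pixels from the mask
```

With the same size, the circle covers fewer pixels than the square because the square’s corners fall outside the circle.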

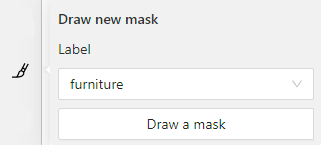

Annotation with brush

To annotate with brush, do the following:

1. From the controls sidebar, select Brush.

2. In the Draw new mask menu, select the label for your mask and click Shape.

   The Brush tool will be selected by default.

3. With the brush, draw a mask on the object you want to label.

   To erase a selection, use Eraser.

4. After you have applied the mask, click Save mask on the top menu bar (or press N on the keyboard) to finish the process.

5. The added object will appear on the objects sidebar.

To add the next object, repeat steps 1 to 5. All added objects will be visible on the image and the objects sidebar.

To save the job with all added objects, click Save on the top menu.

Annotation with polygon-to-mask

To annotate with polygon-to-mask, do the following:

1. From the controls sidebar, select Brush.

2. In the Draw new mask menu, select the label for your mask and click Shape.

3. In the brush tool menu, select Polygon.

4. With the Polygon tool, draw a mask for the object you want to label.

   To correct the selection, use Remove polygon selection.

   Use Save mask (or N on the keyboard) to switch between the add/remove polygon tools.

5. After you have added the polygon selection, click Save mask on the top menu bar (or press N on the keyboard) to finish the process.

6. Click Save mask again (or press N on the keyboard).

   The added object will appear on the objects sidebar.

To add the next object, repeat the steps above. All added objects will be visible on the image and the objects sidebar.

To save the job with all added objects, click Save on the top menu.
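Conceptually, polygon-to-mask fills every pixel enclosed by the polygon, and Remove polygon selection subtracts the pixels of another polygon. The sketch below illustrates this with an even-odd (ray casting) point-in-polygon test at pixel centers and Python sets. It is a hypothetical illustration of the idea and may not match CVAT’s rasterization exactly at polygon borders.

```python
def point_in_polygon(px, py, polygon):
    """Even-odd rule: count edge crossings of a ray going right from (px, py)."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > py) != (y2 > py):  # the edge spans the ray's height
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside


def polygon_to_mask(polygon, width, height):
    """Set of (x, y) pixels whose centers lie inside the polygon."""
    return {(x, y) for y in range(height) for x in range(width)
            if point_in_polygon(x + 0.5, y + 0.5, polygon)}


selection = polygon_to_mask([(1, 1), (4, 1), (4, 4), (1, 4)], 6, 6)
removed = polygon_to_mask([(2, 2), (3, 2), (3, 3), (2, 3)], 6, 6)
mask = selection - removed  # Remove polygon selection
```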

Remove underlying pixels

Use the Remove underlying pixels tool when you want to add a mask and simultaneously delete the pixels of other masks located at the same positions. This saves you from carefully drawing the same edge twice for two adjacent objects.
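In terms of pixel sets, Remove underlying pixels means that adding a mask also subtracts its pixels from every existing mask, so no pixel belongs to two masks. A minimal sketch with masks stored as a hypothetical dictionary that maps IDs to sets of (x, y) pixels:

```python
def add_mask(masks, mask_id, new_pixels, remove_underlying=True):
    """Add a mask (a set of (x, y) pixels) to `masks`, a dict of id -> pixel set."""
    if remove_underlying:
        for pixels in masks.values():
            # Delete the overlapping pixels from every existing mask.
            pixels.difference_update(new_pixels)
    masks[mask_id] = set(new_pixels)
    return masks


masks = {"road": {(0, 0), (1, 0), (2, 0)}}
add_mask(masks, "car", {(1, 0), (1, 1)})
```

Without the option, the overlapping pixel would stay in both masks, and you would have to erase it from the road mask by hand.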


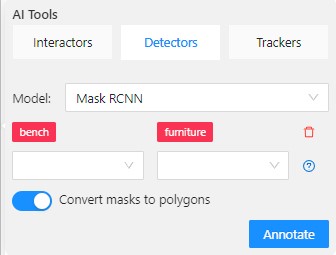
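The effect of Remove underlying pixels can be modelled with set arithmetic: the new mask is subtracted from every existing mask before it is added. In this sketch masks are sets of (x, y) pixel coordinates, and the function name `add_mask` and the example labels are hypothetical; it illustrates the idea rather than CVAT's code.

```python
# Minimal sketch (assumption, not CVAT's implementation): masks are sets of
# (x, y) pixel coordinates keyed by a hypothetical object name.
from typing import Dict, Set, Tuple

Pixel = Tuple[int, int]


def add_mask(masks: Dict[str, Set[Pixel]], name: str, new_mask: Set[Pixel],
             remove_underlying: bool) -> Dict[str, Set[Pixel]]:
    """Return a new mask collection with `new_mask` added under `name`."""
    if remove_underlying:
        # Clear the overlapping pixels from every mask already on the frame.
        updated = {key: pixels - new_mask for key, pixels in masks.items()}
    else:
        # Keep existing masks unchanged; overlaps are allowed.
        updated = {key: set(pixels) for key, pixels in masks.items()}
    updated[name] = set(new_mask)
    return updated


road = {(x, y) for x in range(4) for y in range(2)}       # 8 pixels
car = {(x, y) for x in range(2, 6) for y in range(1, 3)}  # overlaps road at 2 pixels
result = add_mask({"road": road}, "car", car, remove_underlying=True)
print(len(result["road"]), len(result["car"]))  # 6 8
```

Because the overlap is removed from the older mask, the shared edge only has to be drawn once, on the newer object.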

AI Tools

You can convert masks produced by AI tools to polygons. To do this, in the AI tools menu:

- Go to the Detectors tab.

- Switch the Masks to polygons toggle to the right.

- Select the source and destination labels from the drop-down lists.

- Click Annotate.

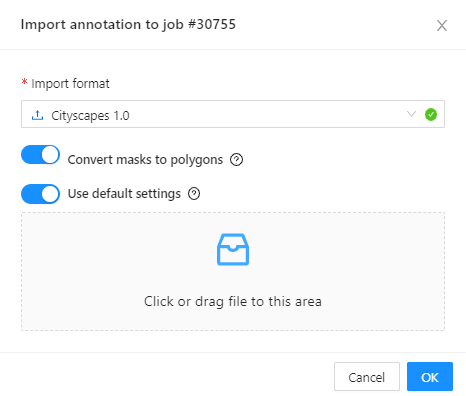

Import and export

For export, see Export dataset.

Import follows the general import dataset procedure, with the additional option of converting masks to polygons.

Note

This option is available only for formats that work with masks. To use it, when uploading the dataset, switch the Convert masks to polygon toggle to the right.

2.2.11 - Types of shapes

There are several shapes with which you can annotate your images:

- Rectangle or Bounding box

- Polygon

- Polyline

- Points

- Ellipse

- Cuboid

- Cuboid in 3D task

- Skeleton

- Tag

Here is what they look like:

Tag has no shape in the workspace, but it is displayed in the objects sidebar.

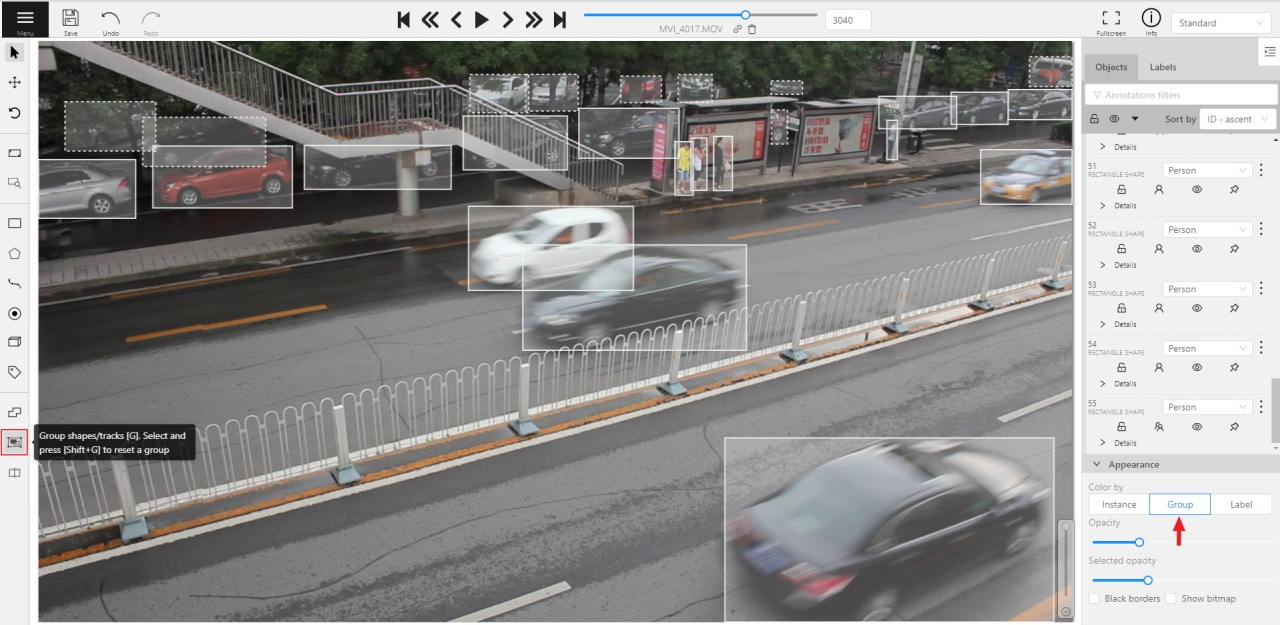

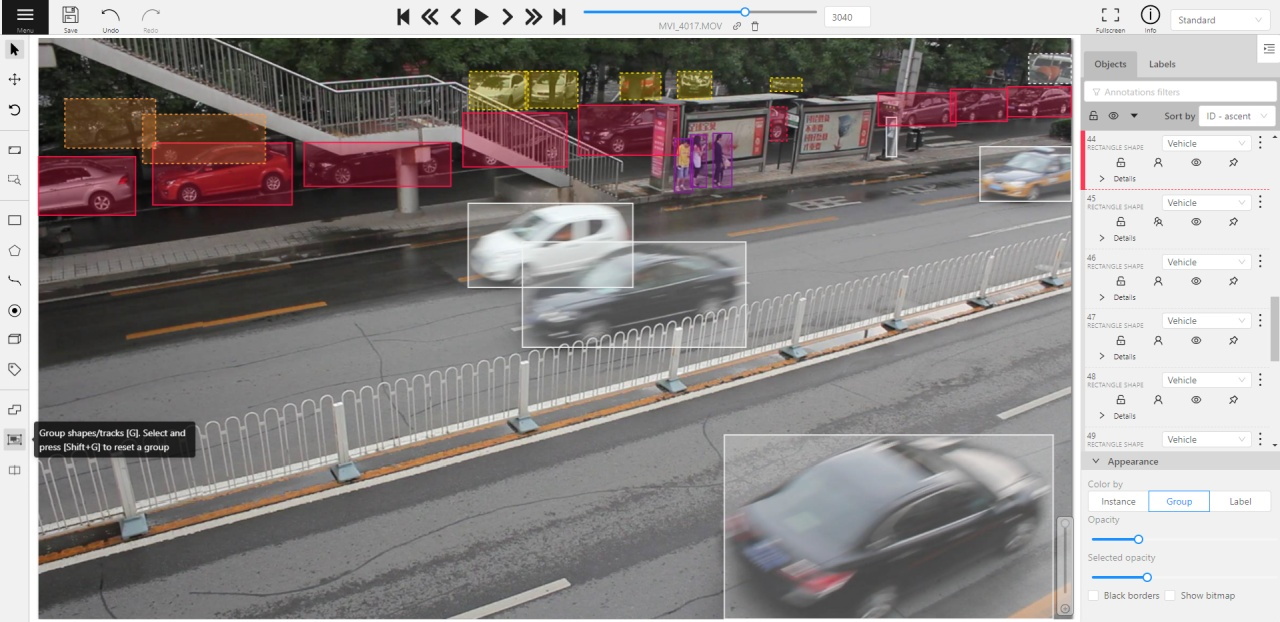

2.2.12 - Shape grouping

This feature allows you to group several shapes.

You can use the Group Shapes button or the following shortcuts:

- G: start selection / end selection in group mode

- Esc: close group mode

- Shift+G: reset the group for the selected shapes

You can select shapes by clicking on them or by selecting an area.

Grouped shapes will have a group_id field in the dumped annotation.

You can also switch the color distribution from instance (default) to group. To do this, enable the Color By Group checkbox.

Shapes that don’t have a group_id will be highlighted in white.

Shapes grouping video tutorial
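To illustrate how the group_id field could be used after dumping annotations, the sketch below buckets shapes by group and mimics the Color By Group idea, with ungrouped shapes drawn white. The dict layout, the "id" key, and treating a missing or 0 group_id as "not grouped" are hypothetical conventions for this example, not a documented CVAT export schema.

```python
# Illustrative sketch (assumption, not CVAT's code). Each shape from a dumped
# annotation is modelled as a dict; "group_id" follows the text above, while
# the "id" key and "missing or 0 means ungrouped" are hypothetical.
from collections import defaultdict
from typing import Dict, List, Optional

WHITE = "#ffffff"


def group_shapes(shapes: List[dict]) -> Dict[Optional[int], List[dict]]:
    """Bucket shapes by group_id; ungrouped shapes go under the key None."""
    groups: Dict[Optional[int], List[dict]] = defaultdict(list)
    for shape in shapes:
        groups[shape.get("group_id") or None].append(shape)
    return dict(groups)


def color_by_group(shapes: List[dict], palette: Dict[int, str]) -> List[str]:
    """One color per group (taken from `palette`); ungrouped shapes are white."""
    colors = []
    for shape in shapes:
        group_id = shape.get("group_id")
        colors.append(palette.get(group_id, WHITE) if group_id else WHITE)
    return colors


if __name__ == "__main__":
    shapes = [{"id": 1, "group_id": 3}, {"id": 2, "group_id": 3}, {"id": 3}]
    print({k: [s["id"] for s in v] for k, v in group_shapes(shapes).items()})  # {3: [1, 2], None: [3]}
    print(color_by_group(shapes, {3: "#ff0000"}))  # ['#ff0000', '#ff0000', '#ffffff']
```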

2.3 - Editing & Utility Tools

This section covers tools that help modify shapes, manage large annotations, and streamline the annotation workflow.

Use these guides to learn how to join or split shapes, group them, filter objects, and work with contextual images and frame operations.

2.3.1 - Join and slice tools

In CVAT, you can modify shapes either by joining multiple shapes into a single shape or by slicing a single shape into several shapes.

This document provides guidance on how to perform these operations effectively.


Joining masks

The Join masks tool is specifically designed to work with mask annotations.

This tool is useful in scenarios where a single object in an image is annotated with multiple shapes, and there is a need to merge these shapes into a single one.

To join masks, do the following:

- From the Edit block, select Join masks.

- Click on the canvas area to select the masks that you want to join.

- (Optional) To remove a mask from the selection, click it one more time.

- Click Join masks again (J) to execute the join operation.

Upon completion, the selected masks will be joined into a single mask.
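If each mask is viewed as a set of pixels, joining is the union of the selected sets. The sketch below shows only that geometric idea under this pixel-set assumption; the name `join_masks` is hypothetical, and how the label of the joined mask is chosen is not modelled.

```python
# Minimal sketch (assumption, not CVAT's code): masks are sets of (x, y) pixels;
# joining keeps every pixel covered by any selected mask, i.e. the set union.
from typing import Iterable, Set, Tuple

Pixel = Tuple[int, int]


def join_masks(selected: Iterable[Set[Pixel]]) -> Set[Pixel]:
    """Merge the selected masks into a single mask (union of their pixels)."""
    joined: Set[Pixel] = set()
    for mask in selected:
        joined |= mask
    return joined


left_half = {(0, 0), (0, 1)}
right_half = {(1, 0), (1, 1), (0, 1)}  # (0, 1) is shared, so it appears once
print(sorted(join_masks([left_half, right_half])))  # [(0, 0), (0, 1), (1, 0), (1, 1)]
```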

Slicing polygons and masks

The Slice mask/polygon tool is compatible with both mask and polygon annotations.

This tool is useful in scenarios where multiple objects in an image are annotated with one shape, and there is a need to slice this shape into multiple parts.

Note

The shape can be sliced into only two parts at a time. Use the slice tool several times to split a shape into as many parts as you need.

To slice a mask or polygon, do the following:

- From the Edit block, select Slice mask/polygon.

- Click on the shape you intend to slice. A black contour will appear around the selected shape.

- Set an initial point for slicing by clicking on the contour.

- Draw a line across the shape to define the slicing path. Hold Shift to add points automatically on cursor movement. The line cannot cross itself, and it cannot cross the contour more than twice.

- (Optional) Right-click to cancel the latest point.

- Click on the contour (Alt+J) (outside the contour) to finalize the slicing.

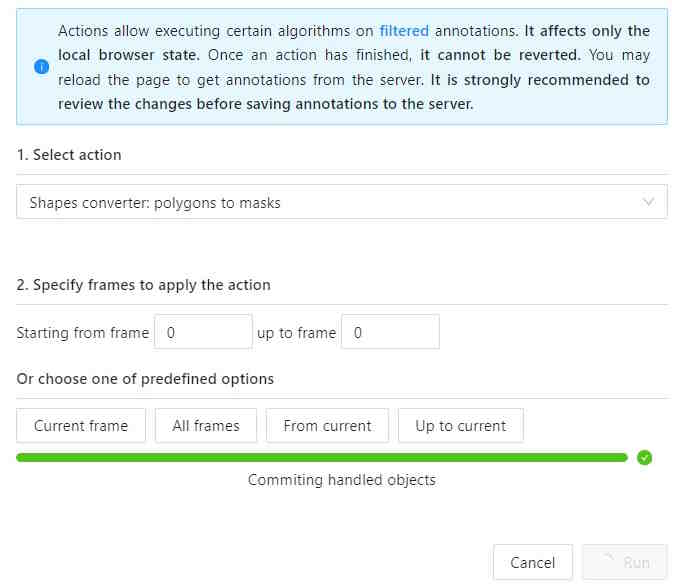
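The two restrictions on the slicing line (it must not cross itself and must not cross the contour more than twice) can be checked with standard segment-intersection tests. This is a hedged sketch of such a check, not CVAT's code: the names `segments_cross` and `is_valid_slice` are hypothetical, and only proper crossings are counted, so a point lying exactly on the contour does not count as a crossing.

```python
# Minimal sketch (assumption, not CVAT's code) of the two rules for a slicing
# line: no self-crossing, and at most two crossings of the closed contour.
from typing import Sequence, Tuple

Point = Tuple[float, float]


def _orient(a: Point, b: Point, c: Point) -> float:
    """Sign of the cross product (b - a) x (c - a): left (+), right (-), collinear (0)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def segments_cross(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    """True if segments p1-p2 and q1-q2 properly intersect.

    Collinear overlaps and touching at an endpoint are not counted.
    """
    d1, d2 = _orient(q1, q2, p1), _orient(q1, q2, p2)
    d3, d4 = _orient(p1, p2, q1), _orient(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0


def is_valid_slice(line: Sequence[Point], contour: Sequence[Point]) -> bool:
    segments = list(zip(line, line[1:]))
    # Rule 1: no two non-adjacent segments of the slicing line may cross.
    for i in range(len(segments)):
        for j in range(i + 2, len(segments)):
            if segments_cross(*segments[i], *segments[j]):
                return False
    # Rule 2: count crossings with the closed contour (last vertex joins the first).
    edges = list(zip(contour, list(contour[1:]) + [contour[0]]))
    crossings = sum(segments_cross(*seg, *edge) for seg in segments for edge in edges)
    return crossings <= 2


if __name__ == "__main__":
    square = [(0, 0), (4, 0), (4, 4), (0, 4)]
    print(is_valid_slice([(-1, 2), (5, 2)], square))                   # True
    print(is_valid_slice([(-1, 1), (5, 1), (5, 3), (-1, 3)], square))  # False
```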

2.3.2 - Shapes converter

The shapes converter is a feature that enables bulk actions on filtered shapes. It allows you to perform mutual conversion between masks, polygons and rectangles.
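As an illustration of one direction of these conversions, a rectangle produced from a polygon or a mask could be the tight axis-aligned bounding box of its points or pixels. This is an assumption about what a "to rectangle" conversion yields, and the function names and the (x_min, y_min, x_max, y_max) tuple are hypothetical, not CVAT's API.

```python
# Illustrative sketch (assumption): a "to rectangle" conversion modelled as the
# tight axis-aligned bounding box. Names and the box tuple are hypothetical.
from typing import Iterable, Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def polygon_to_rectangle(points: Sequence[Point]) -> Box:
    """Smallest axis-aligned box containing every polygon vertex."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))


def mask_to_rectangle(pixels: Iterable[Tuple[int, int]]) -> Box:
    """Box around a mask given as (x, y) pixels.

    x_max and y_max are exclusive pixel edges (+1), so a single pixel yields a
    1x1 box; this convention is an assumption.
    """
    pixel_list = list(pixels)
    if not pixel_list:
        raise ValueError("an empty mask has no bounding box")
    xs = [x for x, _ in pixel_list]
    ys = [y for _, y in pixel_list]
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


if __name__ == "__main__":
    print(polygon_to_rectangle([(2, 3), (8, 1), (5, 9)]))  # (2, 1, 8, 9)
    print(mask_to_rectangle({(1, 1), (3, 2)}))              # (1, 1, 4, 3)
```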

Note

All shapes converter functions work only when a filter is set up.

Run actions menu

Annotation actions can be accessed from the annotation menu. To access it, click the burger icon and then select Run actions.

Note

All shapes converter functions apply only to the objects that match the filter you have set up.

You will see the following dialog:

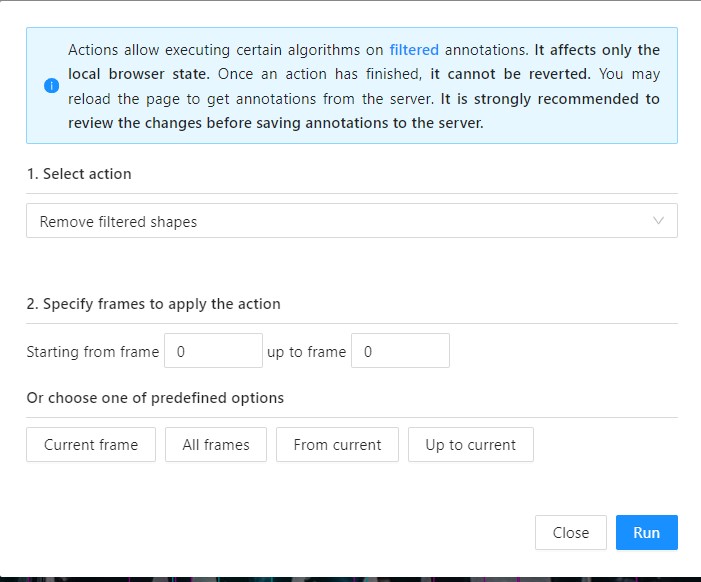

With the following fields:

| Field | Description |
|---|---|
| Select action | Drop-down list with the available actions. Note: only Propagate shapes and Remove filtered shapes are available in the community version. |
| Specify frames to run action | Field where you can specify the frame range for the selected action. Enter the starting frame in the Starting from frame field, and the ending frame in the up to frame field. If nothing is selected here or in the Choose one of the predefined options section, the action will be applied to all frames. |
| Choose one of the predefined options | Predefined options to apply to frames. A selection here is mutually exclusive with Specify frames to run action. If nothing is selected here or in the Specify frames to run action section, the action will be applied to all frames. |
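The frame-selection rules in the table above (a range or a predefined option, never both, and all frames when nothing is chosen) can be summarized in a small function. This is a hypothetical sketch: treating "up to frame" as inclusive and modelling a predefined option as an explicit list of frame numbers are assumptions, and `frames_to_process` is not part of CVAT.

```python
# Illustrative sketch (assumption): how the frame fields above could be
# resolved. "up to frame" is treated as inclusive and a predefined option is
# modelled as an explicit list of frame numbers; both are assumptions.
from typing import List, Optional, Sequence


def frames_to_process(total_frames: int,
                      start: Optional[int] = None,
                      stop: Optional[int] = None,
                      preset: Optional[Sequence[int]] = None) -> List[int]:
    """Return the frame numbers an action would run on."""
    if preset is not None and (start is not None or stop is not None):
        # The two ways of choosing frames are mutually exclusive.
        raise ValueError("choose either a frame range or a predefined option")
    if preset is not None:
        return sorted({f for f in preset if 0 <= f < total_frames})
    # Nothing selected means all frames; otherwise clamp the range to the job.
    first = 0 if start is None else max(start, 0)
    last = total_frames - 1 if stop is None else min(stop, total_frames - 1)
    return list(range(first, last + 1))


if __name__ == "__main__":
    print(frames_to_process(10, start=3, stop=5))  # [3, 4, 5]
    print(len(frames_to_process(10)))              # 10
```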

Convert shapes

Recommended Precautions Before Running Annotation Actions

- Saving changes: It is recommended to save all changes before initiating the annotation action. If unsaved changes are detected, a prompt will advise you to save them to avoid potential data loss.

- Disable auto-save: Before running the annotation action, it is advisable to disable the auto-save feature. A notification will suggest this if auto-save is currently active.

- Committing changes: Changes applied during the annotation session will not be committed to the server until saving is initiated, either manually by the user or by the auto-save feature, if it is enabled.

To convert shapes, do the following:

- Annotate your dataset.

- Set up filters.

- From the burger menu, select Run actions.

- Choose the action you need from the Select action drop-down list.

- (Optional) In the Starting from frame field, enter the frame number where the action should begin, and in the up to frame field, specify the frame number where the action should end.

- (Optional) Select an option from Or choose one of the predefined options to apply the action.

- Click Run.

A progress bar will appear. You can abort the process by clicking Cancel until the modified objects are committed at the end of the pipeline.

Note

Once the action is applied, it cannot be undone.

Convert shapes video tutorial

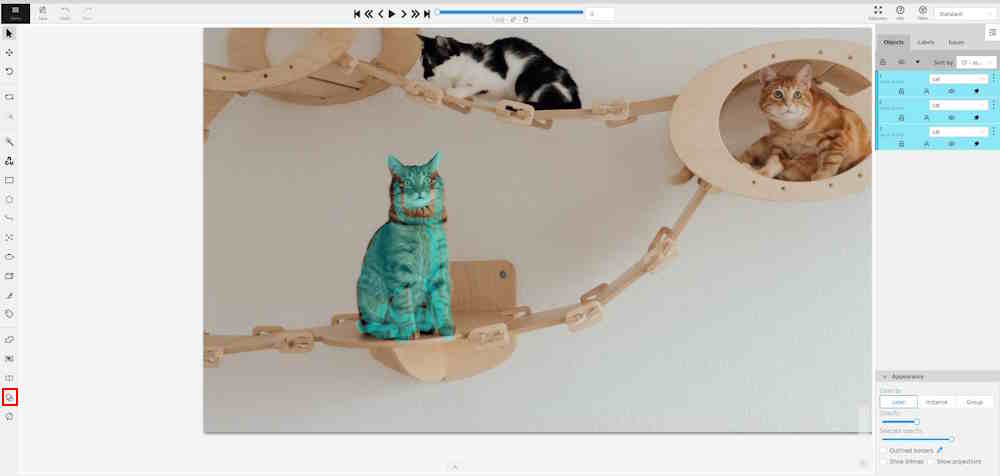

2.3.3 - Contextual images

Contextual images (or related images) are additional images that provide context or additional information related to the primary image.

Use them to add extra context about the object and improve the accuracy of annotation.

Contextual images are available for 2D and 3D tasks.


Folder structure

To add contextual images to the task, you need to organize the images folder into one of the supported file layouts. A task with contextual images can be created either from an archive or from raw files.

Example for 2D tasks:

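- In the folder with the images for annotation, create a folder named related_images.

- In related_images, add a subfolder with the same name as the primary image it should be linked to, with the dot before the file extension replaced by an underscore (for example, image_1_to_be_annotated.jpg becomes image_1_to_be_annotated_jpg, as in the layout below).

- Place the contextual image(s) in the subfolder created in the previous step.

- Add the folder to the archive.

- Create the task.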
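The steps above can be scripted. The sketch below copies context images into root/related_images/<stem>_<extension>/ and zips the result, following the naming shown in the example layout. It only handles images that sit directly in the root folder; the function names are hypothetical, and how CVAT treats file names containing several dots is not covered here.

```python
# Illustrative sketch (assumption): builds the 2D layout described above and
# zips it. Subfolder naming "<stem>_<extension>" follows the example layout;
# the function names are hypothetical and not part of CVAT.
import shutil
import tempfile
import zipfile
from pathlib import Path
from typing import Dict, List


def related_dir_name(image_name: str) -> str:
    """'image_1.jpg' -> 'image_1_jpg'."""
    path = Path(image_name)
    return f"{path.stem}_{path.suffix.lstrip('.')}"


def build_task_archive(root: Path, context: Dict[str, List[Path]], archive: Path) -> None:
    """Copy context images into root/related_images/<name>/ and zip `root`.

    `archive` should be outside `root` so the zip is not added to itself.
    """
    for image_name, context_images in context.items():
        target = root / "related_images" / related_dir_name(image_name)
        target.mkdir(parents=True, exist_ok=True)
        for ctx in context_images:
            shutil.copy2(ctx, target / ctx.name)
    with zipfile.ZipFile(archive, "w") as zf:
        for path in sorted(root.rglob("*")):
            if path.is_file():
                # Store paths relative to root, with forward slashes.
                zf.write(path, path.relative_to(root).as_posix())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        base = Path(tmp)
        root = base / "root_directory"
        root.mkdir()
        (root / "image_1_to_be_annotated.jpg").write_bytes(b"primary")
        ctx = base / "context_image_for_image_1.jpg"
        ctx.write_bytes(b"context")
        build_task_archive(root, {"image_1_to_be_annotated.jpg": [ctx]}, base / "task.zip")
        with zipfile.ZipFile(base / "task.zip") as zf:
            print(zf.namelist())
```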

Supported file layouts for 2D and 3D tasks:

root_directory/
  image_1_to_be_annotated.jpg
  image_2_to_be_annotated.jpg
  related_images/
    image_1_to_be_annotated_jpg/
      context_image_for_image_1.jpg
    image_2_to_be_annotated_jpg/
      context_image_for_image_2.jpg
  subdirectory_example/
    image_3_to_be_annotated.jpg
    related_images/
      image_3_to_be_annotated_jpg/
        context_image_for_image_3.jpg

Point clouds and related images are put into the same directory. Related files must have the same names as the corresponding point clouds. This format is limited to one related image per point cloud.

root_directory/
  pointcloud1.pcd
  pointcloud1.jpg
  pointcloud2.pcd
  pointcloud2.png
  ...
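Before uploading data in this layout, the "same name, at most one related image" rule can be checked with a short script. The set of accepted image extensions and the name `pair_point_clouds` are assumptions for this sketch; adjust them to your data.

```python
# Illustrative sketch (assumption): checks the "same name, one related image"
# rule for point clouds and images in one directory. The accepted image
# extensions are an assumption; adjust them to your data.
import tempfile
from pathlib import Path
from typing import Dict, Optional

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def pair_point_clouds(root: Path) -> Dict[str, Optional[str]]:
    """Map each *.pcd file name to its same-named related image (or None)."""
    pairs: Dict[str, Optional[str]] = {}
    for cloud in sorted(root.glob("*.pcd")):
        images = [p.name for p in root.iterdir()
                  if p.stem == cloud.stem and p.suffix.lower() in IMAGE_SUFFIXES]
        if len(images) > 1:
            raise ValueError(f"{cloud.name}: only one related image is allowed in this layout")
        pairs[cloud.name] = images[0] if images else None
    return pairs


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for name in ["pointcloud1.pcd", "pointcloud1.jpg", "pointcloud2.pcd", "pointcloud2.png"]:
            (root / name).write_bytes(b"")
        print(pair_point_clouds(root))
```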

Each point cloud is put into a separate directory with a matching name. Related images are put next to the corresponding point cloud; their file names and extensions can be arbitrary.

root_directory/
  pointcloud1/
    pointcloud1.pcd
    pointcloud1_ri1.png
    pointcloud1_ri2.jpg
    ...
  pointcloud2/
    pointcloud2.pcd
    pointcloud2_ri1.bmp
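For this per-directory layout, each subdirectory is expected to contain <name>.pcd plus any number of related images. The sketch below lists the related images for each point cloud; treating every other file in the subdirectory as a related image and skipping directories without a matching .pcd are assumptions, and `collect_per_directory` is a hypothetical name.

```python
# Illustrative sketch (assumption): lists related images per point cloud for
# the "one directory per point cloud" layout. Every other file next to the
# .pcd is treated as a related image; that rule is an assumption.
import tempfile
from pathlib import Path
from typing import Dict, List


def collect_per_directory(root: Path) -> Dict[str, List[str]]:
    result: Dict[str, List[str]] = {}
    for sub in sorted(p for p in root.iterdir() if p.is_dir()):
        cloud = sub / f"{sub.name}.pcd"
        if not cloud.is_file():
            continue  # assumption: directories without a matching point cloud are skipped
        result[cloud.name] = sorted(p.name for p in sub.iterdir() if p.is_file() and p != cloud)
    return result


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for rel in ["pointcloud1/pointcloud1.pcd", "pointcloud1/pointcloud1_ri1.png"]:
            (root / rel).parent.mkdir(parents=True, exist_ok=True)
            (root / rel).write_bytes(b"")
        print(collect_per_directory(root))  # {'pointcloud1.pcd': ['pointcloud1_ri1.png']}
```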

Context images are placed in the image_00/, image_01/, ..., image_N/ (N is any number) directories. Their file names must correspond to the point cloud files in the velodyne_points/data/ directory.

image_00/
  data/
    0000000000.png
    0000000001.png
    0000000002.png
    0000000003.png
image_01/
  data/
    0000000000.png
    0000000001.png
    0000000002.png
    0000000003.png
image_N/
  data/
    0000000000.png
    0000000001.png
    0000000002.png
    0000000003.png
velodyne_points/
  data/
    0000000000.bin
    0000000001.bin
    0000000002.bin
    0000000003.bin
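In this layout, the link between a point cloud and its context images is the shared file stem (0000000000.bin matches 0000000000.png in every image_*/data/ directory). The sketch below builds that mapping; the function name `kitti_context_images` is hypothetical and only the directory names shown in the layout above are assumed.

```python
# Illustrative sketch (assumption): maps each velodyne_points/data/*.bin file to
# the same-named images in image_*/data/, as in the layout above.
import tempfile
from pathlib import Path
from typing import Dict, List


def kitti_context_images(root: Path) -> Dict[str, List[str]]:
    clouds = sorted((root / "velodyne_points" / "data").glob("*.bin"))
    cameras = sorted(p for p in root.glob("image_*") if (p / "data").is_dir())
    mapping: Dict[str, List[str]] = {}
    for cloud in clouds:
        matches: List[str] = []
        for camera in cameras:
            # Match on the file stem, e.g. 0000000000.bin <-> 0000000000.png.
            for image in sorted((camera / "data").glob(f"{cloud.stem}.*")):
                matches.append(f"{camera.name}/data/{image.name}")
        mapping[cloud.name] = matches
    return mapping


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for rel in ["velodyne_points/data/0000000000.bin", "image_00/data/0000000000.png"]:
            (root / rel).parent.mkdir(parents=True, exist_ok=True)
            (root / rel).write_bytes(b"")
        print(kitti_context_images(root))  # {'0000000000.bin': ['image_00/data/0000000000.png']}
```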

root_directory/
  pointcloud/
    pointcloud1.pcd
    pointcloud2.pcd
  related_images/
    pointcloud1_pcd/
      context_image_for_pointcloud1.jpg
    pointcloud2_pcd/
      context_image_for_pointcloud2.jpg

For more general information about 3D data formats, see 3D data formats.

Contextual images

The maximum number of contextual images is twelve.

By default they will be positioned on the right side of the main image.

Note

By default, only three contextual images will be visible.

When you add contextual images to the set, a small toolbar will appear at the top of the screen, with the following elements:

| Element | Description |
|---|---|
| Fit views | Click to restore the layout to its original appearance. If you’ve expanded any images in the layout, they will be returned to their original size. This won’t affect the number of context images on the screen. |
| Add new image | Click to add a context image to the layout. |
| Reload layout | Click to reload the layout to the default view. Note that this action can change the number of context images, resetting them back to three. |

Each context image has the following elements:

| Element | Description |
|---|---|
| 1 | Full screen. Click to expand the contextual image into full screen mode. Click again to return the contextual image to windowed mode. |
| 2 | Move contextual image. Hold and drag the contextual image to another place on the screen. |
| 3 | Name. Unique contextual image name. |
| 4 | Select contextual image. Click to open a horizontal list view of all available contextual images. Click one to select it. |
| 5 | Close. Click to remove the image from the contextual images menu. |
| 6 | Extend. Hold and pull to extend the image. |

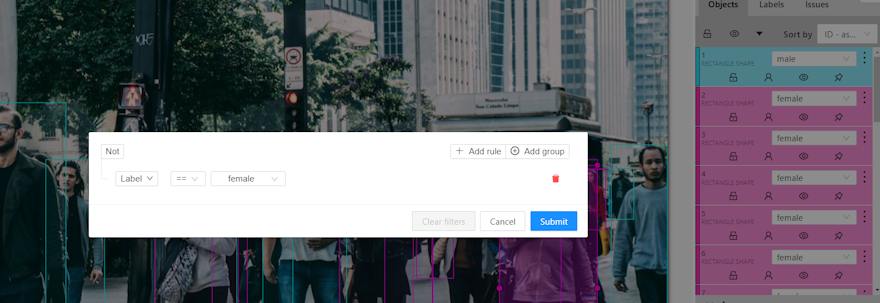

2.3.4 - Filter

There are several reasons to use this feature:

- When you use a filter, objects that don’t match the filter will be hidden.

- Fast navigation between frames that contain an object of interest. Use the Left Arrow/Right Arrow keys for this purpose, or customize the UI buttons by right-clicking them and selecting switching by filter. If there are no objects that correspond to the filter, you will go to the previous/next frame that contains any annotated objects.

To apply filters, click the filter button on the top panel.
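The navigation behavior described above (jump to the nearest frame with a matching object, otherwise to the nearest frame with any annotated object) can be sketched as follows. This is a hypothetical model, not CVAT's code: `annotations` maps frame numbers to lists of objects, `predicate` stands in for the filter, and applying the fallback only when no frame in that direction matches is an interpretation of the text.

```python
# Illustrative sketch (assumption, not CVAT's code) of filter-based frame
# navigation. `annotations` maps frame -> list of objects (dicts), and
# `predicate` stands in for the filter; both representations are hypothetical.
from typing import Callable, Dict, List, Optional


def next_frame(current: int,
               annotations: Dict[int, List[dict]],
               predicate: Callable[[dict], bool],
               direction: int = 1) -> Optional[int]:
    """Nearest frame in `direction` (+1 next, -1 previous) with a matching object.

    Falls back to the nearest frame with any annotated object when no frame in
    that direction matches the filter; returns None if there is none at all.
    """
    candidates = sorted(f for f in annotations if (f - current) * direction > 0)
    if direction < 0:
        candidates.reverse()  # nearest previous frame first
    for frame in candidates:
        if any(predicate(obj) for obj in annotations[frame]):
            return frame
    for frame in candidates:
        if annotations[frame]:
            return frame
    return None


if __name__ == "__main__":
    ann = {0: [{"label": "car"}], 2: [{"label": "person"}], 5: [{"label": "car"}]}
    print(next_frame(0, ann, lambda o: o["label"] == "car"))  # 5
```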

Create a filter

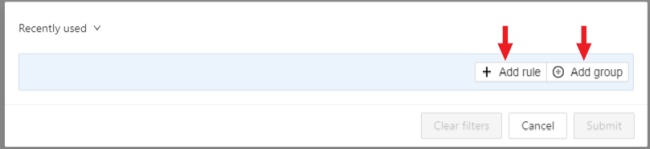

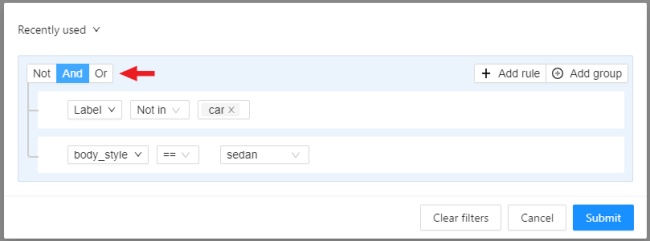

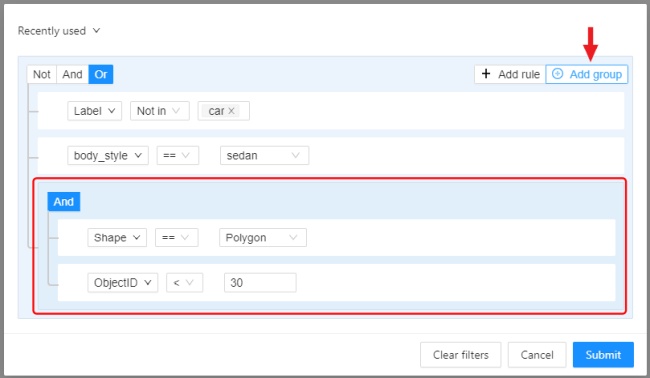

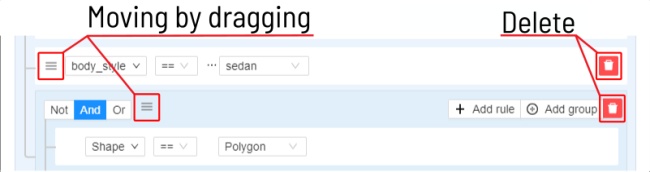

It will open a window for filter input. Here you will find two buttons: Add rule and Add group.

Rules

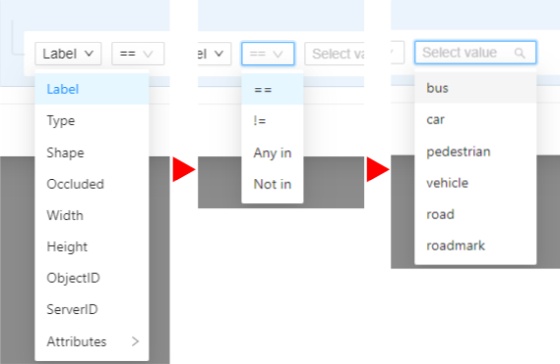

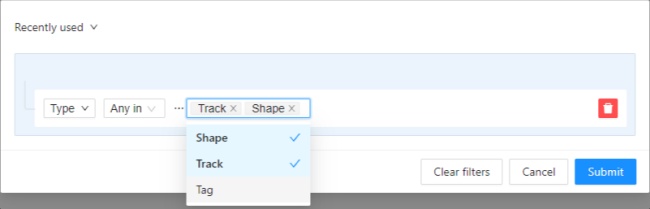

The Add rule button adds a rule that controls which objects are displayed. A rule may use the following properties:

Supported properties for annotation

| Properties | Supported values | Description |
|---|---|---|
| Label | all the label names that are in the task | label name |
| Type | shape, track or tag | type of object |
| Shape | all shape types | type of shape |
| Occluded | true or false | occluded |
| Width | number of px or field | shape width |
| Height | number of px or field | shape height |
| ServerID | number or field | ID of the object on the server (you can find it by forming a link to the object through the Action menu) |
| ObjectID | number or field | ID of the object in your client (indicated on the objects sidebar) |
| Score | number | consensus score |
| Votes | number | consensus votes |
| Attributes | some other fields, including attributes with a similar type or a specific attribute value | any fields specified by a label |

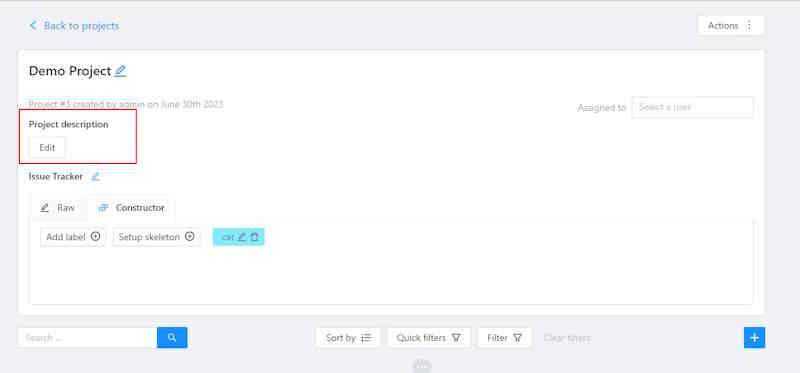

- Supported properties for projects list

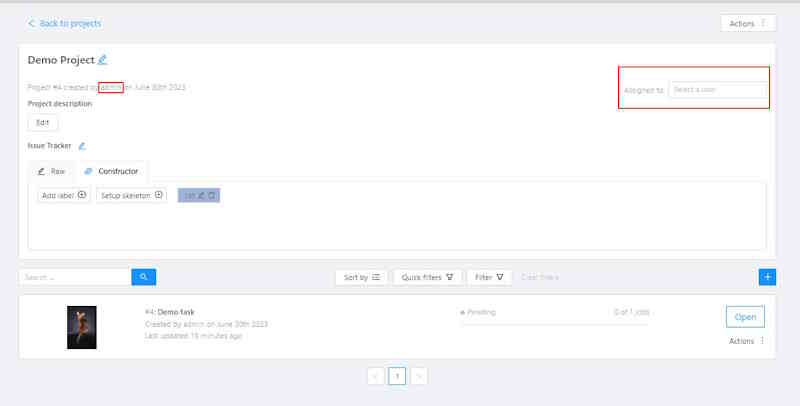

- Supported properties for tasks list

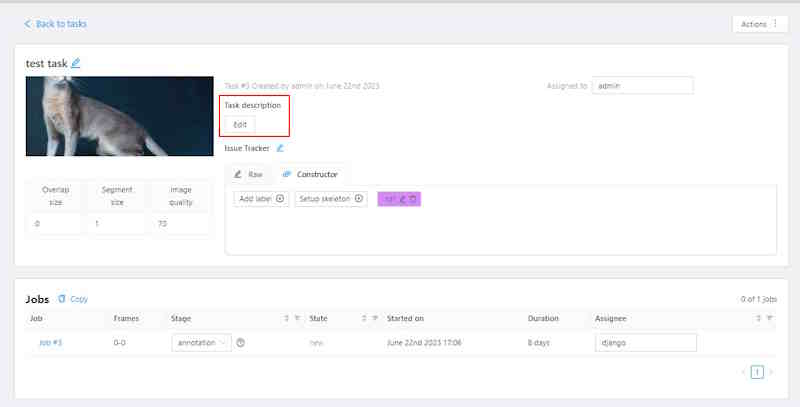

- Supported properties for jobs list

- Supported properties for cloud storages list

Supported operators for properties

- Equal (==), not equal (!=), greater than (>), greater than or equal (>=), less than (<), less than or equal (<=).

- Any in; Not in: these operators allow you to set multiple values in one rule.

- Is empty; Is not empty: these operators don’t require a value.

- Between; Not between: these operators allow you to choose a range between two values.

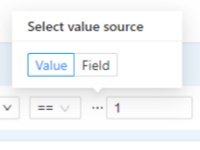

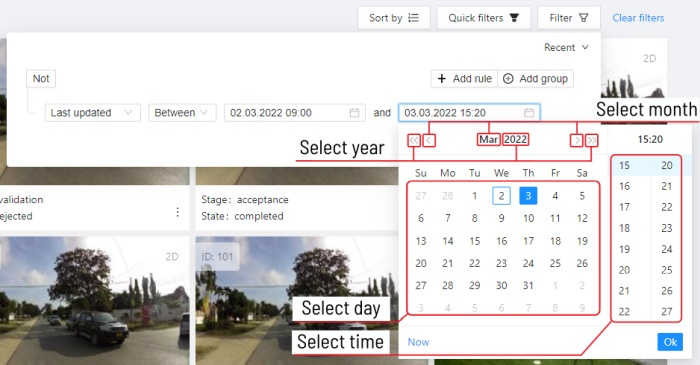

Like: this operator indicates that the property must contain the specified value.
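To make the operator semantics above concrete, here is a small evaluator for single rules and rule groups applied to an object represented as a dict. It is a hypothetical sketch, not CVAT's filter engine: the operator keys are lowercase versions of the names above, Between is assumed to be inclusive, Like is assumed to be a substring match, "empty" is assumed to mean None or an empty string, and groups are combined with AND or OR.

```python
# Illustrative sketch (assumption, not CVAT's filter engine). An object is a
# dict of property values; operator keys mirror the names listed above.
# Assumed semantics: Between is inclusive, Like is a substring match, and
# "empty" means None or "".
from typing import Any, Callable, Dict, Sequence, Tuple

EMPTY = (None, "")

OPERATORS: Dict[str, Callable[..., bool]] = {
    "==": lambda value, x: value == x,
    "!=": lambda value, x: value != x,
    ">": lambda value, x: value > x,
    ">=": lambda value, x: value >= x,
    "<": lambda value, x: value < x,
    "<=": lambda value, x: value <= x,
    "any in": lambda value, options: value in options,
    "not in": lambda value, options: value not in options,
    "is empty": lambda value: value in EMPTY,
    "is not empty": lambda value: value not in EMPTY,
    "between": lambda value, low, high: low <= value <= high,
    "not between": lambda value, low, high: not low <= value <= high,
    "like": lambda value, text: text in str(value),
}


def matches(obj: Dict[str, Any], prop: str, op: str, *args: Any) -> bool:
    """Evaluate one rule: property `prop` of `obj` checked with operator `op`."""
    return OPERATORS[op](obj.get(prop), *args)


def matches_group(obj: Dict[str, Any], rules: Sequence[Tuple[Any, ...]],
                  combinator: str = "and") -> bool:
    """Evaluate a group of (prop, op, *args) rules combined with AND or OR."""
    results = (matches(obj, *rule) for rule in rules)
    return all(results) if combinator == "and" else any(results)


if __name__ == "__main__":
    box = {"label": "car", "width": 120}
    print(matches(box, "width", "between", 100, 200))  # True
    print(matches_group(box, [("label", "==", "car"), ("width", "<", 50)]))  # False
```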